---
library_name: transformers
tags:
- text-summarization
- text-generation
- clinical-report-summarization
- document-summarization
license: mit
language:
- en
- fr
- pt
- es
metrics:
- bertscore
- rouge
base_model:
- Qwen/Qwen2.5-0.5B-Instruct
pipeline_tag: text-generation
---

# Model Details

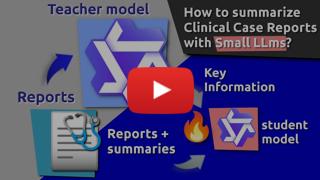

> **Update 13th July 2025**: Added a [video review on YouTube](https://youtu.be/uOAiUvLghuE)

This model is a fine-tuned version of `Qwen/Qwen2.5-0.5B-Instruct` on the [MultiClinSum](https://zenodo.org/records/15463353) training data
for the [BioASQ-2025](http://bioasq.org/) Workshop / [CLEF 2025](https://clef2025.clef-initiative.eu/).

This model serves as a baseline for the `distil` version:

https://huggingface.co/nicolay-r/qwen25-05b-multiclinsum-distil

### Video Overview

<div align="center">

[Watch the video overview on YouTube](https://youtu.be/uOAiUvLghuE)

</div>

### Model Description

- **Model type:** Decoder-based Model
- **Language(s) (NLP):** Supported by Qwen2.5 + fine-tuned on summaries written in `en`, `fr`, `pt`, `es`
- **License:** MIT
- **Finetuned from model:** https://huggingface.co/Qwen/Qwen2.5-0.5B-Instruct

### Model Sources

[Open in Colab](https://colab.research.google.com/drive/1TXGaz39o73nBucEQw12gbad7Tw11j2Ol?usp=sharing)

- **Repository:** https://github.com/nicolay-r/distil-tuning-llm
- **Paper:** **TBA**
- **Demo:** https://colab.research.google.com/drive/1TXGaz39o73nBucEQw12gbad7Tw11j2Ol?usp=sharing

## Usage

We use [bulk-chain](https://github.com/nicolay-r/bulk-chain) for inference with the Qwen2 provider based on the `transformers` **pipelines API**.

**Provider** `huggingface_qwen.py`: https://github.com/nicolay-r/nlp-thirdgate/blob/9e46629792e9a53871710884f7b9e2fe42666aa7/llm/transformers_qwen2.py

```python
from bulk_chain.api import iter_content
from bulk_chain.core.utils import dynamic_init

# Example values (not from the original card); adjust to your setup.
MAX_NEW_TOKENS = 512
DEVICE = "cuda"

content_it = iter_content(
    # Single-step schema: the model output is stored under the "summary" key.
    schema={"schema": [
        {"prompt": "Summarize: {input}", "out": "summary"}]
    },
    # Load the Qwen2 provider class from the local provider file.
    llm=dynamic_init(
        class_filepath="huggingface_qwen.py",
        class_name="Qwen2")(
            api_token="YOUR_HF_API_KEY_GOES_HERE",
            model_name="nicolay-r/qwen25-05b-multiclinsum-standard",
            temp=0.1,
            use_bf16=True,
            max_new_tokens=MAX_NEW_TOKENS,
            device=DEVICE
    ),
    infer_mode="batch",
    batch_size=4,
    return_mode="record",
    # INPUT TEXTS:
    input_dicts_it=[
        {"input": "A patient 62 years old with ..."}
    ],
)

for record in content_it:
    # Each record is the result dictionary that includes the summary.
    print(record["summary"])
```
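For readers who prefer to call `transformers` directly, the sketch below shows roughly what such a provider does. It rests on assumptions: that the checkpoint keeps the Qwen2.5 chat template, and that a plain `Summarize: ...` user message matches the prompt in the schema above. The helper names `build_messages` and `summarize` are hypothetical, and `max_new_tokens=512` is an arbitrary choice; only the temperature of `0.1` is taken from the example above.

```python
MODEL_NAME = "nicolay-r/qwen25-05b-multiclinsum-standard"


def build_messages(text):
    """Wrap the input text into the `Summarize: {input}` prompt (hypothetical helper)."""
    return [{"role": "user", "content": f"Summarize: {text}"}]


def summarize(text, max_new_tokens=512, device="cpu"):
    """Generate a summary with plain `transformers` (hypothetical helper)."""
    # Imported lazily so that building prompts does not need the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, torch_dtype=torch.bfloat16).to(device)

    # Assumption: the checkpoint keeps the Qwen2.5 chat template.
    prompt = tokenizer.apply_chat_template(
        build_messages(text), tokenize=False, add_generation_prompt=True)
    inputs = tokenizer(prompt, return_tensors="pt").to(device)

    output_ids = model.generate(
        **inputs, max_new_tokens=max_new_tokens, do_sample=True, temperature=0.1)

    # Keep only the newly generated tokens (drop the prompt part).
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `summarize("A patient 62 years old with ...")` downloads the checkpoint on first use; pass `device="cuda"` when a GPU is available.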

## Training Details

### Training Data

* **MultiClinSum**
    * We use the [following script](https://github.com/nicolay-r/distill-tuning-llm/blob/main/resources/download_dataset.sh) for downloading datasets.
    * **Web**: https://temu.bsc.es/multiclinsum
    * **Data**: https://zenodo.org/records/15463353
    * **BioASQ**: http://bioasq.org/

### Training Procedure

The training procedure involves:
1. Preparation of the `rationale` for summary distillation.
2. Launch of the **fine-tuning** process.

**Fine-tuning:** Follow this script to fine-tune on the [`MultiClinSum` dataset](https://zenodo.org/records/15463353) using a Google Colab A100 (40GB VRAM) + 80GB RAM:
* https://github.com/nicolay-r/distil-tuning-llm/blob/master/distil_ft_qwen25_05b_A100-40GB_80GB_std.sh

#### Preprocessing

Refer to the following script for the `fine-tuning` pre-processing:
* https://github.com/nicolay-r/distil-tuning-llm/blob/master/resources/make_dataset_mult.py

#### Training Hyperparameters

We refer to the original parameters here:
* https://github.com/QwenLM/Qwen2.5-VL/tree/main/qwen-vl-finetune

And use the following script:
* https://github.com/nicolay-r/distil-tuning-llm/blob/master/distil_ft_qwen25_05b_A100-40GB_80GB_std.sh

#### Speeds, Sizes, Times

Fine-tuning for `3` epochs takes about `1 hour` on a Google Colab A100.

## Evaluation

#### Testing Data

We use an evaluation split of 20 documents drawn from a small portion of the available training data across all the languages: `en`, `fr`, `pt`, `es`
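A held-out split of this kind can be carved out as in the sketch below. It is illustrative only: the function name `holdout_split`, the fixed seed, and the representation of documents as a plain list are assumptions, not the logic of the repository's scripts.

```python
import random


def holdout_split(documents, eval_size=20, seed=42):
    """Split documents into (train, evaluation) with `eval_size` held-out items."""
    if eval_size > len(documents):
        raise ValueError("eval_size exceeds the number of documents")
    indices = list(range(len(documents)))
    # A seeded shuffle keeps the split reproducible across runs.
    random.Random(seed).shuffle(indices)
    eval_idx = set(indices[:eval_size])
    train = [d for i, d in enumerate(documents) if i not in eval_idx]
    evaluation = [d for i, d in enumerate(documents) if i in eval_idx]
    return train, evaluation
```

Keeping the seed fixed means every fine-tuning run is scored on the same held-out documents.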

#### Metrics

In this evaluation we use only the `rouge` score.
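To make the metric concrete, here is a simplified sketch of ROUGE-1 F1 (unigram overlap) in pure Python. It is an illustration under assumptions, not the scorer used for the reported results: tokenization is plain lowercased whitespace splitting, with no stemming, and the function name `rouge1_f1` is hypothetical.

```python
from collections import Counter


def rouge1_f1(reference, candidate):
    """Unigram-overlap F1 between a reference and a candidate summary."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    if not ref_tokens or not cand_tokens:
        return 0.0
    # Clipped overlap: each word counts at most as often as it occurs in both texts.
    overlap = sum((Counter(ref_tokens) & Counter(cand_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

Precision penalizes summaries that add words absent from the reference, while recall penalizes summaries that leave reference words out; the F1 balances the two.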

### Results

We launch 3 independent fine-tuning runs for the `distil` and `standard` versions to show how results vary across runs.
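One common way to report variation across repeated runs is the mean together with the sample standard deviation of a metric. The sketch below assumes the per-run scores are available as a list of floats; the function name `summarize_runs` is hypothetical and no scores from this card are reproduced here.

```python
from statistics import mean, stdev


def summarize_runs(scores):
    """Return (mean, sample standard deviation) of one metric over several runs."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to estimate variation")
    return mean(scores), stdev(scores)
```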

> **Figure**: the obtained results for this model correspond to the `standard` version 🟠

#### Summary

#### Hardware

We run fine-tuning and inference on the Google Colab notebook service with the following resources:
* Fine-tuning: A100 (40GB)
* Inference: T4 (16GB)

Follow the Google Colab notebook in the repository:
* https://github.com/nicolay-r/distil-tuning-llm

#### Software

The official repository for this model card:
* https://github.com/nicolay-r/distil-tuning-llm

## Citation

**BibTeX:**

> **TO BE ADDED**

## Model Card Authors

Nicolay Rusnachenko