---

library_name: transformers

tags:

- text-summarization

- text-generation

- clinical-report-summarization

- document-summarization

license: mit

language:

- en

- fr

- pt

- es

metrics:

- bertscore

- rouge

base_model:

- Qwen/Qwen2.5-0.5B-Instruct

pipeline_tag: text-generation

---

# Model Details

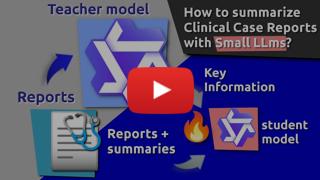

> **Update 13 July 2025**: Added a [video review on YouTube](https://youtu.be/uOAiUvLghuE)

This model is a fine-tuned version of `Qwen/Qwen2.5-0.5B-Instruct`, trained on the [MultiClinSum](https://zenodo.org/records/15463353) training data for the [BioASQ-2025](http://bioasq.org/) Workshop / [CLEF 2025](https://clef2025.clef-initiative.eu/).

This model serves as the baseline for the `distil` version:

https://huggingface.co/nicolay-r/qwen25-05b-multiclinsum-distil

### Video Overview

<div align="center">

[Watch the video overview on YouTube](https://youtu.be/uOAiUvLghuE)

</div>

### Model Description

- **Model type:** Decoder-based Model

- **Language(s) (NLP):** Languages supported by Qwen2.5; fine-tuned on summaries written in `en`, `fr`, `pt`, `es`

- **License:** MIT

- **Fine-tuned from model:** https://huggingface.co/Qwen/Qwen2.5-0.5B-Instruct

### Model Sources

[Open in Colab](https://colab.research.google.com/drive/1TXGaz39o73nBucEQw12gbad7Tw11j2Ol?usp=sharing)

- **Repository:** https://github.com/nicolay-r/distil-tuning-llm

- **Paper:** **TBA**

- **Demo:** https://colab.research.google.com/drive/1TXGaz39o73nBucEQw12gbad7Tw11j2Ol?usp=sharing

## Usage

We use [bulk-chain](https://github.com/nicolay-r/bulk-chain) for inference with the Qwen2 provider based on `transformers` **pipelines API**.

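**Provider** `huggingface_qwen.py`: the code below loads the `Qwen2` provider class from this local file via `class_filepath`. The provider source is available at: https://github.com/nicolay-r/nlp-thirdgate/blob/9e46629792e9a53871710884f7b9e2fe42666aa7/llm/transformers_qwen2.py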

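```python
from bulk_chain.api import iter_content
from bulk_chain.core.utils import dynamic_init

# Placeholder values: the original snippet read these from command-line `args`.
MAX_NEW_TOKENS = 512  # assumed upper bound on summary length; adjust as needed
DEVICE = "cuda"       # or "cpu"

content_it = iter_content(
    # A single prompt step whose output is stored under the "summary" key.
    schema={"schema": [
        {"prompt": "Summarize: {input}", "out": "summary"}]
    },
    # Load the `Qwen2` provider class from `huggingface_qwen.py`
    # and instantiate it with the fine-tuned model.
    llm=dynamic_init(
        class_filepath="huggingface_qwen.py",
        class_name="Qwen2")(
            api_token="YOUR_HF_API_KEY_GOES_HERE",
            model_name="nicolay-r/qwen25-05b-multiclinsum-standard",
            temp=0.1,
            use_bf16=True,
            max_new_tokens=MAX_NEW_TOKENS,
            device=DEVICE
        ),
    infer_mode="batch",
    batch_size=4,
    return_mode="record",
    # INPUT TEXTS: each dict fills the `{input}` placeholder of the prompt.
    input_dicts_it=[
        {"input": "A patient 62 years old with ..."}
    ],
)

for record in content_it:
    # Each record is a dictionary that includes the generated "summary".
    print(record["summary"])
```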
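If you prefer to call `transformers` directly instead of going through bulk-chain, the following is a minimal sketch, not the authors' provider. It assumes the model follows the chat template inherited from `Qwen2.5-Instruct` and reuses the `Summarize: {input}` prompt from the schema above. The helper names `build_messages` and `summarize`, as well as `max_new_tokens=512`, are hypothetical choices for illustration; `temperature=0.1` and bfloat16 mirror the bulk-chain settings.

```python
# Minimal sketch using the transformers text-generation pipeline directly.
# Assumption: the model accepts chat-formatted input (Qwen2.5-Instruct template).
PROMPT_TEMPLATE = "Summarize: {input}"  # same prompt as in the bulk-chain schema


def build_messages(text: str) -> list[dict[str, str]]:
    """Wrap one clinical report into a single-turn chat request."""
    return [{"role": "user", "content": PROMPT_TEMPLATE.format(input=text)}]


def summarize(texts, model_name="nicolay-r/qwen25-05b-multiclinsum-standard",
              max_new_tokens=512, device="cuda"):
    """Hypothetical helper: generate one summary per input text."""
    # Imported lazily so build_messages() works without torch/transformers installed.
    import torch
    from transformers import pipeline

    generator = pipeline(
        "text-generation",
        model=model_name,
        # bfloat16 mirrors use_bf16=True; GPUs without native bf16 support
        # (e.g. the T4) may need torch.float16 instead.
        torch_dtype=torch.bfloat16,
        device=device,
    )
    summaries = []
    for text in texts:
        out = generator(
            build_messages(text),
            max_new_tokens=max_new_tokens,
            do_sample=True,
            temperature=0.1,
        )
        # For chat input, "generated_text" holds the whole conversation;
        # the last message is the model's reply.
        summaries.append(out[0]["generated_text"][-1]["content"])
    return summaries
```

Usage: `summarize(["A patient 62 years old with ..."])` returns a list with one summary string per input.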

## Training Details

### Training Data

* **MultiClinSum**
  * We use the [following script](https://github.com/nicolay-r/distill-tuning-llm/blob/main/resources/download_dataset.sh) to download the datasets.
  * **Web**: https://temu.bsc.es/multiclinsum
  * **Data**: https://zenodo.org/records/15463353
  * **BioASQ**: http://bioasq.org/

### Training Procedure

The training procedure involves:

1. Preparing the `rationale` for summary distillation.

2. Launching the **fine-tuning** process.

**Fine-tuning:** To fine-tune on the [`MultiClinSum` dataset](https://zenodo.org/records/15463353) using Google Colab with an A100 (40GB VRAM) and 80GB RAM, follow this script:

* https://github.com/nicolay-r/distil-tuning-llm/blob/master/distil_ft_qwen25_05b_A100-40GB_80GB_std.sh

#### Preprocessing

Refer to the following script for the `fine-tuning` pre-processing:

* https://github.com/nicolay-r/distil-tuning-llm/blob/master/resources/make_dataset_mult.py

#### Training Hyperparameters

For the original hyperparameters, refer to:

* https://github.com/QwenLM/Qwen2.5-VL/tree/main/qwen-vl-finetune

We then use the following script:

* https://github.com/nicolay-r/distil-tuning-llm/blob/master/distil_ft_qwen25_05b_A100-40GB_80GB_std.sh

#### Speeds, Sizes, Times

Fine-tuning for `3` epochs takes about `1 hour` on a Google Colab A100.

## Evaluation

#### Testing Data

For evaluation, we use a split of 20 documents taken from a small portion of the available training data, across all languages: `en`, `fr`, `pt`, `es`.

#### Metrics

In this evaluation, we use only the `rouge` score.
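The card does not state which ROUGE variant or implementation is used. Purely as an illustration of what the metric measures, here is a simplified ROUGE-1 F1 sketch based on clipped unigram overlap, with lowercased whitespace tokenization and no stemming. The function name `rouge1_f1` is hypothetical; established packages such as `rouge-score` apply their own tokenization and optional stemming, so their numbers will differ from this sketch.

```python
from collections import Counter


def rouge1_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F1 between a reference and a generated summary."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    if not ref_tokens or not cand_tokens:
        return 0.0
    # Clipped overlap: each unigram counts at most min(ref count, cand count) times.
    overlap = sum((Counter(ref_tokens) & Counter(cand_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)  # share of generated words found in the reference
    recall = overlap / len(ref_tokens)      # share of reference words recovered
    return 2 * precision * recall / (precision + recall)
```

For example, comparing the reference `"the patient was discharged"` with the candidate `"the patient was discharged home"` gives precision 4/5, recall 1, and F1 = 8/9.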

### Results

We run 3 independent fine-tuning processes for both the `distil` and `standard` versions to show how the results vary across runs.

> **Figure**: the obtained results for this model correspond to the `standard` version 🟠
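The card does not say how the variation across the 3 runs is aggregated. One common option, sketched here as an assumption, is to report the mean and sample standard deviation of a metric per version. The helper `summarize_runs` is hypothetical, and any scores passed to it would be your own measured values.

```python
from statistics import mean, stdev


def summarize_runs(scores_by_version: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Return (mean, sample standard deviation) of a metric across runs, per version."""
    return {
        # stdev needs at least two runs; a single run has no measurable spread.
        version: (mean(scores), stdev(scores) if len(scores) > 1 else 0.0)
        for version, scores in scores_by_version.items()
    }
```

Sample standard deviation (`stdev`, with an n-1 denominator) is used rather than `pstdev` because the runs are treated as a small sample of possible fine-tuning outcomes.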

#### Summary

#### Hardware

We run fine-tuning and inference using the Google Colab notebook service and its resources:

* Fine-tuning: A100 (40GB)

* Inference: T4 (16GB)

See the Google Colab notebook in the repository:

* https://github.com/nicolay-r/distil-tuning-llm

#### Software

The official repository for this model card:

* https://github.com/nicolay-r/distil-tuning-llm

## Citation

**BibTeX:**

> **TO BE ADDED**

## Model Card Authors

Nicolay Rusnachenko
