Model Card for apkonsta/table-transformer-detection-ifrs

This repository contains a fine-tuned version of the Table Transformer detection model, adapted for detecting tables in IFRS (International Financial Reporting Standards) PDFs. Table Transformer is a DETR-based object detection architecture for locating tables in unstructured documents such as PDFs and images.

Model Details

Base Model: microsoft/table-transformer-detection

Library: transformers

Training Data: The model was trained on a dataset consisting of 2359 IFRS scans, with a focus on detecting tables without borders.

Classes: The model is trained to detect two classes: 0 - table (regular tables) and 1 - table_rotated (rotated tables).
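The class indices above can be turned into readable names with a small lookup. This is a minimal sketch that assumes the index-to-name mapping listed above; `ID2LABEL` and `label_name` are illustrative names, not part of the model's API. If the checkpoint's `model.config.id2label` is populated, it should be treated as the authoritative mapping.

```python
# Illustrative mapping copied from the class list above
# (an assumption; it is not read from the checkpoint itself).
ID2LABEL = {0: "table", 1: "table_rotated"}


def label_name(class_id: int) -> str:
    """Return the readable class name for a predicted class index."""
    return ID2LABEL.get(class_id, f"unknown({class_id})")


# Example: translate a few predicted class indices.
for class_id in (0, 1):
    print(class_id, label_name(class_id))
```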

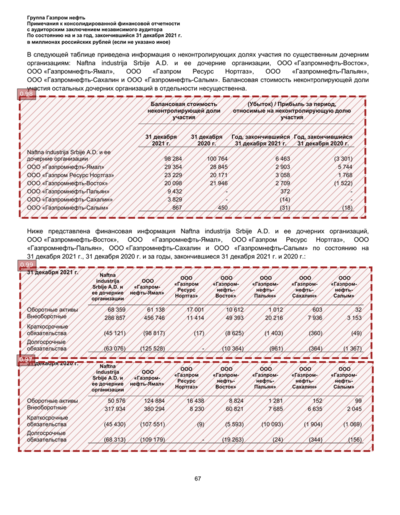

Example Image

Usage

from transformers import TableTransformerForObjectDetection, DetrImageProcessor

from PIL import Image

import torch

# Load the image processor and model

# DetrImageProcessor is used to preprocess the images before feeding them to the model

image_processor = DetrImageProcessor()

# Load the pre-trained TableTransformer model for object detection

# This model is specifically trained for detecting tables in IFRS documents

model = TableTransformerForObjectDetection.from_pretrained(

"apkonsta/table-transformer-detection-ifrs",

)

# Prepare the image

# Open the image file and convert it to RGB format

image = Image.open("path/to/your/ifrs_pdf_page.png").convert("RGB")

# Table detection threshold

# Set a threshold for detecting tables; only detections with a confidence score above this threshold will be considered

TD_th = 0.5

# Preprocess the image using the image processor

# The image is encoded into a format that the model can understand

encoding = image_processor(image, return_tensors="pt")

# Perform inference without computing gradients (saves memory and computations)

with torch.no_grad():
    outputs = model(**encoding)

# Get the probabilities for each detected object

# The softmax function is applied to the logits to get probabilities

probas = outputs.logits.softmax(-1)[0, :, :-1]

# Keep only the detections with a confidence score above the threshold

keep = probas.max(-1).values > TD_th

# Get the target sizes for post-processing

# The target sizes are the dimensions of the original image

target_sizes = torch.tensor(image.size[::-1]).unsqueeze(0)

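# Post-process the model outputs to get the final bounding boxes

# The bounding boxes are scaled back to the original image size as (x_min, y_min, x_max, y_max)

# post_process_object_detection replaces the deprecated post_process and applies the same score threshold as the `keep` mask, so the returned boxes are already filtered

postprocessed_outputs = image_processor.post_process_object_detection(outputs, threshold=TD_th, target_sizes=target_sizes)

bboxes_scaled = postprocessed_outputs[0]["boxes"]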
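Once `bboxes_scaled` is available, a common next step is to crop each detected table out of the page. The sketch below is one possible way to turn the boxes into crop rectangles. It assumes the boxes are in absolute pixel coordinates ordered as (x_min, y_min, x_max, y_max); `boxes_to_crop_regions` and its `padding` parameter are hypothetical helpers introduced only for illustration.

```python
import math
from typing import Iterable, List, Sequence, Tuple


def boxes_to_crop_regions(
    boxes: Iterable[Sequence[float]],
    image_size: Tuple[int, int],
    padding: int = 10,
) -> List[Tuple[int, int, int, int]]:
    """Convert float boxes (x_min, y_min, x_max, y_max) into integer crop
    rectangles, padded by `padding` pixels and clamped to the image bounds.

    `image_size` is (width, height), the order used by PIL's `Image.size`.
    """
    width, height = image_size
    regions = []
    for x_min, y_min, x_max, y_max in boxes:
        left = max(0, math.floor(x_min) - padding)
        top = max(0, math.floor(y_min) - padding)
        right = min(width, math.ceil(x_max) + padding)
        bottom = min(height, math.ceil(y_max) + padding)
        # Skip degenerate boxes that collapse after clamping.
        if right > left and bottom > top:
            regions.append((left, top, right, bottom))
    return regions


# Example with a made-up box on a 150x210 page.
print(boxes_to_crop_regions([[5.2, 20.7, 100.9, 200.1]], (150, 210)))
```

With the variables from the usage code, the helper could be called as `boxes_to_crop_regions(bboxes_scaled.tolist(), image.size)`, and each returned tuple passed to `image.crop(...)`. The padding is a design choice intended to avoid clipping cell content that sits right at the edge of a borderless table; the value 10 is arbitrary.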
