---
license: etalab-2.0
pipeline_tag: image-segmentation
tags:
- semantic segmentation
- pytorch
- landcover
model-index:
- name: FLAIR-HUB_LC-A_convnextv2base-unet
  results:
  - task:
      type: semantic-segmentation
    dataset:
      name: IGNF/FLAIR-HUB/
      type: earth-observation-dataset
    metrics:
    - type: mIoU
      value: 64.162
      name: mIoU
    - type: OA
      value: 77.166
      name: Overall Accuracy
    - type: IoU
      value: 84.153
      name: IoU building
    - type: IoU
      value: 76.218
      name: IoU greenhouse
    - type: IoU
      value: 61.59
      name: IoU swimming pool
    - type: IoU
      value: 75.239
      name: IoU impervious surface
    - type: IoU
      value: 56.174
      name: IoU pervious surface
    - type: IoU
      value: 63.016
      name: IoU bare soil
    - type: IoU
      value: 88.96
      name: IoU water
    - type: IoU
      value: 72.539
      name: IoU snow
    - type: IoU
      value: 54.219
      name: IoU herbaceous vegetation
    - type: IoU
      value: 57.088
      name: IoU agricultural land
    - type: IoU
      value: 36.271
      name: IoU plowed land
    - type: IoU
      value: 77.468
      name: IoU vineyard
    - type: IoU
      value: 71.327
      name: IoU deciduous
    - type: IoU
      value: 60.427
      name: IoU coniferous
    - type: IoU
      value: 29.305
      name: IoU brushwood
library_name: pytorch
---

🌐 FLAIR-HUB Model Collection

- Trained on: FLAIR-HUB dataset

- Available modalities: Aerial images, SPOT images, Topographic info, Sentinel-2 yearly time-series, Sentinel-1 yearly time-series, Historical aerial images

- Encoders: ConvNeXt V2, Swin (Tiny, Small, Base, Large)

- Decoders: UNet, UPerNet

- Tasks: Land-cover mapping (LC), Crop-type mapping (LPIS)

- Class nomenclature: 15 classes for LC, 23 classes for LPIS

🔍 Model: FLAIR-HUB_LC-A_convnextv2base-unet

- Encoder: convnextv2_base

- Decoder: unet

- Metrics:

- Params.: 92.8M

General Information

- Contact: [email protected]

- Code repository: https://github.com/IGNF/FLAIR-HUB

- Paper: https://arxiv.org/abs/2506.07080

- Project page: https://ignf.github.io/FLAIR/FLAIR-HUB/flairhub

- Developed by: IGN

- Compute infrastructure:
  - software: Python, PyTorch Lightning
  - hardware: HPC/AI resources provided by GENCI-IDRIS

- License: Etalab 2.0

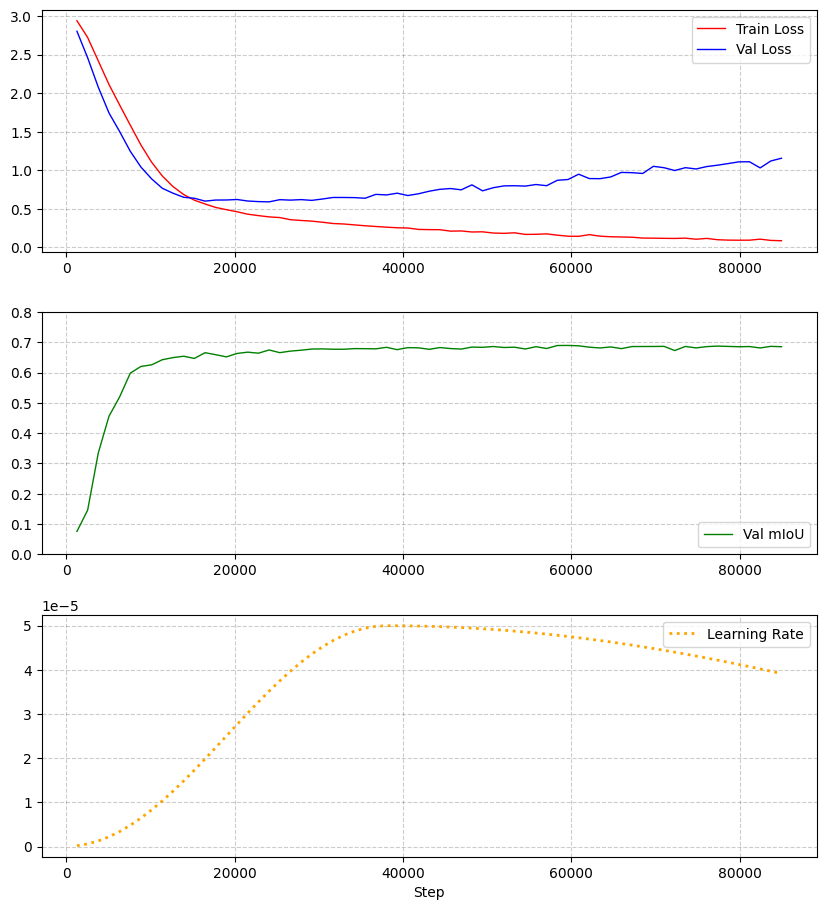

Training Config Hyperparameters

- Model architecture: convnextv2_base-unet

- Optimizer: AdamW (betas=[0.9, 0.999], weight_decay=0.01)

- Learning rate: 5e-5

- Scheduler: one_cycle_lr (warmup_fraction=0.2)

- Epochs: 150

- Batch size: 5

- Seed: 2025

- Early stopping: patience 20, monitor val_miou (mode=max)

- Class weights:
  - default: 1.0
  - masked classes: [clear cut, ligneous, mixed, other] → weight = 0

- Input channels:
  - AERIAL_RGBI: [4, 1, 2]

- Input normalization (custom):
  - AERIAL_RGBI:
    - mean: [106.59, 105.66, 111.35]
    - std: [39.78, 52.23, 45.62]
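The channel and normalization settings above can be illustrated with a minimal pure-Python sketch. It assumes (this card does not say so) that `[4, 1, 2]` are 1-based band indices into the R, G, B, I aerial patch, so the model would see a near-infrared, red, green composite, and that each `mean`/`std` entry applies to the selected band at the same position through the usual `(x - mean) / std` standardization. The helper name `select_and_normalize` is hypothetical, and the FLAIR-HUB code may implement this differently (for example directly on tensors).

```python
from typing import List, Sequence

# Values copied from the config above.
AERIAL_RGBI_CHANNELS = [4, 1, 2]            # assumed 1-based indices into (R, G, B, I)
AERIAL_RGBI_MEAN = [106.59, 105.66, 111.35]
AERIAL_RGBI_STD = [39.78, 52.23, 45.62]


def select_and_normalize(
    patch: Sequence[Sequence[float]],
    channels: Sequence[int] = AERIAL_RGBI_CHANNELS,
    mean: Sequence[float] = AERIAL_RGBI_MEAN,
    std: Sequence[float] = AERIAL_RGBI_STD,
) -> List[List[float]]:
    """Hypothetical helper: pick bands from a channel-first patch and standardize them.

    `patch` holds one flat list of pixel values per band, in R, G, B, I order.
    """
    if not (len(channels) == len(mean) == len(std)):
        raise ValueError("channels, mean and std must have the same length")
    out = []
    for band_index, m, s in zip(channels, mean, std):
        band = patch[band_index - 1]  # convert the assumed 1-based index to 0-based
        out.append([(value - m) / s for value in band])
    return out
```

Under these assumptions, the first output band is the standardized near-infrared band and the other two are red and green.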

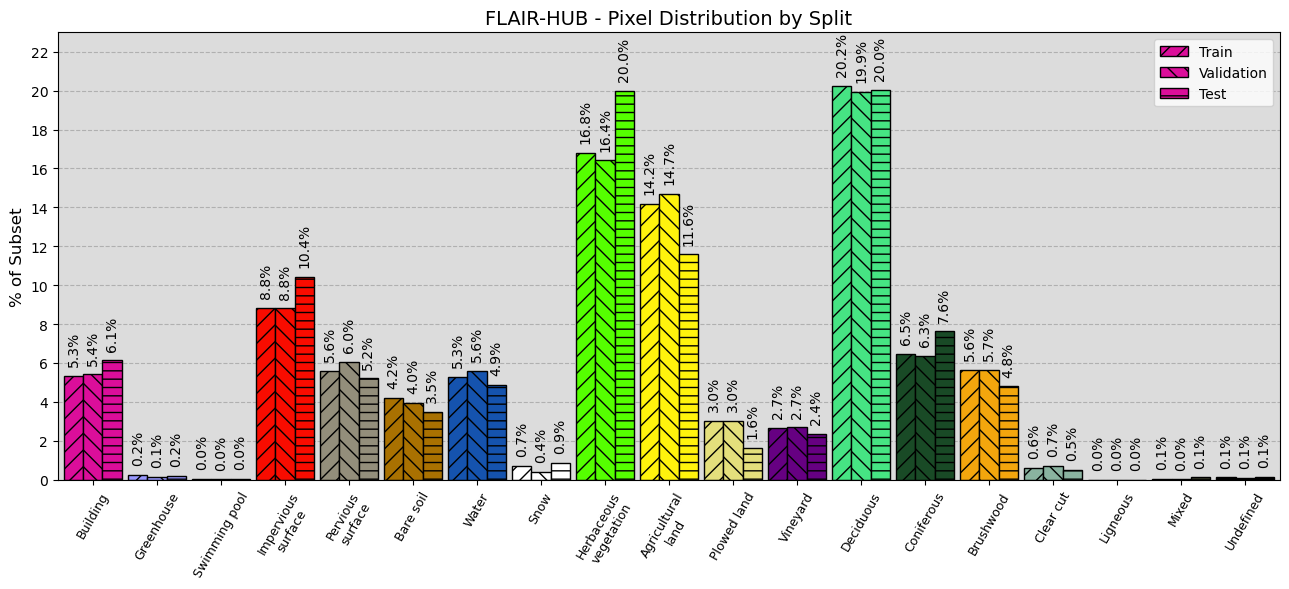
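The class-weight setting (1.0 by default, 0 for clear cut, ligneous, mixed and other) can be read as a weighted pixel-wise cross-entropy in which pixels labelled with a masked class contribute nothing to the loss. The card does not name the loss function, so cross-entropy is an assumption here. The pure-Python sketch below uses the same weighted-mean reduction that PyTorch documents for `torch.nn.CrossEntropyLoss(weight=..., reduction="mean")`: the sum of weighted per-pixel losses divided by the sum of the weights of the target classes. Helper names and the short class list in the example are hypothetical.

```python
import math
from typing import List, Sequence

MASKED_CLASSES = {"clear cut", "ligneous", "mixed", "other"}  # from the config above


def class_weights(class_names: Sequence[str]) -> List[float]:
    """Weight 0 for masked classes, 1.0 (the default) for all others."""
    return [0.0 if name in MASKED_CLASSES else 1.0 for name in class_names]


def weighted_cross_entropy(
    logits: Sequence[Sequence[float]],  # one row of class scores per pixel
    targets: Sequence[int],             # one reference class index per pixel
    weights: Sequence[float],
) -> float:
    total, norm = 0.0, 0.0
    for row, target in zip(logits, targets):
        # Numerically stable log-sum-exp for the softmax denominator.
        peak = max(row)
        log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in row))
        nll = log_sum_exp - row[target]
        total += weights[target] * nll
        norm += weights[target]
    # Design choice for this sketch: return 0.0 when every pixel is masked.
    return total / norm if norm > 0 else 0.0
```

Adding or removing pixels of a masked class leaves the loss unchanged, which is the practical effect of a zero class weight.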

Training Data

- Train patches: 152225

- Validation patches: 38175

- Test patches: 50700
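The optimizer and scheduler settings can be related to these patch counts. The sketch below assumes one optimizer step per batch of 5 on a single device with no gradient accumulation (the card does not state the number of GPUs), and models `one_cycle_lr` with `warmup_fraction=0.2` as a cosine one-cycle schedule peaking at 5e-5, using the default `div_factor=25` and `final_div_factor=1e4` of PyTorch's `torch.optim.lr_scheduler.OneCycleLR`, with `warmup_fraction` taken to correspond to its `pct_start`. In PyTorch this would roughly be `torch.optim.AdamW(params, lr=5e-5, betas=(0.9, 0.999), weight_decay=0.01)` combined with `OneCycleLR(optimizer, max_lr=5e-5, total_steps=..., pct_start=0.2)`; the pure-Python function here, whose name only echoes the config value, reproduces the shape of such a schedule.

```python
import math


def one_cycle_lr(step: int, total_steps: int, max_lr: float = 5e-5,
                 warmup_fraction: float = 0.2, div_factor: float = 25.0,
                 final_div_factor: float = 1e4) -> float:
    """Learning rate at `step` for a two-phase cosine one-cycle schedule."""
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor
    warmup_end = warmup_fraction * total_steps - 1  # last step of the warm-up phase
    last_step = total_steps - 1
    if warmup_end <= 0 or last_step <= warmup_end:
        raise ValueError("total_steps is too small for this warmup_fraction")

    def cos_anneal(start: float, end: float, pct: float) -> float:
        # Cosine interpolation from `start` (pct=0) to `end` (pct=1).
        return end + (start - end) / 2.0 * (math.cos(math.pi * pct) + 1.0)

    if step <= warmup_end:
        return cos_anneal(initial_lr, max_lr, step / warmup_end)
    return cos_anneal(max_lr, min_lr, (step - warmup_end) / (last_step - warmup_end))


# Hypothetical step counts from this card: 152225 training patches, batch size 5,
# 150 epochs, one optimizer step per batch on a single device.
STEPS_PER_EPOCH = math.ceil(152225 / 5)  # 30445
TOTAL_STEPS = STEPS_PER_EPOCH * 150      # 4566750
WARMUP_STEPS = round(0.2 * TOTAL_STEPS)  # 913350
```

Because early stopping (patience 20 on val_miou) can end training before epoch 150, the low-learning-rate tail of such a schedule may never be reached.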

Training Logging

Metrics

| Metric | Value |
|---|---|
| mIoU | 64.13% |
| Overall Accuracy | 77.45% |
| F-score | 76.88% |
| Precision | 77.36% |
| Recall | 76.89% |
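These summary values are standard confusion-matrix statistics. A minimal pure-Python sketch is shown below, assuming a confusion matrix `cm[i][j]` that counts pixels of reference class `i` predicted as class `j`, with masked classes already excluded, and macro-averaging over classes. The card does not say whether its F-score averages per-class F-scores or is derived from macro precision and recall; this sketch uses the former, and the function name is hypothetical.

```python
from typing import Dict, List, Sequence


def segmentation_metrics(cm: Sequence[Sequence[int]]) -> Dict[str, object]:
    """Overall accuracy plus per-class and macro-averaged IoU, precision, recall and F-score."""
    n = len(cm)
    total = sum(sum(row) for row in cm)

    def safe_div(a: float, b: float) -> float:
        return a / b if b else 0.0  # an empty class scores 0 in this sketch

    per_class: List[Dict[str, float]] = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, reference is another class
        fn = sum(cm[k]) - tp                       # reference k, predicted as another class
        precision = safe_div(tp, tp + fp)
        recall = safe_div(tp, tp + fn)
        per_class.append({
            "iou": safe_div(tp, tp + fp + fn),
            "precision": precision,
            "recall": recall,
            "f1": safe_div(2 * precision * recall, precision + recall),
        })

    def macro(key: str) -> float:
        return sum(c[key] for c in per_class) / n

    return {
        "overall_accuracy": safe_div(sum(cm[k][k] for k in range(n)), total),
        "miou": macro("iou"),
        "precision": macro("precision"),
        "recall": macro("recall"),
        "f1": macro("f1"),
        "per_class": per_class,
    }
```

Overall accuracy weights every pixel equally, while mIoU weights every class equally, so the two can diverge when rare classes such as brushwood or plowed land are harder to segment.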

| Class | IoU (%) | F-score (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|
| building | 84.15 | 91.39 | 91.15 | 91.64 |
| greenhouse | 76.22 | 86.50 | 84.11 | 89.04 |
| swimming pool | 60.03 | 75.02 | 76.08 | 73.99 |
| impervious surface | 75.24 | 85.87 | 86.75 | 85.01 |
| pervious surface | 56.17 | 71.94 | 69.87 | 74.14 |
| bare soil | 63.02 | 77.31 | 74.19 | 80.71 |
| water | 88.96 | 94.16 | 94.98 | 93.35 |
| snow | 72.54 | 84.08 | 97.77 | 73.76 |
| herbaceous vegetation | 54.22 | 70.31 | 71.67 | 69.01 |
| agricultural land | 57.09 | 72.68 | 69.75 | 75.87 |
| plowed land | 36.27 | 53.23 | 52.71 | 53.77 |
| vineyard | 77.47 | 87.30 | 85.34 | 89.36 |
| deciduous | 71.33 | 83.26 | 81.90 | 84.67 |
| coniferous | 60.43 | 75.33 | 80.13 | 71.08 |
| brushwood | 29.30 | 45.33 | 47.34 | 43.48 |
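The IoU and F-score columns are not independent. With IoU = TP / (TP + FP + FN) and F = 2TP / (2TP + FP + FN) for the same class, a little algebra gives F = 2 * IoU / (1 + IoU), and conversely IoU = F / (2 - F). The small helpers below (hypothetical names) can be used to sanity-check a row: for example, the building IoU of 84.15% implies an F-score of about 91.39%, matching the table.

```python
def f1_from_iou(iou: float) -> float:
    """F-score implied by an IoU, both given as fractions in [0, 1]."""
    return 2.0 * iou / (1.0 + iou)


def iou_from_f1(f1: float) -> float:
    """Inverse mapping: IoU implied by an F-score."""
    return f1 / (2.0 - f1)


# Rows taken from the per-class table above: (class, IoU %, F-score %).
for name, iou_pct, f1_pct in [("building", 84.15, 91.39), ("plowed land", 36.27, 53.23)]:
    implied = 100 * f1_from_iou(iou_pct / 100)
    print(f"{name}: implied F-score {implied:.2f}% vs reported {f1_pct:.2f}%")
```

Last-digit differences can appear for other rows because the displayed IoU is already rounded to two decimals; brushwood's 29.30% maps to 45.32% against the 45.33% reported.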

Inference

Aerial ROI

Inference ROI

Cite

BibTeX:

    @article{ign2025flairhub,
      doi = {10.48550/arXiv.2506.07080},
      url = {https://arxiv.org/abs/2506.07080},
      author = {Garioud, Anatol and Giordano, Sébastien and David, Nicolas and Gonthier, Nicolas},
      title = {FLAIR-HUB: Large-scale Multimodal Dataset for Land Cover and Crop Mapping},
      publisher = {arXiv},
      year = {2025}
    }

APA:

Garioud, A., Giordano, S., David, N., & Gonthier, N. (2025). FLAIR-HUB: Large-scale multimodal dataset for land cover and crop mapping. arXiv. https://doi.org/10.48550/arXiv.2506.07080