This model is a fine-tuned version of microsoft/conditional-detr-resnet-50.

Details of the model are available in the GitHub repo fashion-visual-search.

A companion fashion image feature extractor model is available at yainage90/fashion-image-feature-extractor.

This model was trained on a combination of two datasets: ModaNet and Fashionpedia.

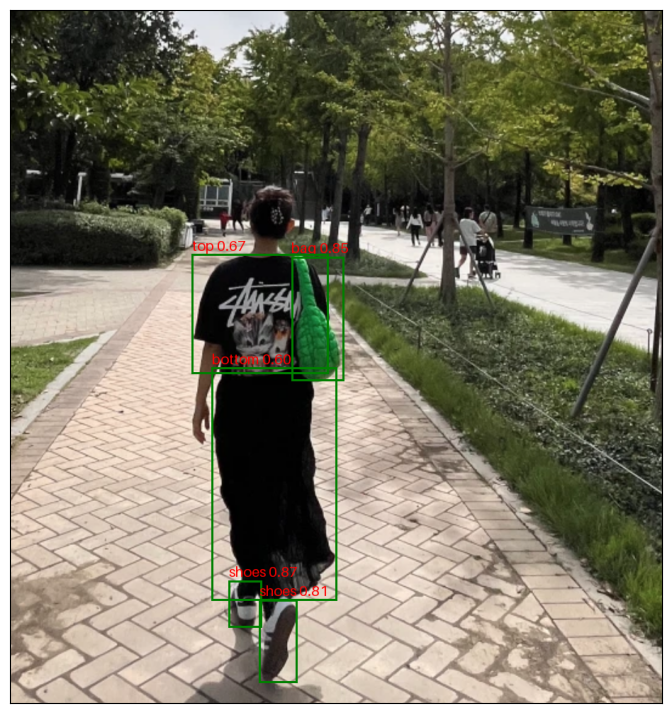

The labels are ['bag', 'bottom', 'dress', 'hat', 'shoes', 'outer', 'top']

The best score, mAP 0.7542, was reached at epoch 96 of 100. Because the best checkpoint came this late in training, there is probably still a little room for performance improvement.

```python
from PIL import Image
import torch
from transformers import AutoImageProcessor, AutoModelForObjectDetection

# Pick the best available device: CUDA, then Apple MPS, then CPU.
device = 'cpu'
if torch.cuda.is_available():
    device = torch.device('cuda')
elif torch.backends.mps.is_available():
    device = torch.device('mps')

ckpt = 'yainage90/fashion-object-detection'
image_processor = AutoImageProcessor.from_pretrained(ckpt)
model = AutoModelForObjectDetection.from_pretrained(ckpt).to(device)

image = Image.open('<path/to/image>').convert('RGB')

with torch.no_grad():
    inputs = image_processor(images=[image], return_tensors="pt")
    outputs = model(**inputs.to(device))
    # PIL reports size as (width, height); post-processing expects (height, width).
    target_sizes = torch.tensor([[image.size[1], image.size[0]]])
    results = image_processor.post_process_object_detection(outputs, threshold=0.4, target_sizes=target_sizes)[0]

    # Collect detections as plain Python values: (score, label id, [x_min, y_min, x_max, y_max]).
    items = []
    for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
        score = score.item()
        label = label.item()
        box = [i.item() for i in box]
        print(f"{model.config.id2label[label]}: {round(score, 3)} at {box}")
        items.append((score, label, box))
```
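The `items` list collected above holds `(score, label, box)` tuples with float pixel coordinates, and the same label can appear more than once. The following is a minimal sketch, not part of the original model card, of one way to prepare those detections for cropping. It keeps the highest-scoring detection per label and clamps each box to integer image bounds. The helper names `best_per_label` and `to_crop_box`, the sample detections, and the `id2label` mapping shown here are hypothetical; in real use, pass `model.config.id2label` and the actual image size.

```python
import math


def best_per_label(items, id2label):
    """Keep only the highest-scoring (score, box) for each label name."""
    best = {}
    for score, label, box in items:
        name = id2label[label]
        if name not in best or score > best[name][0]:
            best[name] = (score, box)
    return best


def to_crop_box(box, width, height):
    """Round an (x_min, y_min, x_max, y_max) float box outward and clamp it to the image."""
    x_min, y_min, x_max, y_max = box
    left = min(max(math.floor(x_min), 0), width)
    top = min(max(math.floor(y_min), 0), height)
    right = min(max(math.ceil(x_max), 0), width)
    bottom = min(max(math.ceil(y_max), 0), height)
    return (left, top, right, bottom)


# Hypothetical detections and label ids, for illustration only.
id2label = {4: "shoes", 6: "top"}
items = [
    (0.91, 6, [-3.2, 10.7, 120.4, 200.1]),
    (0.55, 6, [5.0, 5.0, 50.0, 50.0]),
    (0.80, 4, [30.5, 380.2, 90.9, 460.0]),
]

best = best_per_label(items, id2label)
# Assumed image size: 100 px wide, 450 px tall.
crops = {name: to_crop_box(box, 100, 450) for name, (score, box) in best.items()}
print(crops)
```

Each resulting tuple is in the `(left, upper, right, lower)` order that PIL's `image.crop(...)` accepts, so the cropped regions could then be passed on to a downstream model such as the feature extractor mentioned above.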
