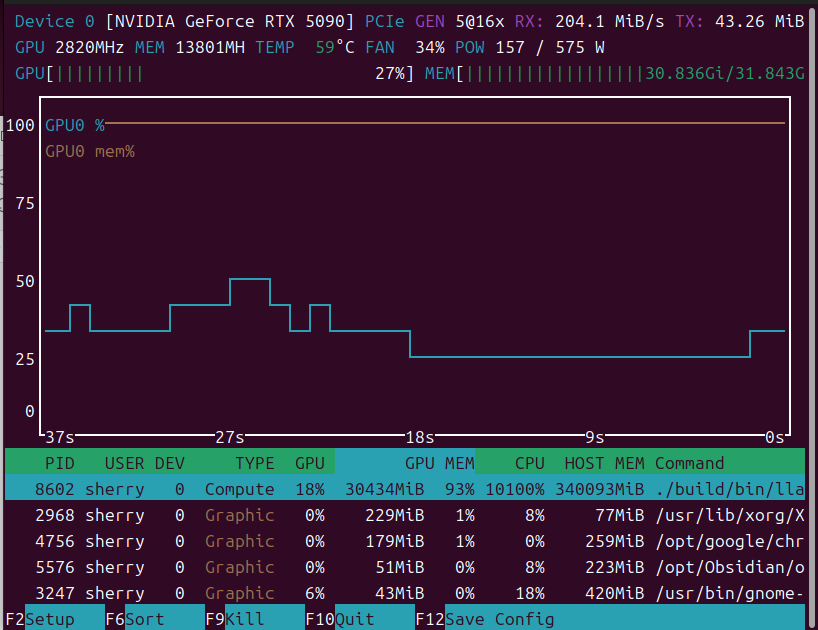

qwen3 coder test

W790E Sage + QYFS + 512G + RTX5090

IQ5_K:

llm_load_tensors: offloading 62 repeating layers to GPU

llm_load_tensors: offloading non-repeating layers to GPU

llm_load_tensors: offloaded 63/63 layers to GPU

llm_load_tensors: CPU buffer size = 41600.58 MiB

llm_load_tensors: CPU buffer size = 43386.38 MiB

llm_load_tensors: CPU buffer size = 43386.38 MiB

llm_load_tensors: CPU buffer size = 43386.38 MiB

llm_load_tensors: CPU buffer size = 43386.38 MiB

llm_load_tensors: CPU buffer size = 43386.38 MiB

llm_load_tensors: CPU buffer size = 43386.38 MiB

llm_load_tensors: CPU buffer size = 34927.03 MiB

llm_load_tensors: CPU buffer size = 737.24 MiB

llm_load_tensors: CUDA0 buffer size = 9156.73 MiB

....................................................................................................

llama_new_context_with_model: n_ctx = 98304

llama_new_context_with_model: n_batch = 4096

llama_new_context_with_model: n_ubatch = 4096

llama_new_context_with_model: flash_attn = 1

llama_new_context_with_model: mla_attn = 0

llama_new_context_with_model: attn_max_b = 512

llama_new_context_with_model: fused_moe = 1

llama_new_context_with_model: ser = -1, 0

llama_new_context_with_model: freq_base = 10000000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CUDA0 KV buffer size = 12648.03 MiB

llama_new_context_with_model: KV self size = 12648.00 MiB, K (q8_0): 6324.00 MiB, V (q8_0): 6324.00 MiB

llama_new_context_with_model: CUDA_Host output buffer size = 0.58 MiB

llama_new_context_with_model: CUDA0 compute buffer size = 6837.52 MiB

llama_new_context_with_model: CUDA_Host compute buffer size = 1632.05 MiB

llama_new_context_with_model: graph nodes = 2424

llama_new_context_with_model: graph splits = 126

main: n_kv_max = 98304, n_batch = 4096, n_ubatch = 4096, flash_attn = 1, n_gpu_layers = 99, n_threads = 101, n_threads_batch = 101

| PP | TG | N_KV | T_PP s | S_PP t/s | T_TG s | S_TG t/s |

|---|---|---|---|---|---|---|

| 4096 | 1024 | 0 | 36.147 | 113.32 | 91.782 | 11.16 |

| 4096 | 1024 | 4096 | 36.396 | 112.54 | 96.775 | 10.58 |

| 4096 | 1024 | 8192 | 38.433 | 106.58 | 111.770 | 9.16 |

| 4096 | 1024 | 12288 | 38.772 | 105.64 | 119.940 | 8.54 |

| 4096 | 1024 | 16384 | 39.167 | 104.58 | 123.763 | 8.27 |

We have a similar setup. I'm using an MS73-HB1 with 2xQYFS and a 5090. Here are my results:

$ numactl --interleave=all build/bin/llama-sweep-bench \

-m /mnt/data2/models/IK_GGUF/ubergarm_Qwen3-Coder-480B-A35B-Instruct-GGUF/IQ5_K/Qwen3-480B-A35B-Instruct-IQ5_K-00001-of-00008.gguf \

-ngl 99 \

-ot "blk.*\.ffn.*=CPU" \

-t 56 \

--threads-batch 112 \

-ub 4096 \

-b 4096 \

--numa distribute \

-fa \

-fmoe \

-ctk q8_0 \

-ctv q8_0 \

-c 98304 \

-op 27,0,29,0

| PP | TG | N_KV | T_PP s | S_PP t/s | T_TG s | S_TG t/s |

|---|---|---|---|---|---|---|

| 4096 | 1024 | 0 | 16.767 | 244.29 | 98.516 | 10.39 |

| 4096 | 1024 | 4096 | 16.775 | 244.17 | 102.692 | 9.97 |

| 4096 | 1024 | 8192 | 17.442 | 234.84 | 105.049 | 9.75 |

| 4096 | 1024 | 12288 | 17.684 | 231.63 | 108.202 | 9.46 |

| 4096 | 1024 | 16384 | 18.003 | 227.52 | 109.467 | 9.35 |

You may want to try adding -op 27,0,29,0 to see if it helps PP. I believe my TG is lower due to NUMA overhead.

with -op 27,0,29,0

better prompt processing

Thanks for your help.

XXXXXXXXXXXXXXXXXXXXX Setting offload policy for op MUL_MAT_ID to OFF

XXXXXXXXXXXXXXXXXXXXX Setting offload policy for op MOE_FUSED_UP_GATE to OFF

main: n_kv_max = 98304, n_batch = 4096, n_ubatch = 4096, flash_attn = 1, n_gpu_layers = 99, n_threads = 101, n_threads_batch = 101

| PP | TG | N_KV | T_PP s | S_PP t/s | T_TG s | S_TG t/s |

|---|---|---|---|---|---|---|

| 4096 | 1024 | 0 | 27.414 | 149.41 | 91.496 | 11.19 |

| 4096 | 1024 | 4096 | 26.671 | 153.57 | 95.829 | 10.69 |

| 4096 | 1024 | 8192 | 26.742 | 153.17 | 106.375 | 9.63 |

| 4096 | 1024 | 12288 | 27.338 | 149.83 | 116.544 | 8.79 |

| 4096 | 1024 | 16384 | 30.521 | 134.20 | 123.362 | 8.30 |

my full options:

-fa

-ctk q8_0 -ctv q8_0

-c 98304

-b 4096 -ub 4096

-fmoe

-amb 512

--override-tensor exps=CPU

-ngl 99

--threads 101

--flash-attn

-op 27,0,29,0 \

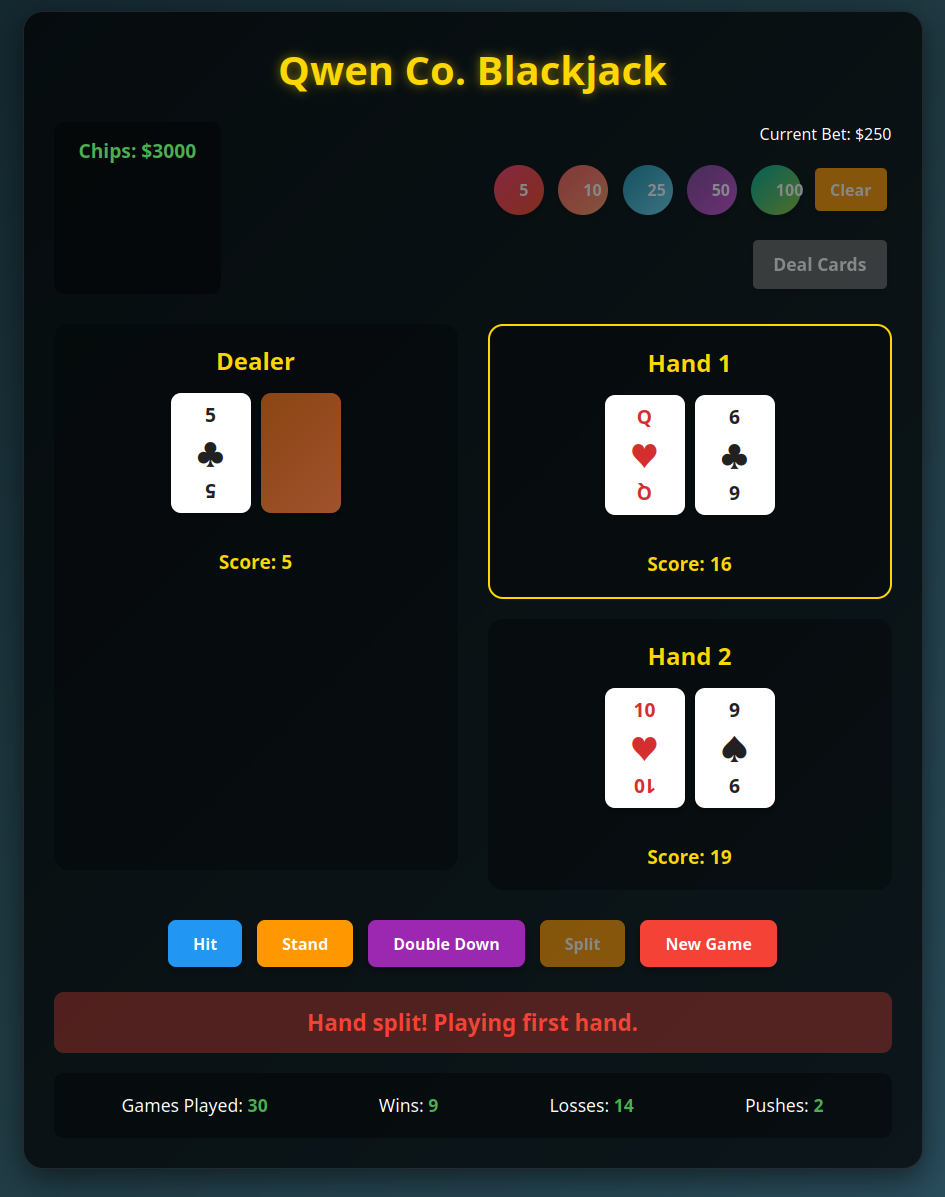

I'm locally coding with this model in RooCode , this model is absolutely thinking model.

hey guys why q2ks not doing best while browsing ? its just gets stucked in between every time

I recommand glm 4.5 model. I'm testing it in lm studio . less memory and faster speed. very good story teller and thinking model.

I recommand glm 4.5 model. I'm testing it in lm studio . less memory and faster speed. very good story teller and thinking model.

qwen dropped another model

update

Qwen/Qwen3-30B-A3B-Instruct-2507 great model very similar to gpt4o i think sam will get more pressure :P

Yes, I'll try to make some quants of Qwen3-30B given it is much easier for people to run!

Again, thanks very much for all these quants.

I had a long discussion with my new Qwen3 Coder 480B on my system (128GB RAM and 32 GB VRAM) heavily quantized, so I am limited to use the IQ1_KT where parts of the model is down to 1-bit per weight.

Qwen3 recommended to offload the higher layers to the GPU rather than the lower layers.

"Late layers are critical for final output generation — they dominate latency in autoregressive decoding.

✅ Best practice: Offload the latest layers to GPU, since:

Decoding is sequential: each new token goes through all layers.

The last few layers are executed on every generation step.

Thus, offloading later layers reduces latency more than early ones.

✅ Recommended Strategy: Prioritize End of the Network

"

It also recommended to run KV-cache with q4_0 instead of q8_0 (since precision anyway is lowered alot in some layers going all the way down to q1).

Said and done, I found below options to ik_llama to perform the best on my system so far. Any more ideas for me?

/home/mir/services/ik_llama/ik_llama.cpp/build/bin/llama-server

-fa

-fmoe

-ctk q4_0

-ctv q4_0

-c 32768

-ngl 99

-ot "blk.(70|71|72|73|74|75|76|77|78|79|80|81|82|83|84|85|86|87|88|89|90|91|92|93).ffn_.*=CUDA0"

-ot exps=CPU

--parallel 1

--threads 24

--no-warmup

--port ${PORT}

✅ Best practice: Offload the latest layers to GPU, since:

Decoding is sequential: each new token goes through all layers.

The last few layers are executed on every generation step.

Thus, offloading later layers reduces latency more than early ones.

✅ Recommended Strategy: Prioritize End of the Network

I wouldn't listen to any of that personally. It doesn't matter much as long as you offload layers in order to avoid extra hops across PCIe bus between GPUs / CPU devices. Should be same speed as offloading any sequential group of layers I'd expect, but I haven't benchmarked it myself with llama-sweep-bench.

You could have two options for your command:

- also add

-ub 4096 -b 4096to increase prompt processing speed significantly. you could also consider--no-mmapif you have RAM to possibly make use of transparent huge pages but likely won't effect speed any more. - otherwise, do not do 1 and try

-rtrfor run-time-repack to _R4 row interleaved quants which may give slightly more TG. These don't benefit so much from larger batch sizes and it already disables mmap.

Glad you can get some use out of that smallest IQ1_KT. Keep in mind the KT trellis quants are optimized for GPU and slower on CPU for TG. But very cool it allows you to use this big model!