Conditional-DETR ResNet-50 - Handwritten Signature Detection

This repository presents a Conditional-DETR model with a ResNet-50 backbone, fine-tuned to detect handwritten signatures in document images. This model achieved the highest mAP@0.5 (93.65%) among the 21 architectures tested in our evaluation.

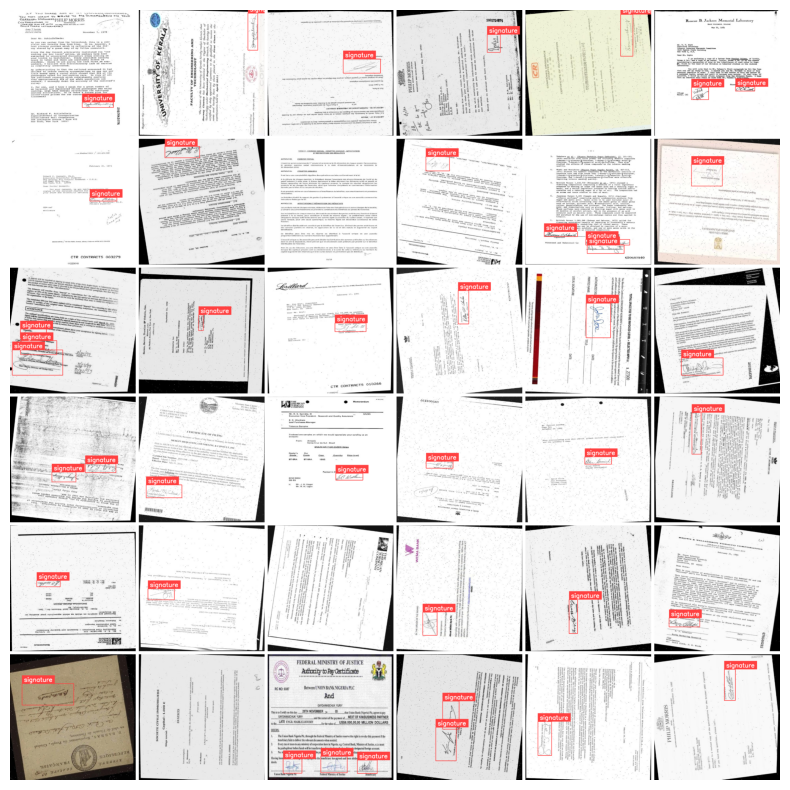

Dataset


Dataset Summary:

- Training: 1,980 images (70%)

- Validation: 420 images (15%)

- Testing: 419 images (15%)

- Format: COCO JSON

- Resolution: 640x640 pixels
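The split percentages above are rounded. As a quick arithmetic check, using only the counts listed above, the 2,819 images divide into roughly 70.2% / 14.9% / 14.9%:

```python
# Image counts per split, as listed in the Dataset Summary.
splits = {"train": 1980, "validation": 420, "test": 419}

total = sum(splits.values())
# Percentage of the full dataset in each split, rounded to one decimal place.
shares = {name: round(100 * count / total, 1) for name, count in splits.items()}

print(total)   # 2819
print(shares)  # {'train': 70.2, 'validation': 14.9, 'test': 14.9}
```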

Training Process

The training process involved the following steps:

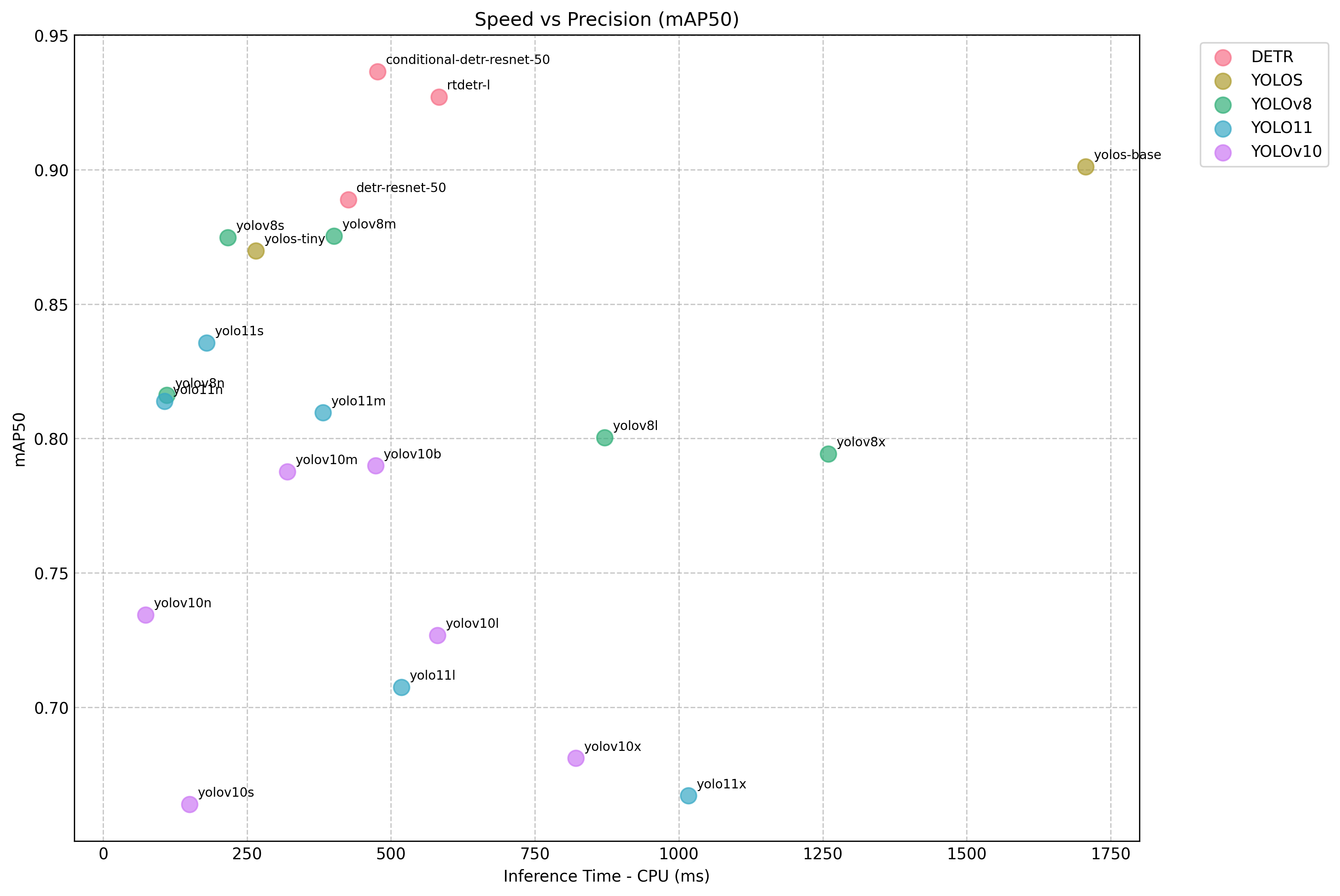

1. Model Selection:

Various object detection models were evaluated to identify the best balance between precision, recall, and inference time.

| Model | Inference Time - CPU (ms) | mAP50 | mAP50-95 |
|---|---|---|---|
| rtdetr-l | 583.608 | 0.92709 | 0.622364 |
| yolos-base | 1706.49 | 0.901154 | 0.583569 |
| yolos-tiny | 265.346 | 0.869814 | 0.469064 |
| conditional-detr-resnet-50 | 476.831 | 0.936524 | 0.653321 |
| detr-resnet-50 | 425.649 | 0.88885 | 0.579428 |
| yolov8x | 1259.47 | 0.794237 | 0.552919 |
| yolov8l | 871.329 | 0.800312 | 0.593976 |
| yolov8m | 401.183 | 0.875322 | 0.665495 |
| yolov8s | 216.6 | 0.874721 | 0.65457 |
| yolov8n | 110.442 | 0.816089 | 0.623963 |
| yolo11x | 1016.68 | 0.667074 | 0.482289 |
| yolo11l | 518.147 | 0.707409 | 0.499126 |
| yolo11m | 381.652 | 0.809557 | 0.600797 |
| yolo11s | 179.792 | 0.835605 | 0.638849 |
| yolo11n | 106.656 | 0.813799 | 0.617496 |
| yolov10x | 821.183 | 0.681023 | 0.474535 |
| yolov10l | 580.767 | 0.726802 | 0.522654 |
| yolov10b | 473.109 | 0.789835 | 0.578874 |
| yolov10m | 320.12 | 0.787688 | 0.581259 |
| yolov10s | 150.076 | 0.663877 | 0.473857 |
| yolov10n | 73.8596 | 0.734332 | 0.552704 |

Highlights:

- Best mAP50: conditional-detr-resnet-50 (0.936524)

- Best mAP50-95: yolov8m (0.665495)

- Fastest Inference Time: yolov10n (73.8596 ms)
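The highlights can be recomputed directly from the table. The sketch below copies the table values into a list, picks the best and fastest models, and also extracts a speed/accuracy Pareto front on CPU time and mAP50 (models for which no faster model reaches the same or higher mAP50). The Pareto analysis is an illustration added here, not necessarily the procedure used to select the model.

```python
# (model, CPU inference time in ms, mAP50, mAP50-95), copied from the table above.
ROWS = [
    ("rtdetr-l", 583.608, 0.92709, 0.622364),
    ("yolos-base", 1706.49, 0.901154, 0.583569),
    ("yolos-tiny", 265.346, 0.869814, 0.469064),
    ("conditional-detr-resnet-50", 476.831, 0.936524, 0.653321),
    ("detr-resnet-50", 425.649, 0.88885, 0.579428),
    ("yolov8x", 1259.47, 0.794237, 0.552919),
    ("yolov8l", 871.329, 0.800312, 0.593976),
    ("yolov8m", 401.183, 0.875322, 0.665495),
    ("yolov8s", 216.6, 0.874721, 0.65457),
    ("yolov8n", 110.442, 0.816089, 0.623963),
    ("yolo11x", 1016.68, 0.667074, 0.482289),
    ("yolo11l", 518.147, 0.707409, 0.499126),
    ("yolo11m", 381.652, 0.809557, 0.600797),
    ("yolo11s", 179.792, 0.835605, 0.638849),
    ("yolo11n", 106.656, 0.813799, 0.617496),
    ("yolov10x", 821.183, 0.681023, 0.474535),
    ("yolov10l", 580.767, 0.726802, 0.522654),
    ("yolov10b", 473.109, 0.789835, 0.578874),
    ("yolov10m", 320.12, 0.787688, 0.581259),
    ("yolov10s", 150.076, 0.663877, 0.473857),
    ("yolov10n", 73.8596, 0.734332, 0.552704),
]

best_map50 = max(ROWS, key=lambda r: r[2])
best_map50_95 = max(ROWS, key=lambda r: r[3])
fastest = min(ROWS, key=lambda r: r[1])

# Pareto front: walk the models from fastest to slowest and keep each one
# that beats the best mAP50 seen so far among faster models.
pareto = []
best_so_far = float("-inf")
for name, ms, map50, _ in sorted(ROWS, key=lambda r: r[1]):
    if map50 > best_so_far:
        pareto.append(name)
        best_so_far = map50

print(best_map50[0], best_map50_95[0], fastest[0])
# conditional-detr-resnet-50 yolov8m yolov10n
print(pareto)
# ['yolov10n', 'yolo11n', 'yolov8n', 'yolo11s', 'yolov8s', 'yolov8m',
#  'detr-resnet-50', 'conditional-detr-resnet-50']
```

With these numbers, YOLOv8s (the model tuned in the next step) lies on this front, as does Conditional-DETR at the high-accuracy end.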

Detailed experiments are available on Weights & Biases.

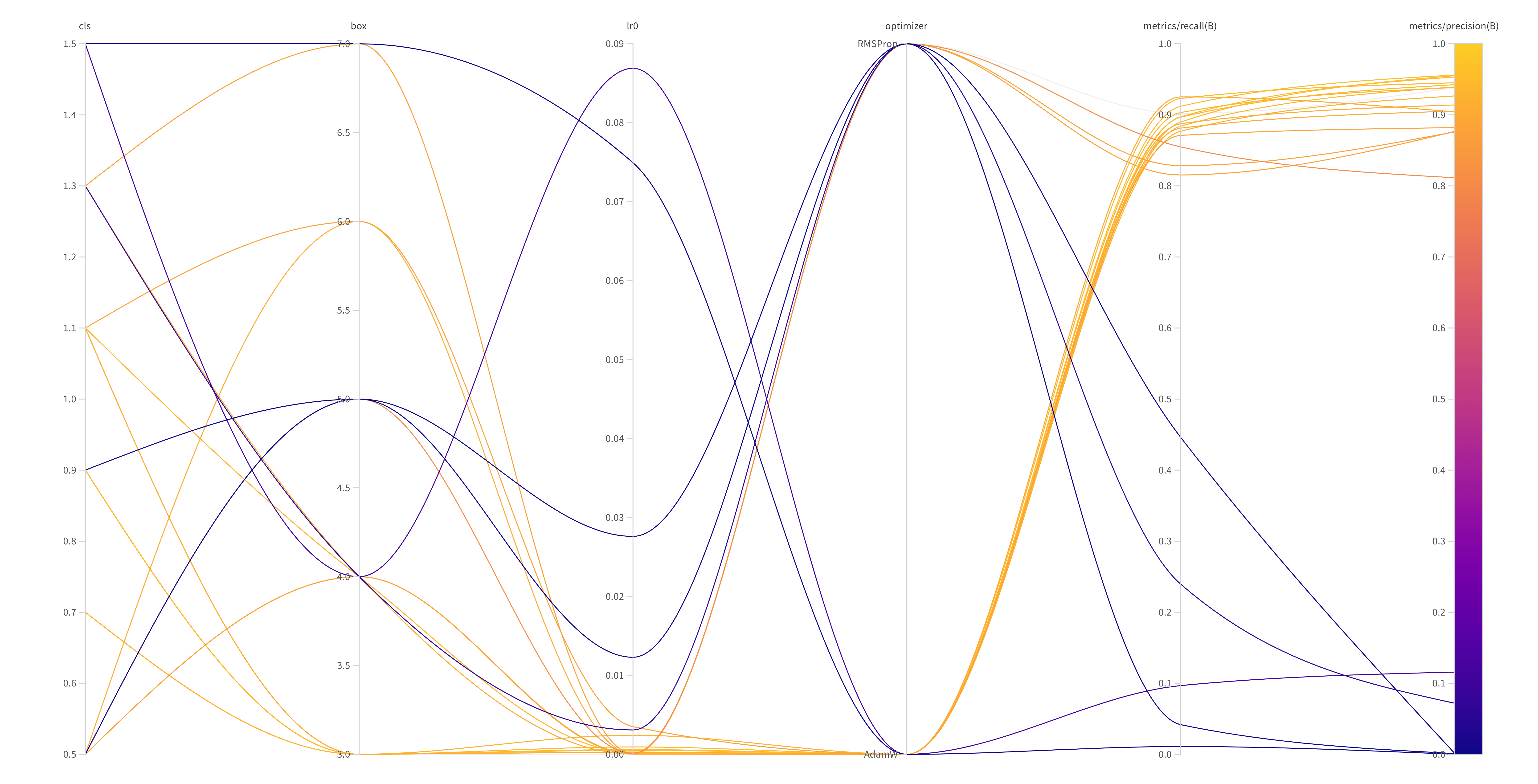

2. Hyperparameter Tuning:

The YOLOv8s model, which demonstrated a good balance of inference time, precision, and recall, was selected for hyperparameter tuning.

Optuna was used to run 20 optimization trials over the following search space:

```python
# Search space defined inside the Optuna objective function (`trial` is an optuna.Trial)
dropout = trial.suggest_float("dropout", 0.0, 0.5, step=0.1)
lr0 = trial.suggest_float("lr0", 1e-5, 1e-1, log=True)
box = trial.suggest_float("box", 3.0, 7.0, step=1.0)
cls = trial.suggest_float("cls", 0.5, 1.5, step=0.2)
opt = trial.suggest_categorical("optimizer", ["AdamW", "RMSProp"])
```

Results can be visualized here: Hypertuning Experiment.

3. Evaluation:

At the end of training, the models were exported to ONNX (CPU) and TensorRT (GPU, T4) formats and evaluated on the test set. Performance metrics included precision, recall, mAP50, and mAP50-95.
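For reference, mAP50 counts a predicted box as a true positive when its intersection over union (IoU) with a ground-truth box is at least 0.5, while mAP50-95 averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05 (the COCO convention). The sketch below illustrates only the IoU part, not the full AP computation, using hypothetical box coordinates: a box shifted by 10 px still matches at 7 of the 10 thresholds, which shows why mAP50-95 is the stricter metric.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    # Width and height of the overlap rectangle (0 if the boxes do not intersect).
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


# Hypothetical prediction shifted 10 px to the left of its ground-truth box.
pred = (10, 10, 110, 60)
gt = (20, 10, 120, 60)
overlap = iou(pred, gt)  # 4500 / 5500

# COCO-style IoU thresholds used by mAP50-95: 0.50, 0.55, ..., 0.95.
thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
matched = [t for t in thresholds if overlap >= t]
print(round(overlap, 3), len(matched))  # 0.818 7
```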

Results Comparison:

| Metric | Base Model | Best Trial (#10) | Difference (pp) |
|---|---|---|---|
| mAP50 | 87.47% | 95.75% | +8.28 |
| mAP50-95 | 65.46% | 66.26% | +0.81 |
| Precision | 97.23% | 95.61% | -1.63 |
| Recall | 76.16% | 91.21% | +15.05 |
| F1-score | 85.42% | 93.36% | +7.94 |
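The F1-score row is the harmonic mean of precision and recall, F1 = 2PR / (P + R), and the differences are absolute changes in percentage points (pp). Recomputing F1 from the rounded precision and recall in the table reproduces the listed values to within about 0.01 pp; the small gap presumably comes from rounding of the inputs.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) from the Results Comparison table.
base_f1 = f1_score(97.23, 76.16)
best_f1 = f1_score(95.61, 91.21)

print(f"base: {base_f1:.2f}%  best: {best_f1:.2f}%  change: {best_f1 - base_f1:+.2f} pp")
```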

Results

After hyperparameter tuning of the YOLOv8s model, the best model achieved the following results on the test set:

- Precision: 94.74%

- Recall: 89.72%

- mAP@50: 94.50%

- mAP@50-95: 67.35%

- Inference Time:

  - ONNX Runtime (CPU): 171.56 ms

  - TensorRT (GPU - T4): 7.657 ms

How to Use

Installation

```bash
pip install transformers torch torchvision pillow
# matplotlib is only needed for the visualization example
pip install matplotlib
```

Inference

```python
from transformers import AutoImageProcessor, AutoModelForObjectDetection
from PIL import Image
import torch

# Load model and processor
model_name = "tech4humans/conditional-detr-50-signature-detector"
processor = AutoImageProcessor.from_pretrained(model_name)
model = AutoModelForObjectDetection.from_pretrained(model_name)

# Load and process image (convert to RGB so grayscale or RGBA scans also work)
image = Image.open("path/to/your/document.jpg").convert("RGB")
inputs = processor(images=image, return_tensors="pt")

# Run inference
with torch.no_grad():
    outputs = model(**inputs)

# Post-process results: rescale boxes to the original image size as (height, width)
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(
    outputs, target_sizes=target_sizes, threshold=0.5
)[0]

# Extract detections; boxes are (xmin, ymin, xmax, ymax) in pixels
for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(f"Detected signature with confidence {round(score.item(), 3)} at location {box}")
```
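`post_process_object_detection` returns boxes as absolute (xmin, ymin, xmax, ymax) pixel coordinates, whereas COCO JSON (the dataset format listed above) stores boxes as [x, y, width, height]. If you want to save detections in the COCO results format, for example to score them with a COCO evaluation tool, a minimal conversion sketch could look like this. The helper name `to_coco_detections` and the `image_id` value are hypothetical, and the sketch assumes the model's label ids match the dataset's `category_id` values, which you should check against your annotation file.

```python
import json


def to_coco_detections(scores, labels, boxes, image_id):
    """Hypothetical helper: convert (xmin, ymin, xmax, ymax) boxes into
    COCO-style result dicts whose "bbox" is [x, y, width, height]."""
    detections = []
    for score, label, (x0, y0, x1, y1) in zip(scores, labels, boxes):
        detections.append({
            "image_id": image_id,
            "category_id": int(label),  # assumes model label id == COCO category_id
            "bbox": [round(x0, 2), round(y0, 2), round(x1 - x0, 2), round(y1 - y0, 2)],
            "score": round(float(score), 3),
        })
    return detections


# Plain-Python example values; with the inference snippet you would pass
# results["scores"].tolist(), results["labels"].tolist() and results["boxes"].tolist().
detections = to_coco_detections([0.9123], [0], [[100.0, 200.0, 300.0, 260.0]], image_id=1)
print(json.dumps(detections))
```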

Visualization

```python
import matplotlib.pyplot as plt
import matplotlib.patches as patches
from PIL import Image

def visualize_predictions(image_path, results, threshold=0.5):
    # `results` is the post-processed output from the inference example above
    image = Image.open(image_path).convert("RGB")
    fig, ax = plt.subplots(1, figsize=(12, 9))
    ax.imshow(image)

    for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
        if score.item() > threshold:
            # Boxes are (xmin, ymin, xmax, ymax); Rectangle expects a corner plus width/height
            x, y, x2, y2 = box.tolist()
            width, height = x2 - x, y2 - y
            rect = patches.Rectangle(
                (x, y), width, height,
                linewidth=2, edgecolor='red', facecolor='none'
            )
            ax.add_patch(rect)
            ax.text(x, y - 10, f'Signature: {score.item():.3f}',
                    bbox=dict(boxstyle="round,pad=0.3", facecolor="yellow", alpha=0.7))

    ax.set_title("Signature Detection Results")
    plt.axis('off')
    plt.show()

# Use the visualization
visualize_predictions("path/to/your/document.jpg", results)
```

Demo

You can explore the model and test real-time inference in the Hugging Face Spaces demo, built with Gradio and ONNXRuntime.

🔗 Inference with Triton Server

If you want to deploy this signature detection model in a production environment, check out our inference server repository based on the NVIDIA Triton Inference Server.


Infrastructure

Software

The model was trained and tuned using a Jupyter Notebook environment.

- Operating System: Ubuntu 22.04

- Python: 3.10.12

- PyTorch: 2.5.1+cu121

- Ultralytics: 8.3.58

- Roboflow: 1.1.50

- Optuna: 4.1.0

- ONNX Runtime: 1.20.1

- TensorRT: 10.7.0

Hardware

Training was performed on a Google Cloud Platform n1-standard-8 instance with the following specifications:

- CPU: 8 vCPUs

- GPU: NVIDIA Tesla T4

License

Model Weights, Code and Training Materials – Apache 2.0

- License: Apache License 2.0

- Usage: All training scripts, deployment code, and usage instructions are licensed under the Apache 2.0 license.

Contact and Information

For further information, questions, or contributions, contact us at [email protected].

📧 Email: [email protected]

🌐 Website: www.tech4.ai

💼 LinkedIn: Tech4Humans

Author

Samuel Lima, AI Research Engineer


Developed with 💜 by Tech4Humans


Model tree for tech4humans/conditional-detr-50-signature-detector

- Base model: microsoft/conditional-detr-resnet-50


Evaluation results

- mAP@0.5 on the tech4humans/signature-detection test set (self-reported): 0.937

- mAP@0.5:0.95 on the tech4humans/signature-detection test set (self-reported): 0.653