
title: VacAIgent

emoji: 🐨

colorFrom: yellow

colorTo: purple

sdk: streamlit

sdk_version: 1.45.1

app_file: app.py

pinned: false

license: mit

short_description: Let AI agents plan your next vacation!

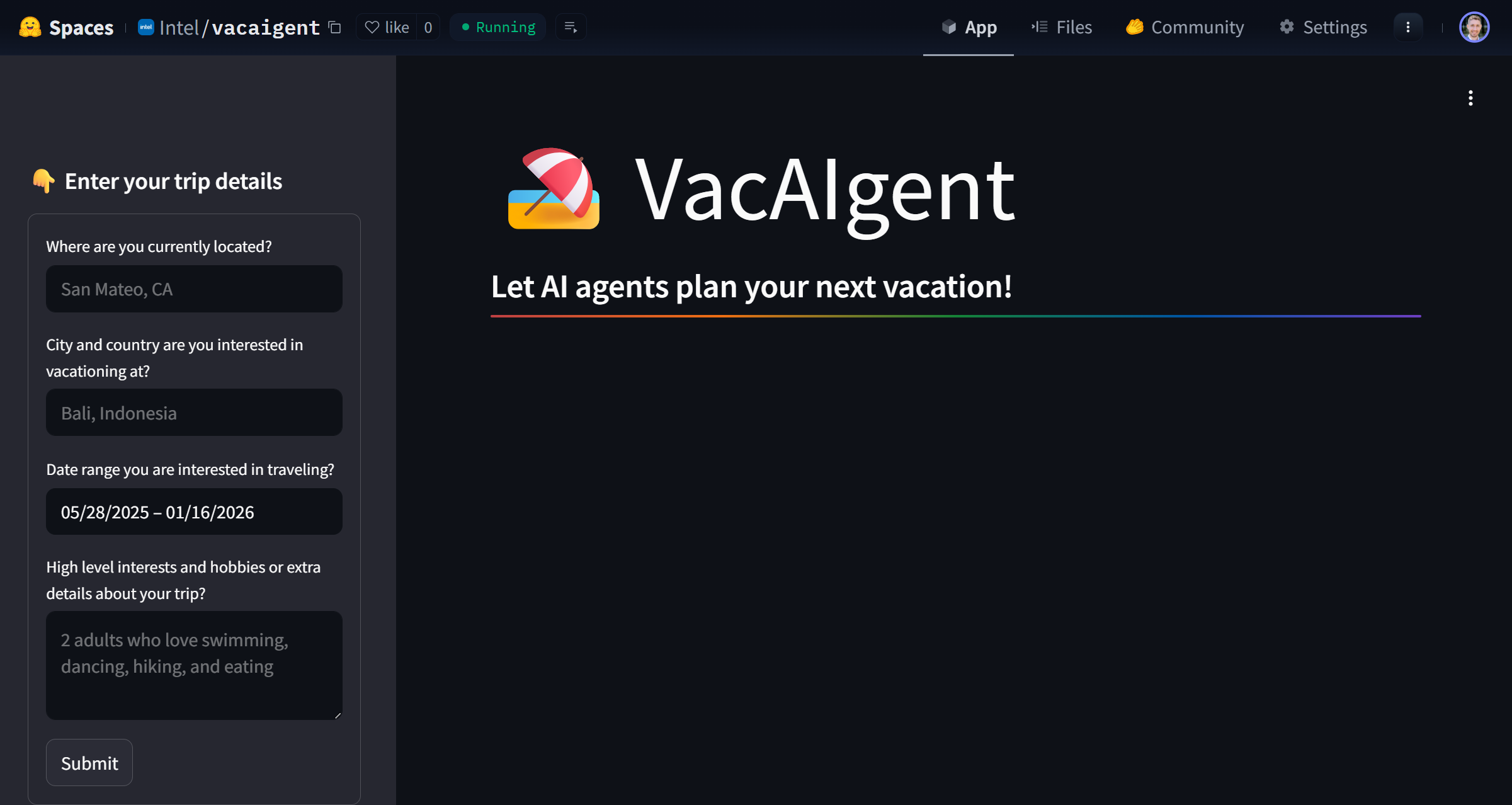

🏖️ VacAIgent: Let AI agents plan your next vacation!

VacAIgent uses the CrewAI agentic framework to automate trip planning behind a user-friendly Streamlit interface. The project demonstrates how autonomous AI agents can collaborate to carry out a complex, multi-step task: planning a vacation. Inference runs through Intel® AI for Enterprise Inference, an endpoint accessed with an OpenAI-compatible API key.

Forked and enhanced from the crewAI examples repository. You can find the application hosted on Hugging Face Spaces here:

Check out the video below for a code walkthrough; the steps are also written out below 👇

(Trip example originally developed by @joaomdmoura)

Installing and Using the Application

Pre-Requisites

- Get an API key from ScrapingAnt for HTML web scraping.

- Get an API key from Serper for the Google Search API.

- Bring your OpenAI-compatible API key.

- Bring your model endpoint URL and LLM model ID.

Installation steps

To host the interface locally, first, clone the repository:

git clone https://huggingface.co/spaces/Intel/vacaigent

cd vacaigent

Then, install the necessary libraries:

pip install -r requirements.txt

Add Streamlit secrets. Create a .streamlit/secrets.toml file and update the variables below:

SERPER_API_KEY="serper-api-key"

SCRAPINGANT_API_KEY="scrapingant_api_key"

OPENAI_API_KEY="openai_api_key"

MODEL_ID="meta-llama/Llama-3.3-70B-Instruct"

MODEL_BASE_URL="https://api.inference.denvrdata.com/v1/"

By default, the app uses the model meta-llama/Llama-3.3-70B-Instruct, served from an endpoint hosted on Denvr Dataworks. You can instead bring your own OpenAI-compatible API key, model ID, and model endpoint URL.
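To show how the three model values fit together, here is a rough sketch of a chat request to an OpenAI-compatible endpoint, built with the standard library only. It assumes the endpoint follows the usual OpenAI convention of a /chat/completions route and a Bearer token header. The function name build_chat_request and the prompt are illustrative, and this is not how the app itself calls the model (CrewAI handles that internally).

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model_id: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request."""
    # The base URL already ends in /v1/, so only the route is appended.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model_id,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    base_url="https://api.inference.denvrdata.com/v1/",
    api_key="openai_api_key",  # placeholder value, as in secrets.toml
    model_id="meta-llama/Llama-3.3-70B-Instruct",
    prompt="Suggest a three-day itinerary for Lisbon.",  # made-up prompt
)
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` with a real key would send it; building it without sending is enough to confirm that the URL and model ID are combined as expected.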

Note: You can alternatively add these secrets directly to Hugging Face Spaces Secrets, under the Settings tab, if deploying the Streamlit application directly on Hugging Face.

Run the application

To run the application locally, execute this command to pull up a Streamlit interface in your web browser:

streamlit run app.py

Components:

- trip_tasks.py: Contains task prompts for the agents.

- trip_agents.py: Manages the creation of agents.

- tools directory: Houses tool classes used by agents.

- app.py: The heart of the frontend Streamlit app.

Using Local Models with Ollama

For more privacy and customization, you can replace the cloud-hosted model with a locally hosted model served by Ollama.
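Ollama exposes an OpenAI-compatible API under /v1 on its default port 11434, so one plausible way to make the switch is to point the three model secrets at the local server. The sketch below generates those values. The model name llama3.1 is only an example of a model you would have pulled locally, the helper names are hypothetical, and whether the app accepts these values unchanged depends on how trip_agents.py builds its LLM client.

```python
def ollama_settings(model_id: str, host: str = "http://localhost:11434") -> dict[str, str]:
    """Return secrets overrides that target a local Ollama server."""
    return {
        # Ollama does not check the key, but OpenAI-style clients
        # generally expect a non-empty value.
        "OPENAI_API_KEY": "ollama",
        "MODEL_ID": model_id,
        "MODEL_BASE_URL": host.rstrip("/") + "/v1/",
    }


def to_toml_lines(settings: dict[str, str]) -> str:
    """Format the settings as KEY="value" lines for secrets.toml."""
    return "\n".join(f'{key}="{value}"' for key, value in settings.items())


# "llama3.1" is an assumed example; use whichever model you have pulled.
print(to_toml_lines(ollama_settings("llama3.1")))
```

The printed lines can replace the matching entries in .streamlit/secrets.toml; the Serper and ScrapingAnt keys stay as they are.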

License

VacAIgent is open-sourced under the MIT license.

Follow Up

Connect to LLMs on Intel Gaudi AI accelerators with just an endpoint and an OpenAI-compatible API key, using the inference endpoint Intel® AI for Enterprise Inference, powered by OPEA. At the time of writing, the endpoint is available on cloud provider Denvr Dataworks.

Chat with 6K+ fellow developers on the Intel DevHub Discord.

Follow Intel Software on LinkedIn.

For more Intel AI developer resources, see developer.intel.com/ai.