Model description

This is a Histogram-based Gradient Boosting Classification Tree model trained on historical HPC jobs from 1 Feb to 1 Aug 2022, window number 0.

Window Start: 2022-02-01 00:06:58; Window End: 2022-03-03 04:05:20; Total Jobs in Window 0: 35812.

Best parameters: {'hgbc__learning_rate': 0.1, 'hgbc__max_depth': 9, 'hgbc__max_iter': 600}

Performance on TEST

Accuracy on entire set: 0.946168166304685

Accuracy for last bin scheduling assuming bins <= 0 are incorrect: 0.9454; (936/990)

Accuracy for last bin scheduling assuming bins <= 1 are incorrect: 0.9242; (915/990)

Accuracy for last bin scheduling assuming bins <= 2 are incorrect: 0.9121; (903/990)

Accuracy for last bin scheduling assuming bins <= 3 are incorrect: 0.8878; (879/990)
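The four accuracies above can be reproduced from the counts in parentheses. The following is a minimal sketch, assuming the reported values are truncated (not rounded) to four decimals, which is what 0.9454 and 0.8878 suggest. The helper name `truncate4` is hypothetical and not part of the original evaluation code.

```python
import math

# Correct / total counts copied from the list above, keyed by the
# threshold k in "bins <= k are incorrect".
correct_by_threshold = {0: 936, 1: 915, 2: 903, 3: 879}
total = 990

def truncate4(x):
    """Truncate a value to four decimals (hypothetical helper)."""
    return math.floor(x * 10_000) / 10_000

accuracies = {k: truncate4(c / total) for k, c in correct_by_threshold.items()}

for k, acc in accuracies.items():
    print(f"bins <= {k}: {acc:.4f} ({correct_by_threshold[k]}/{total})")
```

With conventional rounding instead of truncation, the first and last values would read 0.9455 and 0.8879.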

Intended uses & limitations

[More Information Needed]

Training Procedure

[More Information Needed]

Hyperparameters

| Hyperparameter | Value |
|---|---|
| memory | |
| steps | [('scale', StandardScaler()), ('hgbc', HistGradientBoostingClassifier(max_depth=9, max_iter=600))] |
| verbose | False |
| scale | StandardScaler() |
| hgbc | HistGradientBoostingClassifier(max_depth=9, max_iter=600) |
| scale__copy | True |
| scale__with_mean | True |
| scale__with_std | True |
| hgbc__categorical_features | |
| hgbc__class_weight | |
| hgbc__early_stopping | auto |
| hgbc__interaction_cst | |
| hgbc__l2_regularization | 0.0 |
| hgbc__learning_rate | 0.1 |
| hgbc__loss | log_loss |
| hgbc__max_bins | 255 |
| hgbc__max_depth | 9 |
| hgbc__max_iter | 600 |
| hgbc__max_leaf_nodes | 31 |
| hgbc__min_samples_leaf | 20 |
| hgbc__monotonic_cst | |
| hgbc__n_iter_no_change | 10 |
| hgbc__random_state | |
| hgbc__scoring | loss |
| hgbc__tol | 1e-07 |
| hgbc__validation_fraction | 0.1 |
| hgbc__verbose | 0 |
| hgbc__warm_start | False |

Model Plot

Pipeline(steps=[('scale', StandardScaler()), ('hgbc', HistGradientBoostingClassifier(max_depth=9, max_iter=600))])

Evaluation Results

| Metric | Value |
|---|---|
| accuracy | 0.946168166304685 |

Classification report:

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| 0 | 0.97 | 0.98 | 0.98 | 5075 |
| 1 | 0.74 | 0.57 | 0.64 | 218 |
| 2 | 0.70 | 0.59 | 0.64 | 108 |
| 3 | 0.67 | 0.55 | 0.60 | 86 |
| 4 | 0.89 | 0.92 | 0.90 | 959 |
| accuracy | | | 0.95 | 6446 |
| macro avg | 0.79 | 0.72 | 0.75 | 6446 |
| weighted avg | 0.94 | 0.95 | 0.94 | 6446 |
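The macro averages in the classification report are unweighted means of the per-class scores, so they can be checked from the rows above. This is a rough sketch that uses only the two-decimal values printed in the report. The variable names are illustrative. Weighted averages are left out because recomputing them from rounded inputs can drift in the last digit.

```python
# Per-class rows from the classification report:
# class -> (precision, recall, f1, support)
report = {
    0: (0.97, 0.98, 0.98, 5075),
    1: (0.74, 0.57, 0.64, 218),
    2: (0.70, 0.59, 0.64, 108),
    3: (0.67, 0.55, 0.60, 86),
    4: (0.89, 0.92, 0.90, 959),
}

n_classes = len(report)
total_support = sum(row[3] for row in report.values())

# Macro average: plain mean over classes, ignoring class sizes.
macro = tuple(
    round(sum(row[i] for row in report.values()) / n_classes, 2)
    for i in range(3)
)

# Number of correctly classified test jobs implied by the overall accuracy.
correct = round(0.946168166304685 * total_support)

print("total support:", total_support)
print("macro precision / recall / f1:", macro)
print("correct predictions:", correct)
```

The gap between the macro recall (0.72) and the overall accuracy (0.95) reflects the class imbalance: class 0 accounts for 5075 of the 6446 test jobs.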

How to Get Started with the Model

[More Information Needed]

Model Card Authors

This model card was written by the following authors:

[More Information Needed]

Model Card Contact

You can contact the model card authors through the following channels: [More Information Needed]

Citation

Below you can find information related to citation.

BibTeX:

[More Information Needed]

citation_bibtex

@inproceedings{...,year={2024}}

get_started_code

    import pickle

    with open(dtc_pkl_filename, 'rb') as file:
        clf = pickle.load(file)
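The loading step can be wrapped in a small helper that fails early when the file is missing. This is a sketch only: `load_classifier` and `X_new` are hypothetical names, and `dtc_pkl_filename` is assumed to point at the pickled pipeline. Unpickling the pipeline also requires scikit-learn to be installed, ideally in the same version used for training.

```python
import pickle
from pathlib import Path

def load_classifier(path):
    """Load a pickled object from `path` (hypothetical helper)."""
    path = Path(path)
    if not path.is_file():
        raise FileNotFoundError(f"model file not found: {path}")
    # Only unpickle files from a trusted source:
    # pickle can execute arbitrary code while loading.
    with path.open("rb") as file:
        return pickle.load(file)

# Example usage (not executed here):
# clf = load_classifier(dtc_pkl_filename)
# predicted_bins = clf.predict(X_new)  # X_new: hypothetical feature matrix
```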

model_card_authors

Smruti Padhy, Joe Stubbs

limitations

This model is ready to be used in production.

eval_method

The model is evaluated on the test split using accuracy and macro-averaged F1 score.

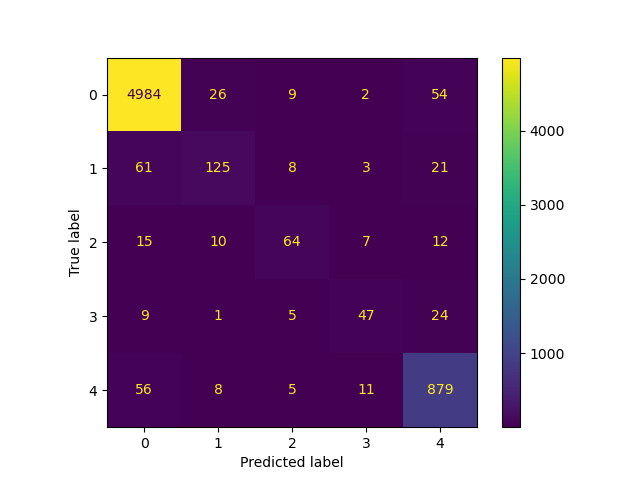

confusion_matrix
