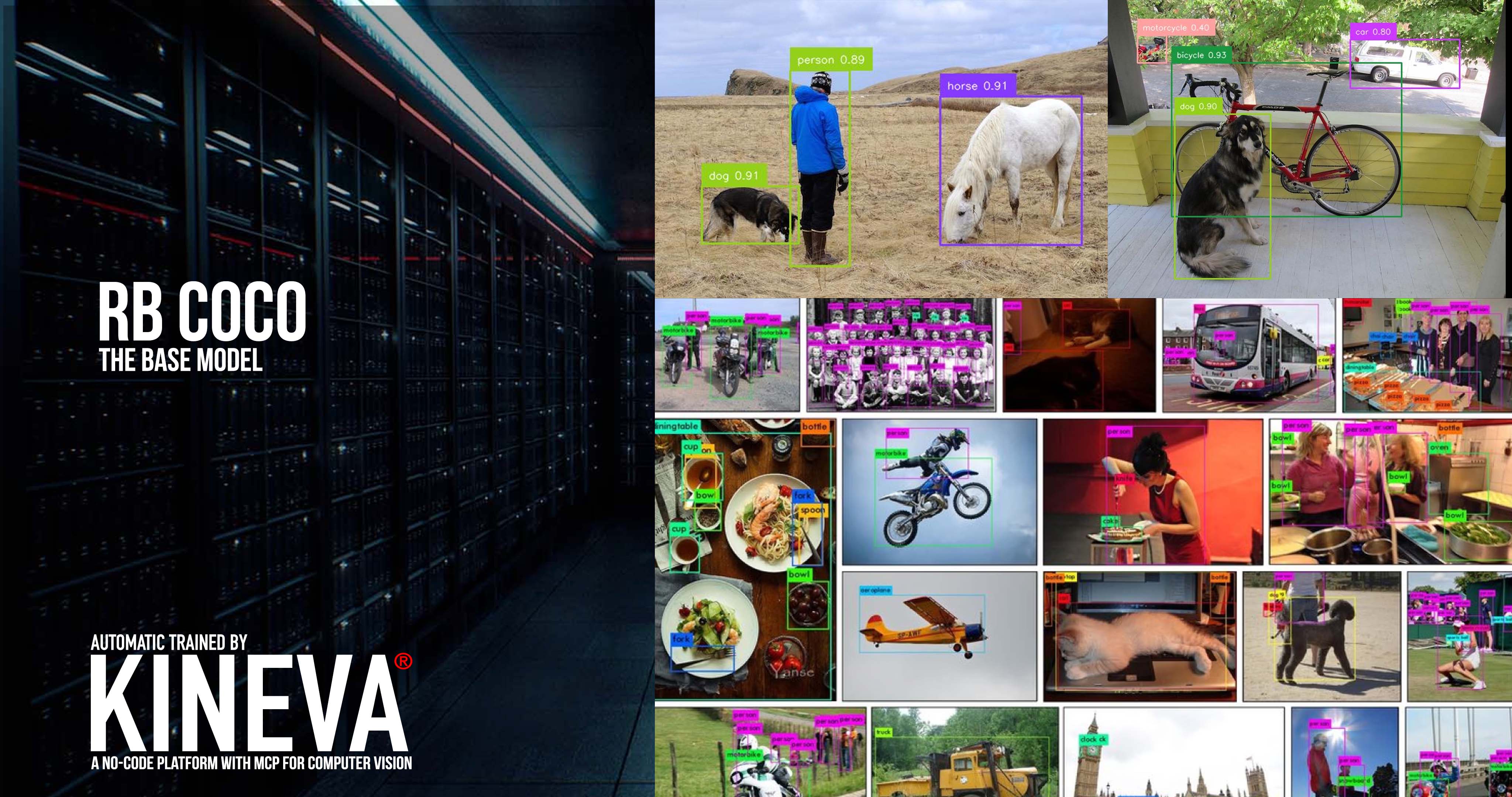

Model Card for rebotnix/rb_coco

🎯 General Object Detection on COCO Dataset – Trained by KINEVA, Built by REBOTNIX, Germany

Current State: in production and re-training.

rb_coco is a high-performance object detection model trained on the COCO dataset. It detects a wide range of object categories (e.g., people, vehicles, animals, furniture). Designed for robust performance under varied lighting, scale, and background conditions, it suits research, prototyping, and applied AI in areas such as urban monitoring and automation.

Developed and maintained by REBOTNIX, Germany, https://rebotnix.com

About KINEVA

KINEVA® is an automated training platform based on the MCP Agent system. It regularly delivers new visual computing models, all developed entirely from scratch. This approach enables the creation of customized models tailored to specific client requirements, which can be retrained and re-released as needed. The platform is particularly suited for applications that demand flexibility, adaptability, and technological precision—such as industrial image processing, smart city analytics, or automated object detection.

KINEVA is continuously evolving to meet the growing demands in the fields of artificial intelligence and machine vision. https://rebotnix.com/en/kineva

✈️ Example Predictions

| Input Image | Detection Result |
|---|---|
| (More example visualizations coming soon) | |

Model Details

- Architecture: RF-DETR (custom training head with optimized anchor boxes)

- Task: Object Detection (80 COCO categories, e.g. person, car, dog, bicycle)

- Trained on: COCO (Common Objects in Context) dataset

- Format: PyTorch `.pth`; ONNX and TensorRT (trt) export available on request

- Backbone: EfficientNet B3 (adapted)

- Training Framework: PyTorch + RF-DETR + custom augmentation

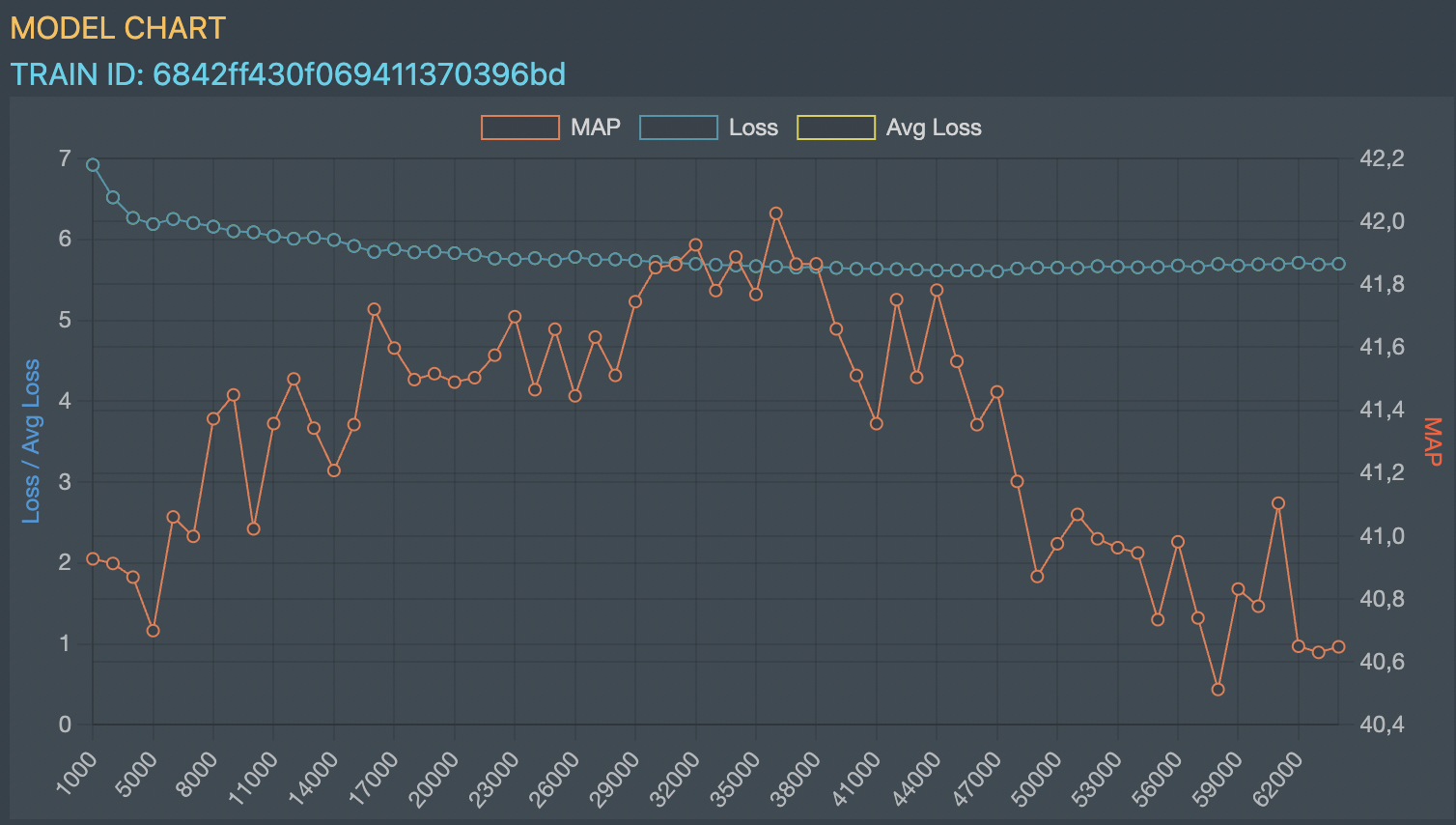

Chart

During training, we observed a distinct trend beginning at around step 36,000: the mAP (mean Average Precision) began to decline while the loss values remained relatively stable or started fluctuating. This divergence indicated the onset of overfitting, where performance on the training data continues to improve but generalization to unseen data is at risk.

To prevent further degradation and preserve generalization, we intentionally stopped training at this point. The checkpoint closest to the optimal balance of loss and mAP has been retained and made available.
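
The stopping decision described above can be sketched as a patience rule over validation mAP. This is a minimal illustration and not the KINEVA training code: the function name `pick_checkpoint`, the `patience` parameter, and the sample mAP values are all hypothetical.

```python
from typing import List, Optional, Tuple


def pick_checkpoint(
    history: List[Tuple[int, float]], patience: int = 3
) -> Tuple[int, Optional[int]]:
    """Return (best_step, stop_step) for a list of (step, validation mAP).

    best_step is the step with the highest mAP seen before stopping.
    stop_step is the step at which mAP has failed to improve for
    `patience` consecutive evaluations, or None if that never happens.
    """
    if not history:
        raise ValueError("history must not be empty")

    best_step, best_map = history[0]
    evals_without_gain = 0

    for step, map_value in history[1:]:
        if map_value > best_map:
            # New best checkpoint: remember it and reset the counter.
            best_step, best_map = step, map_value
            evals_without_gain = 0
        else:
            evals_without_gain += 1
            if evals_without_gain >= patience:
                return best_step, step
    return best_step, None


# Hypothetical mAP values, chosen only to illustrate the rule.
history = [
    (32_000, 0.50),
    (34_000, 0.52),
    (36_000, 0.53),
    (38_000, 0.52),
    (40_000, 0.51),
    (42_000, 0.50),
]
best_step, stop_step = pick_checkpoint(history, patience=3)
print(best_step, stop_step)
```

A larger `patience` tolerates more noise in the mAP curve at the cost of extra training steps after the peak.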

We’re happy to license or provide access to all intermediate weights for research or further development purposes. Please feel free to reach out.

📦 Dataset

The model was trained exclusively on the COCO dataset, which includes:

- 80 object categories

- Over 330,000 images

- Diverse backgrounds and lighting conditions

- Complex scenes with multiple overlapping objects

More on COCO: https://cocodataset.org

Intended Use

| ✅ Intended Use | ❌ Not Intended Use |
|---|---|
| General object detection in images | Surveillance without human review |
| Academic research & prototyping | Military / lethal applications |
| Smart city & automation projects | Real-time tracking of people in critical situations |

⚠️ Limitations

- May yield false positives in highly cluttered environments

- Not fine-tuned for thermal or night vision

- Object occlusion and scale variance may reduce detection accuracy
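
One common way to reduce false positives in cluttered scenes is to apply a stricter confidence threshold to the classes that misfire most often. The sketch below is a plain-Python illustration and not part of rb_coco or rfdetr: `Detection`, `filter_detections`, and the threshold values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Detection:
    """Hypothetical stand-in for one model detection."""
    class_name: str
    confidence: float


def filter_detections(
    detections: List[Detection],
    default_threshold: float = 0.35,
    per_class: Optional[Dict[str, float]] = None,
) -> List[Detection]:
    """Keep detections whose confidence meets their class threshold."""
    per_class = per_class or {}
    return [
        d for d in detections
        if d.confidence >= per_class.get(d.class_name, default_threshold)
    ]


# Hypothetical detections from a cluttered indoor scene.
raw = [
    Detection("person", 0.90),
    Detection("person", 0.40),
    Detection("chair", 0.45),
    Detection("chair", 0.70),
]
# Assumed tuning: chairs misfire often, so they get a stricter threshold.
kept = filter_detections(raw, per_class={"chair": 0.60})
print([(d.class_name, d.confidence) for d in kept])
```

For this to work, the model's own prediction threshold must be no higher than the lowest per-class value; otherwise low-confidence detections are dropped before the filter sees them.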

Usage Example

```python
import supervision as sv
from PIL import Image
from rfdetr import RFDETRBase

model_path = "./rb_coco.pth"

# Truncated for clarity: complete the list with all class names before running,
# because num_classes below is derived from its length.
CLASS_NAMES = ["person", "bicycle", "car", "motorcycle", "airplane", ...]

# Load RF-DETR with the rb_coco weights.
model = RFDETRBase(pretrain_weights=model_path, num_classes=len(CLASS_NAMES))

image_path = "./example_coco1.jpg"
image = Image.open(image_path)

# Run inference with a confidence threshold of 0.35.
detections = model.predict(image, threshold=0.35)

# Build one "<class name> <confidence>" label per detection.
labels = [
    f"{CLASS_NAMES[class_id]} {confidence:.2f}"
    for class_id, confidence in zip(detections.class_id, detections.confidence)
]
print(labels)

# Draw boxes and labels on a copy of the input image, then save it.
annotated_image = image.copy()
annotated_image = sv.BoxAnnotator().annotate(annotated_image, detections)
annotated_image = sv.LabelAnnotator().annotate(annotated_image, detections, labels)
annotated_image.save("./output_1.jpg")
```
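
Beyond the raw label list, a per-class tally is often a handy summary of a prediction. The following is a minimal sketch: `count_by_class` is a hypothetical helper, and the short class list and ids are made-up inputs standing in for `CLASS_NAMES` and `detections.class_id` from the example above.

```python
from collections import Counter
from typing import Dict, Iterable, Sequence


def count_by_class(
    class_ids: Iterable[int], class_names: Sequence[str]
) -> Dict[str, int]:
    """Count detections per class name.

    int() is applied so that NumPy integer ids work as list indices too.
    """
    return dict(Counter(class_names[int(i)] for i in class_ids))


# Made-up inputs for illustration only.
sample_names = ["person", "bicycle", "car"]
sample_ids = [0, 2, 2, 0, 0]

counts = count_by_class(sample_ids, sample_names)
print(counts)
```

With real predictions, the call would be `count_by_class(detections.class_id, CLASS_NAMES)`.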

Contact

📫 For commercial use, re-training support, or dataset access, contact:

REBOTNIX

✉️ Email: [email protected]

🌐 Website: https://rebotnix.com

License

This model is released under CC-BY-NC-SA unless otherwise noted. For commercial licensing, please reach out to the contact email.