Upload folder using huggingface_hub

- checkpoints/checkpoint-pt-65000/model.safetensors +3 -0

- checkpoints/checkpoint-pt-65000/random_states_0.pkl +3 -0

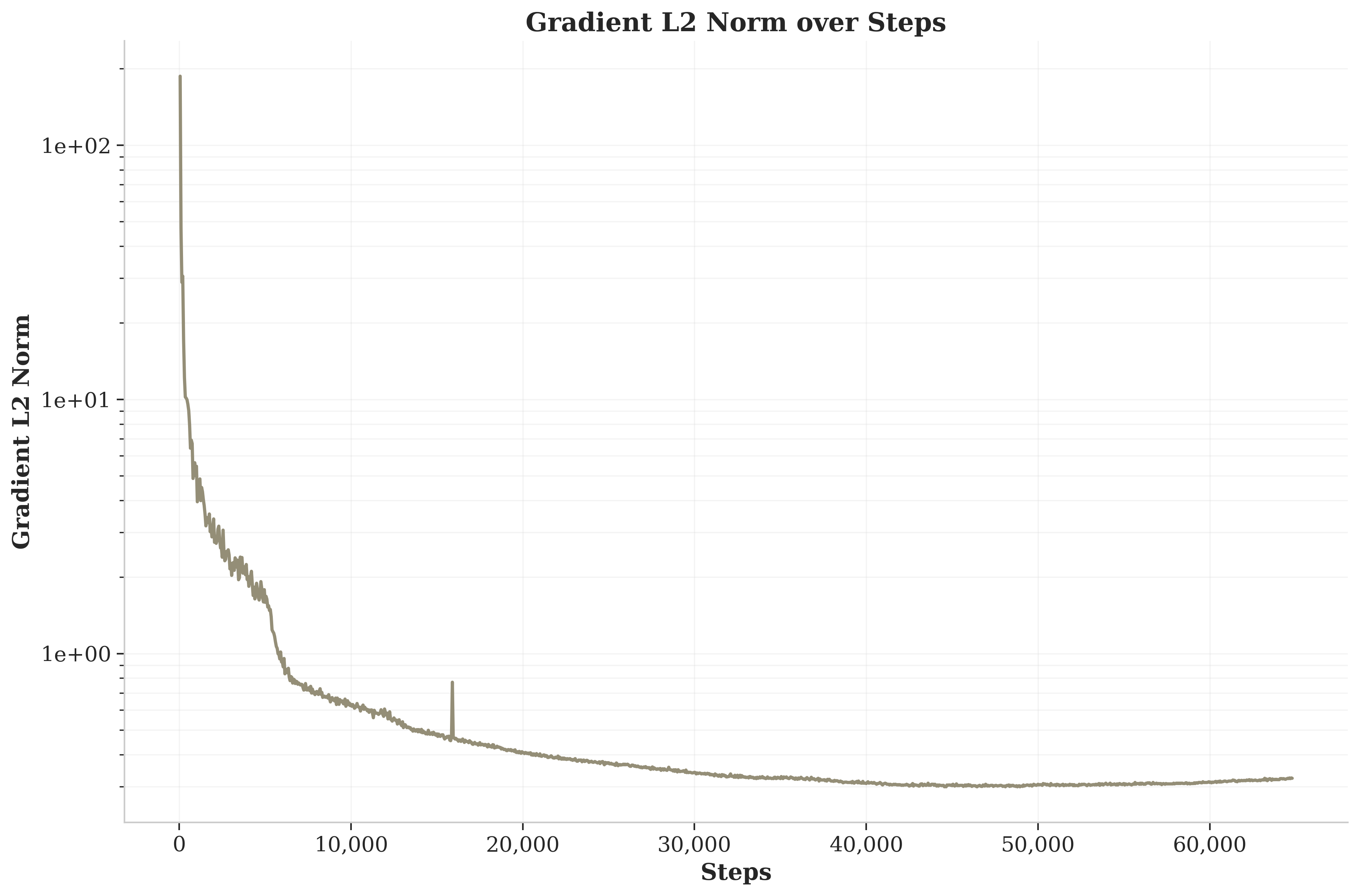

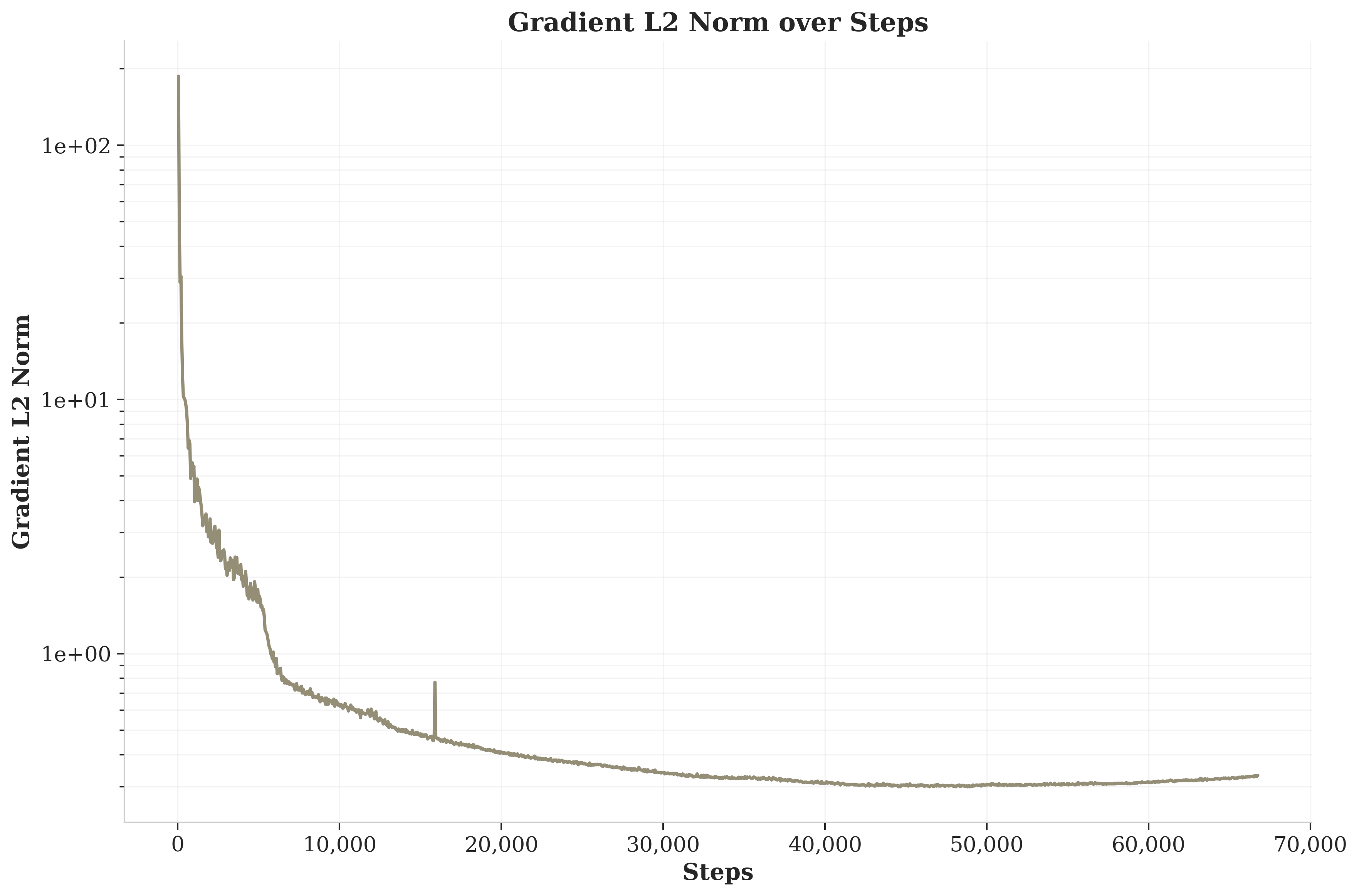

- checkpoints/grad_l2_over_steps.png +0 -0

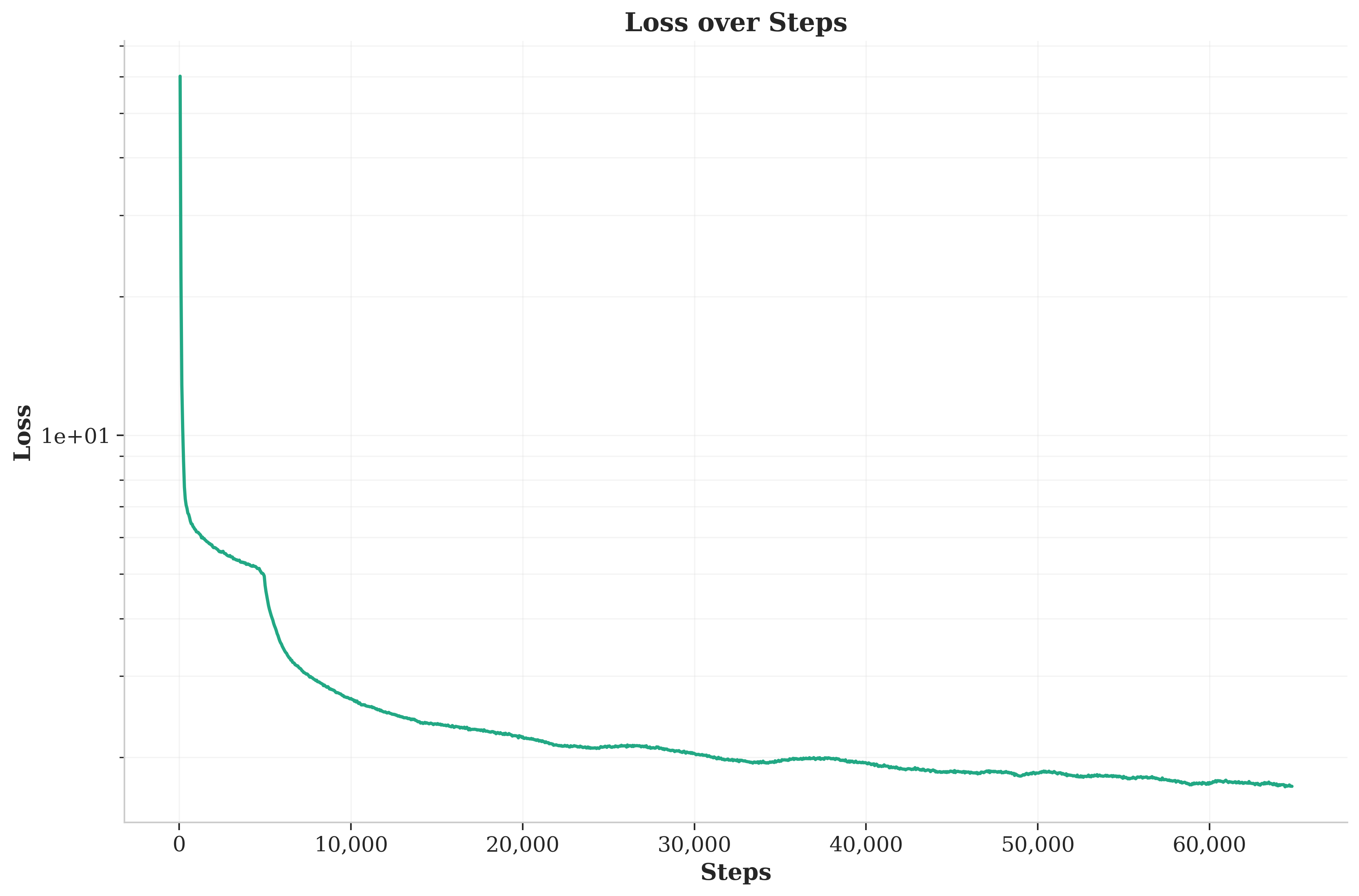

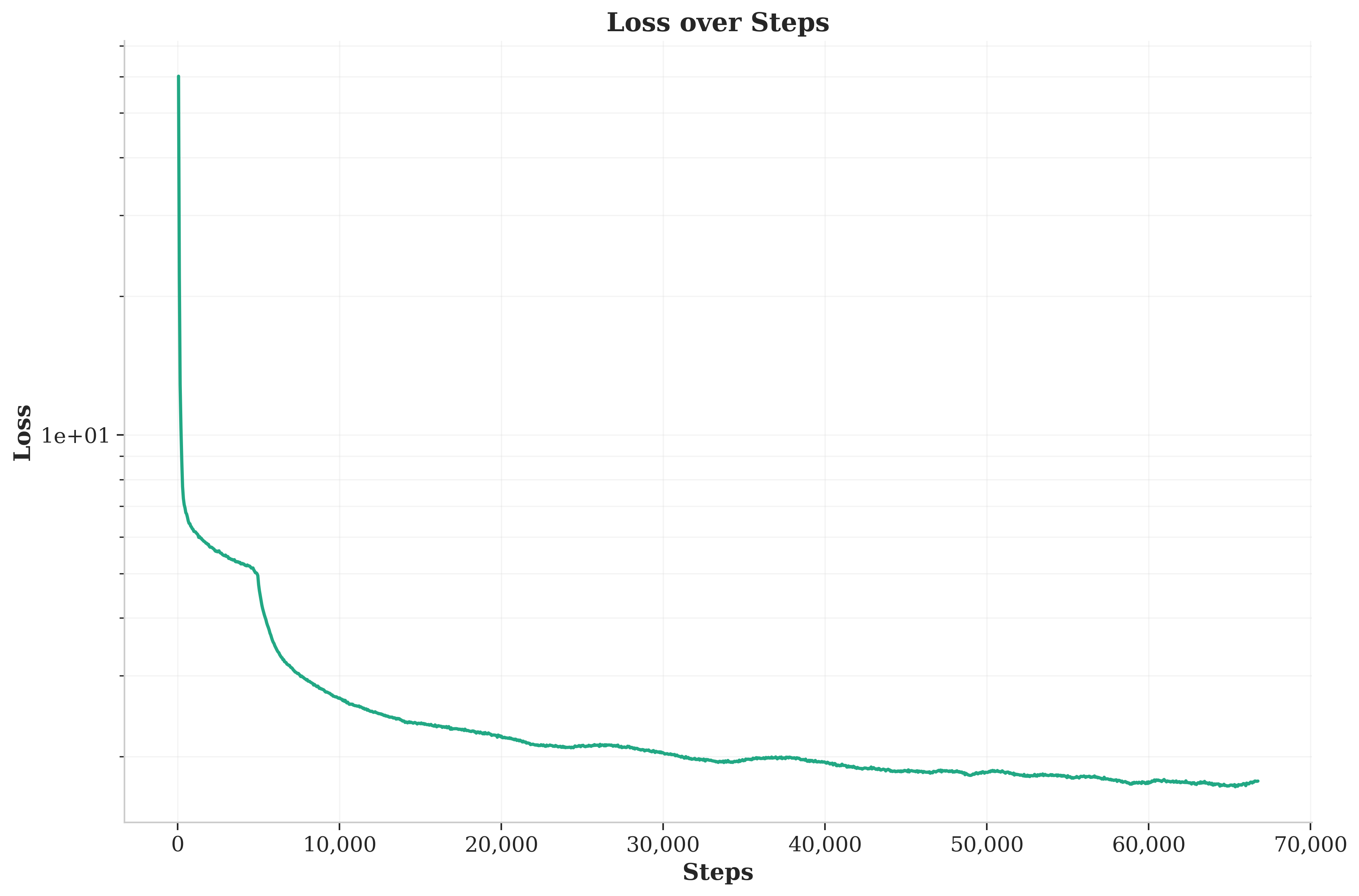

- checkpoints/loss_over_steps.png +0 -0

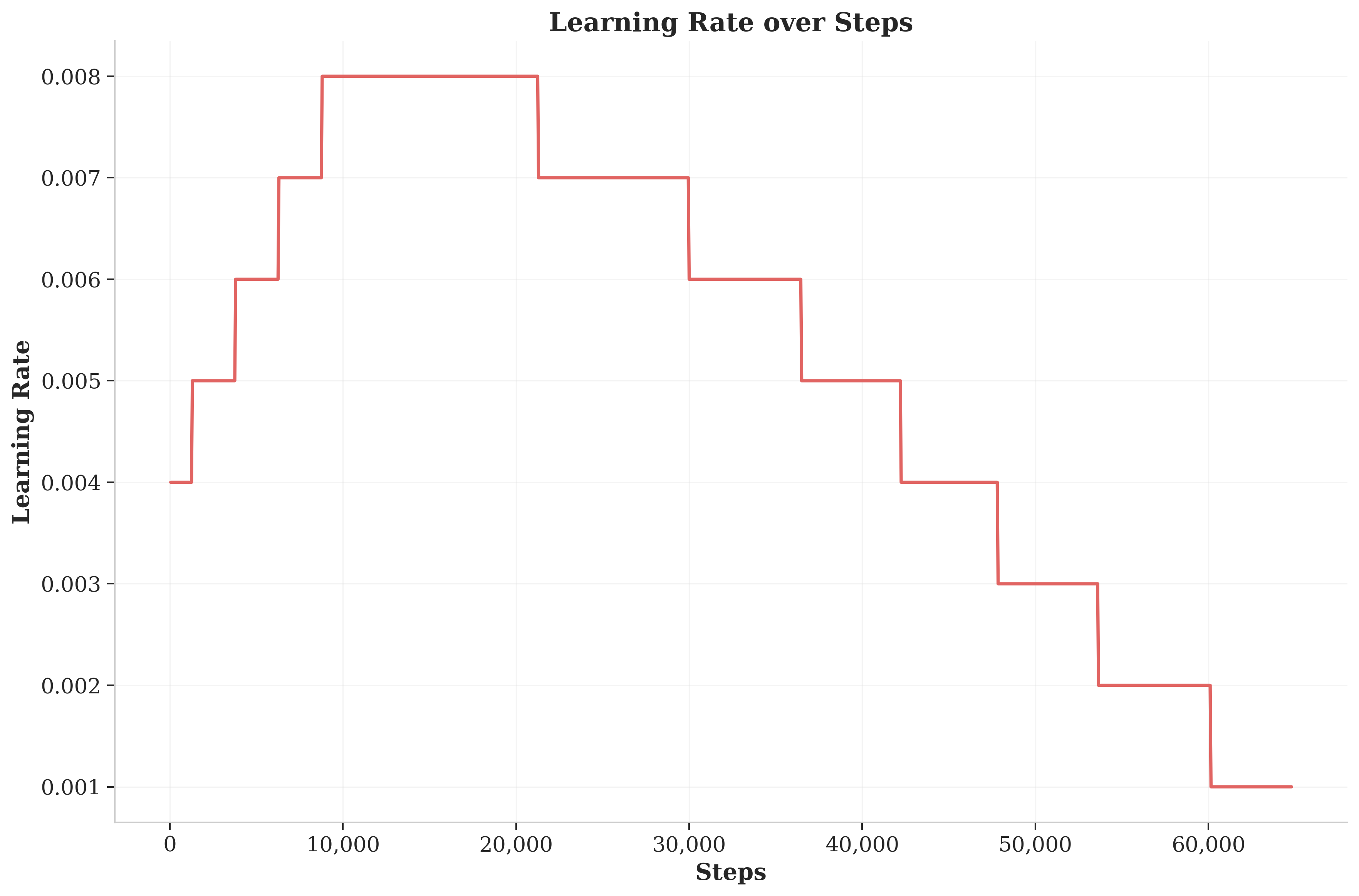

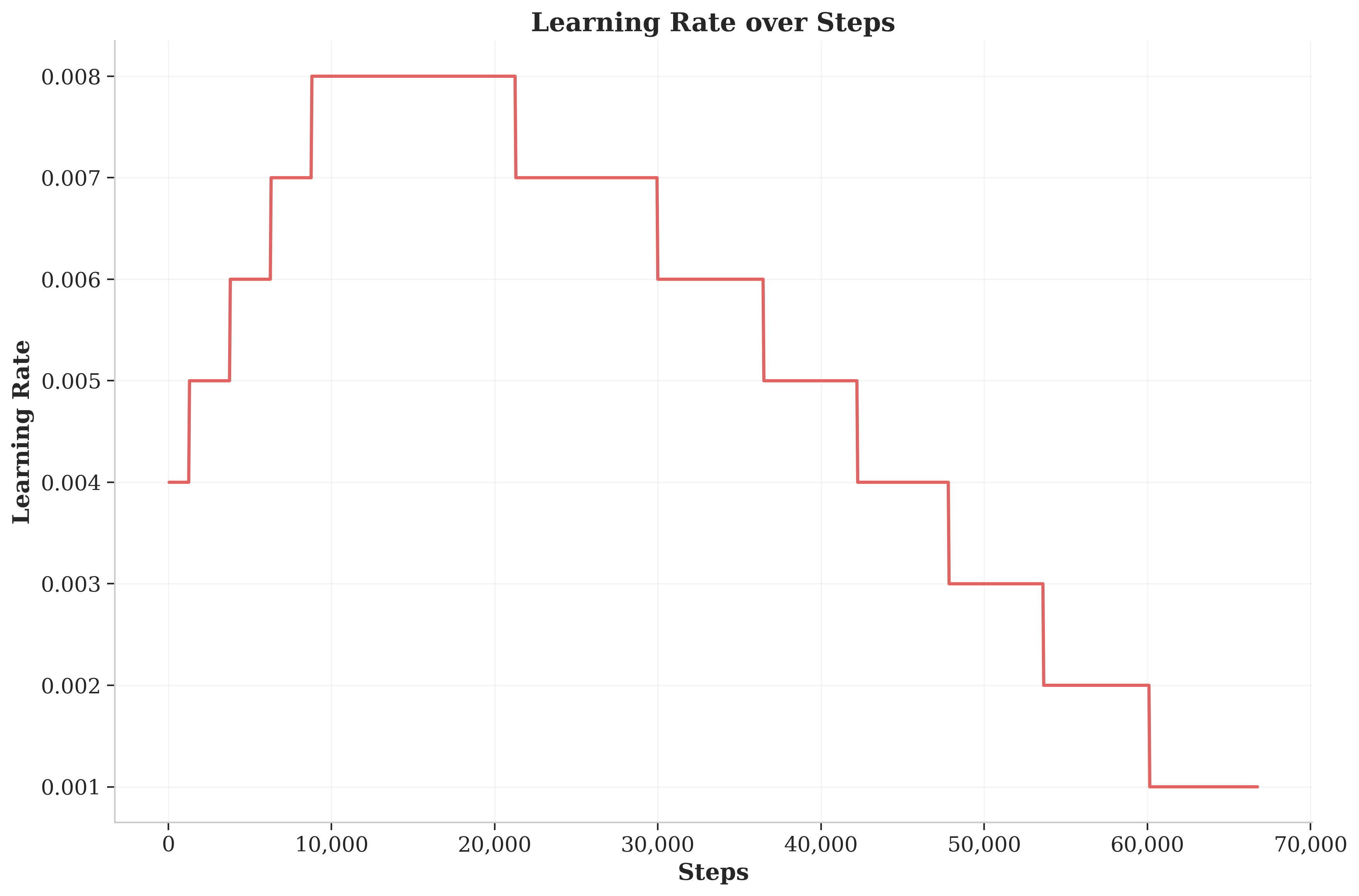

- checkpoints/lr_over_steps.png +0 -0

- checkpoints/main.log +45 -0

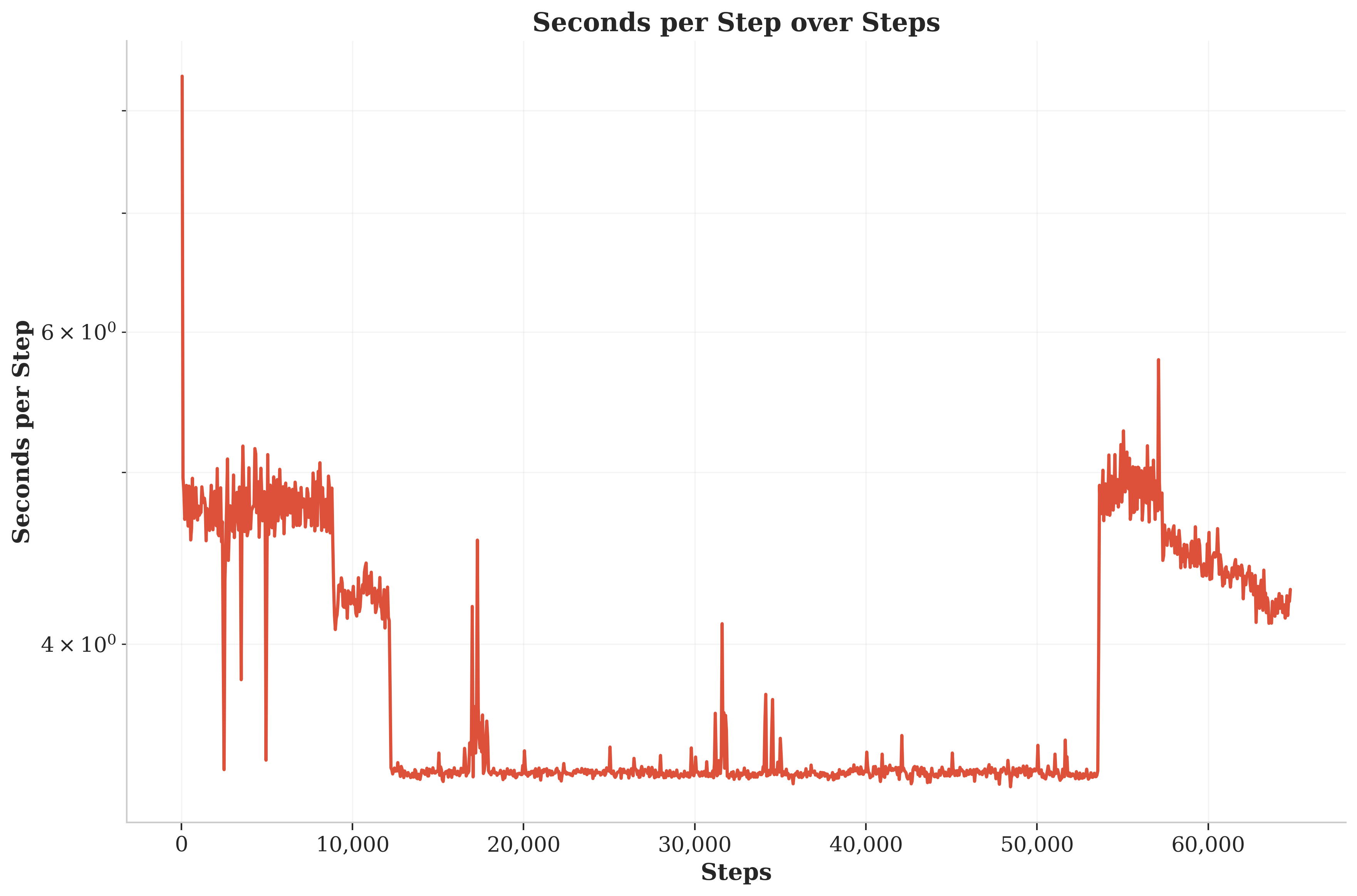

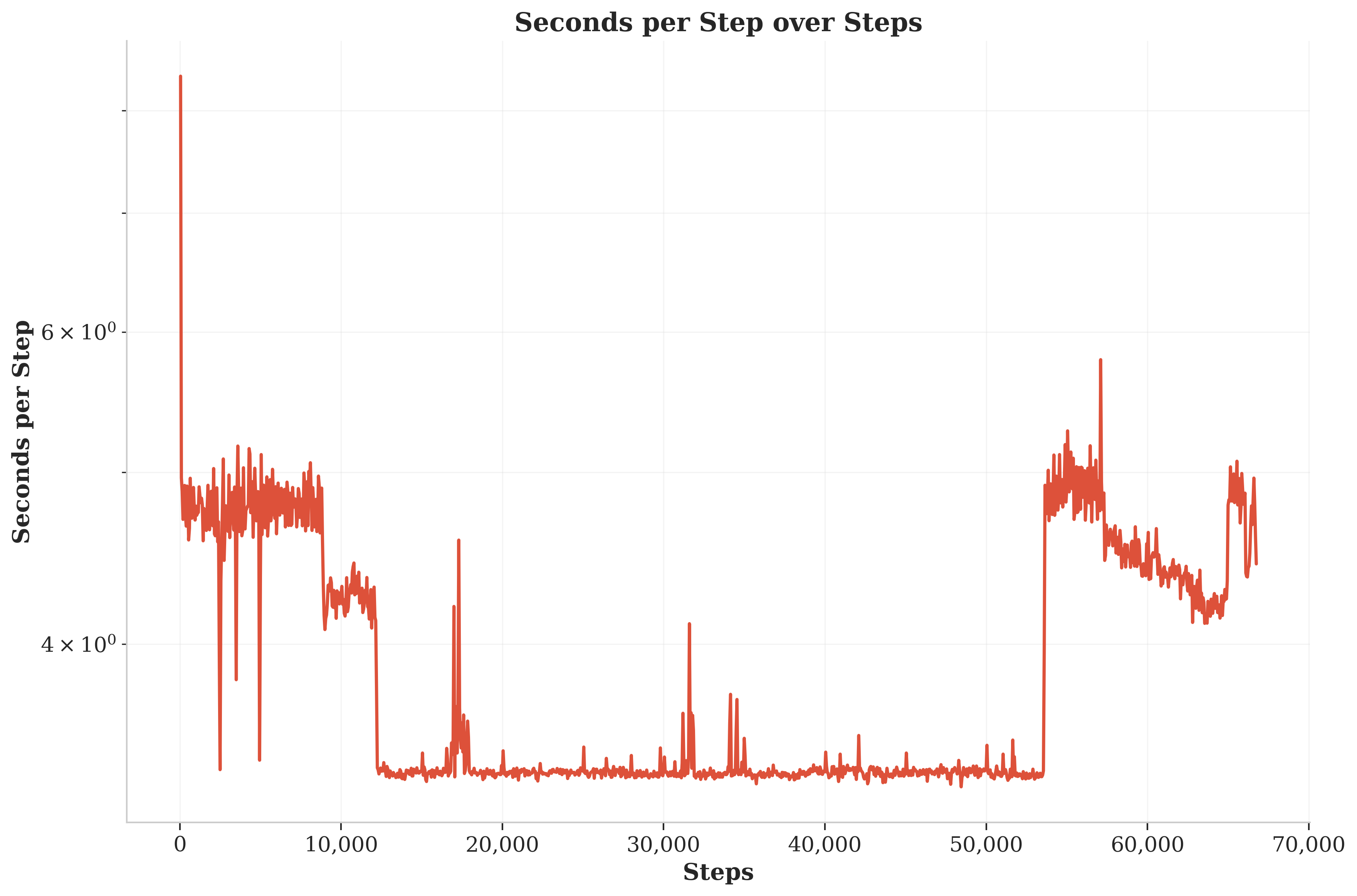

- checkpoints/seconds_per_step_over_steps.png +0 -0

- checkpoints/training_metrics.csv +39 -0

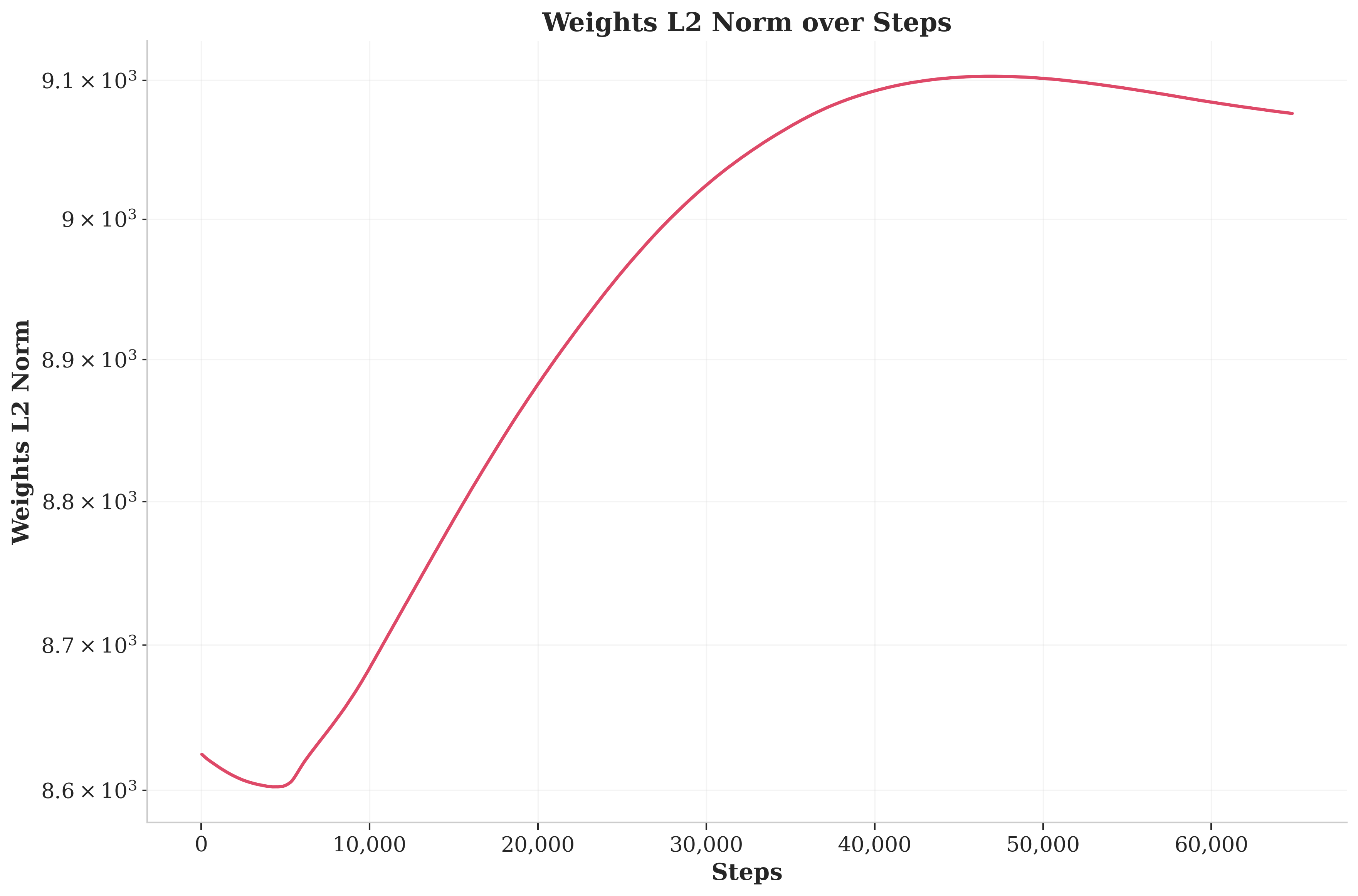

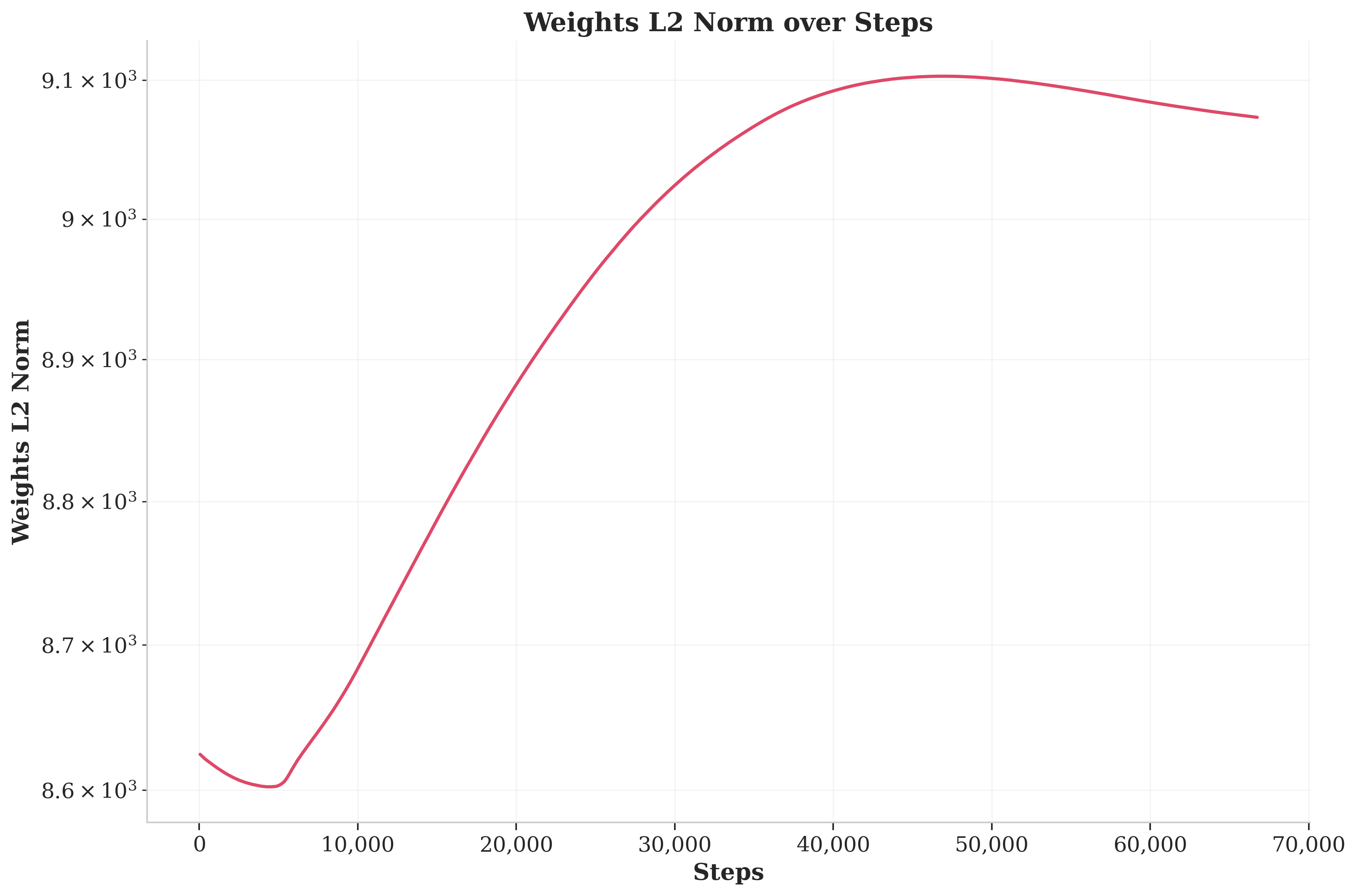

- checkpoints/weights_l2_over_steps.png +0 -0

checkpoints/checkpoint-pt-65000/model.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:37366996f247c2e989dd31e23d73c03f4a809b2938ec50ff35dea641d43cb028
+size 1202681712

checkpoints/checkpoint-pt-65000/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:634ae87ad9ec14553a807f970f4e595e3fef7b62fd4afaddf671a76426ff94ed
+size 14344
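The two added files above are Git LFS pointers, not the binaries themselves: each records a spec version, a SHA-256 object ID, and the size in bytes. As a minimal sketch, the snippet below parses such a pointer and checks a byte stream against it. The helper names `parse_lfs_pointer` and `stream_matches_pointer` are hypothetical and not part of `huggingface_hub` or Git LFS.

```python
import hashlib


def parse_lfs_pointer(text):
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid field has the form '<hash algorithm>:<hex digest>'.
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


def stream_matches_pointer(stream, info, chunk_size=1 << 20):
    """Hash a binary stream in chunks and compare size and digest to the pointer."""
    hasher = hashlib.sha256()
    size = 0
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        hasher.update(chunk)
        size += len(chunk)
    return size == info["size"] and hasher.hexdigest() == info["digest"]


# Pointer content copied from the model.safetensors diff above.
pointer_text = (
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:37366996f247c2e989dd31e23d73c03f4a809b2938ec50ff35dea641d43cb028\n"
    "size 1202681712\n"
)
info = parse_lfs_pointer(pointer_text)
print(info["hash_algo"], info["size"])
```

To check a downloaded checkpoint, one could open it with `open(path, "rb")` and pass the file object to `stream_matches_pointer`; the path is whatever location the file was downloaded to.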

checkpoints/grad_l2_over_steps.png

CHANGED

checkpoints/loss_over_steps.png

CHANGED

checkpoints/lr_over_steps.png

CHANGED

checkpoints/main.log

CHANGED

@@ -1408,3 +1408,48 @@ Mixed precision type: bf16
 [2024-08-12 05:30:25,765][Main][INFO] - [train] Step 64850 out of 80000 | Loss --> 1.726 | Grad_l2 --> 0.323 | Weights_l2 --> 9075.868 | Lr --> 0.001 | Seconds_per_step --> 4.239 |
 [2024-08-12 05:33:57,856][Main][INFO] - [train] Step 64900 out of 80000 | Loss --> 1.729 | Grad_l2 --> 0.322 | Weights_l2 --> 9075.794 | Lr --> 0.001 | Seconds_per_step --> 4.242 |
 [2024-08-12 05:37:34,949][Main][INFO] - [train] Step 64950 out of 80000 | Loss --> 1.727 | Grad_l2 --> 0.324 | Weights_l2 --> 9075.723 | Lr --> 0.001 | Seconds_per_step --> 4.342 |
+[2024-08-12 05:41:34,479][Main][INFO] - [train] Step 65000 out of 80000 | Loss --> 1.731 | Grad_l2 --> 0.321 | Weights_l2 --> 9075.650 | Lr --> 0.001 | Seconds_per_step --> 4.791 |
+[2024-08-12 05:41:34,479][accelerate.accelerator][INFO] - Saving current state to checkpoint-pt-65000
+[2024-08-12 05:41:34,483][accelerate.utils.other][WARNING] - Removed shared tensor {'encoder.embed_tokens.weight', 'decoder.embed_tokens.weight'} while saving. This should be OK, but check by verifying that you don't receive any warning while reloading
+[2024-08-12 05:41:37,445][accelerate.checkpointing][INFO] - Model weights saved in checkpoint-pt-65000/model.safetensors
+[2024-08-12 05:41:45,138][accelerate.checkpointing][INFO] - Optimizer state saved in checkpoint-pt-65000/optimizer.bin
+[2024-08-12 05:41:45,138][accelerate.checkpointing][INFO] - Scheduler state saved in checkpoint-pt-65000/scheduler.bin
+[2024-08-12 05:41:45,138][accelerate.checkpointing][INFO] - Sampler state for dataloader 0 saved in checkpoint-pt-65000/sampler.bin
+[2024-08-12 05:41:45,138][accelerate.checkpointing][INFO] - Sampler state for dataloader 1 saved in checkpoint-pt-65000/sampler_1.bin
+[2024-08-12 05:41:45,139][accelerate.checkpointing][INFO] - Random states saved in checkpoint-pt-65000/random_states_0.pkl
+[2024-08-12 05:45:35,690][Main][INFO] - [train] Step 65050 out of 80000 | Loss --> 1.735 | Grad_l2 --> 0.322 | Weights_l2 --> 9075.576 | Lr --> 0.001 | Seconds_per_step --> 4.824 |
+[2024-08-12 05:49:36,445][Main][INFO] - [train] Step 65100 out of 80000 | Loss --> 1.735 | Grad_l2 --> 0.323 | Weights_l2 --> 9075.504 | Lr --> 0.001 | Seconds_per_step --> 4.815 |
+[2024-08-12 05:53:48,172][Main][INFO] - [train] Step 65150 out of 80000 | Loss --> 1.735 | Grad_l2 --> 0.325 | Weights_l2 --> 9075.434 | Lr --> 0.001 | Seconds_per_step --> 5.035 |
+[2024-08-12 05:57:50,665][Main][INFO] - [train] Step 65200 out of 80000 | Loss --> 1.730 | Grad_l2 --> 0.325 | Weights_l2 --> 9075.358 | Lr --> 0.001 | Seconds_per_step --> 4.850 |
+[2024-08-12 06:01:51,474][Main][INFO] - [train] Step 65250 out of 80000 | Loss --> 1.730 | Grad_l2 --> 0.324 | Weights_l2 --> 9075.282 | Lr --> 0.001 | Seconds_per_step --> 4.816 |
+[2024-08-12 06:05:53,194][Main][INFO] - [train] Step 65300 out of 80000 | Loss --> 1.739 | Grad_l2 --> 0.324 | Weights_l2 --> 9075.209 | Lr --> 0.001 | Seconds_per_step --> 4.834 |
+[2024-08-12 06:10:02,625][Main][INFO] - [train] Step 65350 out of 80000 | Loss --> 1.720 | Grad_l2 --> 0.322 | Weights_l2 --> 9075.137 | Lr --> 0.001 | Seconds_per_step --> 4.989 |
+[2024-08-12 06:14:02,960][Main][INFO] - [train] Step 65400 out of 80000 | Loss --> 1.733 | Grad_l2 --> 0.324 | Weights_l2 --> 9075.064 | Lr --> 0.001 | Seconds_per_step --> 4.807 |
+[2024-08-12 06:18:05,636][Main][INFO] - [train] Step 65450 out of 80000 | Loss --> 1.728 | Grad_l2 --> 0.324 | Weights_l2 --> 9074.992 | Lr --> 0.001 | Seconds_per_step --> 4.854 |
+[2024-08-12 06:22:11,143][Main][INFO] - [train] Step 65500 out of 80000 | Loss --> 1.725 | Grad_l2 --> 0.323 | Weights_l2 --> 9074.925 | Lr --> 0.001 | Seconds_per_step --> 4.910 |
+[2024-08-12 06:26:24,738][Main][INFO] - [train] Step 65550 out of 80000 | Loss --> 1.739 | Grad_l2 --> 0.326 | Weights_l2 --> 9074.848 | Lr --> 0.001 | Seconds_per_step --> 5.072 |
+[2024-08-12 06:30:24,331][Main][INFO] - [train] Step 65600 out of 80000 | Loss --> 1.735 | Grad_l2 --> 0.326 | Weights_l2 --> 9074.775 | Lr --> 0.001 | Seconds_per_step --> 4.792 |
+[2024-08-12 06:34:30,155][Main][INFO] - [train] Step 65650 out of 80000 | Loss --> 1.736 | Grad_l2 --> 0.326 | Weights_l2 --> 9074.702 | Lr --> 0.001 | Seconds_per_step --> 4.916 |
+[2024-08-12 06:38:38,111][Main][INFO] - [train] Step 65700 out of 80000 | Loss --> 1.738 | Grad_l2 --> 0.325 | Weights_l2 --> 9074.630 | Lr --> 0.001 | Seconds_per_step --> 4.959 |
+[2024-08-12 06:42:32,160][Main][INFO] - [train] Step 65750 out of 80000 | Loss --> 1.735 | Grad_l2 --> 0.327 | Weights_l2 --> 9074.556 | Lr --> 0.001 | Seconds_per_step --> 4.681 |
+[2024-08-12 06:46:31,254][Main][INFO] - [train] Step 65800 out of 80000 | Loss --> 1.742 | Grad_l2 --> 0.324 | Weights_l2 --> 9074.488 | Lr --> 0.001 | Seconds_per_step --> 4.782 |
+[2024-08-12 06:50:40,876][Main][INFO] - [train] Step 65850 out of 80000 | Loss --> 1.748 | Grad_l2 --> 0.327 | Weights_l2 --> 9074.414 | Lr --> 0.001 | Seconds_per_step --> 4.992 |
+[2024-08-12 06:54:45,474][Main][INFO] - [train] Step 65900 out of 80000 | Loss --> 1.747 | Grad_l2 --> 0.326 | Weights_l2 --> 9074.342 | Lr --> 0.001 | Seconds_per_step --> 4.892 |
+[2024-08-12 06:58:45,288][Main][INFO] - [train] Step 65950 out of 80000 | Loss --> 1.740 | Grad_l2 --> 0.326 | Weights_l2 --> 9074.268 | Lr --> 0.001 | Seconds_per_step --> 4.796 |
+[2024-08-12 07:02:45,790][Main][INFO] - [train] Step 66000 out of 80000 | Loss --> 1.730 | Grad_l2 --> 0.326 | Weights_l2 --> 9074.203 | Lr --> 0.001 | Seconds_per_step --> 4.810 |
+[2024-08-12 07:06:49,098][Main][INFO] - [train] Step 66050 out of 80000 | Loss --> 1.752 | Grad_l2 --> 0.328 | Weights_l2 --> 9074.128 | Lr --> 0.001 | Seconds_per_step --> 4.866 |
+[2024-08-12 07:10:28,457][Main][INFO] - [train] Step 66100 out of 80000 | Loss --> 1.750 | Grad_l2 --> 0.327 | Weights_l2 --> 9074.061 | Lr --> 0.001 | Seconds_per_step --> 4.387 |
+[2024-08-12 07:14:06,799][Main][INFO] - [train] Step 66150 out of 80000 | Loss --> 1.745 | Grad_l2 --> 0.327 | Weights_l2 --> 9073.990 | Lr --> 0.001 | Seconds_per_step --> 4.367 |
+[2024-08-12 07:17:45,000][Main][INFO] - [train] Step 66200 out of 80000 | Loss --> 1.746 | Grad_l2 --> 0.329 | Weights_l2 --> 9073.915 | Lr --> 0.001 | Seconds_per_step --> 4.364 |
+[2024-08-12 07:21:25,805][Main][INFO] - [train] Step 66250 out of 80000 | Loss --> 1.754 | Grad_l2 --> 0.327 | Weights_l2 --> 9073.847 | Lr --> 0.001 | Seconds_per_step --> 4.416 |
+[2024-08-12 07:25:07,094][Main][INFO] - [train] Step 66300 out of 80000 | Loss --> 1.761 | Grad_l2 --> 0.328 | Weights_l2 --> 9073.777 | Lr --> 0.001 | Seconds_per_step --> 4.426 |
+[2024-08-12 07:28:52,170][Main][INFO] - [train] Step 66350 out of 80000 | Loss --> 1.760 | Grad_l2 --> 0.329 | Weights_l2 --> 9073.706 | Lr --> 0.001 | Seconds_per_step --> 4.502 |
+[2024-08-12 07:32:44,959][Main][INFO] - [train] Step 66400 out of 80000 | Loss --> 1.753 | Grad_l2 --> 0.329 | Weights_l2 --> 9073.633 | Lr --> 0.001 | Seconds_per_step --> 4.656 |
+[2024-08-12 07:36:44,237][Main][INFO] - [train] Step 66450 out of 80000 | Loss --> 1.762 | Grad_l2 --> 0.328 | Weights_l2 --> 9073.563 | Lr --> 0.001 | Seconds_per_step --> 4.786 |
+[2024-08-12 07:40:37,789][Main][INFO] - [train] Step 66500 out of 80000 | Loss --> 1.761 | Grad_l2 --> 0.327 | Weights_l2 --> 9073.497 | Lr --> 0.001 | Seconds_per_step --> 4.671 |
+[2024-08-12 07:44:38,576][Main][INFO] - [train] Step 66550 out of 80000 | Loss --> 1.770 | Grad_l2 --> 0.331 | Weights_l2 --> 9073.425 | Lr --> 0.001 | Seconds_per_step --> 4.816 |
+[2024-08-12 07:48:46,663][Main][INFO] - [train] Step 66600 out of 80000 | Loss --> 1.770 | Grad_l2 --> 0.328 | Weights_l2 --> 9073.357 | Lr --> 0.001 | Seconds_per_step --> 4.962 |
+[2024-08-12 07:52:46,860][Main][INFO] - [train] Step 66650 out of 80000 | Loss --> 1.768 | Grad_l2 --> 0.330 | Weights_l2 --> 9073.285 | Lr --> 0.001 | Seconds_per_step --> 4.804 |
+[2024-08-12 07:56:36,923][Main][INFO] - [train] Step 66700 out of 80000 | Loss --> 1.768 | Grad_l2 --> 0.329 | Weights_l2 --> 9073.215 | Lr --> 0.001 | Seconds_per_step --> 4.601 |
+[2024-08-12 08:00:18,881][Main][INFO] - [train] Step 66750 out of 80000 | Loss --> 1.770 | Grad_l2 --> 0.331 | Weights_l2 --> 9073.141 | Lr --> 0.001 | Seconds_per_step --> 4.439 |
+[2024-08-12 08:04:03,533][Main][INFO] - [train] Step 66800 out of 80000 | Loss --> 1.769 | Grad_l2 --> 0.330 | Weights_l2 --> 9073.071 | Lr --> 0.001 | Seconds_per_step --> 4.493 |
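The `[train]` lines in this hunk follow a regular `Name --> value` layout, so they can be turned into records with a small parser. The sketch below is one possible approach, not the training script's own code. The names `parse_train_line`, `LINE_RE`, and `METRIC_RE` are hypothetical. It also cross-checks one logged `Seconds_per_step` value against the timestamps of two consecutive entries, on the assumption that entries are 50 steps apart as they are in this log.

```python
import re
from datetime import datetime

# Matches the prefix of a [train] line and captures the metrics tail.
LINE_RE = re.compile(
    r"\[(?P<ts>[^\]]+)\]\[Main\]\[INFO\] - \[train\] "
    r"Step (?P<step>\d+) out of (?P<total>\d+) \| (?P<metrics>.*)"
)
# Matches each 'Name --> value' pair in the metrics tail.
METRIC_RE = re.compile(r"(\w+) --> ([-+0-9.eE]+)")


def parse_train_line(line):
    """Return a dict for a [train] log line, or None for any other line."""
    match = LINE_RE.search(line)
    if match is None:
        return None
    record = {
        "timestamp": datetime.strptime(match["ts"], "%Y-%m-%d %H:%M:%S,%f"),
        "step": int(match["step"]),
        "total_steps": int(match["total"]),
    }
    for name, value in METRIC_RE.findall(match["metrics"]):
        record[name.lower()] = float(value)
    return record


# Two consecutive entries copied from the log above.
prev_line = (
    "[2024-08-12 05:41:34,479][Main][INFO] - [train] Step 65000 out of 80000 | "
    "Loss --> 1.731 | Grad_l2 --> 0.321 | Weights_l2 --> 9075.650 | "
    "Lr --> 0.001 | Seconds_per_step --> 4.791 |"
)
curr_line = (
    "[2024-08-12 05:45:35,690][Main][INFO] - [train] Step 65050 out of 80000 | "
    "Loss --> 1.735 | Grad_l2 --> 0.322 | Weights_l2 --> 9075.576 | "
    "Lr --> 0.001 | Seconds_per_step --> 4.824 |"
)
prev, curr = parse_train_line(prev_line), parse_train_line(curr_line)

# Wall-clock time between the two entries divided by the steps between them.
elapsed = (curr["timestamp"] - prev["timestamp"]).total_seconds()
per_step = elapsed / (curr["step"] - prev["step"])
print(round(per_step, 3), curr["seconds_per_step"])
```

For this pair, the 241.211 seconds between timestamps spread over 50 steps agrees with the logged value to three decimals. Non-training lines, such as the `accelerate` checkpoint messages, return `None` and can be filtered out.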

checkpoints/seconds_per_step_over_steps.png

CHANGED

checkpoints/training_metrics.csv

CHANGED

@@ -1295,3 +1295,42 @@ timestamp,step,loss,grad_l2,weights_l2,lr,seconds_per_step
 "2024-08-12 05:19:47,700",64700,1.731,0.322,9076.093,0.001,4.237
 "2024-08-12 05:23:19,101",64750,1.732,0.324,9076.017,0.001,4.228
 "2024-08-12 05:26:53,820",64800,1.73,0.323,9075.943,0.001,4.294
+"2024-08-12 05:30:25,765",64850,1.726,0.323,9075.868,0.001,4.239
+"2024-08-12 05:33:57,856",64900,1.729,0.322,9075.794,0.001,4.242
+"2024-08-12 05:37:34,949",64950,1.727,0.324,9075.723,0.001,4.342
+"2024-08-12 05:41:34,479",65000,1.731,0.321,9075.65,0.001,4.791
+"2024-08-12 05:45:35,690",65050,1.735,0.322,9075.576,0.001,4.824
+"2024-08-12 05:49:36,445",65100,1.735,0.323,9075.504,0.001,4.815
+"2024-08-12 05:53:48,172",65150,1.735,0.325,9075.434,0.001,5.035
+"2024-08-12 05:57:50,665",65200,1.73,0.325,9075.358,0.001,4.85
+"2024-08-12 06:01:51,474",65250,1.73,0.324,9075.282,0.001,4.816
+"2024-08-12 06:05:53,194",65300,1.739,0.324,9075.209,0.001,4.834
+"2024-08-12 06:10:02,625",65350,1.72,0.322,9075.137,0.001,4.989
+"2024-08-12 06:14:02,960",65400,1.733,0.324,9075.064,0.001,4.807
+"2024-08-12 06:18:05,636",65450,1.728,0.324,9074.992,0.001,4.854
+"2024-08-12 06:22:11,143",65500,1.725,0.323,9074.925,0.001,4.91
+"2024-08-12 06:26:24,738",65550,1.739,0.326,9074.848,0.001,5.072
+"2024-08-12 06:30:24,331",65600,1.735,0.326,9074.775,0.001,4.792
+"2024-08-12 06:34:30,155",65650,1.736,0.326,9074.702,0.001,4.916
+"2024-08-12 06:38:38,111",65700,1.738,0.325,9074.63,0.001,4.959
+"2024-08-12 06:42:32,160",65750,1.735,0.327,9074.556,0.001,4.681
+"2024-08-12 06:46:31,254",65800,1.742,0.324,9074.488,0.001,4.782
+"2024-08-12 06:50:40,876",65850,1.748,0.327,9074.414,0.001,4.992
+"2024-08-12 06:54:45,474",65900,1.747,0.326,9074.342,0.001,4.892
+"2024-08-12 06:58:45,288",65950,1.74,0.326,9074.268,0.001,4.796
+"2024-08-12 07:02:45,790",66000,1.73,0.326,9074.203,0.001,4.81
+"2024-08-12 07:06:49,098",66050,1.752,0.328,9074.128,0.001,4.866
+"2024-08-12 07:10:28,457",66100,1.75,0.327,9074.061,0.001,4.387
+"2024-08-12 07:14:06,799",66150,1.745,0.327,9073.99,0.001,4.367
+"2024-08-12 07:17:45,000",66200,1.746,0.329,9073.915,0.001,4.364
+"2024-08-12 07:21:25,805",66250,1.754,0.327,9073.847,0.001,4.416
+"2024-08-12 07:25:07,094",66300,1.761,0.328,9073.777,0.001,4.426
+"2024-08-12 07:28:52,170",66350,1.76,0.329,9073.706,0.001,4.502
+"2024-08-12 07:32:44,959",66400,1.753,0.329,9073.633,0.001,4.656
+"2024-08-12 07:36:44,237",66450,1.762,0.328,9073.563,0.001,4.786
+"2024-08-12 07:40:37,789",66500,1.761,0.327,9073.497,0.001,4.671
+"2024-08-12 07:44:38,576",66550,1.77,0.331,9073.425,0.001,4.816
+"2024-08-12 07:48:46,663",66600,1.77,0.328,9073.357,0.001,4.962
+"2024-08-12 07:52:46,860",66650,1.768,0.33,9073.285,0.001,4.804
+"2024-08-12 07:56:36,923",66700,1.768,0.329,9073.215,0.001,4.601
+"2024-08-12 08:00:18,881",66750,1.77,0.331,9073.141,0.001,4.439
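The CSV rows quote the timestamp because it contains a comma (the millisecond separator), so a naive `split(",")` would misalign the columns. The sketch below reads a few rows with Python's standard `csv` module, which handles the quoting, and computes two simple summaries. The column names come from the hunk header above; `load_metrics` and `SAMPLE` are hypothetical names for illustration.

```python
import csv
import io

NUMERIC_COLUMNS = ("loss", "grad_l2", "weights_l2", "lr", "seconds_per_step")

# Header from the hunk line above plus three rows copied from the diff.
SAMPLE = (
    "timestamp,step,loss,grad_l2,weights_l2,lr,seconds_per_step\n"
    '"2024-08-12 05:41:34,479",65000,1.731,0.321,9075.65,0.001,4.791\n'
    '"2024-08-12 05:45:35,690",65050,1.735,0.322,9075.576,0.001,4.824\n'
    '"2024-08-12 05:49:36,445",65100,1.735,0.323,9075.504,0.001,4.815\n'
)


def load_metrics(fileobj):
    """Read metric rows, converting the step to int and metric columns to float."""
    rows = []
    for row in csv.DictReader(fileobj):
        record = {"timestamp": row["timestamp"], "step": int(row["step"])}
        for name in NUMERIC_COLUMNS:
            record[name] = float(row[name])
        rows.append(record)
    return rows


# For a real file, open it with newline="" as the csv documentation recommends.
rows = load_metrics(io.StringIO(SAMPLE))
mean_loss = sum(r["loss"] for r in rows) / len(rows)
weights_drop = rows[0]["weights_l2"] - rows[-1]["weights_l2"]
print(len(rows), round(mean_loss, 4), round(weights_drop, 3))
```

The same function should work on the full `training_metrics.csv`, assuming every row follows the header shown in the hunk.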

checkpoints/weights_l2_over_steps.png

CHANGED
