Model summary

OpenR1-Distill-7B is a post-trained version of Qwen/Qwen2.5-Math-7B on Mixture-of-Thoughts, a curated dataset of 350k verified reasoning traces distilled from DeepSeek-R1. The dataset spans tasks in mathematics, coding, and science, and is designed to teach language models to reason step by step.

OpenR1-Distill-7B replicates the reasoning capabilities of deepseek-ai/DeepSeek-R1-Distill-Qwen-7B while remaining fully open and reproducible. It is ideal for research on inference-time compute and reinforcement learning with verifiable rewards (RLVR).

Model description

- Model type: A 7B-parameter GPT-like model, post-trained on a mix of publicly available, synthetic datasets.

- Language(s) (NLP): Primarily English

- License: Apache 2.0

- Finetuned from model: a variant of Qwen/Qwen2.5-Math-7B whose RoPE base frequency was extended from 10k to 300k to enable training with a 32k-token context.
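The effect of the RoPE change can be illustrated numerically. The sketch below uses the standard RoPE inverse frequencies base^(-2i/d) to compute how many tokens each frequency pair needs to complete one full rotation, and counts the pairs whose wavelength exceeds a 32k-token context. The head dimension of 128 is an assumption for illustration, and the link between slowly rotating pairs and long-context behaviour is a common intuition, not something measured in this model card.

```python
import math


def rope_wavelengths(base: float, head_dim: int = 128) -> list[float]:
    """Wavelength (in tokens) of each rotary frequency pair.

    Standard RoPE uses inverse frequencies base**(-2i/d) for i in [0, d/2),
    so pair i completes one full rotation every 2*pi*base**(2i/d) tokens.
    head_dim=128 is an illustrative assumption.
    """
    return [2 * math.pi * base ** (2 * i / head_dim) for i in range(head_dim // 2)]


def slow_pairs(base: float, context_len: int = 32_768, head_dim: int = 128) -> int:
    """Count frequency pairs that rotate less than once over the context."""
    return sum(w > context_len for w in rope_wavelengths(base, head_dim))


for base in (10_000, 100_000, 300_000, 500_000):
    longest = max(rope_wavelengths(base))
    print(f"base={base:>7,}: longest wavelength ~{longest:,.0f} tokens, "
          f"{slow_pairs(base)} of 64 pairs longer than 32k tokens")
```

Raising the base stretches every wavelength, so more pairs complete less than one rotation within 32k tokens; this is only a rough intuition for why a larger base is paired with a longer training context.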

Model Sources

- Repository: https://github.com/huggingface/open-r1

- Training logs: https://wandb.ai/huggingface/open-r1/runs/199cum6l

- Evaluation logs: https://huggingface.co/datasets/open-r1/details-open-r1_OpenR1-Distill-7B

Usage

To chat with the model, first install 🤗 Transformers:

```shell
pip install "transformers>=4.52.0"
```

Then run the chat CLI as follows:

```shell
transformers chat open-r1/OpenR1-Distill-7B \
  max_new_tokens=2048 \
  do_sample=True \
  temperature=0.6 \
  top_p=0.95
```

Alternatively, run the model using the pipeline() function:

```python
import torch
from transformers import pipeline

# Load the model in bfloat16 and let Accelerate place it on the available device(s).
pipe = pipeline(
    "text-generation",
    model="open-r1/OpenR1-Distill-7B",
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

messages = [
    {"role": "user", "content": "Which number is larger, 9.9 or 9.11?"},
]

# Same sampling settings as used for evaluation; return only the newly generated reply.
outputs = pipe(messages, max_new_tokens=2048, do_sample=True, temperature=0.6, top_p=0.95, return_full_text=False)
print(outputs[0]["generated_text"])
```

Performance

We use Lighteval to evaluate models on the following benchmarks:

| Model | AIME 2024 | MATH-500 | GPQA Diamond | LiveCodeBench v5 |
|---|---|---|---|---|
| OpenR1-Distill-7B | 52.7 | 89.0 | 52.8 | 39.4 |
| DeepSeek-R1-Distill-Qwen-7B | 51.3 | 93.5 | 52.4 | 37.4 |

All scores denote pass@1 accuracy, estimated by sampling with temperature=0.6 and top_p=0.95. The DeepSeek-R1 tech report estimates pass@1 from 4 to 64 sampled responses per query, but does not state how many were used for each benchmark. For the table above, we used the following number of responses per query:

| Benchmark | Number of responses per query |
|---|---|
| AIME 2024 | 64 |
| MATH-500 | 4 |
| GPQA Diamond | 8 |
| LiveCodeBench | 16 |
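Estimating pass@1 from several samples amounts to averaging, per problem, the fraction of sampled responses that are correct, and then averaging over problems. A minimal sketch, assuming each response has already been graded as correct or incorrect (the grading itself is handled by the evaluation harness and is not shown; the grades below are hypothetical):

```python
from statistics import mean


def estimate_pass_at_1(graded: list[list[bool]]) -> float:
    """Estimate pass@1 from k graded samples per problem.

    For each problem, the fraction of correct samples estimates the
    probability that a single sample is correct; pass@1 is the mean of
    these fractions over all problems.
    """
    return mean(sum(samples) / len(samples) for samples in graded)


# Hypothetical grades: 3 problems, 4 sampled responses each (as for MATH-500).
graded = [
    [True, True, True, False],
    [False, False, True, False],
    [True, True, True, True],
]
print(f"pass@1 = {estimate_pass_at_1(graded):.3f}")
```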

Note that for benchmarks like AIME 2024, it is important to sample many responses per problem: with only 30 problems, scores vary considerably across repeated runs. The number of responses sampled per prompt likely explains the small differences between our evaluation results and those reported by DeepSeek. See the open-r1 repo for instructions on how to reproduce these results.
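The variance argument can be made concrete with a back-of-the-envelope calculation. If problem j has a true per-sample success probability p_j and n responses are sampled per problem, the averaged pass@1 estimate over N problems has standard error sqrt(Σ p_j(1 − p_j) / n) / N. The sketch below assumes, purely for illustration, that each of AIME 2024's 30 problems has p_j = 0.5 (the worst case for variance), and it models only sampling noise, not the choice of problems.

```python
import math


def pass_at_1_std_error(probs: list[float], n_samples: int) -> float:
    """Standard error of the averaged pass@1 estimate.

    Treats the problem set as fixed and each sample of problem j as an
    independent Bernoulli(p_j) draw, so only sampling noise is modelled.
    """
    variance_sum = sum(p * (1 - p) / n_samples for p in probs)
    return math.sqrt(variance_sum) / len(probs)


# Hypothetical: 30 problems (the size of AIME 2024), each solved half the time.
probs = [0.5] * 30
for n in (1, 4, 16, 64):
    se = pass_at_1_std_error(probs, n)
    print(f"n={n:>2}: standard error ~ {100 * se:.1f} accuracy points")
```

Under these assumptions, a single sample per problem gives a standard error of roughly 9 accuracy points, while 64 samples bring it down to about 1 point.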

Training methodology

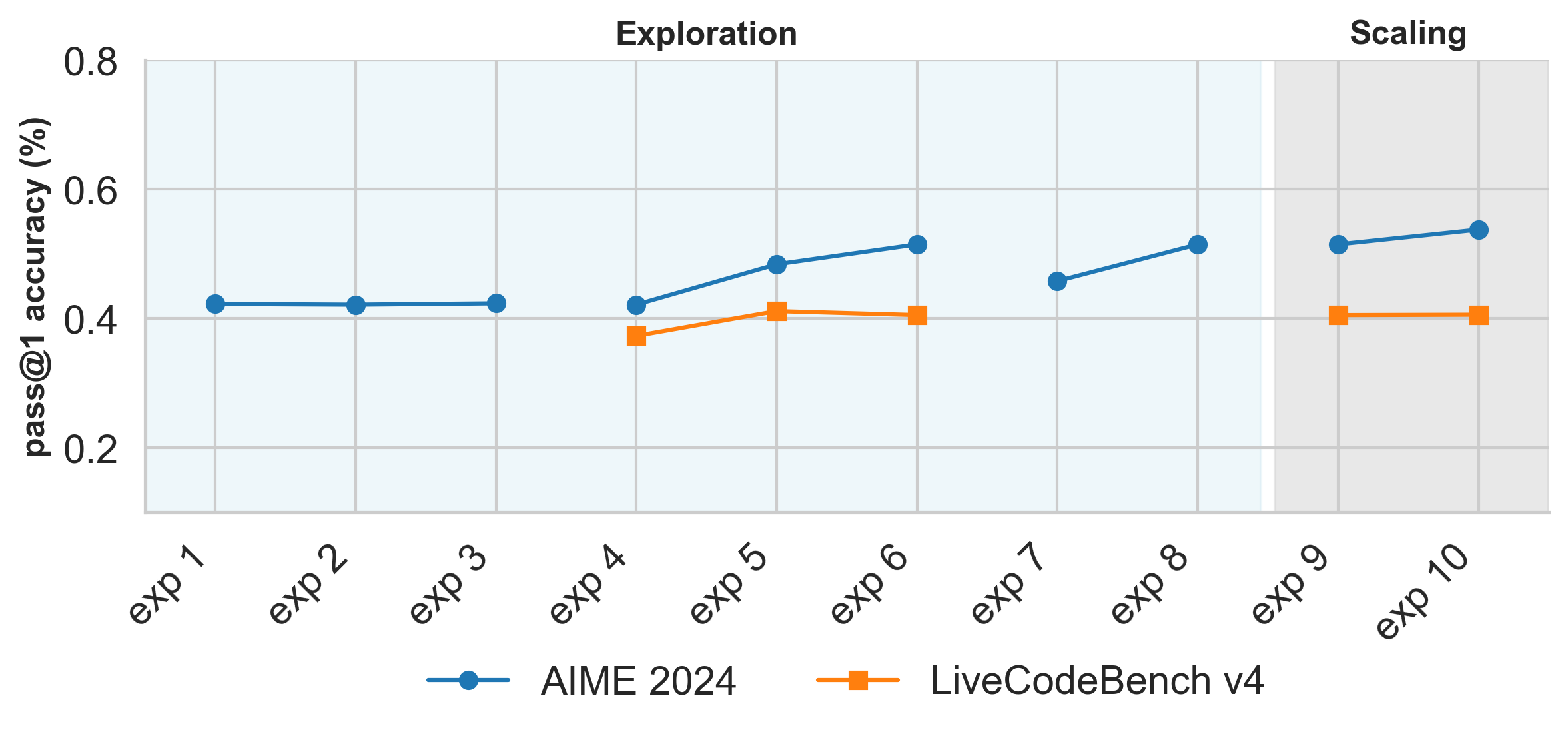

OpenR1-Distill-7B was trained using supervised fine-tuning (SFT) on the Mixture-of-Thoughts dataset, which contains 350k reasoning traces distilled from DeepSeek-R1. To optimise the data mixture, we followed the same methodology described in the Phi-4-reasoning tech report, namely that mixtures can be optimised independently per domain, and then combined into a single dataset. The figure below shows the evolution of our experiments on the math and code domains:

The individual experiments correspond to the following:

- exp1 - exp3: extending the model's base RoPE frequency from 10k to 100k, 300k, and 500k, respectively. We found no significant difference between these values and used 300k in all subsequent experiments.

- exp4 - exp6: independently scaling the learning rate on the math and code mixtures to 1e-5, 2e-5, and 4e-5, respectively.

- exp7 - exp8: measuring the impact of sequence packing (exp7) versus no packing (exp8) on the math mixture.

- exp9 - exp10: measuring the impact of training on all three mixtures (math, code, and science) versus training on math and code only.
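exp7 and exp8 compare training with and without sequence packing. Packing concatenates several tokenized examples into fixed-length sequences so that fewer positions are wasted on padding. The sketch below shows one simple greedy variant (examples kept whole and in order, a new sequence started when the next one does not fit); it is an illustration under that assumption, not necessarily the packing strategy used in these experiments, and the token IDs are hypothetical.

```python
def pack_sequences(examples: list[list[int]], max_len: int) -> list[list[int]]:
    """Greedily pack tokenized examples into sequences of at most max_len tokens.

    Examples are kept whole and in order; a new packed sequence is started
    when the next example would not fit. Examples longer than max_len are
    truncated to max_len.
    """
    packed: list[list[int]] = []
    current: list[int] = []
    for tokens in examples:
        tokens = tokens[:max_len]
        if current and len(current) + len(tokens) > max_len:
            packed.append(current)
            current = []
        current.extend(tokens)
    if current:
        packed.append(current)
    return packed


def padding_fraction(sequences: list[list[int]], max_len: int) -> float:
    """Fraction of positions that are padding when each sequence is padded to max_len."""
    return 1 - sum(len(s) for s in sequences) / (max_len * len(sequences))


# Hypothetical tokenized examples of varying length.
examples = [[1] * 300, [2] * 500, [3] * 150, [4] * 900, [5] * 100]
max_len = 1024
packed = pack_sequences(examples, max_len)
print(f"unpacked: {len(examples)} sequences, {padding_fraction(examples, max_len):.0%} padding")
print(f"packed:   {len(packed)} sequences, {padding_fraction(packed, max_len):.0%} padding")
```

In practice, packed training may also need position IDs or attention masks that prevent tokens from attending across example boundaries; that detail is omitted here.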

We use LiveCodeBench v4 to accelerate evaluation during our ablations as it contains around half the problems of v5, yet is still representative of the full benchmark.

Training hyperparameters

The following hyperparameters were used during training:

- num_epochs: 5.0

- learning_rate: 4.0e-05

- num_devices: 8

- train_batch_size: 2

- gradient_accumulation_steps: 8

- total_train_batch_size: 2 * 8 * 8 = 128

- seed: 42

- distributed_type: DeepSpeed ZeRO-3

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine_with_min_lr with min_lr_rate=0.1

- lr_scheduler_warmup_ratio: 0.03

- max_grad_norm: 0.2
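The scheduler settings above combine a linear warmup over the first 3% of steps with a cosine decay that bottoms out at min_lr_rate × peak learning rate (here 0.1 × 4.0e-05 = 4.0e-06). The sketch below reproduces that shape in plain Python. It is intended to mirror the shape of Transformers' `cosine_with_min_lr` scheduler but is not that implementation, and `total_steps` is a hypothetical value because the number of optimizer steps is not listed.

```python
import math


def lr_at_step(
    step: int,
    total_steps: int,
    peak_lr: float = 4.0e-05,
    warmup_ratio: float = 0.03,
    min_lr_rate: float = 0.1,
) -> float:
    """Linear warmup, then cosine decay from peak_lr to min_lr_rate * peak_lr."""
    warmup_steps = math.ceil(warmup_ratio * total_steps)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    cosine = 0.5 * (1 + math.cos(math.pi * progress))  # goes from 1 down to 0
    return peak_lr * (min_lr_rate + (1 - min_lr_rate) * cosine)


# Effective batch size from the hyperparameters above: 2 * 8 * 8 = 128.
per_device_batch, grad_accum, num_devices = 2, 8, 8
print("total_train_batch_size =", per_device_batch * grad_accum * num_devices)

total_steps = 1_000  # hypothetical; the real step count is not reported
for step in (0, 15, 30, 500, 1_000):
    print(f"step {step:>5}: lr = {lr_at_step(step, total_steps):.2e}")
```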

Training results

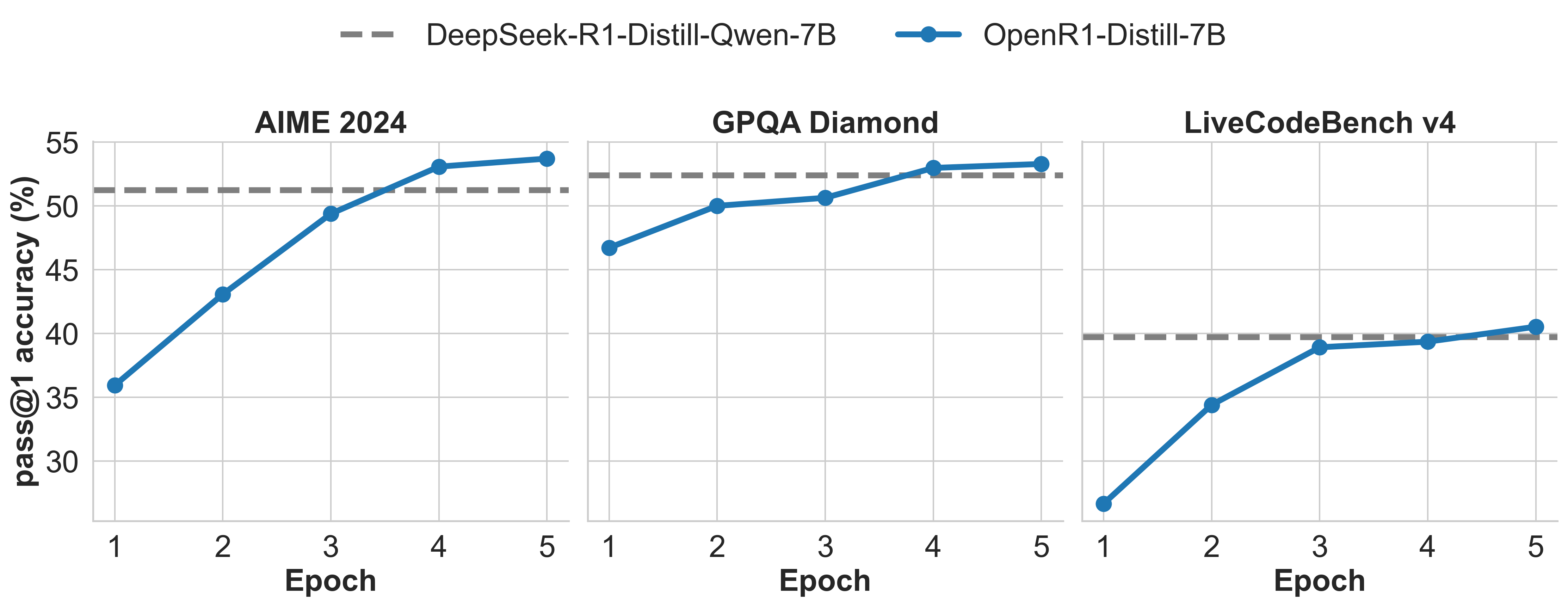

During training, we monitor progress on AIME 2024, GPQA Diamond, and LiveCodeBench v4 every epoch. The following plot shows the training results:

Framework versions

- Platform: Linux-5.15.0-1049-aws-x86_64-with-glibc2.31

- Python version: 3.11.11

- TRL version: 0.18.0.dev0

- PyTorch version: 2.6.0

- Transformers version: 4.52.0.dev0

- Accelerate version: 1.4.0

- Datasets version: 3.5.1

- HF Hub version: 0.30.2

- bitsandbytes version: 0.45.5

- DeepSpeed version: 0.16.8

- Liger-Kernel version: 0.5.9

- OpenAI version: 1.76.2

- vLLM version: 0.8.4

Citation

If you find this model useful in your own work, please consider citing it as follows:

```bibtex
@misc{openr1,
    title = {Open R1: A fully open reproduction of DeepSeek-R1},
    url = {https://github.com/huggingface/open-r1},
    author = {Hugging Face},
    month = {January},
    year = {2025}
}
```

