🧠 Article Relevance Classifier (Prototype)

This project aims to classify news articles as relevant (i.e., discussing a new event) or non-relevant. Articles classified as relevant are then passed to an LLM pipeline. The priority is to minimize the false positive rate, since non-relevant articles that slip through would pollute the LLM input.

🧾 Available Features

For each article, we collect a set of features from both the metadata and the raw content of the web page:

- Metadata Title: The `<title>` tag of the page, often used by browsers and search engines.

- Metadata Description: The `<meta name="description">` field, typically summarizing the article content.

- Content: The main textual content of the article, extracted using trafilatura.

- Date: The publication date of the article (when available).

- CSS Title: A title found in the visible content, usually marked with large or header-style HTML tags (e.g., `<h1>`).

- og:type: The Open Graph `og:type` property, which often indicates the type of content (e.g., `article`, `video`, `website`).

- Text-to-HTML Ratio: The ratio between the length of the extracted text and the total HTML size, indicating how content-focused the page is.

- Paragraph Count: The number of `<p>` tags, giving a rough idea of how much structured text the page contains.

- Link Count: The total number of hyperlinks (`<a>` tags) on the page.

- Weekday: The day of the week the article was published, which can help identify publishing patterns.

- Average Link Count of the Website: The average number of hyperlinks per page across the entire website domain. This helps differentiate content-heavy domains from link-heavy or index-style sites.
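As a rough illustration of the structural features in this list, the sketch below counts `<p>` and `<a>` tags and computes a text-to-HTML ratio using only the Python standard library. The names `StructureCounter` and `structural_features` are hypothetical, and the text here is approximated by all visible text outside `<script>` and `<style>`, not by the trafilatura extraction used in the project, so the ratio will differ from the real feature.

```python
from html.parser import HTMLParser


class StructureCounter(HTMLParser):
    """Counts <p> and <a> start tags and collects visible text."""

    def __init__(self):
        super().__init__()
        self.paragraphs = 0
        self.links = 0
        self.text_parts = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self.paragraphs += 1
        elif tag == "a":
            self.links += 1
        elif tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.text_parts.append(data)


def structural_features(html: str) -> dict:
    """Return paragraph count, link count, and an approximate text-to-HTML ratio."""
    parser = StructureCounter()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace so indentation does not inflate the text length.
    text = " ".join(" ".join(parser.text_parts).split())
    return {
        "paragraph_count": parser.paragraphs,
        "link_count": parser.links,
        "text_to_html_ratio": len(text) / len(html) if html else 0.0,
    }
```

Calling `structural_features(page_html)` on a downloaded page returns a small dictionary that could be appended to the other features.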

➡️ The Link Count feature becomes more meaningful when compared to the Average Link Count of the Website. For example, a page with very few links on a generally link-heavy site may indicate that it is an article rather than a hub or landing page.
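One simple way to express this comparison is a ratio between the two values. This is only a sketch: `relative_link_count` is a hypothetical helper, and the numbers in the usage line are illustrative, not taken from the dataset.

```python
def relative_link_count(page_links: int, site_avg_links: float) -> float:
    """Ratio of a page's link count to its site's average.

    Values well below 1 mean the page has fewer links than is typical for its site.
    """
    if site_avg_links <= 0:
        return 0.0  # assumption: treat a missing or zero site average as "no signal"
    return page_links / site_avg_links


# Illustrative values: a page with 12 links on a site averaging 120 links per page.
ratio = relative_link_count(12, 120.0)
```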

⚠️ However, computing the Average Link Count of the Website requires crawling multiple pages of the same domain, which is not feasible in a real-time prediction setting (i.e., when you want to classify a single article instantly). For this reason, features like Average Link Count of the Website can only be used during offline training and are not available at inference time.

🔍 Approach

For this first prototype, I decided to use text embeddings to semantically represent each article. These embeddings are then used to train a binary classifier.

Each article is represented using three components:

- The title (from metadata, up to 512 tokens),

- The description (from metadata, up to 512 tokens),

- The main content (extracted using trafilatura, up to 512 tokens).

⚠️ Due to token limits, only the beginning of each text field is used. This may affect classification performance when relevant information appears later in the article.
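The truncated representation can be sketched as follows. Several things here are assumptions made for illustration: tokens are approximated by whitespace-separated words, `toy_embed` is a hash-based placeholder standing in for the real embedding model, and the three field embeddings are concatenated into one vector.

```python
import hashlib

MAX_TOKENS = 512  # per-field limit stated above
DIM = 8           # toy embedding size, chosen only for this sketch


def truncate(text: str, max_tokens: int = MAX_TOKENS) -> str:
    """Keep only the first max_tokens whitespace-separated tokens."""
    return " ".join(text.split()[:max_tokens])


def toy_embed(text: str, dim: int = DIM) -> list:
    """Hypothetical stand-in for an embedding model: hashes text into dim floats in [0, 1]."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [byte / 255 for byte in digest[:dim]]


def article_vector(title: str, description: str, content: str) -> list:
    """Embed each truncated field and concatenate the three vectors."""
    vector = []
    for field in (title, description, content):
        vector.extend(toy_embed(truncate(field)))
    return vector
```

Because of the truncation, two articles whose content differs only after the first 512 tokens produce the same vector, which is the limitation noted above.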

For the classifier, I chose a Support Vector Machine (SVM) model because:

- K-Nearest Neighbors (KNN) is too slow at inference time due to the high dimensionality (512 × 3 features),

- Random Forests risk overfitting when dealing with a large number of features,

- Logistic Regression is a viable alternative, but SVMs often perform better on high-dimensional data.

🧪 Second Test: Chunked Embeddings with Averaging

To address the limitations of the first test, a second experiment was conducted using chunked embeddings:

- Instead of truncating the text at 512 tokens, each field (title, description, content) is split into multiple chunks of up to 512 tokens.

- Each chunk is embedded separately.

- The final representation is the average of all the chunk embeddings.

This method allows the model to consider a broader portion of the article, potentially capturing relevant information that appears later in the text.
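The chunk-and-average step can be sketched in a few lines. This is a minimal illustration, not the project's code: tokens are approximated by whitespace-separated words, the embedding function is passed in by the caller, and `count_embed` is a toy placeholder used only to make the example runnable.

```python
def chunk_tokens(tokens, size=512):
    """Split a token list into consecutive chunks of at most `size` tokens."""
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


def mean_vector(vectors):
    """Element-wise average of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def chunked_embedding(text, embed, size=512):
    """Embed each chunk of `text` with `embed` and average the chunk embeddings."""
    chunks = chunk_tokens(text.split(), size) or [[]]  # an empty field still yields one chunk
    return mean_vector([embed(" ".join(chunk)) for chunk in chunks])


def count_embed(text):
    """Toy placeholder for a real embedding model: [number of words, 1.0]."""
    return [float(len(text.split())), 1.0]


# A 1000-word field is split into chunks of 512 and 488 words.
vector = chunked_embedding("word " * 1000, count_embed)
```

Note that a plain average gives the short final chunk the same weight as a full 512-token chunk; weighting by chunk length would be one possible variation.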

The goal of this second test is to evaluate whether this approach improves classification performance compared to the truncated version.

📊 Results (Test 2)

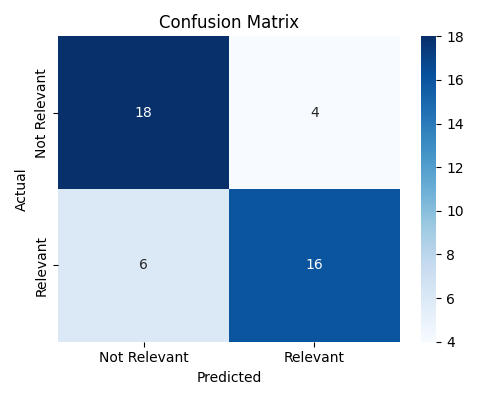

Below is the confusion matrix for the second test:

- Accuracy: (16 + 18) / (16 + 6 + 4 + 18) = 77.3%

- Precision (Relevant): 18 / (18 + 6) = 75.0%

- Recall (Relevant): 18 / (18 + 4) = 81.8%
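The same figures can be reproduced from the four confusion-matrix counts implied by the formulas above (16 true negatives, 6 false positives, 4 false negatives, 18 true positives). The snippet also computes the false positive rate, which is the metric this project cares about most; it is derived from the same counts and is not reported separately in the original results.

```python
# Counts read from the formulas above.
TN, FP, FN, TP = 16, 6, 4, 18

accuracy = (TP + TN) / (TP + TN + FP + FN)
precision = TP / (TP + FP)
recall = TP / (TP + FN)
false_positive_rate = FP / (FP + TN)  # share of non-relevant articles predicted relevant

print(f"accuracy            = {accuracy:.1%}")             # 77.3%
print(f"precision           = {precision:.1%}")            # 75.0%
print(f"recall              = {recall:.1%}")               # 81.8%
print(f"false positive rate = {false_positive_rate:.1%}")  # 27.3%
```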

Compared to Test 1, this version shows a slight improvement in both accuracy and precision. This suggests that including more of the article content via chunked embeddings helps reduce false positives and better capture relevant information, although with only 44 test samples the difference should be read with caution.

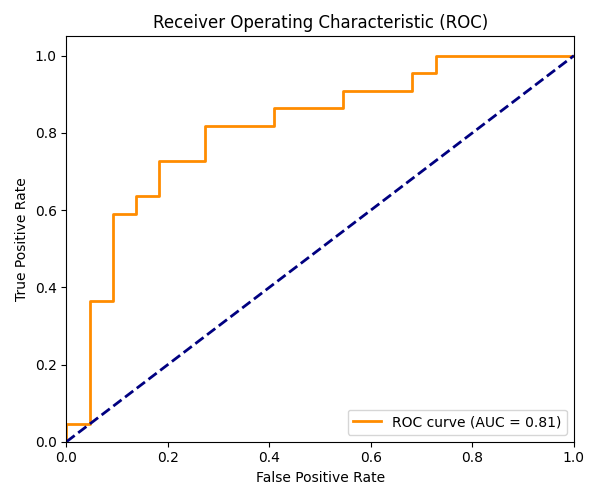

📈 ROC Curve

For this test, I also generated the ROC curve:

The curve appears to have a stepped shape, which is expected due to the limited number of test samples. As a result, it’s difficult to draw strong conclusions from the ROC curve alone.

However, we may tentatively observe that raising the decision threshold (so that fewer articles are classified as relevant) could help reduce false positives, at the cost of some recall. This is a promising direction to explore in future experiments with more data.
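A minimal sketch of how a threshold could be chosen against a false positive budget is shown below. It assumes the usual convention that an article is predicted relevant when its score is at or above the threshold. The function names are hypothetical and the scores are synthetic values made up for the example, not outputs of the actual model.

```python
def false_positive_rate(scores, labels, threshold):
    """FPR when articles with score >= threshold are predicted relevant (label 1)."""
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not negatives:
        return 0.0
    return sum(s >= threshold for s in negatives) / len(negatives)


def recall(scores, labels, threshold):
    """Share of truly relevant articles that are still predicted relevant."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    if not positives:
        return 0.0
    return sum(s >= threshold for s in positives) / len(positives)


def pick_threshold(scores, labels, max_fpr):
    """Smallest observed score usable as a threshold with FPR <= max_fpr."""
    for candidate in sorted(set(scores)):
        if false_positive_rate(scores, labels, candidate) <= max_fpr:
            return candidate
    return float("inf")  # no observed score meets the budget: predict nothing as relevant


# Synthetic scores and labels, for illustration only.
scores = [0.1, 0.3, 0.4, 0.6, 0.7, 0.9]
labels = [0, 0, 1, 0, 1, 1]

strict = pick_threshold(scores, labels, max_fpr=0.0)
print(strict, recall(scores, labels, strict))
```

Picking the smallest threshold that meets the budget keeps recall as high as possible, because the false positive rate never increases as the threshold rises. In practice the threshold would be chosen on a validation set rather than on the test set.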

Analysis of the results

After analyzing the results, it seems that the model has difficulty distinguishing between the content types of articles, specifically whether they are news or not. However, it excels at identifying the structural layout of pages, such as determining if a page is a homepage, article, video, etc. Therefore, adding features like og:type, text-to-HTML ratio, and paragraph count may not be beneficial, as these features are primarily useful for differentiating page structure rather than content type.
