Running into an issue when trying to run this model with vLLM

#4, opened by Travisjw25

When I try to run this model with vllm/vllm-openai:v0.9.1 I get the following error:

vllm-1 | INFO 06-30 05:42:36 [__init__.py:244] Automatically detected platform cuda.

vllm-1 | INFO 06-30 05:42:39 [api_server.py:1287] vLLM API server version 0.9.1

vllm-1 | INFO 06-30 05:42:39 [cli_args.py:309] non-default args: {'model': 'moonshotai/Kimi-VL-A3B-Thinking-2506', 'max_model_len': 7600, 'gpu_memory_utilization': 0.8}

vllm-1 | INFO 06-30 05:42:48 [config.py:823] This model supports multiple tasks: {'reward', 'embed', 'generate', 'score', 'classify'}. Defaulting to 'generate'.

vllm-1 | INFO 06-30 05:42:48 [config.py:2195] Chunked prefill is enabled with max_num_batched_tokens=2048.

vllm-1 | Traceback (most recent call last):

vllm-1 | File "<frozen runpy>", line 198, in _run_module_as_main

vllm-1 | File "<frozen runpy>", line 88, in _run_code

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 1387, in <module>

vllm-1 | uvloop.run(run_server(args))

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/uvloop/__init__.py", line 109, in run

vllm-1 | return __asyncio.run(

vllm-1 | ^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/asyncio/runners.py", line 195, in run

vllm-1 | return runner.run(main)

vllm-1 | ^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/asyncio/runners.py", line 118, in run

vllm-1 | return self._loop.run_until_complete(task)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "uvloop/loop.pyx", line 1518, in uvloop.loop.Loop.run_until_complete

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/uvloop/__init__.py", line 61, in wrapper

vllm-1 | return await main

vllm-1 | ^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 1323, in run_server

vllm-1 | await run_server_worker(listen_address, sock, args, **uvicorn_kwargs)

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 1343, in run_server_worker

vllm-1 | async with build_async_engine_client(args, client_config) as engine_client:

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/contextlib.py", line 210, in __aenter__

vllm-1 | return await anext(self.gen)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 155, in build_async_engine_client

vllm-1 | async with build_async_engine_client_from_engine_args(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/contextlib.py", line 210, in __aenter__

vllm-1 | return await anext(self.gen)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 191, in build_async_engine_client_from_engine_args

vllm-1 | async_llm = AsyncLLM.from_vllm_config(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/v1/engine/async_llm.py", line 162, in from_vllm_config

vllm-1 | return cls(

vllm-1 | ^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/v1/engine/async_llm.py", line 106, in __init__

vllm-1 | self.tokenizer = init_tokenizer_from_configs(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/transformers_utils/tokenizer_group.py", line 111, in init_tokenizer_from_configs

vllm-1 | return TokenizerGroup(

vllm-1 | ^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/transformers_utils/tokenizer_group.py", line 24, in __init__

vllm-1 | self.tokenizer = get_tokenizer(self.tokenizer_id, **tokenizer_config)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/transformers_utils/tokenizer.py", line 253, in get_tokenizer

vllm-1 | raise e

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/transformers_utils/tokenizer.py", line 232, in get_tokenizer

vllm-1 | tokenizer = AutoTokenizer.from_pretrained(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/transformers/models/auto/tokenization_auto.py", line 985, in from_pretrained

vllm-1 | trust_remote_code = resolve_trust_remote_code(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/transformers/dynamic_module_utils.py", line 731, in resolve_trust_remote_code

vllm-1 | raise ValueError(

vllm-1 | ValueError: The repository `moonshotai/Kimi-VL-A3B-Thinking-2506` contains custom code which must be executed to correctly load the model. You can inspect the repository content at https://hf.co/moonshotai/Kimi-VL-A3B-Thinking-2506.

vllm-1 | Please pass the argument `trust_remote_code=True` to allow custom code to be run.

The error asks for `trust_remote_code=True`, so I enabled that flag. Now startup fails because the blobfile library is not installed:

Attaching to vllm-1

vllm-1 | INFO 06-30 05:41:59 [__init__.py:244] Automatically detected platform cuda.

vllm-1 | INFO 06-30 05:42:02 [api_server.py:1287] vLLM API server version 0.9.1

vllm-1 | INFO 06-30 05:42:03 [cli_args.py:309] non-default args: {'model': 'moonshotai/Kimi-VL-A3B-Thinking-2506', 'trust_remote_code': True, 'max_model_len': 7600, 'gpu_memory_utilization': 0.8}

vllm-1 | INFO 06-30 05:42:11 [config.py:823] This model supports multiple tasks: {'generate', 'classify', 'embed', 'score', 'reward'}. Defaulting to 'generate'.

vllm-1 | INFO 06-30 05:42:12 [config.py:2195] Chunked prefill is enabled with max_num_batched_tokens=2048.

vllm-1 | Traceback (most recent call last):

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/tiktoken/load.py", line 11, in read_file

vllm-1 | import blobfile

vllm-1 | ModuleNotFoundError: No module named 'blobfile'

vllm-1 |

vllm-1 | The above exception was the direct cause of the following exception:

vllm-1 |

vllm-1 | Traceback (most recent call last):

vllm-1 | File "<frozen runpy>", line 198, in _run_module_as_main

vllm-1 | File "<frozen runpy>", line 88, in _run_code

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 1387, in <module>

vllm-1 | uvloop.run(run_server(args))

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/uvloop/__init__.py", line 109, in run

vllm-1 | return __asyncio.run(

vllm-1 | ^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/asyncio/runners.py", line 195, in run

vllm-1 | return runner.run(main)

vllm-1 | ^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/asyncio/runners.py", line 118, in run

vllm-1 | return self._loop.run_until_complete(task)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "uvloop/loop.pyx", line 1518, in uvloop.loop.Loop.run_until_complete

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/uvloop/__init__.py", line 61, in wrapper

vllm-1 | return await main

vllm-1 | ^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 1323, in run_server

vllm-1 | await run_server_worker(listen_address, sock, args, **uvicorn_kwargs)

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 1343, in run_server_worker

vllm-1 | async with build_async_engine_client(args, client_config) as engine_client:

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/contextlib.py", line 210, in __aenter__

vllm-1 | return await anext(self.gen)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 155, in build_async_engine_client

vllm-1 | async with build_async_engine_client_from_engine_args(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/lib/python3.12/contextlib.py", line 210, in __aenter__

vllm-1 | return await anext(self.gen)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/entrypoints/openai/api_server.py", line 191, in build_async_engine_client_from_engine_args

vllm-1 | async_llm = AsyncLLM.from_vllm_config(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/v1/engine/async_llm.py", line 162, in from_vllm_config

vllm-1 | return cls(

vllm-1 | ^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/v1/engine/async_llm.py", line 106, in __init__

vllm-1 | self.tokenizer = init_tokenizer_from_configs(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/transformers_utils/tokenizer_group.py", line 111, in init_tokenizer_from_configs

vllm-1 | return TokenizerGroup(

vllm-1 | ^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/transformers_utils/tokenizer_group.py", line 24, in __init__

vllm-1 | self.tokenizer = get_tokenizer(self.tokenizer_id, **tokenizer_config)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/vllm/transformers_utils/tokenizer.py", line 232, in get_tokenizer

vllm-1 | tokenizer = AutoTokenizer.from_pretrained(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/transformers/models/auto/tokenization_auto.py", line 998, in from_pretrained

vllm-1 | return tokenizer_class.from_pretrained(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/transformers/tokenization_utils_base.py", line 2025, in from_pretrained

vllm-1 | return cls._from_pretrained(

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/transformers/tokenization_utils_base.py", line 2278, in _from_pretrained

vllm-1 | tokenizer = cls(*init_inputs, **init_kwargs)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/root/.cache/huggingface/modules/transformers_modules/moonshotai/Kimi-VL-A3B-Thinking-2506/35bd9189e1f9e101a0f326de07dd92a25d20f384/tokenization_moonshot.py", line 98, in __init__

vllm-1 | mergeable_ranks = load_tiktoken_bpe(vocab_file)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/tiktoken/load.py", line 148, in load_tiktoken_bpe

vllm-1 | contents = read_file_cached(tiktoken_bpe_file, expected_hash)

vllm-1 | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/tiktoken/load.py", line 63, in read_file_cached

vllm-1 | contents = read_file(blobpath)

vllm-1 | ^^^^^^^^^^^^^^^^^^^

vllm-1 | File "/usr/local/lib/python3.12/dist-packages/tiktoken/load.py", line 13, in read_file

vllm-1 | raise ImportError(

vllm-1 | ImportError: blobfile is not installed. Please install it by running `pip install blobfile`.

vllm-1 exited with code 1
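For anyone reproducing this, here is a rough sketch of how the non-default args printed in the second log could map onto server command-line flags. It assumes each flag is simply the dashed form of the arg name (for example `--trust-remote-code`), and `to_cli_flags` is a hypothetical helper written for illustration, not part of vLLM, so check the result against the vLLM CLI help for your version.

```python
def to_cli_flags(args: dict) -> list[str]:
    """Turn an args dict (as printed in the log) into a list of CLI flags.

    Assumption: each flag is the arg name with underscores replaced by dashes.
    """
    flags = []
    for key, value in args.items():
        flag = "--" + key.replace("_", "-")
        if value is True:
            # Boolean switches are passed without a value.
            flags.append(flag)
        elif value is False or value is None:
            # Disabled or unset options are simply omitted.
            continue
        else:
            flags.extend([flag, str(value)])
    return flags


# Values copied from the "non-default args" line of the second log.
NON_DEFAULT_ARGS = {
    "model": "moonshotai/Kimi-VL-A3B-Thinking-2506",
    "trust_remote_code": True,
    "max_model_len": 7600,
    "gpu_memory_utilization": 0.8,
}

if __name__ == "__main__":
    print(" ".join(to_cli_flags(NON_DEFAULT_ARGS)))
```

The printed flags can be appended to whatever command starts the server in your container setup.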

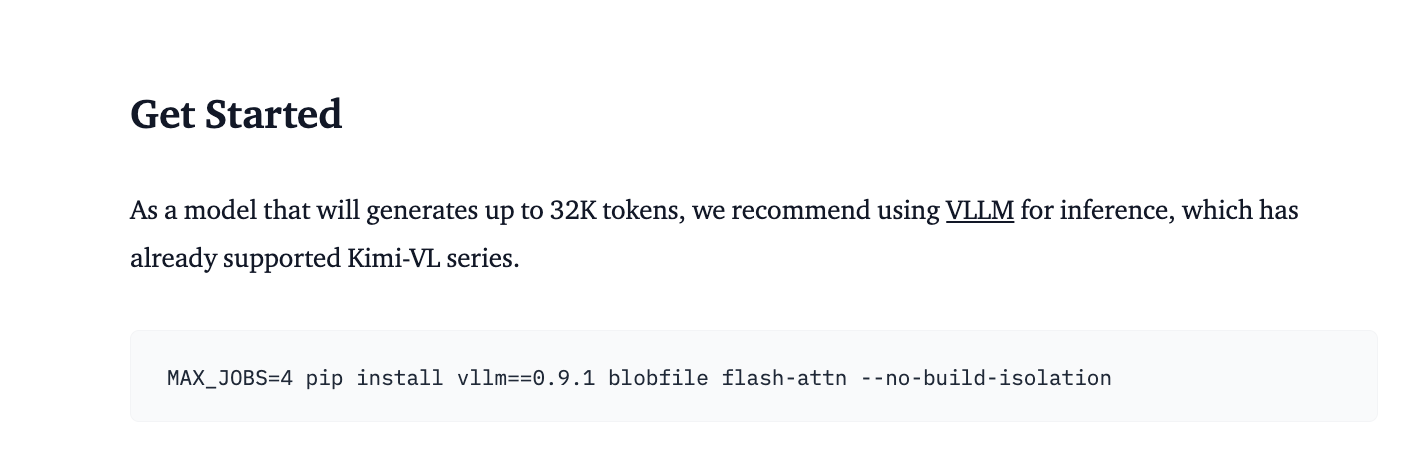

Please install blobfile: `pip install blobfile`

https://huggingface.co/blog/moonshotai/kimi-vl-a3b-thinking-2506
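To confirm the fix took effect before starting the server, a small check like the one below can be run with the same Python interpreter that vLLM uses. It is only a sketch: `missing_modules` is a hypothetical helper name, and the module list is taken from the traceback above (`tiktoken` tries to import `blobfile` when loading the vocab file).

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    # find_spec returns None when a top-level module is not installed.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Modules the tokenizer code path in the traceback depends on.
    missing = missing_modules(["tiktoken", "blobfile"])
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("tiktoken and blobfile are both importable.")
```

Since the logs come from the `vllm/vllm-openai:v0.9.1` container, the package has to be installed inside that container or image, not on the host, for the check to pass there.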