---
license: bigscience-bloom-rail-1.0
datasets:
- OpenAssistant/oasst1
- LEL-A/translated_german_alpaca_validation
- deepset/germandpr
- oscar-corpus/OSCAR-2301
language:
- de
pipeline_tag: conversational
---

Instruction-fine-tuned German language model (6B parameters; early alpha version)

Base model: malteos/bloom-6b4-clp-german (Ostendorff and Rehm, 2023)

Trained on:

- 20B additional German tokens (Wikimedia dumps and OSCAR 2023)

- OpenAssistant/oasst1 (German subset)

- LEL-A/translated_german_alpaca_validation

- LEL-A's version of deepset/germandpr
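The German subset of OpenAssistant/oasst1 listed above can be selected by its language tag. The following is a minimal sketch, not the preprocessing actually used for this model. It assumes the records use the message_id, parent_id, role ("prompter" or "assistant"), lang, and text fields of the public oasst1 release. The helper names german_subset and prompt_response_pairs are hypothetical, and pairing each assistant reply with its parent prompt is an illustrative assumption.

```python
from __future__ import annotations

from typing import Iterable


def german_subset(records: Iterable[dict]) -> list[dict]:
    """Keep only messages whose language tag is German ("de")."""
    return [r for r in records if r.get("lang") == "de"]


def prompt_response_pairs(records: list[dict]) -> list[tuple[str, str]]:
    """Pair each assistant reply with the prompter message it answers.

    Hypothetical preprocessing for illustration. oasst1 stores conversations
    as trees linked via parent_id, so one prompt may yield several pairs.
    """
    by_id = {r["message_id"]: r for r in records}
    pairs = []
    for r in records:
        # Root messages have parent_id None; by_id.get(None) returns None.
        parent = by_id.get(r.get("parent_id"))
        if r["role"] == "assistant" and parent is not None and parent["role"] == "prompter":
            pairs.append((parent["text"], r["text"]))
    return pairs


if __name__ == "__main__":
    # Toy records in the oasst1 field layout (made up for illustration).
    # For the real data (needs the `datasets` package and network access):
    #   from datasets import load_dataset
    #   records = load_dataset("OpenAssistant/oasst1", split="train")
    records = [
        {"message_id": "m1", "parent_id": None, "role": "prompter",
         "lang": "de", "text": "Was ist die Hauptstadt von Deutschland?"},
        {"message_id": "m2", "parent_id": "m1", "role": "assistant",
         "lang": "de", "text": "Die Hauptstadt von Deutschland ist Berlin."},
        {"message_id": "m3", "parent_id": None, "role": "prompter",
         "lang": "en", "text": "What is the capital of France?"},
    ]
    german = german_subset(records)
    # Only the German prompt and its German reply remain.
    print(prompt_response_pairs(german))
```

Filtering by language before pairing drops any German reply whose parent prompt is in another language. Whether that is desirable depends on the intended training setup.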

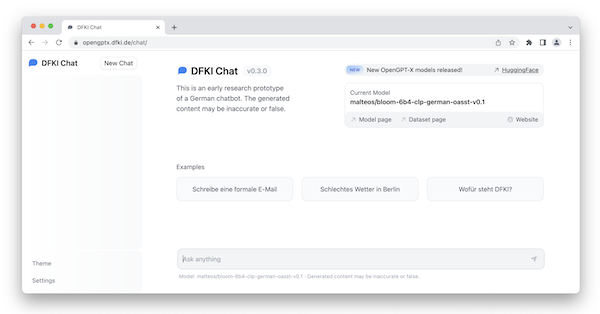

Chat demo

https://opengptx.dfki.de/chat/

Please note that this is a research prototype and may not be suitable for extensive use.

How to cite

If you are using our code or models, please cite our paper:

@misc{Ostendorff2023clp,
  doi = {10.48550/ARXIV.2301.09626},
  author = {Ostendorff, Malte and Rehm, Georg},
  title = {Efficient Language Model Training through Cross-Lingual and Progressive Transfer Learning},
  publisher = {arXiv},
  year = {2023}
}

License

This model is released under the bigscience-bloom-rail-1.0 license.

Acknowledgements

This model was trained during the Helmholtz GPU Hackathon 2023. We thank the organizers for hosting this event and for providing the computing resources.