DeepSeek Children's Stories Model

A lightweight story generation model (~15-18M parameters) designed for children's content. It uses architecture components such as Mixture of Experts (MoE) and Multi-head Latent Attention (MLA).

Model Description

The model is built on a modified DeepSeek architecture, optimized for generating age-appropriate, engaging children's stories.

Key Features

- Size: ~15-18M parameters

- Architecture: 6 layers, 8 heads, 512 embedding dimension

- Context Window: 1024 tokens

- Special Components:

  - Mixture of Experts (MoE) with 4 experts

  - Multi-head Latent Attention (MLA)

  - Multi-token prediction

  - Rotary Positional Encodings (RoPE)
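
The hyperparameters listed above can be collected into a small configuration object. The following is a minimal sketch, not the project's actual config class: the class name and field names are hypothetical, and only the numeric values come from this model card. The derived per-head width assumes a standard even split of the embedding across heads, which MLA may not follow internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoryModelConfig:
    """Values from the model card; class and field names are hypothetical."""

    n_layer: int = 6        # transformer layers
    n_head: int = 8         # attention heads
    n_embd: int = 512       # embedding dimension
    block_size: int = 1024  # context window in tokens
    n_experts: int = 4      # experts in the MoE layers

    def __post_init__(self) -> None:
        # The embedding must split evenly across the attention heads.
        if self.n_embd % self.n_head != 0:
            raise ValueError("n_embd must be divisible by n_head")

    @property
    def head_dim(self) -> int:
        # Per-head width under a standard multi-head split (assumption:
        # MLA may use different latent or projection sizes internally).
        return self.n_embd // self.n_head


config = StoryModelConfig()
print(config.head_dim)  # 512 // 8 = 64
```

An even per-head width such as 64 is also what RoPE needs, since it rotates dimensions in pairs.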

Training

- Dataset: ajibawa-2023/Children-Stories-Collection

- Training Time: ~2,884 seconds (about 48 minutes)

- Hardware: NVIDIA RTX 4090 (24GB VRAM)

- Memory Usage: ~2.24GB GPU memory

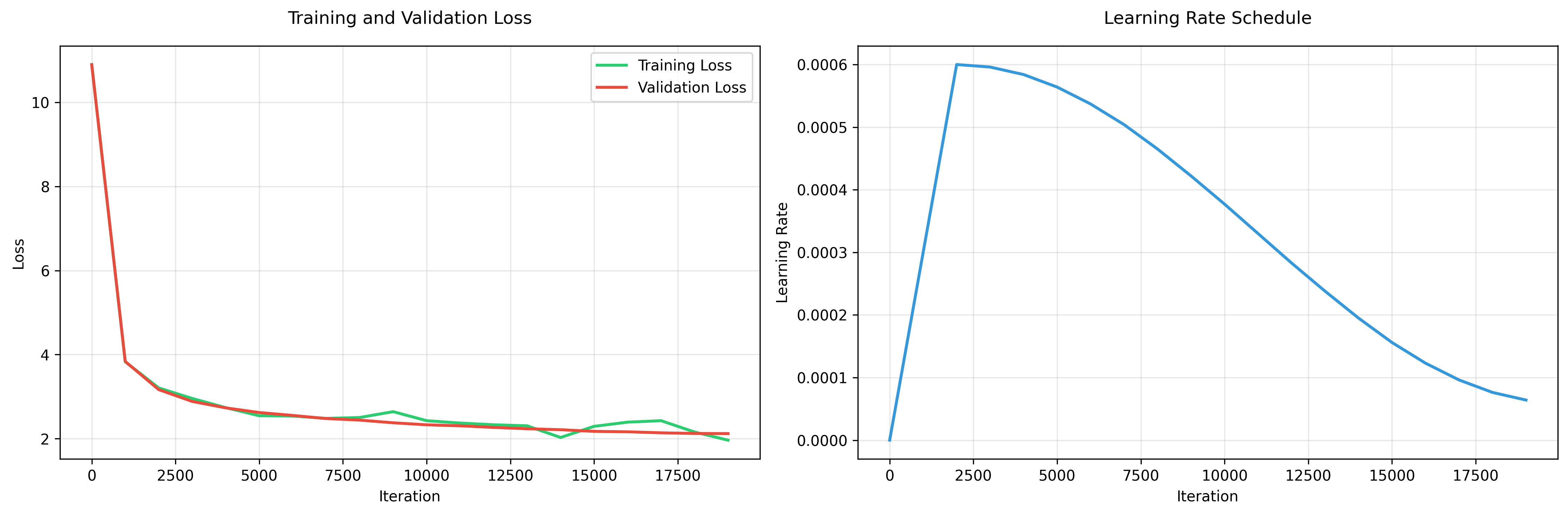

Training Metrics

The training metrics show:

- Rapid initial convergence (loss drops from 10.89 to 3.83 in the first 1,000 iterations)

- Stable learning with consistent improvement

- Final validation loss of 2.12

- Cosine learning rate schedule with warmup
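
For readers unfamiliar with the schedule named in the last bullet, here is a minimal sketch of a cosine learning rate schedule with linear warmup. The function name and every numeric value (peak rate, floor, warmup length, total steps) are placeholders chosen for illustration; the card does not state the settings used in training.

```python
import math


def lr_at(step: int, max_lr: float, min_lr: float,
          warmup_steps: int, total_steps: int) -> float:
    """Linear warmup to max_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        # Ramp up linearly; the last warmup step reaches max_lr.
        return max_lr * (step + 1) / warmup_steps
    if step >= total_steps:
        # Hold the floor once the schedule is finished.
        return min_lr
    # Fraction of the decay phase completed, in [0, 1).
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + cosine * (max_lr - min_lr)


# Placeholder settings, not the values used to train this model.
for step in (0, 999, 1000, 10500, 20000):
    print(step, lr_at(step, max_lr=6e-4, min_lr=6e-5,
                      warmup_steps=1000, total_steps=20000))
```

The warmup keeps early updates small while the loss is still falling steeply, and the cosine tail lowers the rate gradually rather than in discrete drops.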

Example Output

Prompt: "Once upon a time"

Generated Story:

it was a bright, sunny day, and lily and her little brother max were playing in their backyard. they found a piece of paper with two sentence written on it. "let's make sense of some of these sentences," said max, pointing to the first sentence. "these people are playing on the grass," "but i don't know," replied lily. she thought for a moment. "maybe they only talk with the others or not, right?" she asked. max nodded. "yeah, and what about 'he', 'he', 'an', 'man', and 'man'?" lily explained, "it means they're playing with their dogs. but they don't say anything about someone talking." max asked, "but what about the others? we don't talk to each other!" lily thought for a moment before answering, "that's right! sometimes, people try to talk to each other. when we talk about something, we need to tell others"

Usage

from transformers import AutoTokenizer, AutoModelForCausalLM

# Load model and tokenizer

model = AutoModelForCausalLM.from_pretrained("your-username/deepseek-children-stories")

tokenizer = AutoTokenizer.from_pretrained("your-username/deepseek-children-stories")

# Generate text

prompt = "Once upon a time"

inputs = tokenizer(prompt, return_tensors="pt")

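# temperature only takes effect when sampling is enabled (do_sample=True)

outputs = model.generate(**inputs, max_new_tokens=200, do_sample=True, temperature=0.8)

story = tokenizer.decode(outputs[0], skip_special_tokens=True)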

print(story)
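
In `transformers`, `temperature` is only applied when sampling is enabled with `do_sample=True`. The sketch below gathers the sampling settings into one dictionary that can be unpacked into `model.generate`. The helper name is hypothetical, and the `top_p` and `repetition_penalty` defaults are illustrative values, not settings tuned for this model.

```python
def build_generation_kwargs(max_new_tokens: int = 200,
                            temperature: float = 0.8,
                            top_p: float = 0.95,
                            repetition_penalty: float = 1.1) -> dict:
    """Collect sampling settings for model.generate (hypothetical helper)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive when sampling")
    return {
        "max_new_tokens": max_new_tokens,
        "do_sample": True,  # required for temperature and top_p to apply
        "temperature": temperature,
        "top_p": top_p,  # nucleus sampling cutoff (illustrative value)
        "repetition_penalty": repetition_penalty,  # illustrative value
    }


gen_kwargs = build_generation_kwargs()
print(gen_kwargs)

# With a loaded model and tokenized inputs:
# outputs = model.generate(**inputs, **gen_kwargs)
```

Keeping the settings in one place makes it easier to compare outputs across temperatures without editing the generation call itself.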

Limitations

- Limited to English-language stories

- Context window of 1024 tokens may limit longer narratives

- May occasionally generate repetitive patterns

- Best suited for short to medium-length children's stories
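
One way to stay inside the 1024-token context window is to budget the prompt against the number of tokens you plan to generate. The helper below is a sketch under assumptions: the function name is hypothetical, it works on plain lists of token IDs, and it truncates from the left so the most recent text is kept. How the model itself behaves when the window is exceeded is not documented here.

```python
def fit_prompt_to_context(prompt_ids: list[int], max_new_tokens: int,
                          context_window: int = 1024) -> list[int]:
    """Keep the most recent prompt tokens so prompt + generation fits."""
    budget = context_window - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens must be smaller than the context window")
    # Slicing from the end keeps the latest tokens; shorter prompts pass through.
    return prompt_ids[-budget:]


long_prompt = list(range(1500))  # stand-in for real token IDs
trimmed = fit_prompt_to_context(long_prompt, max_new_tokens=200)
print(len(trimmed))  # 1024 - 200 = 824
```

Left truncation drops the opening of the story, so for longer narratives it may be better to summarize earlier passages than to cut them.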

Citation

@misc{deepseek-children-stories,

author = {Prashant Lakhera},

title = {DeepSeek Children's Stories: A Lightweight Story Generation Model},

year = {2024},

publisher = {GitHub},

url = {https://github.com/ideaweaver-ai/DeepSeek-Children-Stories-15M-model}

}

License

This project is licensed under the MIT License. See the LICENSE file for details.
