⭐ GLiClass: Generalist and Lightweight Model for Sequence Classification

This is an efficient zero-shot classifier inspired by the GLiNER work. It matches the performance of a cross-encoder while being more compute-efficient, because classification is done in a single forward pass.
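The efficiency claim comes down to how many encoder calls each design needs. The toy sketch below only counts those calls. It assumes that a cross-encoder scores each (label, text) pair in its own forward pass, while a GLiClass-style model packs every label and the text into one sequence.

The `<<LABEL>>`/`<<SEP>>` markers, the helper names and the dummy encoder are hypothetical placeholders. They are not the library's actual input format or API.

```python
from typing import Callable, List

def cross_encoder_scores(text: str, labels: List[str], encode: Callable[[str], float]) -> List[float]:
    # A cross-encoder sees one (label, text) pair per forward pass,
    # so scoring N labels costs N encoder calls.
    return [encode(f"{label} [SEP] {text}") for label in labels]

def packed_input(text: str, labels: List[str]) -> str:
    # GLiClass-style packing: all labels and the text share a single sequence.
    # The marker strings are hypothetical placeholders.
    return "".join(f"<<LABEL>>{label}" for label in labels) + f"<<SEP>>{text}"

class CallCounter:
    """Dummy encoder that only counts how many times it is invoked."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, sequence: str) -> float:
        self.calls += 1
        return float(len(sequence))  # dummy score; a real model would run a transformer here

labels = ["travel", "dreams", "sport", "science", "politics"]
text = "One day I will see the world!"

cross = CallCounter()
cross_encoder_scores(text, labels, cross)

packed = CallCounter()
packed(packed_input(text, labels))  # one pass; per-label scores would come from the label positions

print(cross.calls, packed.calls)  # 5 1
```

The gap grows linearly with the number of candidate labels, which is why the single-pass design matters most for large label sets.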

GLiClass can be used for topic classification, sentiment analysis, and as a reranker in RAG pipelines.
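For the reranking use case, the retrieval step returns candidate passages and a relevance score reorders them. The minimal sketch below assumes a `score_fn(passage, query)` that returns a value in [0, 1]. With GLiClass, one could plausibly obtain such a score by passing the query as the only label. That mapping is an assumption, and the `keyword_overlap` stand-in is purely hypothetical, used only so the example runs without the model.

```python
from typing import Callable, List, Tuple

def rerank(
    query: str,
    passages: List[str],
    score_fn: Callable[[str, str], float],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Score every retrieved passage against the query and keep the best top_k."""
    scored = [(passage, score_fn(passage, query)) for passage in passages]
    # Highest relevance first; Python's sort is stable, so ties keep retrieval order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

def keyword_overlap(passage: str, query: str) -> float:
    # Hypothetical stand-in for a model score: fraction of query words present in the passage.
    query_words = set(query.lower().split())
    passage_words = set(passage.lower().split())
    return len(query_words & passage_words) / len(query_words) if query_words else 0.0

passages = [
    "Football results from the weekend",
    "Tips for travel around the world",
    "A guide to world travel on a budget",
]
for passage, score in rerank("world travel", passages, keyword_overlap, top_k=2):
    print(f"{score:.2f}  {passage}")
```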

The model was trained on synthetic data and can be used in commercial applications.

This version of the model uses the LLM2Vec approach to convert a modern decoder-only LLM into a bidirectional encoder. This brings the following benefits:

- Enhanced performance and generalization capabilities;

- Support for Flash Attention;

- Extended context window.
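The core change in LLM2Vec is to let every token attend to every other token, instead of only to earlier ones. The method then adapts the model with further training (masked next-token prediction and unsupervised contrastive learning), which this sketch does not cover.

The snippet below only contrasts the two attention masks using plain Python lists, where 1 means "may attend" and 0 means "blocked". It is an illustration, not code taken from LLM2Vec or GLiClass.

```python
from typing import List

def causal_mask(seq_len: int) -> List[List[int]]:
    # Decoder-style: token i may attend only to positions 0..i.
    return [[1 if j <= i else 0 for j in range(seq_len)] for i in range(seq_len)]

def bidirectional_mask(seq_len: int) -> List[List[int]]:
    # Encoder-style, as enabled by LLM2Vec: every token sees the whole sequence.
    return [[1] * seq_len for _ in range(seq_len)]

for name, mask in [("causal", causal_mask(4)), ("bidirectional", bidirectional_mask(4))]:
    print(name)
    for row in mask:
        print(" ".join(map(str, row)))
```

Under the causal mask, an early token's representation cannot depend on anything that follows it. If label tokens precede the text, they would never see the text. This is one plausible reason a bidirectional encoder suits this kind of classifier.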

How to use:

First, install the GLiClass library:

```bash
pip install gliclass
```

This Qwen-based model requires a different version of the transformers package than the one llm2vec requires, so install it manually:

```bash
pip install transformers==4.44.1
```
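Because the exact transformers version matters here, it can help to confirm which version Python actually sees before loading the model. The snippet below is a hypothetical helper (`has_pinned_version` is not part of gliclass) built on the standard-library `importlib.metadata`.

```python
from importlib.metadata import PackageNotFoundError, version

def has_pinned_version(package: str, expected: str) -> bool:
    """Return True if `package` is installed at exactly the `expected` version."""
    try:
        return version(package) == expected
    except PackageNotFoundError:
        # The package is not installed in the current environment at all.
        return False

if not has_pinned_version("transformers", "4.44.1"):
    print("Warning: expected transformers==4.44.1 for gliclass-qwen-1.5B-v1.0")
```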

Then initialize the model and the pipeline, and run a classification:

```python
from gliclass import GLiClassModel, ZeroShotClassificationPipeline
from transformers import AutoTokenizer

model = GLiClassModel.from_pretrained("knowledgator/gliclass-qwen-1.5B-v1.0")
tokenizer = AutoTokenizer.from_pretrained("knowledgator/gliclass-qwen-1.5B-v1.0")
pipeline = ZeroShotClassificationPipeline(model, tokenizer, classification_type='multi-label', device='cuda:0')

text = "One day I will see the world!"
labels = ["travel", "dreams", "sport", "science", "politics"]

# The pipeline returns one list of results per input text; [0] selects our single text.
results = pipeline(text, labels, threshold=0.5)[0]

for result in results:
    print(result["label"], "=>", result["score"])
```
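The `classification_type` and `threshold` arguments control how raw label scores become predictions. A common convention, assumed here rather than taken from the gliclass source, works as follows:

- A multi-label mode scores each label independently with a sigmoid and keeps every label at or above the threshold.
- A single-label mode normalizes with a softmax and keeps only the best label.

The `postprocess` function and the logits below are made up for illustration.

```python
import math
from typing import Any, Dict, List

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def postprocess(logits: Dict[str, float], mode: str, threshold: float = 0.5) -> List[Dict[str, Any]]:
    if mode == "multi-label":
        # Independent probability per label; any number of labels may pass the threshold.
        scored = [{"label": lbl, "score": sigmoid(z)} for lbl, z in logits.items()]
        kept = [r for r in scored if r["score"] >= threshold]
    elif mode == "single-label":
        # Softmax across labels (shifted by the max for numerical stability); keep the top one.
        m = max(logits.values())
        exps = {lbl: math.exp(z - m) for lbl, z in logits.items()}
        total = sum(exps.values())
        best = max(exps, key=exps.get)
        kept = [{"label": best, "score": exps[best] / total}]
    else:
        raise ValueError(f"unknown mode: {mode}")
    return sorted(kept, key=lambda r: r["score"], reverse=True)

# Made-up logits for the example text; a real model would produce these.
logits = {"travel": 2.0, "dreams": 1.0, "sport": -3.0, "science": -2.5, "politics": -4.0}
for r in postprocess(logits, "multi-label"):
    print(r["label"], "=>", round(r["score"], 3))
# travel => 0.881
# dreams => 0.731
```

In this sketch, raising `threshold` trades recall for precision in multi-label mode, while single-label mode always returns exactly one label.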

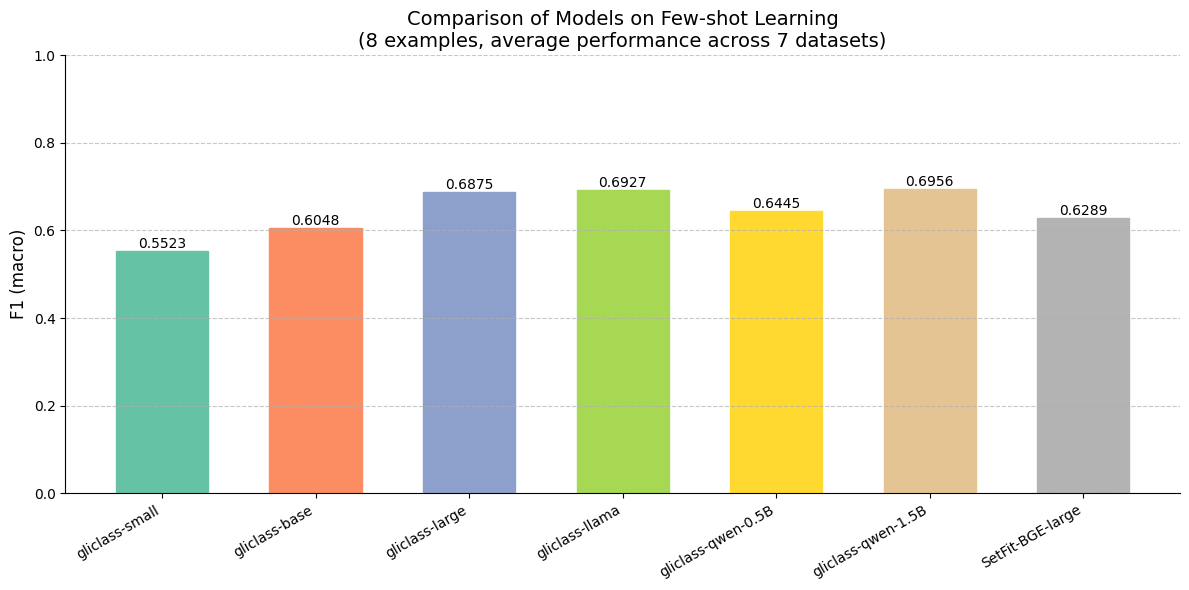

Benchmarks:

While the model is roughly comparable to the DeBERTa-based version in the zero-shot setting, it demonstrates state-of-the-art performance in the few-shot setting.

Join Our Discord

Connect with our community on Discord for news, support, and discussion about our models. Join Discord.
