LVNet

Official Code for Too Many Frames, Not All Useful: Efficient Strategies for Long-Form Video QA

Accepted (9 pages) to the Workshop on Video-Language Models at NeurIPS 2024

Abstract

Long-form videos that span wide temporal intervals are highly information-redundant and contain multiple distinct events or entities that are often loosely related. Therefore, when performing long-form video question answering (LVQA), all information necessary to generate a correct response can often be contained within a small subset of frames. Recent literature explores the use of large language models (LLMs) in LVQA benchmarks, achieving exceptional performance, while relying on vision-language models (VLMs) to convert all visual content within videos into natural language. Such VLMs often independently caption a large number of frames uniformly sampled from long videos, which is inefficient and often largely redundant.

Questioning these design choices, we explore optimal strategies for key-frame selection and sequence-aware captioning that can significantly reduce these redundancies. We propose two novel approaches that improve each aspect, namely Hierarchical Keyframe Selector and Sequential Visual LLM. Our resulting framework, called LVNet, achieves state-of-the-art performance across three benchmark LVQA datasets.

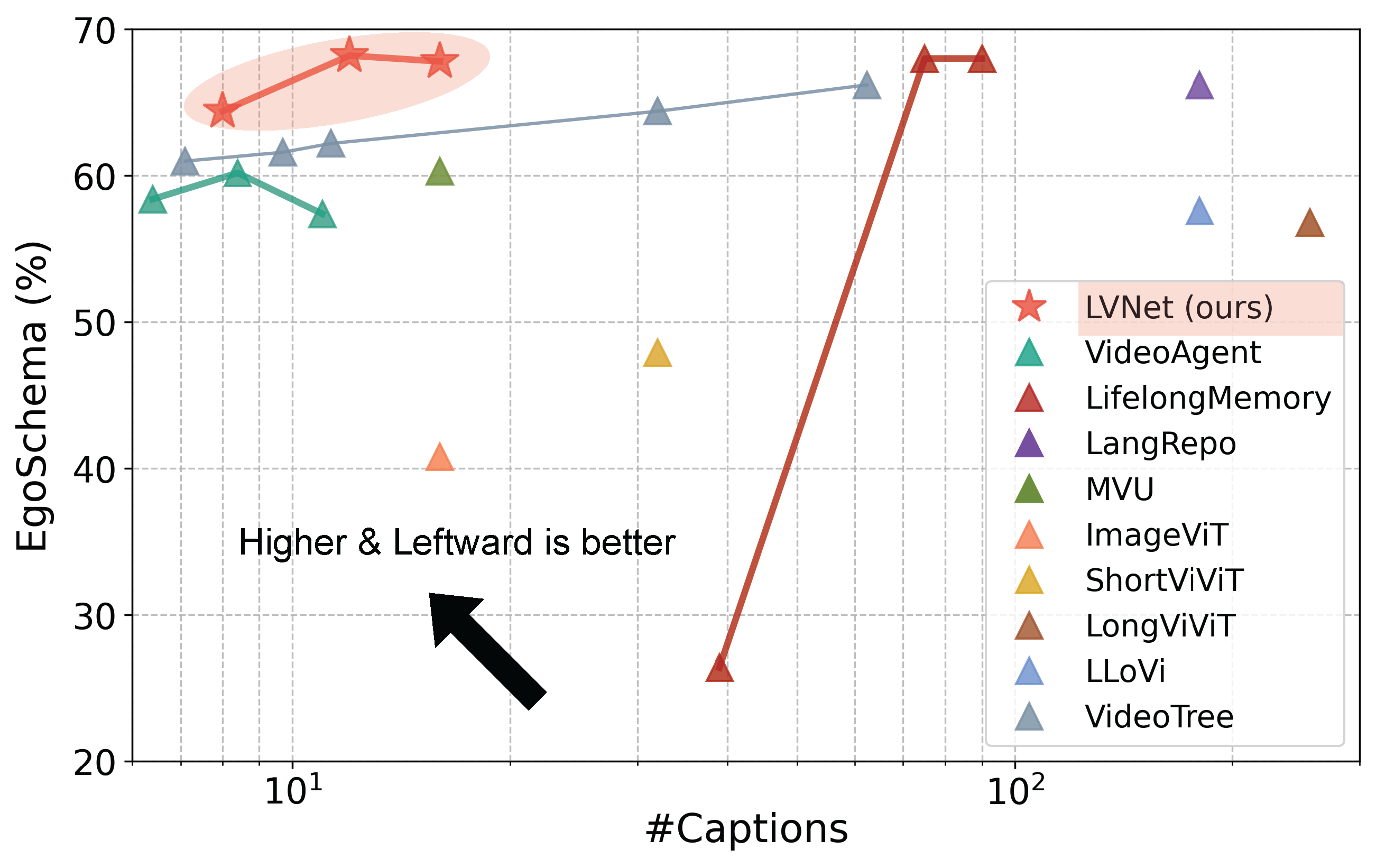

Accuracy vs. Captions on the EgoSchema Subset

- LVNet achieves a state-of-the-art accuracy of 68.2% using only 12 captions.

- This highlights the quality of keyframes from the Hierarchical Keyframe Selector.

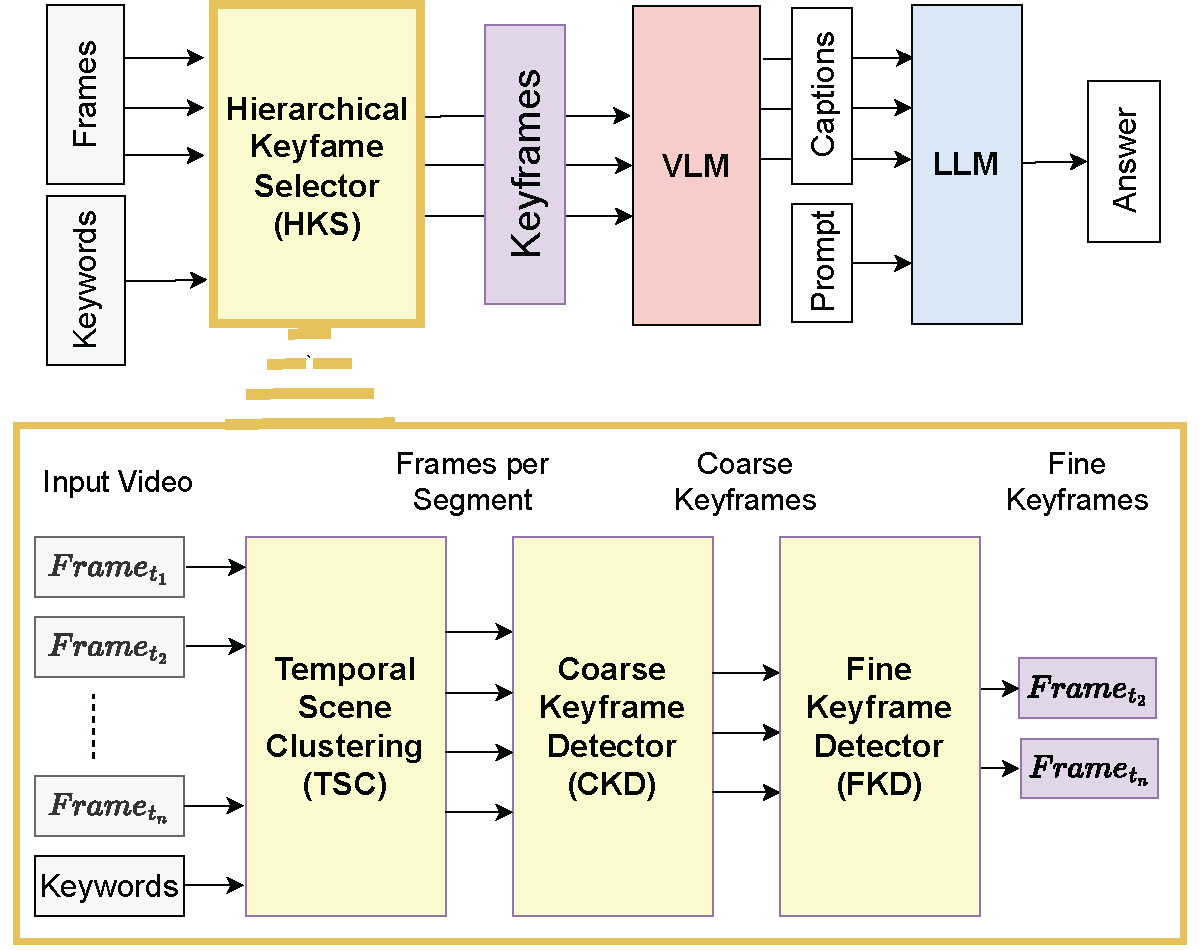

Hierarchical Keyframe Selector: Structural Overview

- Overall strategy: Generate captions via a Hierarchical Keyframe Selector, then feed them to a separate LLM to answer the question.

- Temporal Scene Clustering (TSC): Divides the long video into multiple scenes, enabling per-scene subsampling.

- Coarse Keyframe Detector (CKD): Selects frames best-aligned with keywords relevant to the query.

- Fine Keyframe Detector (FKD): Refines the keyword alignments within a smaller set of frames via templated visual prompting.
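The first two stages above can be illustrated with a toy sketch. It is not the code in temporalSceneClustering.py or coarseKeyframeDetector.py: the scene-boundary rule (a drop in cosine similarity between consecutive frames), the `threshold` and `stride` parameters, and all function names are assumptions made for illustration. Real frame and keyword features would come from a vision-language encoder such as CLIP.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster_scenes(features, threshold=0.8):
    """Group consecutive frame indices into scenes.

    Assumed rule (hypothetical): a new scene starts whenever the similarity
    between a frame and its predecessor falls below `threshold`.
    """
    if not features:
        return []
    scenes = [[0]]
    for i in range(1, len(features)):
        if cosine(features[i - 1], features[i]) < threshold:
            scenes.append([])
        scenes[-1].append(i)
    return scenes


def subsample(scene, stride=2):
    """Uniformly subsample one scene, keeping every `stride`-th frame."""
    return scene[::stride]


def coarse_select(frame_ids, features, keyword_vecs, k):
    """Keep the k frames whose best keyword similarity is highest.

    Returning the result in temporal order is an assumption of this sketch.
    """
    scored = [(max(cosine(features[i], kw) for kw in keyword_vecs), i)
              for i in frame_ids]
    top = sorted(scored, key=lambda s: (-s[0], s[1]))[:k]
    return sorted(i for _, i in top)


# Toy 2-D features: frames 0-2 form one scene, frames 3-5 another.
features = [[1, 0], [1, 0.1], [0.9, 0.1], [0, 1], [0.1, 1], [0, 1]]
scenes = cluster_scenes(features)
kept = [i for scene in scenes for i in subsample(scene)]
print(scenes, kept, coarse_select(kept, features, [[0, 1]], k=2))
```

In this toy input the single keyword vector points along the second axis, so only frames from the second scene survive the coarse selection.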

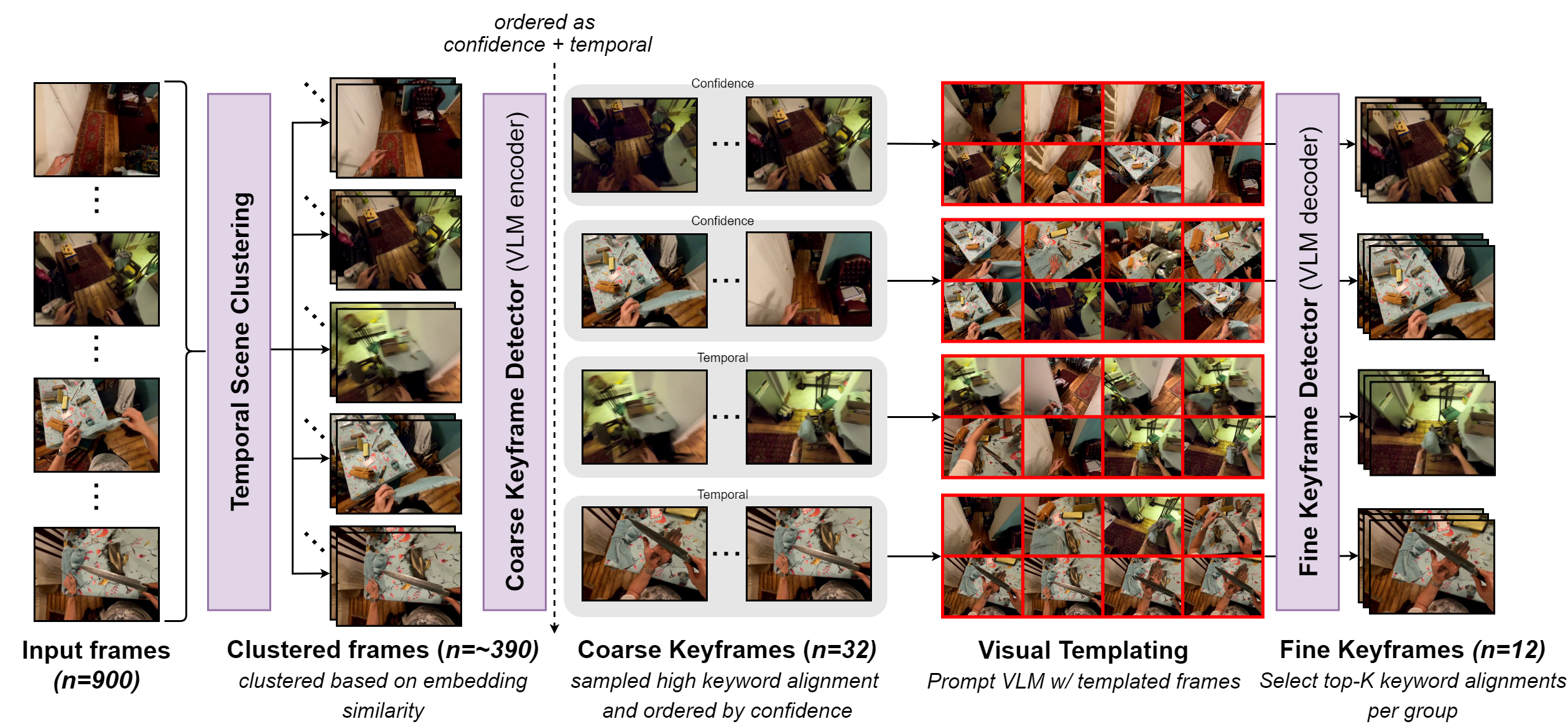

Operational Visualization of HKS

- Temporal Scene Clustering (TSC): 900 frames are clustered into scenes, then uniformly subsampled to produce about 280 frames.

- Coarse Keyframe Detector (CKD): Among those, 32 frames are selected, based on alignment with query keywords.

- Visual Templating: The coarsely refined keyframes are ordered by confidence and temporal order, grouped into 4 chunks of 8 frames.

- Fine Keyframe Detector (FKD): Selects the final 12 frames via further keyword alignment checks.
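The visual templating step can be sketched as follows, under one possible reading of "ordered by confidence and temporal order": frames are first ranked by confidence, split into chunks of 8, and each chunk is then arranged in temporal order. The function name `build_templates` and the `(frame_index, confidence)` input format are hypothetical and not taken from the repository.

```python
def build_templates(frames, chunk_size=8):
    """Split scored frames into confidence-ranked chunks.

    frames: list of (frame_index, confidence) pairs.
    Returns a list of chunks; each chunk is a list of frame indices in
    temporal order. Chunk 0 holds the most confident frames.
    """
    # Rank by descending confidence; break ties by earlier frame index.
    by_conf = sorted(frames, key=lambda f: (-f[1], f[0]))
    chunks = [by_conf[i:i + chunk_size]
              for i in range(0, len(by_conf), chunk_size)]
    # Inside each chunk, restore temporal order.
    return [sorted(idx for idx, _ in chunk) for chunk in chunks]


# 32 coarse keyframes with synthetic, distinct confidences.
frames = [(i * 10, ((i * 7) % 32) / 32) for i in range(32)]
templates = build_templates(frames)
print(len(templates), [len(t) for t in templates])
```

With 32 input frames and the default chunk size this yields 4 chunks of 8 frames, matching the numbers in the list above.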

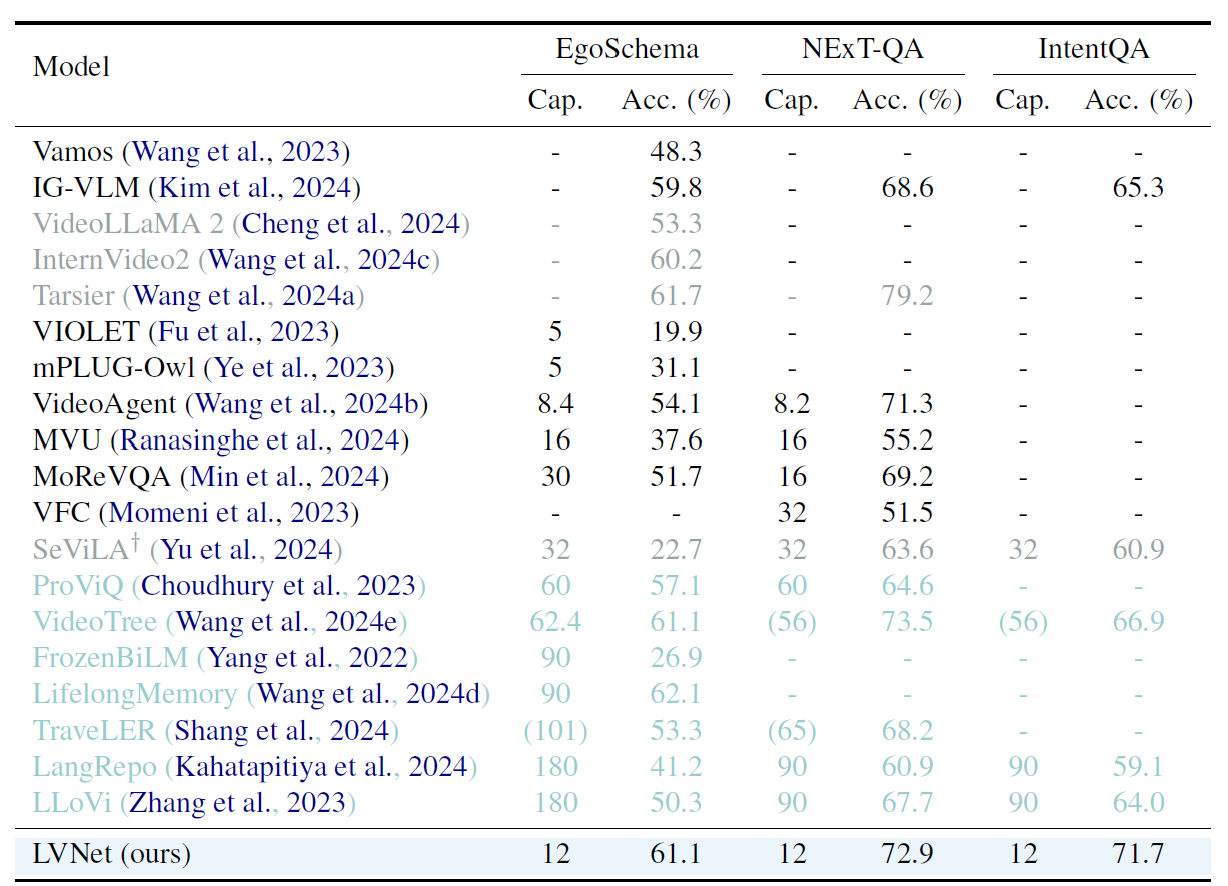

Experiments: EgoSchema, NExT-QA, and IntentQA

- LVNet achieves 61.1%, 72.9%, and 71.7% accuracy on EgoSchema, NExT-QA, and IntentQA, respectively, using just 12 frames. This is on par with or better than models that use many more frames.

- Models with specialized video-caption pretraining or significantly more captions are shown in gray/light green for a fair comparison.

Comparison with Other Keyframe Selection Methods

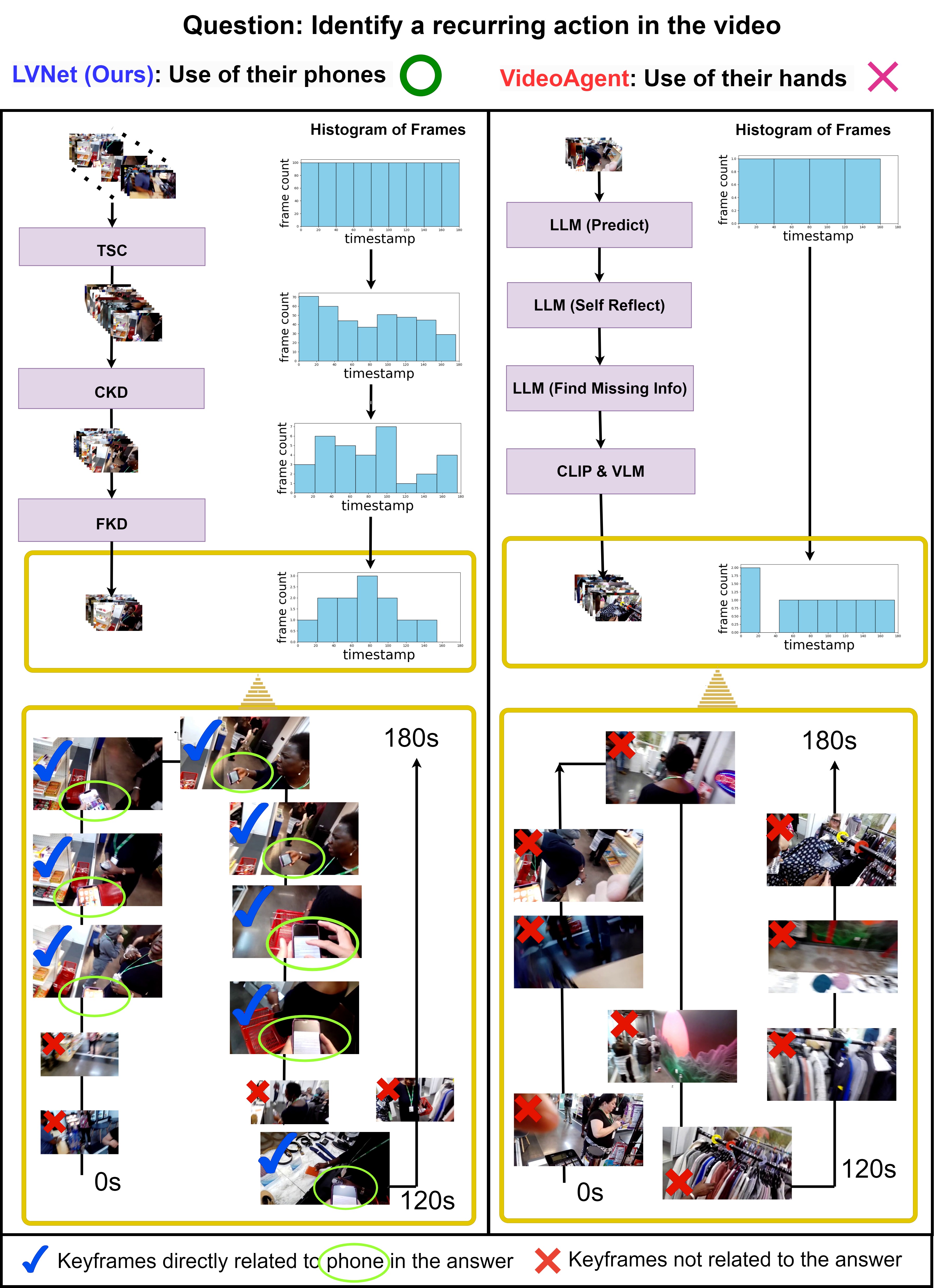

Below is a side-by-side comparison of LVNet and VideoAgent.

- LVNet starts with uniform sampling but then refines keyframes via TSC, CKD, and FKD. This yields 12 frames, 8 of which show “phone usage,” the correct activity.

- VideoAgent continues uniform sampling due to insufficient initial frames, resulting in 0 relevant frames out of 9 and an incorrect final answer.

Evaluation

Generating Answers Using LLM

You can quickly run the LLM to produce answers once you have the keyframe-based captions:

- Download the Captions for Dataset

- EgoSchema:

bash scripts/get_ES_captions.sh

- Run LLM

bash scripts/eval_ES.sh

Generate captions using our provided modules

Hierarchical Keyframe Selector (HKS)

- Temporal Scene Clustering (TSC): temporalSceneClustering.py

- Coarse Keyframe Detector (CKD): coarseKeyframeDetector.py

- Fine Keyframe Detector (FKD): fineKeyframeDetector.py

EgoSchema keyframe selection from images:

bash config/run.sh

Generate captions based on the keyframes:

bash scripts/create_caption.sh

Data

Hierarchical Keyframe Selector hyper-parameters & paths

coarseKeyframeDetector.py CLIP model checkpoint

- ICCV 2023 Perceptual Grouping in Contrastive Vision-Language Models

- Checkpoint: clippy_5k.pt in the root directory.

Citation

@inproceedings{Park2024TooMF,
  title={Too Many Frames, not all Useful: Efficient Strategies for Long-Form Video QA},
  author={Jongwoo Park and Kanchana Ranasinghe and Kumara Kahatapitiya and Wonjeong Ryoo and Donghyun Kim and Michael S. Ryoo},
  year={2024}
}

For more details and updates, please see our GitHub Repository.