Nepali GPT Model

Overview

The NepaliGPTModel is a custom GPT-style pretrained transformer model designed for natural language processing tasks, with a focus on the Nepali language. It is built using PyTorch and made compatible with the Hugging Face transformers library for easy integration and deployment. This model is intended for text generation and other language modeling tasks, particularly for Nepali text.

This repository contains the model weights, tokenizer, and necessary files to load and use the model on Hugging Face.

Model Details

Architecture

- Model Type: nep_gptv1

- Vocabulary Size: 51,728

- Embedding Dimension: 768

- Context Length: 1,024

- Number of Attention Heads: 12

- Number of Layers: 9

- Dropout Rate: 0.1

- QKV Bias: false (attention layers do not use bias)

- Torch Data Type: float32

- Transformers Version: 4.51.0.dev0

The model follows a decoder-only transformer architecture, similar to GPT, with 9 transformer blocks, each containing multi-head attention and feedforward layers. It uses causal masking to ensure autoregressive generation.
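To make the architecture description concrete, here is a minimal PyTorch sketch of a decoder-only model built from the hyperparameters listed above. It is not the repository's model_nepaligpt.py. The class names (CausalSelfAttention, DecoderBlock, TinyDecoder) are hypothetical, and pre-LayerNorm residual blocks, a GELU feed-forward layer 4× wider than the embedding, learned positional embeddings, and an untied output head are all assumptions. The causal mask is the part the card states explicitly: each position can attend only to itself and earlier positions.

```python
import torch
import torch.nn as nn

# Values from the Architecture list; the key names are illustrative assumptions.
CFG = {
    "vocab_size": 51_728,
    "emb_dim": 768,
    "context_length": 1_024,
    "n_heads": 12,
    "n_layers": 9,
    "drop_rate": 0.1,
    "qkv_bias": False,
}


class CausalSelfAttention(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        assert cfg["emb_dim"] % cfg["n_heads"] == 0
        self.n_heads = cfg["n_heads"]
        self.head_dim = cfg["emb_dim"] // cfg["n_heads"]  # 768 / 12 = 64
        # Single projection for Q, K and V; bias follows "QKV Bias: false".
        self.qkv = nn.Linear(cfg["emb_dim"], 3 * cfg["emb_dim"], bias=cfg["qkv_bias"])
        self.out_proj = nn.Linear(cfg["emb_dim"], cfg["emb_dim"])
        self.dropout = nn.Dropout(cfg["drop_rate"])
        # True above the diagonal: position i must not see positions j > i.
        n = cfg["context_length"]
        mask = torch.triu(torch.ones(n, n, dtype=torch.bool), diagonal=1)
        self.register_buffer("mask", mask, persistent=False)

    def forward(self, x):
        b, t, d = x.shape
        q, k, v = self.qkv(x).split(d, dim=-1)
        # (b, t, d) -> (b, heads, t, head_dim)
        q, k, v = (z.reshape(b, t, self.n_heads, self.head_dim).transpose(1, 2) for z in (q, k, v))
        scores = q @ k.transpose(-2, -1) / self.head_dim ** 0.5
        scores = scores.masked_fill(self.mask[:t, :t], float("-inf"))
        weights = self.dropout(torch.softmax(scores, dim=-1))
        out = (weights @ v).transpose(1, 2).reshape(b, t, d)
        return self.out_proj(out)


class DecoderBlock(nn.Module):
    """Assumed pre-LayerNorm block: attention and feed-forward, each with a residual."""

    def __init__(self, cfg):
        super().__init__()
        d = cfg["emb_dim"]
        self.norm1 = nn.LayerNorm(d)
        self.attn = CausalSelfAttention(cfg)
        self.norm2 = nn.LayerNorm(d)
        self.ff = nn.Sequential(nn.Linear(d, 4 * d), nn.GELU(), nn.Linear(4 * d, d))
        self.drop = nn.Dropout(cfg["drop_rate"])

    def forward(self, x):
        x = x + self.drop(self.attn(self.norm1(x)))
        x = x + self.drop(self.ff(self.norm2(x)))
        return x


class TinyDecoder(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        self.tok_emb = nn.Embedding(cfg["vocab_size"], cfg["emb_dim"])
        self.pos_emb = nn.Embedding(cfg["context_length"], cfg["emb_dim"])
        self.drop = nn.Dropout(cfg["drop_rate"])
        self.blocks = nn.Sequential(*[DecoderBlock(cfg) for _ in range(cfg["n_layers"])])
        self.final_norm = nn.LayerNorm(cfg["emb_dim"])
        self.lm_head = nn.Linear(cfg["emb_dim"], cfg["vocab_size"], bias=False)

    def forward(self, idx):
        pos = torch.arange(idx.shape[1], device=idx.device)
        x = self.drop(self.tok_emb(idx) + self.pos_emb(pos))
        x = self.blocks(x)
        return self.lm_head(self.final_norm(x))  # (batch, seq, vocab_size) logits


# Reduced copy of CFG so the demo stays light; TinyDecoder(CFG) builds the full size.
small = {**CFG, "vocab_size": 1_000, "n_layers": 2}
model = TinyDecoder(small).eval()
idx = torch.randint(0, small["vocab_size"], (2, 16))
with torch.no_grad():
    logits = model(idx)
print(logits.shape)  # torch.Size([2, 16, 1000])
```

Because of the mask, changing a later token cannot change the logits at earlier positions, which is what makes left-to-right generation possible.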

Training Details

Training Configuration

The model was trained with the following hyperparameters:

- Batch Size: 1

- Learning Rate: 3.00e-4

- Weight Decay: 0.01

- Betas (for AdamW Optimizer): (0.9, 0.999)

- Number of Workers: 8

- Maximum Epochs: 1

- Warmup Rate: 0.15 (fraction of steps for learning rate warmup)

- Initial Learning Rate: 2.94e-4

- Minimum Learning Rate: 2.92e-4

- Maximum Gradient Norm: 1.0 (for gradient clipping)

- Evaluation Frequency: Every 8,000 steps

- No Improvement Loss Count: 5 (early stopping criterion)

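- Start Context: "रोगविज्ञान रोग वा चोटको कारण तथा प्रभावहरूको अध्ययन गर्ने" (roughly "Pathology, the study of the causes and effects of disease or injury"); used as the prompt for sample generation during training.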
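The card lists the learning-rate endpoints but not the schedule's shape. The sketch below assumes a linear warmup from the initial learning rate to the 3.00e-4 peak over the first 15% of steps, followed by cosine decay to the minimum learning rate, and treats the early-stopping count as the number of consecutive evaluations without a new best evaluation loss. The names lr_at_step and EarlyStopping are hypothetical; this illustrates the listed hyperparameters and is not the training script that produced the model.

```python
import math


def lr_at_step(step, total_steps, peak_lr=3.0e-4, initial_lr=2.94e-4,
               min_lr=2.92e-4, warmup_frac=0.15):
    """Assumed schedule: linear warmup initial_lr -> peak_lr, then cosine decay to min_lr."""
    warmup_steps = max(1, round(warmup_frac * total_steps))
    if step < warmup_steps:
        return initial_lr + (peak_lr - initial_lr) * step / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))


class EarlyStopping:
    """Signal a stop after `patience` evaluations in a row with no new best eval loss."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best = float("inf")
        self.bad_evals = 0

    def step(self, eval_loss):
        """Record one evaluation; return True when training should stop."""
        if eval_loss < self.best:
            self.best = eval_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


total = 65_000  # roughly the number of global steps shown in the loss plot
for s in (0, 9_750, total):
    print(s, lr_at_step(s, total))  # ≈ 2.94e-4, 3.0e-4, 2.92e-4
```

Under this interpretation, with an evaluation every 8,000 steps, a patience of 5 would stop training after roughly 40,000 steps without a new best evaluation loss.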

Training and Evaluation Loss

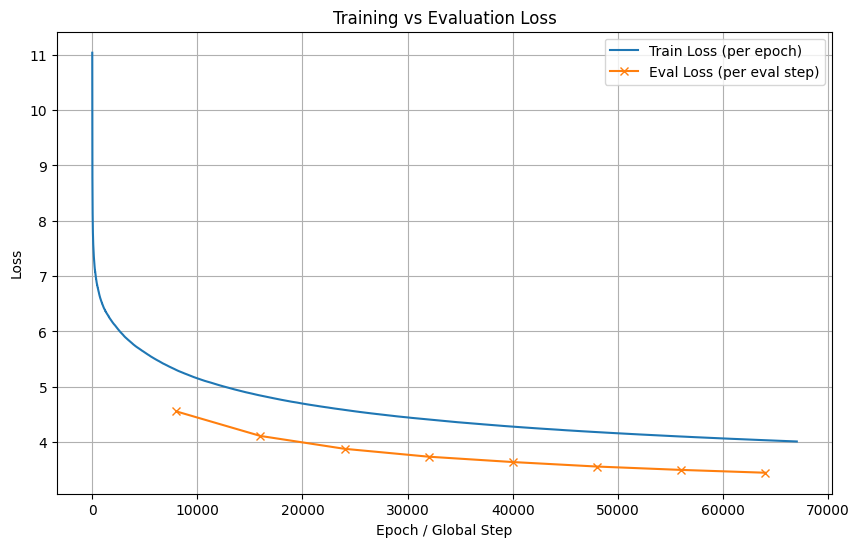

The plot below shows the training and evaluation loss over the course of training:

- Training Loss (Blue Line): Decreases steadily from around 11 to below 4 over roughly 65,000 global steps.

- Evaluation Loss (Orange Line with 'x' Markers): Measured at each evaluation (every 8,000 steps), starting at around 5 and decreasing to just below 4.

The plot indicates that the model is learning effectively: both losses fall steadily and end close together, which suggests good generalization with little overfitting at this stage of training.
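Loss values are often easier to read as perplexity. Assuming the plotted values are mean per-token cross-entropy in nats (the default for PyTorch's cross-entropy loss), perplexity is exp(loss). The helper name perplexity is hypothetical. The snippet also computes ln(51,728), the loss of a model that spreads probability uniformly over the vocabulary, which is close to the starting training loss of around 11.

```python
import math


def perplexity(mean_ce_loss_nats: float) -> float:
    """Perplexity = exp(mean per-token cross-entropy), assuming the loss is in nats."""
    return math.exp(mean_ce_loss_nats)


print(perplexity(5.0))    # ≈ 148.4 (evaluation loss at the start)
print(perplexity(4.0))    # ≈ 54.6 (losses near the end of training)
print(math.log(51_728))   # ≈ 10.85, loss of a uniform guess over the vocabulary
```

A loss just below 4 therefore means the model is, on average, about as uncertain as a choice among roughly 50 equally likely tokens.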

Installation

To use this model, you’ll need to install the required dependencies:

```bash
pip install torch transformers
```

Usage

Loading the Model and Tokenizer

To load and use the model, first register the custom model classes by importing the model_nepaligpt.py file included in this repository. Then use the transformers library to load the model and tokenizer.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Importing model_nepaligpt (from this repository) registers the custom classes;
# the file must be on your Python path.
import model_nepaligpt  # noqa: F401

# Load the model and tokenizer
model_name = "dinesh-bk/nepali-gpt"  # Replace with your Hugging Face repo name
model = AutoModelForCausalLM.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Generate text
input_text = "नमस्ते, संसार!"  # "Hello, world!" in Nepali
inputs = tokenizer(input_text, return_tensors="pt")
outputs = model.generate(**inputs, max_length=50)
generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
print("Generated text:", generated_text)
```

Notes

- Generation Options: You can customize generation with parameters such as `do_sample=True`, `top_k=50`, or `temperature=0.7` for more diverse outputs. `top_k` and `temperature` only take effect when `do_sample=True`.
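With the default settings, generate decodes greedily, so top_k and temperature matter only once sampling is enabled. The pure-Python sketch below illustrates what those two parameters do to one step's logits. It is a simplified illustration with a hypothetical helper name (sample_top_k), not the transformers implementation.

```python
import math
import random


def sample_top_k(logits, top_k=50, temperature=0.7, rng=random):
    """Scale logits by 1/temperature, keep the top_k largest, softmax, and draw one id."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0")
    scaled = [x / temperature for x in logits]
    # Indices of the k largest scaled logits; all other tokens get zero probability.
    top = sorted(range(len(scaled)), key=scaled.__getitem__, reverse=True)[:top_k]
    m = max(scaled[i] for i in top)  # subtract the max for numerical stability
    weights = [math.exp(scaled[i] - m) for i in top]  # unnormalized softmax
    return rng.choices(top, weights=weights, k=1)[0]


rng = random.Random(0)
toy_logits = [2.0, 1.0, 0.5, -1.0, -3.0]  # made-up scores for a 5-token vocabulary
draws = [sample_top_k(toy_logits, top_k=2, temperature=0.7, rng=rng) for _ in range(1000)]
print(sorted(set(draws)))  # only ids 0 and 1 can appear
```

Lower temperatures sharpen the distribution toward the highest-scoring tokens, while top_k discards all but the k most likely candidates before sampling.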

Files in This Repository

- config.json: Model configuration.

- model.safetensors: Model weights in SafeTensors format.

- configuration_nepaligpt.py: Defines the `NepaliGPTConfig` configuration class of the model.

- model_nepaligpt.py: Defines the `TransformerBlock` and `NepaliGPTModel` classes, including their registration.

- special_tokens_map.json: Special tokens for the tokenizer.

- tokenizer_config.json: Tokenizer settings.

- tokenizer.json: Core tokenizer configuration and vocabulary.

Training Data

The model was trained on Nepali text from the IRIISNEPAL/Nepali-Text-Corpus dataset on Hugging Face.

Limitations

- Training Duration: The model was trained for only 1 epoch, which might limit its performance. Further training could improve results.

- Overfitting: The training and evaluation losses end close together, but the small remaining gap may indicate slight overfitting, which is worth monitoring if training is extended beyond one epoch.

- Language Specificity: The model is tailored for Nepali but may not generalize well to other languages without fine-tuning.

License

This project is licensed under the MIT License. See the LICENSE file for details.

Contact

For questions or contributions, please contact
