PathFinder-PRM-7B

Introduction

PathFinder-PRM-7B is a hierarchical discriminative Process Reward Model (PRM) designed to identify errors and reward correct math reasoning in multi-step outputs from large language models (LLMs). Instead of treating evaluation as a single correct-or-wrong decision, PathFinder-PRM-7B splits its error judgment into two parts: whether the reasoning is mathematically correct and whether it is logically consistent. It predicts these two aspects separately and then combines them to decide whether the current reasoning step leads to a correct final solution. PathFinder-PRM-7B is trained on a combination of high-quality human-annotated data (PRM800K) and additional automatically annotated samples, which makes it robust to common failure patterns and helps it generalize across diverse benchmarks such as ProcessBench and PRMBench.

Model Details

Model Description

- Model type: Process Reward Model

- Language(s) (NLP): English

- License: MIT

- Finetuned from model: Qwen/Qwen2.5-Math-7B-Instruct

Model Sources

- Repository: https://github.com/declare-lab/PathFinder-PRM

- Paper: https://arxiv.org/abs/2505.19706

For more details, please refer to our paper and GitHub repository.

Usage

🤗 Hugging Face Transformers

The following code snippet shows how to use PathFinder-PRM-7B with transformers:

import torch

from transformers import AutoModel, AutoTokenizer

import torch.nn.functional as F

model_name = "declare-lab/PathFinder-PRM-7B"

device = "auto"

PROMPT_PREFIX = "You are a Math Teacher. Given a question and a student's solution, evaluate the mathemetical correctness, logic consistency of the current step and whether it will lead to the correct final solution"

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

model = AutoModel.from_pretrained(
    model_name,
    device_map=device,
    torch_dtype=torch.bfloat16,
    trust_remote_code=True,
    attn_implementation="flash_attention_2",
).eval()

pos_token_id = tokenizer.encode("<+>")[0]

neg_token_id = tokenizer.encode("<->")[0]

def run_inference(sample_input):
    """Score the current step: returns -1 if an error is predicted, else the probability of '<+>' for Correctness."""
    message_ids = tokenizer.apply_chat_template(
        sample_input,
        tokenize=True,
        return_dict=True,
        return_tensors='pt'
    ).to(model.device)

    mask_token_id = tokenizer.encode("<extra>")[0]
    token_masks = (message_ids['input_ids'] == mask_token_id)
    # Shift the mask left by one so it selects the logits that predict each <extra> position
    shifted_mask = torch.cat(
        [
            token_masks[:, 1:],
            torch.zeros(token_masks.size(0), 1, dtype=torch.bool, device=model.device)
        ],
        dim=1
    )

    # 1st Forward Pass
    with torch.no_grad():
        outputs = model(**message_ids)

    allowed_token_ids = torch.tensor([pos_token_id, neg_token_id], device=outputs.logits.device)
    masked_logits = outputs.logits[shifted_mask][:, allowed_token_ids]
    predicted_indices = masked_logits.argmax(dim=-1)
    predicted_tokens = allowed_token_ids[predicted_indices]
    decoded_tokens = [tokenizer.decode([int(token_id)], skip_special_tokens=False) for token_id in predicted_tokens]

    if '<->' in decoded_tokens:
        # error found in step
        return -1

    # preparing input for 2nd Forward Pass
    # copy each message dict so the caller's messages are not modified in place
    new_messages = [dict(message) for message in sample_input]
    asst_response = new_messages[-1]['content']

    # replacing mask tokens with pred tokens for math and consistency
    for pred in decoded_tokens:
        asst_response = asst_response.replace("<extra>", pred, 1)
    asst_response += ', Correctness: <extra>'
    new_messages[-1]['content'] = asst_response

    new_message_ids = tokenizer.apply_chat_template(
        new_messages,
        tokenize=True,
        return_dict=True,
        return_tensors='pt'
    ).to(model.device)

    token_masks = (new_message_ids['input_ids'] == mask_token_id)
    shifted_mask = torch.cat(
        [
            token_masks[:, 1:],
            torch.zeros(token_masks.size(0), 1, dtype=torch.bool, device=model.device)
        ],
        dim=1
    )

    # 2nd Forward Pass
    with torch.no_grad():
        outputs = model(**new_message_ids)

    masked_logits = outputs.logits[shifted_mask]
    restricted_logits = masked_logits[:, [pos_token_id, neg_token_id]]
    # softmax over the two allowed tokens; index 0 is the probability of '<+>'
    probs_pos_neg = F.softmax(restricted_logits, dim=-1)

    return probs_pos_neg[0][0].cpu().item()

question = "Sue lives in a fun neighborhood. One weekend, the neighbors decided to play a prank on Sue. On Friday morning, the neighbors placed 18 pink plastic flamingos out on Sue's front yard. On Saturday morning, the neighbors took back one third of the flamingos, painted them white, and put these newly painted white flamingos back out on Sue's front yard. Then, on Sunday morning, they added another 18 pink plastic flamingos to the collection. At noon on Sunday, how many more pink plastic flamingos were out than white plastic flamingos?"

prev_steps = [ "To find out how many more pink plastic flamingos were out than white plastic flamingos at noon on Sunday, we can break down the problem into steps. First, on Friday, the neighbors start with 18 pink plastic flamingos.",

"On Saturday, they take back one third of the flamingos. Since there were 18 flamingos, (1/3 \\times 18 = 6) flamingos are taken back. So, they have (18 - 6 = 12) flamingos left in their possession. Then, they paint these 6 flamingos white and put them back out on Sue's front yard. Now, Sue has the original 12 pink flamingos plus the 6 new white ones. Thus, by the end of Saturday, Sue has (12 + 6 = 18) pink flamingos and 6 white flamingos.",

"On Sunday, the neighbors add another 18 pink plastic flamingos to Sue's front yard. By the end of Sunday morning, Sue has (18 + 18 = 36) pink flamingos and still 6 white flamingos."]

curr_step = "To find the difference, subtract the number of white flamingos from the number of pink flamingos: (36 - 6 = 30). Therefore, at noon on Sunday, there were 30 more pink plastic flamingos out than white plastic flamingos. The answer is (\\boxed{30})."

prev_steps_str = "\n\n".join(prev_steps)

messages = [
    {"role": "user", "content": PROMPT_PREFIX + "\n\n Question: " + question},
    {"role": "assistant", "content": prev_steps_str + "\n\nCurrent Step: " + curr_step + " Math reasoning: <extra>, Consistency: <extra>"},
]

reward_score = run_inference(messages)
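The snippet above scores a single step. To score every step of a solution, the same message layout can be applied to each step in turn. The sketch below is a minimal illustration and is not part of the released code: `build_messages` and `score_solution` are hypothetical helper names, and `score_fn` stands for any callable with the same contract as `run_inference` (it returns -1 when an error is detected and a probability otherwise). It also assumes that an empty list of previous steps is acceptable for the first step.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def build_messages(prompt_prefix: str, question: str,
                   prev_steps: List[str], curr_step: str) -> List[Message]:
    """Lay out one step in the same format as the example above (hypothetical helper)."""
    prev_steps_str = "\n\n".join(prev_steps)
    return [
        {"role": "user", "content": prompt_prefix + "\n\n Question: " + question},
        {"role": "assistant",
         "content": prev_steps_str + "\n\nCurrent Step: " + curr_step
         + " Math reasoning: <extra>, Consistency: <extra>"},
    ]


def score_solution(prompt_prefix: str, question: str, steps: List[str],
                   score_fn: Callable[[List[Message]], float]) -> List[float]:
    """Score each step given all steps before it; returns one score per step."""
    scores = []
    for i, step in enumerate(steps):
        # steps[:i] are the previous steps, steps[i] is the step being judged
        messages = build_messages(prompt_prefix, question, steps[:i], step)
        scores.append(score_fn(messages))
    return scores
```

With the model loaded as shown earlier, `score_solution(PROMPT_PREFIX, question, prev_steps + [curr_step], run_inference)` would return one score per step of the example solution.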

Evaluation

Evaluation Benchmarks

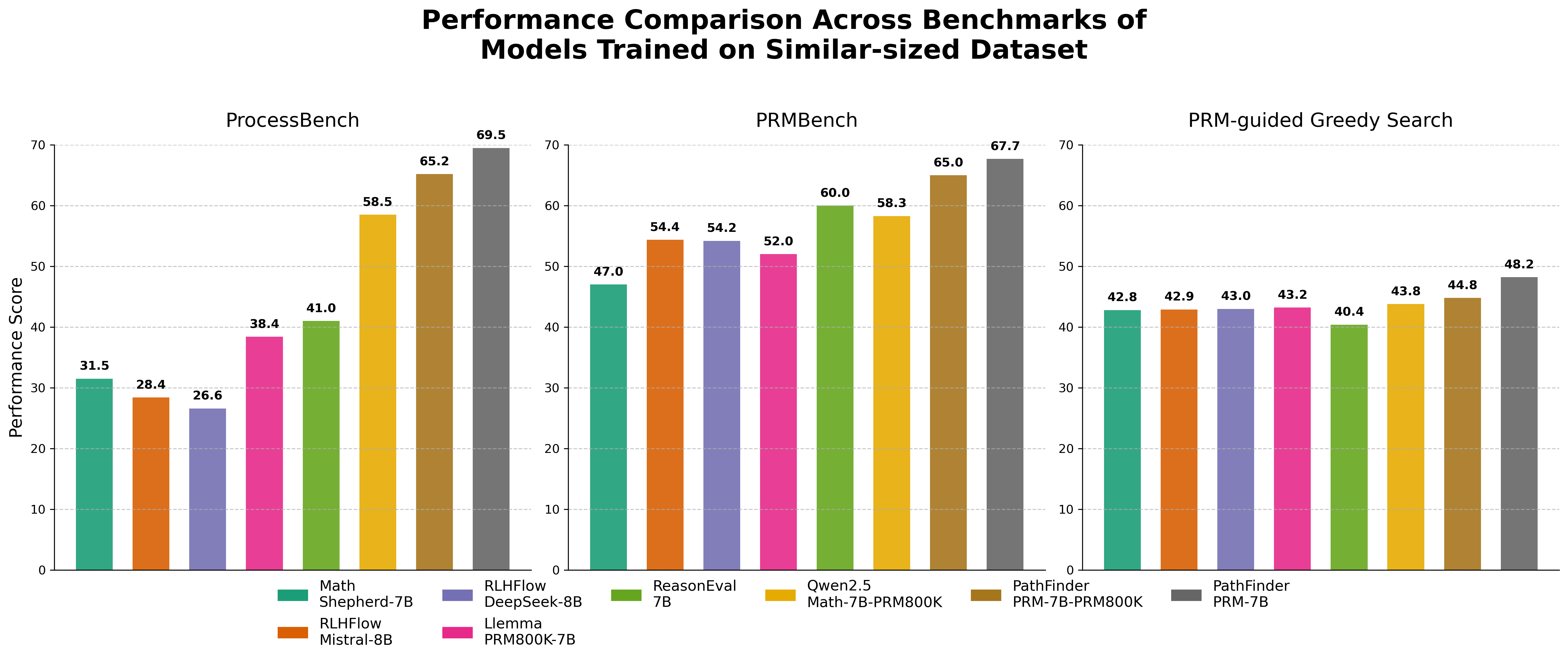

Results

PRMBench Results

| Model | Simplicity | Soundness | Sensitivity | Overall |
|---|---|---|---|---|
| LLM-as-judge, Proprietary Language Models | | | | |
| Gemini-2.0-thinking-exp-1219 | 66.2 | 71.8 | 75.3 | 68.8 |
| GPT-4o | 59.7 | 70.9 | 75.8 | 66.8 |
| LLM-as-judge, Open-source Language Models | | | | |
| Qwen-2.5-Math-72B | 55.1 | 61.1 | 67.1 | 57.4 |
| QwQ-Preview-32B | 56.4 | 68.2 | 73.5 | 63.6 |
| Discriminative Process Reward Models | | | | |
| Math-Shepherd-7B | 47.1 | 45.7 | 60.7 | 47.0 |
| Math-PSA-7B | 51.3 | 51.8 | 64.9 | 52.3 |
| RLHFlow-Mistral-8B | 46.7 | 57.5 | 68.5 | 54.4 |
| Llemma-PRM800K-7B | 51.4 | 50.9 | 66.0 | 52.0 |
| ReasonEval-7B | 55.5 | 63.9 | 71.0 | 60.0 |
| Qwen2.5-Math-PRM-7B | 52.1 | 71.0 | 75.5 | 65.5 |
| 🟢 PathFinder-PRM-7B | 58.9 | 70.8 | 76.9 | 67.7 |

Note: Simplicity, Soundness, and Sensitivity are averaged sub-metrics from PRMBench. On the Overall score, PathFinder-PRM-7B (67.7) outperforms all listed open-source discriminative PRMs and open-source LLM-as-judge models, and is competitive with the large proprietary models (68.8 for Gemini-2.0-thinking-exp-1219 and 66.8 for GPT-4o).

ProcessBench Results

| Model | # Samples | GSM8K | MATH | Olympiad | OmniMath | Avg. F1 |
|---|---|---|---|---|---|---|
| Math-Shepherd-7B | 445K | 47.9 | 29.5 | 24.8 | 23.8 | 31.5 |
| RLHFlow-Mistral-8B | 273K | 50.4 | 33.4 | 13.8 | 15.8 | 28.4 |
| Llemma-PRM800K-7B | ~350K | 48.4 | 43.1 | 28.5 | 33.4 | 38.4 |
| Qwen2.5-Math-7B-PRM800K | 264K | 68.2 | 62.6 | 50.7 | 44.3 | 56.5 |
| 🟢 PathFinder-PRM-7B | ~400K | 77.9 | 75.3 | 65.0 | 59.7 | 69.5 |
| Qwen2.5-Math-PRM-7B | ~1.5M | 82.4 | 77.6 | 67.5 | 66.3 | 73.5 |

PathFinder-PRM-7B outperforms the models trained on comparable amounts of data on ProcessBench, but scores 4 points lower in average F1 than Qwen2.5-Math-PRM-7B (69.5 vs. 73.5), which was trained on nearly 4x as much data (~1.5M vs. ~400K samples).

Reward-Guided Greedy Search (PRM@8)

| Model | AIME24 | AMC23 | MATH | Olympiad | College | Minerva | Avg |
|---|---|---|---|---|---|---|---|
| Math-Shepherd-7B | 13.3 | 52.5 | 74.6 | 38.5 | 36.5 | 41.2 | 42.8 |
| Math-PSA-7B | 6.7 | 57.5 | 79.8 | 42.5 | 41.0 | 39.3 | 44.5 |
| Skywork-PRM-7B | 10.0 | 57.5 | 77.8 | 41.5 | 39.0 | 43.4 | 44.9 |
| Qwen2.5-Math-PRM-7B | 16.7 | 60.0 | 81.0 | 43.5 | 39.0 | 40.4 | 46.8 |
| 🟢 PathFinder-PRM-7B | 20.0 | 62.5 | 78.8 | 36.5 | 55.0 | 36.7 | 48.3 |

Note: All results are computed using reward-guided greedy search with Qwen2.5-7B-Instruct as the policy model. PathFinder-PRM-7B achieves the highest average score among the listed open-source discriminative PRMs (48.3 vs. 46.8 for the next best), showing that it can better guide policy models toward correct solutions.
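As a rough illustration of reward-guided greedy search, the sketch below assumes that at each step the policy proposes N candidate next steps (N = 8 for PRM@8), the PRM scores each candidate, and the highest-scoring candidate is appended before the search continues. `propose_steps`, `score_step`, and `is_final` are hypothetical callables standing in for the policy model, the PRM, and a stopping check; the exact procedure behind the reported results is described in the paper and may differ from this sketch.

```python
from typing import Callable, List


def reward_guided_greedy_search(
    question: str,
    propose_steps: Callable[[str, List[str], int], List[str]],
    score_step: Callable[[str, List[str], str], float],
    is_final: Callable[[str], bool],
    n_candidates: int = 8,
    max_steps: int = 20,
) -> List[str]:
    """Build a solution step by step, keeping the best-scored candidate each time."""
    steps: List[str] = []
    for _ in range(max_steps):
        # Hypothetical policy call: sample n_candidates possible next steps
        candidates = propose_steps(question, steps, n_candidates)
        if not candidates:
            break
        # Hypothetical PRM call: score each candidate given the steps so far
        best = max(candidates, key=lambda c: score_step(question, steps, c))
        steps.append(best)
        if is_final(best):
            break
    return steps
```

In this sketch, `score_step` could wrap a function such as `run_inference` from the usage example, so that candidates flagged with -1 are never preferred over candidates that receive a probability.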

Citation

@misc{pala2025errortypingsmarterrewards,
  title={Error Typing for Smarter Rewards: Improving Process Reward Models with Error-Aware Hierarchical Supervision},
  author={Tej Deep Pala and Panshul Sharma and Amir Zadeh and Chuan Li and Soujanya Poria},
  year={2025},
  eprint={2505.19706},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2505.19706},
}
