⚠️ Note: This model has been re-uploaded to a new organization as part of a consolidated collection.

The updated version is available at https://huggingface.co/aieng-lab/bert-base-cased-mamut.

Please refer to the new repository for future updates, documentation, and related models.

MAMUT Bert (Mathematical Structure Aware BERT)

A pretrained model based on bert-base-cased with further mathematical pre-training, introduced in the paper MAMUT: A Novel Framework for Modifying Mathematical Formulas for the Generation of Specialized Datasets for Language Model Training.

Model Details

Model Description

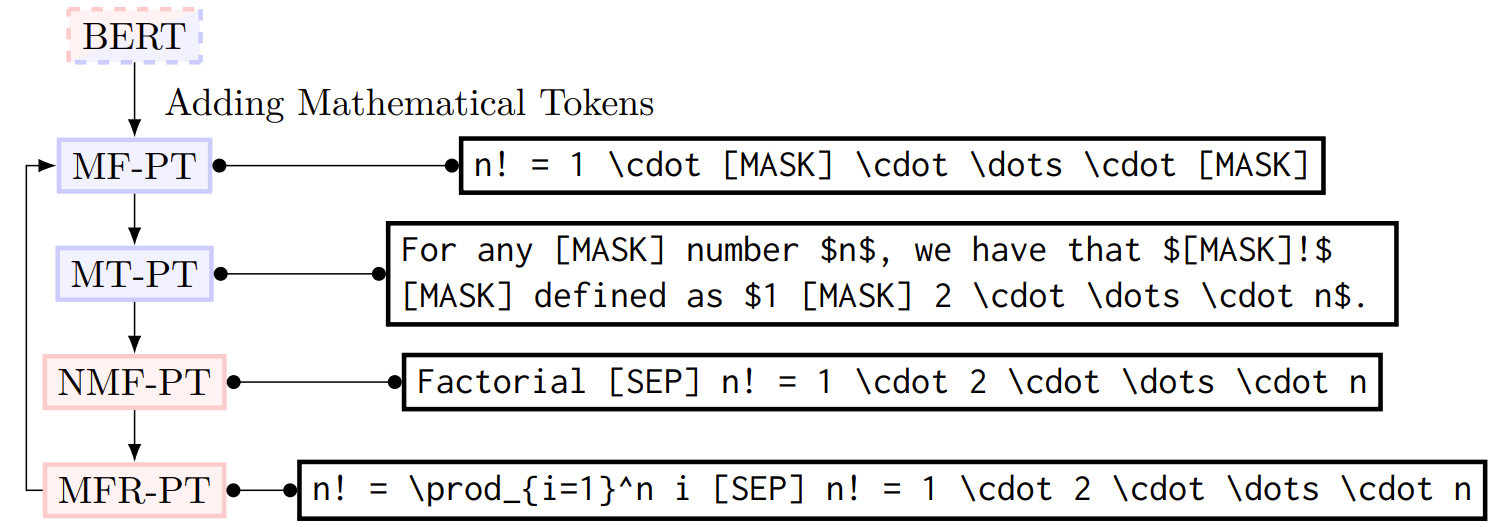

This model was further pre-trained on four mathematical tasks/datasets:

- Mathematical Formulas (MF): Masked Language Modeling (MLM) task on math formulas written in LaTeX

- Mathematical Texts (MT): MLM task on mathematical texts (i.e., texts containing LaTeX formulas). The masked tokens are more likely to be formula tokens or mathematical words (e.g., sum, one, ...)

- Named Math Formulas (NMF): Next-Sentence-Prediction (NSP)-like task that pairs the name of a well-known mathematical identity (e.g., Pythagorean Theorem) with a formula representation; the model classifies whether the formula matches the identity described by the name

- Math Formula Retrieval (MFR): NSP-like task that pairs two formulas; the model decides whether both describe the same mathematical concept (identity)
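The biased masking described for the MT task can be illustrated with a small sketch. This is not the authors' implementation: the weight `math_weight`, the masking ratio, the `MATH_WORDS` set, and the `is_math_token` heuristic are all hypothetical values chosen for illustration. The sketch only shows the general idea of sampling mask positions so that formula tokens and mathematical words are picked more often than ordinary words.

```python
import random

# Hypothetical word list: the card only names "sum" and "one" as examples.
MATH_WORDS = {"sum", "one"}
# Hypothetical set of characters treated as formula symbols.
MATH_SYMBOLS = set("=+-*/^_{}()<>")


def is_math_token(token):
    """Heuristic (an assumption): LaTeX commands, digits, symbols, or listed math words."""
    return (
        token.startswith("\\")
        or token.lower() in MATH_WORDS
        or any(ch.isdigit() or ch in MATH_SYMBOLS for ch in token)
    )


def choose_mask_positions(tokens, mask_ratio=0.15, math_weight=3.0, seed=None):
    """Sample distinct positions to mask, favoring math tokens by `math_weight`."""
    rng = random.Random(seed)
    n_mask = min(len(tokens), max(1, round(len(tokens) * mask_ratio)))
    positions = list(range(len(tokens)))
    weights = [math_weight if is_math_token(t) else 1.0 for t in tokens]
    chosen = []
    for _ in range(n_mask):
        # Weighted draw without replacement: pick one index, then remove it.
        pick = rng.choices(range(len(positions)), weights=weights, k=1)[0]
        chosen.append(positions.pop(pick))
        weights.pop(pick)
    return sorted(chosen)


def apply_mask(tokens, positions, mask_token="[MASK]"):
    """Return a copy of `tokens` with the chosen positions replaced by the mask token."""
    masked = list(tokens)
    for i in positions:
        masked[i] = mask_token
    return masked
```

With `math_weight=3.0`, a token such as `\frac` or `sum` is three times as likely to be drawn as a word like `The` in each sampling step; the actual ratio used for this model is not stated in this card.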

Compared to bert-base-cased, 300 additional mathematical LaTeX tokens were added to the vocabulary before the mathematical pre-training.
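A common way to extend a BERT vocabulary with the Hugging Face `transformers` library is to call `add_tokens` on the tokenizer and then `resize_token_embeddings` on the model. The sketch below shows that pattern. Whether the authors used exactly this procedure is not stated in this card, and the tokens in `EXAMPLE_TOKENS` are illustrative placeholders, not the actual 300 added tokens. The helper names `unique_tokens` and `extend_vocabulary` are hypothetical.

```python
# Illustrative LaTeX tokens only (an assumption, not the model's real token list).
EXAMPLE_TOKENS = ["\\frac", "\\sum", "\\alpha", "\\frac"]


def unique_tokens(tokens):
    """Drop duplicates while keeping the original order."""
    seen = set()
    result = []
    for tok in tokens:
        if tok not in seen:
            seen.add(tok)
            result.append(tok)
    return result


def extend_vocabulary(base_model, new_tokens):
    """Load `base_model`, add `new_tokens`, and resize the embedding matrix.

    Requires the `transformers` package and network access to download
    the base checkpoint, so the import is kept inside the function.
    """
    from transformers import AutoModelForMaskedLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(base_model)
    model = AutoModelForMaskedLM.from_pretrained(base_model)
    # add_tokens returns how many tokens were actually new to the vocabulary.
    num_added = tokenizer.add_tokens(unique_tokens(new_tokens))
    # New embedding rows are freshly initialized and must be learned in training.
    model.resize_token_embeddings(len(tokenizer))
    return tokenizer, model, num_added
```

Calling `extend_vocabulary("bert-base-cased", EXAMPLE_TOKENS)` would return the extended tokenizer and model; the new embeddings carry no meaning until further pre-training.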

- Further pretrained from model: bert-base-cased

Model Sources

- Repository: [aieng-lab/transformer-math-pretraining](https://github.com/aieng-lab/transformer-math-pretraining)

- Paper: MAMUT: A Novel Framework for Modifying Mathematical Formulas for the Generation of Specialized Datasets for Language Model Training

Uses

How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]
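As a minimal sketch, the model can be queried through the `fill-mask` pipeline of the Hugging Face `transformers` library. The model identifier is taken from the repository URL given in the notice at the top of this card. It is an assumption that the uploaded checkpoint includes the masked-language-modeling head, and the helper names `mask_first` and `top_predictions` are hypothetical.

```python
# Repository named in the notice at the top of this card.
MODEL_ID = "aieng-lab/bert-base-cased-mamut"


def mask_first(text, target, mask_token="[MASK]"):
    """Replace the first occurrence of `target` in `text` with the mask token."""
    if target not in text:
        raise ValueError(f"{target!r} does not occur in the input text")
    return text.replace(target, mask_token, 1)


def top_predictions(masked_text, k=5, model_id=MODEL_ID):
    """Return the `k` most likely fillers for the single mask in `masked_text`.

    Requires the `transformers` package and network access to download
    the checkpoint, so the import is kept inside the function.
    """
    from transformers import pipeline

    fill_mask = pipeline("fill-mask", model=model_id)
    # With exactly one mask token, the pipeline returns a list of dicts.
    return [(p["token_str"], p["score"]) for p in fill_mask(masked_text, top_k=k)]
```

For example, `top_predictions(mask_first("a^2 + b^2 = c^2", "c"))` would ask the model to fill in the masked variable of the Pythagorean identity. For the NSP-like tasks, the checkpoint would need to be loaded with a sequence-pair classification head instead.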

Training Details

Training Data

[More Information Needed]

Training Procedure

Evaluation

Testing Data, Factors & Metrics

Testing Data

[More Information Needed]

Factors

[More Information Needed]

Metrics

[More Information Needed]

Results

[More Information Needed]

Summary

Environmental Impact

- Hardware Type: 8xA100

- Hours used: 48

- Compute Region: Germany

Citation

BibTeX:

[More Information Needed]
