model_id | model_card | model_labels |

|---|---|---|

shilpid/candy-finetuned |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# candy-finetuned

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 600
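As a rough illustration of what `lr_scheduler_type: linear` means for the learning rate listed above, the sketch below decays the rate linearly from its starting value to zero. It assumes no warmup (the card lists none), and `total_steps` is a hypothetical value because the card does not report the number of optimizer steps.

```python
def linear_lr(step: int, total_steps: int, base_lr: float = 1e-05, warmup_steps: int = 0) -> float:
    """Learning rate at `step`: linear warmup (if any), then linear decay to zero."""
    if step < warmup_steps:
        return base_lr * (step / max(1, warmup_steps))
    remaining = max(0, total_steps - step)
    return base_lr * (remaining / max(1, total_steps - warmup_steps))


# Hypothetical step count, chosen only for illustration.
total_steps = 1000
for step in (0, 250, 500, 1000):
    print(step, linear_lr(step, total_steps))
```

With this schedule the rate is at its full value on the first step, half of it midway through training, and zero at the end.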

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"black_star",

"cat",

"grey_star",

"insect",

"moon",

"owl",

"unicorn_head",

"unicorn_whole"

] |

LiviaQi/trained_model |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# trained_model

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 500
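For readers unfamiliar with the optimizer settings listed above, here is a minimal sketch of one Adam update for a single scalar parameter, using the betas, epsilon, and learning rate from this card. The parameter and gradient values are hypothetical, and this is the textbook update rule rather than the exact code path the Trainer runs.

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-08):
    """One Adam update for a scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction for step t
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Hypothetical starting point: parameter 1.0 with gradient 0.5 on the first step.
param, m, v = adam_step(param=1.0, grad=0.5, m=0.0, v=0.0, t=1)
print(param)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly one learning rate regardless of the gradient's magnitude.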

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"black_star",

"cat",

"grey_star",

"insect",

"moon",

"owl",

"unicorn_head",

"unicorn_whole"

] |

ZilongLiu/Zilong_Candy_counter |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Zilong_Candy_counter

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 300

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"black_star",

"cat",

"grey_star",

"insect",

"moon",

"owl",

"unicorn_head",

"unicorn_whole"

] |

daloopa/tatr-dataset-1000-500epochs |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tatr-dataset-1000-500epochs

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.7819

- eval_runtime: 10.4713

- eval_samples_per_second: 13.943

- eval_steps_per_second: 1.814

- epoch: 243.23

- step: 6324
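The throughput figures above can be cross-checked with a little arithmetic. The sketch below assumes evaluation ran on a single device and uses the `eval_batch_size` of 8 listed under the training hyperparameters of this card; the derived sample and step counts are estimates, not values reported by the card.

```python
import math

eval_runtime = 10.4713             # seconds, from the card
eval_samples_per_second = 13.943   # from the card
eval_steps_per_second = 1.814      # from the card
eval_batch_size = 8                # from the hyperparameters in this card

# Runtime times throughput gives the approximate number of samples and steps.
samples = round(eval_runtime * eval_samples_per_second)
steps = round(eval_runtime * eval_steps_per_second)

# With batches of 8, the estimated sample count should need the same number of steps.
batches = math.ceil(samples / eval_batch_size)

# Training steps per epoch implied by the reported step and epoch counters.
steps_per_epoch = round(6324 / 243.23)

print(samples, steps, batches, steps_per_epoch)
```

The two step estimates agree, which suggests the reported numbers are internally consistent with an evaluation set of about 146 images.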

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 500

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

krystaleahr/detr-resnet-50_finetuned_candy |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_candy

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 75

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"black_star",

"cat",

"grey_star",

"insect",

"moon",

"owl",

"unicorn_head",

"unicorn_whole"

] |

AladarMezga/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

GwenGawon/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

pratik33/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

amyeroberts/detr-resnet-50-base-coco |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50-base-coco

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the detection-datasets/coco dataset.

It achieves the following results on the evaluation set:

- Loss: 5.2641

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 1337

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 4 | 5.5331 |

| No log | 2.0 | 8 | 5.5277 |

| 5.4377 | 3.0 | 12 | 5.4450 |

| 5.4377 | 4.0 | 16 | 5.3960 |

| 5.1582 | 5.0 | 20 | 5.3349 |

| 5.1582 | 6.0 | 24 | 5.3144 |

| 5.1582 | 7.0 | 28 | 5.2738 |

| 5.0556 | 8.0 | 32 | 5.2641 |

| 5.0556 | 9.0 | 36 | 5.2848 |

| 4.9784 | 10.0 | 40 | 5.2792 |
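The loss reported at the top of this card (5.2641) matches the epoch 8 row of the table above rather than the final epoch. A small sketch that makes the comparison explicit, using the validation losses copied from the table; whether the best checkpoint was deliberately kept is an assumption the card does not confirm.

```python
# (epoch, validation_loss) pairs copied from the training results table.
history = [
    (1.0, 5.5331), (2.0, 5.5277), (3.0, 5.4450), (4.0, 5.3960), (5.0, 5.3349),
    (6.0, 5.3144), (7.0, 5.2738), (8.0, 5.2641), (9.0, 5.2848), (10.0, 5.2792),
]

# Lowest validation loss across all epochs, and the loss after the last epoch.
best_epoch, best_loss = min(history, key=lambda row: row[1])
final_epoch, final_loss = history[-1]

print(f"best: epoch {best_epoch} loss {best_loss}")
print(f"final: epoch {final_epoch} loss {final_loss}")
```

Validation loss rises slightly after epoch 8, so the last two epochs did not improve on the best value.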

### Framework versions

- Transformers 4.32.0.dev0

- Pytorch 2.0.0+cu117

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"person",

"bicycle",

"fire hydrant",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"car",

"elephant",

"bear",

"zebra",

"giraffe",

"backpack",

"umbrella",

"handbag",

"tie",

"suitcase",

"frisbee",

"motorcycle",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"airplane",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"bus",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"train",

"dining table",

"toilet",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"truck",

"toaster",

"sink",

"refrigerator",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush",

"boat",

"traffic light"

] |

TheNobody-12/my_awesome_model |

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

| [

"label_0",

"label_1",

"label_2",

"label_3",

"label_4",

"label_5",

"label_6",

"label_7",

"label_8"

] |

grays-ai/table-transformer-structure-recognition |

# Table Transformer (fine-tuned for Table Structure Recognition)

Table Transformer (DETR) model trained on PubTables1M. It was introduced in the paper [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) by Smock et al. and first released in [this repository](https://github.com/microsoft/table-transformer).

Disclaimer: The team releasing Table Transformer did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The Table Transformer is equivalent to [DETR](https://huggingface.co/docs/transformers/model_doc/detr), a Transformer-based object detection model. Note that the authors decided to use the "normalize before" setting of DETR, which means that layernorm is applied before self- and cross-attention.
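To make the "normalize before" setting concrete, the following toy sketch contrasts a pre-norm residual block with a post-norm one. It is an illustration only: `sublayer` is a hypothetical stand-in for attention or a feed-forward layer, and the layer norm here has no learnable scale or bias.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]


def sublayer(x):
    """Hypothetical stand-in for self-attention, cross-attention, or an MLP."""
    return [2.0 * xi for xi in x]


def pre_norm_block(x):
    # "Normalize before": y = x + f(LayerNorm(x))
    return [xi + fi for xi, fi in zip(x, sublayer(layer_norm(x)))]


def post_norm_block(x):
    # "Normalize after": y = LayerNorm(x + f(x))
    return layer_norm([xi + fi for xi, fi in zip(x, sublayer(x))])


x = [1.0, 2.0, 3.0, 4.0]
print(pre_norm_block(x))
print(post_norm_block(x))
```

In the pre-norm variant the residual path carries the input through unnormalized, whereas the post-norm variant normalizes the sum of the input and the sublayer output.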

## Usage

You can use the raw model for detecting the structure (like rows, columns) in tables. See the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/table-transformer) for more info. | [

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

grays-ai/table-detection |

# Table Transformer (fine-tuned for Table Detection)

Table Transformer (DETR) model trained on PubTables1M. It was introduced in the paper [PubTables-1M: Towards Comprehensive Table Extraction From Unstructured Documents](https://arxiv.org/abs/2110.00061) by Smock et al. and first released in [this repository](https://github.com/microsoft/table-transformer).

Disclaimer: The team releasing Table Transformer did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The Table Transformer is equivalent to [DETR](https://huggingface.co/docs/transformers/model_doc/detr), a Transformer-based object detection model. Note that the authors decided to use the "normalize before" setting of DETR, which means that layernorm is applied before self- and cross-attention.

## Usage

You can use the raw model for detecting tables in documents. See the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/table-transformer) for more info. | [

"table",

"table rotated"

] |

hsanchez/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.1

- Tokenizers 0.13.3

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

DunnBC22/yolos-tiny-NFL_Object_Detection |

# *** This model is not completely trained!!! *** #

<hr/>

## This model requires more training than the resources I have can offer!!! #

# yolos-tiny-NFL_Object_Detection

This model is a fine-tuned version of [hustvl/yolos-tiny](https://huggingface.co/hustvl/yolos-tiny) on the nfl-object-detection dataset.

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/tree/main/Computer%20Vision/Object%20Detection/Trained%2C%20But%20to%20Standard/NFL%20Object%20Detection/Successful%20Attempt

* Fine-tuning and evaluation of this model are in separate files.

** If you plan on fine-tuning an Object Detection model on the NFL Helmet detection dataset, I would recommend using (at least) the Yolos-small checkpoint.

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/keremberke/nfl-object-detection

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 18

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.003 |

| Average Precision (AP) | IoU=0.50 | area= all | maxDets=100 | 0.010 |

| Average Precision (AP) | IoU=0.75 | area= all | maxDets=100 | 0.000 |

| Average Precision (AP) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.002 |

| Average Precision (AP) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.014 |

| Average Precision (AP) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.000 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 1 | 0.002 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 10 | 0.014 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.029 |

| Average Recall (AR) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.026 |

| Average Recall (AR) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.105 |

| Average Recall (AR) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.000 |
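The IoU column in the table above refers to intersection over union between a predicted box and a ground-truth box. A minimal sketch of that computation follows, assuming boxes in `(x_min, y_min, x_max, y_max)` format; the example boxes are hypothetical and not taken from the dataset.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical ground-truth and predicted boxes that overlap by half their width.
ground_truth = (0.0, 0.0, 10.0, 10.0)
prediction = (5.0, 0.0, 15.0, 10.0)
iou = box_iou(ground_truth, prediction)
print(iou, iou >= 0.5)
```

A prediction like this one would not count as a match at the IoU=0.50 threshold, which is why loosely localized boxes contribute nothing to the AP rows.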

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.1

- Tokenizers 0.13.3 | [

"helmet",

"helmet-blurred",

"helmet-difficult",

"helmet-partial",

"helmet-sideline"

] |

DunnBC22/yolos-small-Abdomen_MRI |

# yolos-small-Abdomen_MRI

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small).

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Abdomen%20MRIs%20Object%20Detection/Abdomen_MRI_Object_Detection_YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/Francesco/abdomen-mri

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 15

### Training results

| Metric Name | IoU | Area | maxDets | Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | 0.50:0.95 | all | 100 | 0.453 |

| Average Precision (AP) | 0.50 | all | 100 | 0.928 |

| Average Precision (AP) | 0.75 | all | 100 | 0.319 |

| Average Precision (AP) | 0.50:0.95 | small | 100 | -1.000 |

| Average Precision (AP) | 0.50:0.95 | medium | 100 | 0.426 |

| Average Precision (AP) | 0.50:0.95 | large | 100 | 0.457 |

| Average Recall (AR) | 0.50:0.95 | all | 1 | 0.518 |

| Average Recall (AR) | 0.50:0.95 | all | 10 | 0.645 |

| Average Recall (AR) | 0.50:0.95 | all | 100 | 0.715 |

| Average Recall (AR) | 0.50:0.95 | small | 100 | -1.000 |

| Average Recall (AR) | 0.50:0.95 | medium | 100 | 0.633 |

| Average Recall (AR) | 0.50:0.95 | large | 100 | 0.716 |
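The -1.000 entries in the table above are not negative scores. In COCO-style evaluation, -1 is the value reported when the evaluation set contains no ground-truth objects in that size category. The sketch below illustrates the size buckets and the sentinel; the thresholds follow the usual COCO convention (32² and 96² pixels), the boundary handling is simplified, and the box sizes are hypothetical.

```python
def area_bucket(width, height):
    """Assign a box to a COCO-style size category based on its pixel area."""
    area = width * height
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def summarize(values):
    """Mean of the valid entries, or -1.0 when there is nothing to average."""
    valid = [v for v in values if v > -1]
    return sum(valid) / len(valid) if valid else -1.0


# Hypothetical ground-truth box sizes (width, height) in pixels.
boxes = [(120, 90), (64, 50), (200, 150)]
counts = {"small": 0, "medium": 0, "large": 0}
for w, h in boxes:
    counts[area_bucket(w, h)] += 1

print(counts)
print(summarize([]))  # no "small" objects to score, so the sentinel is returned
```

Read this way, the small-area rows indicate that the metric could not be computed for that category, not that the model performed poorly on it.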

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.1

- Tokenizers 0.13.3 | [

"abdomen-mri",

"0"

] |

decene/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"input",

"label",

"button",

"p",

"html",

"h2"

] |

DunnBC22/yolos-small-Wall_Damage |

# yolos-small-Wall_Damage

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small).

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Trained%2C%20But%20to%20Standard/Wall%20Damage%20Object%20Detection/Wall_Damage_Object_Detection_YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/Francesco/wall-damage

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 40

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.241 |

| Average Precision (AP) | IoU=0.50 | area= all | maxDets=100 | 0.400 |

| Average Precision (AP) | IoU=0.75 | area= all | maxDets=100 | 0.231 |

| Average Precision (AP) | IoU=0.50:0.95 | area= small | maxDets=100 | -1.000 |

| Average Precision (AP) | IoU=0.50:0.95 | area=medium | maxDets=100 | -1.000 |

| Average Precision (AP) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.241 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 1 | 0.488 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 10 | 0.579 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.621 |

| Average Recall (AR) | IoU=0.50:0.95 | area= small | maxDets=100 | -1.000 |

| Average Recall (AR) | IoU=0.50:0.95 | area=medium | maxDets=100 | -1.000 |

| Average Recall (AR) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.621 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.2

- Tokenizers 0.13.3 | [

"wall-damage",

"minorrotation",

"moderaterotation",

"severerotation"

] |

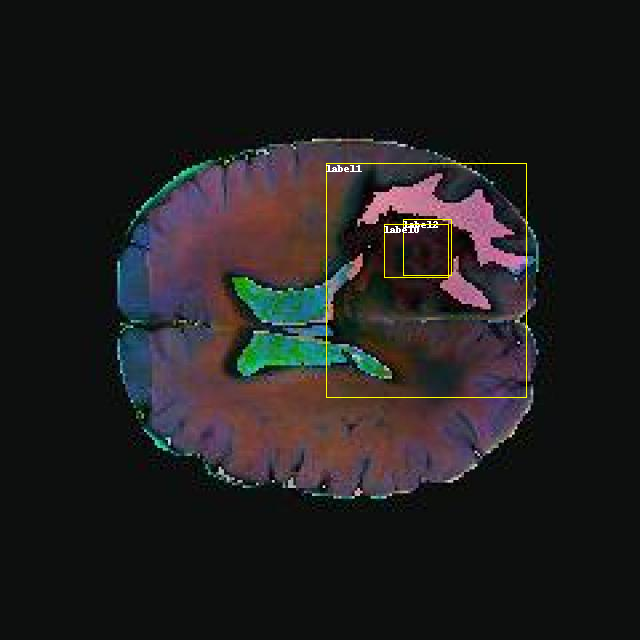

DunnBC22/yolos-tiny-Brain_Tumor_Detection |

# yolos-tiny-Brain_Tumor_Detection

This model is a fine-tuned version of [hustvl/yolos-tiny](https://huggingface.co/hustvl/yolos-tiny).

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Brain%20Tumors/Brain_Tumor_m2pbp_Object_Detection_YOLOS.ipynb

** If you intend to try this project yourself, I highly recommend using (at least) the yolos-small checkpoint.

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/Francesco/brain-tumor-m2pbp

**Example**

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.185 |

| Average Precision (AP) | IoU=0.50 | area= all | maxDets=100 | 0.448 |

| Average Precision (AP) | IoU=0.75 | area= all | maxDets=100 | 0.126 |

| Average Precision (AP) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.001 |

| Average Precision (AP) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.080 |

| Average Precision (AP) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.296 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 1 | 0.254 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 10 | 0.353 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.407 |

| Average Recall (AR) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.036 |

| Average Recall (AR) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.312 |

| Average Recall (AR) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.565 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.2

- Tokenizers 0.13.3 | [

"brain-tumor",

"label0",

"label1",

"label2"

] |

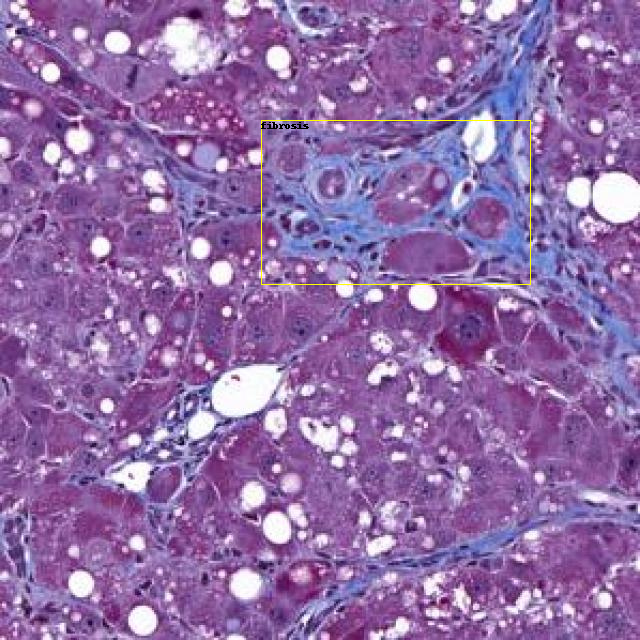

DunnBC22/yolos-small-Liver_Disease |

# yolos-small-Liver_Disease

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small).

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Liver%20Disease%20Object%20Detection/Liver_Disease_Detection_YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/Francesco/liver-disease

**Example Image**

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.254 |

| Average Precision (AP) | IoU=0.50 | area= all | maxDets=100 | 0.399 |

| Average Precision (AP) | IoU=0.75 | area= all | maxDets=100 | 0.291 |

| Average Precision (AP) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.000 |

| Average Precision (AP) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.154 |

| Average Precision (AP) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.283 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 1 | 0.147 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 10 | 0.451 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.552 |

| Average Recall (AR) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.000 |

| Average Recall (AR) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.444 |

| Average Recall (AR) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.572 |
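
The IoU column in the table above refers to intersection over union between a predicted box and a ground-truth box. In the COCO convention, `IoU=0.50:0.95` denotes an average over thresholds from 0.50 to 0.95 in steps of 0.05. The following is a minimal sketch of the IoU calculation itself, assuming boxes in `(x_min, y_min, x_max, y_max)` pixel format; the function name `box_iou` and the sample boxes are made up for illustration and are not taken from the model's evaluation code.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical prediction and ground truth.
prediction = (10, 10, 50, 50)
ground_truth = (20, 20, 60, 60)
iou = box_iou(prediction, ground_truth)
print(f"IoU = {iou:.3f}, counts as a match at 0.50: {iou >= 0.50}")
```

A detection only counts as a true positive at a given threshold when its IoU with a ground-truth box meets that threshold, which is why AP at `IoU=0.75` is typically lower than AP at `IoU=0.50`.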

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.2

- Tokenizers 0.13.3 | [

"diseases",

"ballooning",

"fibrosis",

"inflammation",

"steatosis"

] |

rice-rice/detr-resnet-50_finetuned_dataset |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_dataset

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the pothole-segmentation dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.3

- Tokenizers 0.13.3

| [

"potholes",

"object",

"pothole"

] |

DunnBC22/yolos-tiny-Hard_Hat_Detection |

# yolos-tiny-Hard_Hat_Detection

This model is a fine-tuned version of [hustvl/yolos-tiny](https://huggingface.co/hustvl/yolos-tiny) on the hard-hat-detection dataset.

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Hard%20Hat%20Detection/Hard_Hat_Object_Detection_YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/keremberke/hard-hat-detection

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8
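
To make the `optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08` entry above more concrete, here is a sketch of a single textbook Adam update on one scalar parameter, using the listed betas, epsilon, and learning rate. The function name `adam_step` and the sample gradient are hypothetical, and the optimizer actually used in training may differ in details such as weight decay handling.

```python
import math


def adam_step(param, grad, m, v, t, lr=5e-05, betas=(0.9, 0.999), eps=1e-08):
    """Apply one textbook Adam update to a scalar; `t` is the 1-based step count."""
    beta1, beta2 = betas
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for the zero init
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Hypothetical starting point: parameter 1.0 with gradient 0.5.
param, m, v = 1.0, 0.0, 0.0
param, m, v = adam_step(param, grad=0.5, m=m, v=v, t=1)
print(param)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly one learning rate regardless of the gradient's magnitude.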

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP)| IoU=0.50:0.95 | all | maxDets=100 | 0.346 |

| Average Precision (AP)| IoU=0.50 | all | maxDets=100 | 0.747 |

| Average Precision (AP)| IoU=0.75 | all | maxDets=100 | 0.275 |

| Average Precision (AP)| IoU=0.50:0.95 | small | maxDets=100 | 0.128 |

| Average Precision (AP)| IoU=0.50:0.95 | medium | maxDets=100 | 0.343 |

| Average Precision (AP)| IoU=0.50:0.95 | large | maxDets=100 | 0.521 |

| Average Recall (AR)| IoU=0.50:0.95 | all | maxDets=1 | 0.188 |

| Average Recall (AR)| IoU=0.50:0.95 | all | maxDets=10 | 0.484 |

| Average Recall (AR)| IoU=0.50:0.95 | all | maxDets=100 | 0.558 |

| Average Recall (AR)| IoU=0.50:0.95 | small | maxDets=100 | 0.320 |

| Average Recall (AR)| IoU=0.50:0.95 | medium | maxDets=100 | 0.538 |

| Average Recall (AR)| IoU=0.50:0.95 | large | maxDets=100 | 0.743 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.3

- Tokenizers 0.13.3 | [

"hardhat",

"no-hardhat"

] |

DunnBC22/yolos-small-Stomata_Cells |

# yolos-small-Stomata_Cells

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small).

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Stomata%20Cells/Stomata_Cells_Object_Detection_YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/Francesco/stomata-cells

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 12

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | all | maxDets=100 | 0.340 |

| Average Precision (AP) | IoU=0.50 | all | maxDets=100 | 0.571 |

| Average Precision (AP) | IoU=0.75 | all | maxDets=100 | 0.361 |

| Average Precision (AP) | IoU=0.50:0.95 | small | maxDets=100 | 0.155 |

| Average Precision (AP) | IoU=0.50:0.95 | medium | maxDets=100 | 0.220 |

| Average Precision (AP) | IoU=0.50:0.95 | large | maxDets=100 | 0.498 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets= 1 | 0.146 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets= 10 | 0.423 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets=100 | 0.547 |

| Average Recall (AR) | IoU=0.50:0.95 | small | maxDets=100 | 0.275 |

| Average Recall (AR) | IoU=0.50:0.95 | medium | maxDets=100 | 0.439 |

| Average Recall (AR) | IoU=0.50:0.95 | large | maxDets=100 | 0.764 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.3

- Tokenizers 0.13.3 | [

"stomata-cells",

"close",

"open"

] |

DunnBC22/yolos-small-Forklift_Object_Detection |

# yolos-small-Forklift_Object_Detection

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small) on the forklift-object-detection dataset.

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/tree/main/Computer%20Vision/Object%20Detection/Forklift%20Object%20Detection

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/keremberke/forklift-object-detection

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 25

### Training results

| Metric Name | IoU | Area Category | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.136 |

| Average Precision (AP) | IoU=0.50 | area= all | maxDets=100 | 0.400 |

| Average Precision (AP) | IoU=0.75 | area= all | maxDets=100 | 0.054 |

| Average Precision (AP) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.001 |

| Average Precision (AP) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.051 |

| Average Precision (AP) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.177 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 1 | 0.178 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 10 | 0.294 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.340 |

| Average Recall (AR) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.075 |

| Average Recall (AR) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.299 |

| Average Recall (AR) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.373 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.3

- Tokenizers 0.13.3 | [

"forklift",

"person"

] |

DunnBC22/yolos-small-Axial_MRIs |

# yolos-small-Axial_MRIs

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small).

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Axial%20MRIs/Axial_MRIs_Object_Detection_YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/Francesco/axial-mri

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 25

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | all | maxDets=100 | 0.284 |

| Average Precision (AP) | IoU=0.50 | all | maxDets=100 | 0.451 |

| Average Precision (AP) | IoU=0.75 | all | maxDets=100 | 0.351 |

| Average Precision (AP) | IoU=0.50:0.95 | small | maxDets=100 | 0.000 |

| Average Precision (AP) | IoU=0.50:0.95 | medium | maxDets=100 | 0.182 |

| Average Precision (AP) | IoU=0.50:0.95 | large | maxDets=100 | 0.663 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets=1 | 0.388 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets=10 | 0.524 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets=100 | 0.566 |

| Average Recall (AR) | IoU=0.50:0.95 | small | maxDets=100 | 0.000 |

| Average Recall (AR) | IoU=0.50:0.95 | medium | maxDets=100 | 0.502 |

| Average Recall (AR) | IoU=0.50:0.95 | large | maxDets=100 | 0.791 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.3

- Tokenizers 0.13.3 | [

"axial-mri",

"negative",

"positive"

] |

DunnBC22/yolos-small-Blood_Cell_Object_Detection |

# yolos-small-Blood_Cell_Object_Detection

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small) on the blood-cell-object-detection dataset.

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Blood%20Cell%20Object%20Detection/Blood_Cell_Object_Detection_YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/keremberke/blood-cell-object-detection

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 25

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | all | maxDets=100 | 0.344 |

| Average Precision (AP) | IoU=0.50 | all | maxDets=100 | 0.579 |

| Average Precision (AP) | IoU=0.75 | all | maxDets=100 | 0.374 |

| Average Precision (AP) | IoU=0.50:0.95 | small | maxDets=100 | 0.097 |

| Average Precision (AP) | IoU=0.50:0.95 | medium | maxDets=100 | 0.258 |

| Average Precision (AP) | IoU=0.50:0.95 | large | maxDets=100 | 0.224 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets=1 | 0.210 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets=10 | 0.376 |

| Average Recall (AR) | IoU=0.50:0.95 | all | maxDets=100 | 0.448 |

| Average Recall (AR) | IoU=0.50:0.95 | small | maxDets=100 | 0.108 |

| Average Recall (AR) | IoU=0.50:0.95 | medium | maxDets=100 | 0.375 |

| Average Recall (AR) | IoU=0.50:0.95 | large | maxDets=100 | 0.448 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.3

- Tokenizers 0.13.3 | [

"platelets",

"rbc",

"wbc"

] |

decene/detr-resnet-50_finetuned_ht1 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_ht1

This model is a fine-tuned version of [decene/detr-resnet-50_finetuned_ht1](https://huggingface.co/decene/detr-resnet-50_finetuned_ht1) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 200

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"input",

"label",

"button",

"p",

"html",

"h2"

] |

machinelearningzuu/detr-resnet-50_finetuned-normal-vs-disabled |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned-normal-vs-disabled

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 100

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 1.13.1

- Datasets 2.12.0

- Tokenizers 0.13.3

| [

"normal",

"diabled"

] |

priynka/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

distill-io/detr-amzss3-v2 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-amzss3-v2

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3494

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 25

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| No log | 0.54 | 1000 | 0.4810 |

| 0.5325 | 1.08 | 2000 | 0.4812 |

| 0.5325 | 1.62 | 3000 | 0.4739 |

| 0.5322 | 2.16 | 4000 | 0.4759 |

| 0.5322 | 2.7 | 5000 | 0.4818 |

| 0.5259 | 3.24 | 6000 | 0.4522 |

| 0.5259 | 3.78 | 7000 | 0.4632 |

| 0.5167 | 4.32 | 8000 | 0.4628 |

| 0.5167 | 4.86 | 9000 | 0.4345 |

| 0.5076 | 5.4 | 10000 | 0.4563 |

| 0.5076 | 5.94 | 11000 | 0.4326 |

| 0.494 | 6.48 | 12000 | 0.4424 |

| 0.4906 | 7.02 | 13000 | 0.4272 |

| 0.4906 | 7.56 | 14000 | 0.4164 |

| 0.4801 | 8.1 | 15000 | 0.4213 |

| 0.4801 | 8.64 | 16000 | 0.4320 |

| 0.4699 | 9.18 | 17000 | 0.4100 |

| 0.4699 | 9.72 | 18000 | 0.4127 |

| 0.4613 | 10.26 | 19000 | 0.4035 |

| 0.4613 | 10.8 | 20000 | 0.4039 |

| 0.4556 | 11.34 | 21000 | 0.4149 |

| 0.4556 | 11.88 | 22000 | 0.4092 |

| 0.4475 | 12.42 | 23000 | 0.3965 |

| 0.4475 | 12.96 | 24000 | 0.3973 |

| 0.4389 | 13.5 | 25000 | 0.4013 |

| 0.4349 | 14.04 | 26000 | 0.3797 |

| 0.4349 | 14.58 | 27000 | 0.3728 |

| 0.4288 | 15.12 | 28000 | 0.3834 |

| 0.4288 | 15.66 | 29000 | 0.3885 |

| 0.4222 | 16.2 | 30000 | 0.3820 |

| 0.4222 | 16.74 | 31000 | 0.3755 |

| 0.4152 | 17.28 | 32000 | 0.3693 |

| 0.4152 | 17.82 | 33000 | 0.3679 |

| 0.4122 | 18.36 | 34000 | 0.3605 |

| 0.4122 | 18.9 | 35000 | 0.3625 |

| 0.4077 | 19.44 | 36000 | 0.3631 |

| 0.4077 | 19.98 | 37000 | 0.3607 |

| 0.4 | 20.52 | 38000 | 0.3615 |

| 0.3972 | 21.06 | 39000 | 0.3561 |

| 0.3972 | 21.6 | 40000 | 0.3594 |

| 0.3953 | 22.14 | 41000 | 0.3554 |

| 0.3953 | 22.68 | 42000 | 0.3515 |

| 0.3903 | 23.22 | 43000 | 0.3539 |

| 0.3903 | 23.76 | 44000 | 0.3500 |

| 0.3878 | 24.3 | 45000 | 0.3489 |

| 0.3878 | 24.84 | 46000 | 0.3494 |
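
The Epoch and Step columns in the log above allow a rough estimate of the training set size, which the card does not state. The sketch below divides the last logged step by its epoch value and multiplies by `train_batch_size: 8`. It assumes a single device and no gradient accumulation, neither of which the card confirms, and the logged epoch values are rounded, so the result is only approximate. The function name is hypothetical.

```python
def estimate_train_size(step: int, epoch: float, batch_size: int):
    """Return (steps per epoch, approximate number of training examples)."""
    if epoch <= 0:
        raise ValueError("epoch must be positive")
    steps_per_epoch = step / epoch
    # Assumes one optimizer step consumes exactly `batch_size` examples.
    return steps_per_epoch, steps_per_epoch * batch_size


# Last row of the training log and the batch size from the hyperparameter list.
steps_per_epoch, approx_examples = estimate_train_size(
    step=46000, epoch=24.84, batch_size=8
)
print(f"about {steps_per_epoch:.0f} steps per epoch")
print(f"about {approx_examples:.0f} training examples")
```

The same calculation on the first row (step 1000 at epoch 0.54) gives a similar figure, which suggests the estimate is stable across the log.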

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"block",

"footer",

"header"

] |

akar49/detr-resnet-50_machinery-I |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_machinery-I

This model is a fine-tuned version of [akar49/detr-resnet-50_machinery-I](https://huggingface.co/akar49/detr-resnet-50_machinery-I) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.1.0+cu121

- Datasets 2.16.0

- Tokenizers 0.13.3

| [

"excavators",

"dump truck",

"wheel loader"

] |

machinelearningzuu/detr-resnet-50_finetuned-room-objects |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned-room-objects

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.30.2

- Pytorch 1.13.0

- Datasets 2.11.0

- Tokenizers 0.13.0

| [

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"backpack",

"umbrella",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"dining table",

"toilet",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

chanelcolgate/detr-resnet-50_finetuned_yenthienviet |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_yenthienviet

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the yenthienviet dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"hop_dln",

"hop_jn",

"hop_vtg",

"hop_ytv",

"lo_kids",

"lo_ytv",

"loc_dln",

"loc_jn",

"loc_kids",

"loc_ytv"

] |

DunnBC22/yolos-small-Cell_Tower_Detection |

# yolos-small-Cell_Tower_Detection

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small).

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Object%20Detection/Cell%20Tower%20Object%20Detection/Cell%20Tower%20Detection%20YOLOS.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://huggingface.co/datasets/Francesco/cell-towers

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 30

### Training results

| Metric Name | IoU | Area | maxDets | Metric Value |

|:-----:|:-----:|:-----:|:-----:|:-----:|

| Average Precision (AP) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.287 |

| Average Precision (AP) | IoU=0.50 | area= all | maxDets=100 | 0.636 |

| Average Precision (AP) | IoU=0.75 | area= all | maxDets=100 | 0.239 |

| Average Precision (AP) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.069 |

| Average Precision (AP) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.289 |

| Average Precision (AP) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.556 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 1 | 0.192 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets= 10 | 0.460 |

| Average Recall (AR) | IoU=0.50:0.95 | area= all | maxDets=100 | 0.492 |

| Average Recall (AR) | IoU=0.50:0.95 | area= small | maxDets=100 | 0.151 |

| Average Recall (AR) | IoU=0.50:0.95 | area=medium | maxDets=100 | 0.488 |

| Average Recall (AR) | IoU=0.50:0.95 | area= large | maxDets=100 | 0.760 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3 | [

"pieces",

"joint",

"side"

] |

SIA86/detr-resnet-50_finetuned_WFCR |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_WFCR

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the water_flow_counters_recognition dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"value_a",

"value_b",

"serial"

] |

SIA86/detr-resnet-50_finetuned_WFCR3 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_WFCR3

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the water_flow_counters_recognition dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"value_a",

"value_b",

"serial"

] |

akar49/deform_detr-crack-I |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deform_detr-crack-I

This model is a fine-tuned version of [facebook/deformable-detr-box-supervised](https://huggingface.co/facebook/deformable-detr-box-supervised) on the crack_detection-merged dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"crack",

"mold",

"peeling_paint",

"stairstep_crack",

"water_seepage"

] |

akar49/detr-crack-II |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-crack-II

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the crack_detection-merged-ii dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"crack",

"fissures"

] |

govindrai/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.33.0.dev0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

distill-io/detr-v8 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-V8

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2139

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:------:|:---------------:|
| No log | 0.48 | 1000 | 0.3770 |
| No log | 0.96 | 2000 | 0.3967 |
| 0.4391 | 1.43 | 3000 | 0.3822 |
| 0.4391 | 1.91 | 4000 | 0.4163 |
| 0.4434 | 2.39 | 5000 | 0.3888 |
| 0.4434 | 2.87 | 6000 | 0.3867 |
| 0.4509 | 3.35 | 7000 | 0.4205 |
| 0.4509 | 3.83 | 8000 | 0.4014 |
| 0.455 | 4.3 | 9000 | 0.4117 |
| 0.455 | 4.78 | 10000 | 0.3964 |
| 0.4476 | 5.26 | 11000 | 0.3915 |
| 0.4476 | 5.74 | 12000 | 0.3919 |
| 0.444 | 6.22 | 13000 | 0.4026 |
| 0.444 | 6.7 | 14000 | 0.3832 |
| 0.443 | 7.17 | 15000 | 0.4057 |
| 0.443 | 7.65 | 16000 | 0.3677 |
| 0.4232 | 8.13 | 17000 | 0.3746 |
| 0.4232 | 8.61 | 18000 | 0.3672 |
| 0.4202 | 9.09 | 19000 | 0.3629 |
| 0.4202 | 9.56 | 20000 | 0.3739 |
| 0.4131 | 10.04 | 21000 | 0.3712 |
| 0.4131 | 10.52 | 22000 | 0.3470 |
| 0.4131 | 11.0 | 23000 | 0.3632 |
| 0.4024 | 11.48 | 24000 | 0.3561 |
| 0.4024 | 11.96 | 25000 | 0.3562 |
| 0.4013 | 12.43 | 26000 | 0.3253 |
| 0.4013 | 12.91 | 27000 | 0.3390 |
| 0.3925 | 13.39 | 28000 | 0.3398 |
| 0.3925 | 13.87 | 29000 | 0.3460 |
| 0.3804 | 14.35 | 30000 | 0.3338 |
| 0.3804 | 14.83 | 31000 | 0.3201 |
| 0.3757 | 15.3 | 32000 | 0.3119 |
| 0.3757 | 15.78 | 33000 | 0.3106 |
| 0.3663 | 16.26 | 34000 | 0.3164 |
| 0.3663 | 16.74 | 35000 | 0.3190 |
| 0.3588 | 17.22 | 36000 | 0.3141 |
| 0.3588 | 17.69 | 37000 | 0.3262 |
| 0.3515 | 18.17 | 38000 | 0.3027 |
| 0.3515 | 18.65 | 39000 | 0.3178 |
| 0.3557 | 19.13 | 40000 | 0.3053 |
| 0.3557 | 19.61 | 41000 | 0.3032 |
| 0.3478 | 20.09 | 42000 | 0.3147 |
| 0.3478 | 20.56 | 43000 | 0.3069 |
| 0.3451 | 21.04 | 44000 | 0.3070 |
| 0.3451 | 21.52 | 45000 | 0.3055 |
| 0.3451 | 22.0 | 46000 | 0.2883 |
| 0.3367 | 22.48 | 47000 | 0.3090 |
| 0.3367 | 22.96 | 48000 | 0.2906 |
| 0.3348 | 23.43 | 49000 | 0.2805 |
| 0.3348 | 23.91 | 50000 | 0.2920 |
| 0.3298 | 24.39 | 51000 | 0.2854 |
| 0.3298 | 24.87 | 52000 | 0.2841 |
| 0.3254 | 25.35 | 53000 | 0.2822 |
| 0.3254 | 25.82 | 54000 | 0.2716 |
| 0.3169 | 26.3 | 55000 | 0.2825 |
| 0.3169 | 26.78 | 56000 | 0.2700 |
| 0.314 | 27.26 | 57000 | 0.2640 |
| 0.314 | 27.74 | 58000 | 0.2728 |
| 0.3047 | 28.22 | 59000 | 0.2654 |
| 0.3047 | 28.69 | 60000 | 0.2691 |
| 0.2999 | 29.17 | 61000 | 0.2601 |
| 0.2999 | 29.65 | 62000 | 0.2607 |
| 0.297 | 30.13 | 63000 | 0.2581 |
| 0.297 | 30.61 | 64000 | 0.2511 |
| 0.2946 | 31.09 | 65000 | 0.2557 |
| 0.2946 | 31.56 | 66000 | 0.2568 |
| 0.2912 | 32.04 | 67000 | 0.2569 |
| 0.2912 | 32.52 | 68000 | 0.2594 |
| 0.2912 | 33.0 | 69000 | 0.2553 |
| 0.2906 | 33.48 | 70000 | 0.2425 |
| 0.2906 | 33.96 | 71000 | 0.2475 |
| 0.2833 | 34.43 | 72000 | 0.2394 |
| 0.2833 | 34.91 | 73000 | 0.2422 |
| 0.278 | 35.39 | 74000 | 0.2403 |
| 0.278 | 35.87 | 75000 | 0.2349 |
| 0.2738 | 36.35 | 76000 | 0.2300 |
| 0.2738 | 36.82 | 77000 | 0.2332 |
| 0.2701 | 37.3 | 78000 | 0.2309 |
| 0.2701 | 37.78 | 79000 | 0.2298 |
| 0.2659 | 38.26 | 80000 | 0.2343 |
| 0.2659 | 38.74 | 81000 | 0.2265 |
| 0.2626 | 39.22 | 82000 | 0.2310 |
| 0.2626 | 39.69 | 83000 | 0.2255 |
| 0.259 | 40.17 | 84000 | 0.2263 |
| 0.259 | 40.65 | 85000 | 0.2282 |
| 0.2563 | 41.13 | 86000 | 0.2309 |
| 0.2563 | 41.61 | 87000 | 0.2270 |
| 0.2548 | 42.09 | 88000 | 0.2237 |
| 0.2548 | 42.56 | 89000 | 0.2203 |
| 0.254 | 43.04 | 90000 | 0.2204 |
| 0.254 | 43.52 | 91000 | 0.2218 |
| 0.254 | 44.0 | 92000 | 0.2207 |
| 0.2484 | 44.48 | 93000 | 0.2144 |
| 0.2484 | 44.95 | 94000 | 0.2194 |
| 0.2475 | 45.43 | 95000 | 0.2165 |
| 0.2475 | 45.91 | 96000 | 0.2162 |
| 0.2453 | 46.39 | 97000 | 0.2136 |
| 0.2453 | 46.87 | 98000 | 0.2152 |
| 0.2441 | 47.35 | 99000 | 0.2162 |
| 0.2441 | 47.82 | 100000 | 0.2171 |
| 0.2408 | 48.3 | 101000 | 0.2119 |
| 0.2408 | 48.78 | 102000 | 0.2131 |
| 0.2389 | 49.26 | 103000 | 0.2109 |
| 0.2389 | 49.74 | 104000 | 0.2139 |
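The Epoch and Step columns in the table above allow a back-of-the-envelope estimate of how many optimizer steps make up one epoch, and from that an approximate training-set size. The sketch below is only an estimate: it assumes a single device and no gradient accumulation, so that one step consumes exactly `train_batch_size` samples, and it is limited by the two-decimal rounding of the epoch column. `steps_per_epoch` is a hypothetical helper.

```python
def steps_per_epoch(step: int, epoch: float) -> float:
    """Estimate optimizer steps per epoch from one logged (step, epoch) pair."""
    return step / epoch


# Last logged row of the table; a late row is least affected by epoch rounding.
last_step, last_epoch = 104_000, 49.74

est_steps = steps_per_epoch(last_step, last_epoch)

# train_batch_size comes from the hyperparameter list.
# Assumption: one device, no gradient accumulation.
train_batch_size = 8
approx_samples = est_steps * train_batch_size

print(f"about {est_steps:.0f} steps per epoch")
print(f"about {approx_samples:.0f} training samples")
```

If training ran on several devices or used gradient accumulation, the sample estimate would scale accordingly.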

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

| [

"block",

"footer",

"header"

] |

mmoltisanti/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP
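For readers who want to reproduce this configuration, the listed values map roughly onto keyword arguments of the Hugging Face `TrainingArguments` class as sketched below. This is not the author's training script: `output_dir` is a hypothetical placeholder, `train_batch_size` is assumed to equal the per-device batch size (single device), and "Native AMP" is assumed to correspond to `fp16=True`. The arguments are kept in a plain dictionary so the snippet runs without a GPU or the library installed.

```python
# Keyword arguments one could pass as TrainingArguments(**training_kwargs).
training_kwargs = {
    "output_dir": "detr-resnet-50_finetuned_cppe5",  # hypothetical output path
    "learning_rate": 1e-5,
    "per_device_train_batch_size": 8,   # assumes a single device
    "per_device_eval_batch_size": 8,
    "seed": 42,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "linear",
    "num_train_epochs": 10,
    "fp16": True,                       # assumed meaning of "Native AMP"
}

for name, value in training_kwargs.items():
    print(f"{name}={value!r}")
```

With `transformers` installed and a CUDA device available, `TrainingArguments(**training_kwargs)` should accept these names; on a CPU-only machine `fp16=True` may be rejected.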

### Training results

### Framework versions

- Transformers 4.29.2

- Pytorch 1.13.1

- Datasets 2.14.4

- Tokenizers 0.13.2

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

Yorai/detr-resnet-50_finetuned_cppe5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.32.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

| [

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

Yorai/yolos-tiny_finetuned_cppe-5 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# yolos-tiny_finetuned_cppe-5

This model is a fine-tuned version of [hustvl/yolos-tiny](https://huggingface.co/hustvl/yolos-tiny) on the cppe-5 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8