| model_id | model_card | model_labels |
|---|---|---|

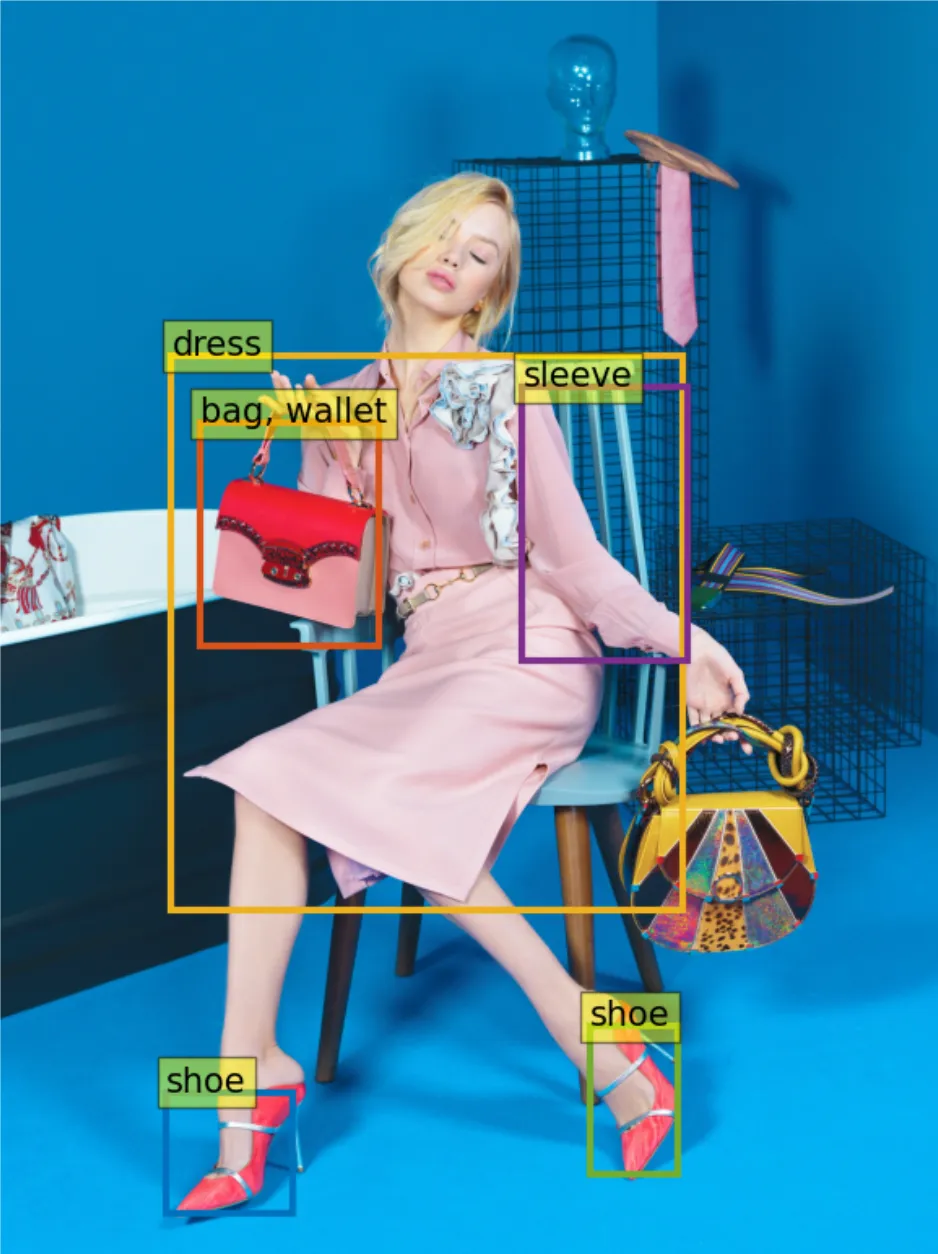

yainage90/fashion-object-detection | This model is a fine-tuned version of microsoft/conditional-detr-resnet-50.

Details are available in the GitHub repo [fashion-visual-search](https://github.com/yainage90/fashion-visual-search).

A companion fashion image feature extractor model is available at [yainage90/fashion-image-feature-extractor](https://huggingface.co/yainage90/fashion-image-feature-extractor).

This model was trained on a combination of two datasets: [modanet](https://github.com/eBay/modanet) and [fashionpedia](https://fashionpedia.github.io/home/).

The labels are ['bag', 'bottom', 'dress', 'hat', 'shoes', 'outer', 'top'].

The best score, mAP 0.7542, was reached at epoch 96 of 100, which suggests there is still a little room for performance improvement.

```python
from PIL import Image
import torch
from transformers import AutoImageProcessor, AutoModelForObjectDetection

# Pick the best available device
device = 'cpu'
if torch.cuda.is_available():
    device = torch.device('cuda')
elif torch.backends.mps.is_available():
    device = torch.device('mps')

ckpt = 'yainage90/fashion-object-detection'
image_processor = AutoImageProcessor.from_pretrained(ckpt)
model = AutoModelForObjectDetection.from_pretrained(ckpt).to(device)

image = Image.open('<path/to/image>').convert('RGB')

with torch.no_grad():
    inputs = image_processor(images=[image], return_tensors="pt")
    outputs = model(**inputs.to(device))
    # target_sizes expects (height, width); PIL's image.size is (width, height)
    target_sizes = torch.tensor([[image.size[1], image.size[0]]])
    results = image_processor.post_process_object_detection(outputs, threshold=0.4, target_sizes=target_sizes)[0]

items = []
for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    score = score.item()
    label = label.item()
    box = [i.item() for i in box]
    print(f"{model.config.id2label[label]}: {round(score, 3)} at {box}")
    items.append((score, label, box))
```

| [

"bag",

"bottom",

"dress",

"hat",

"outer",

"shoes",

"top"

] |

hustvl/yolos-small |

# YOLOS (small-sized) model

YOLOS model fine-tuned on COCO 2017 object detection (118k annotated images). It was introduced in the paper [You Only Look at One Sequence: Rethinking Transformer in Vision through Object Detection](https://arxiv.org/abs/2106.00666) by Fang et al. and first released in [this repository](https://github.com/hustvl/YOLOS).

Disclaimer: The team releasing YOLOS did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

YOLOS is a Vision Transformer (ViT) trained using the DETR loss. Despite its simplicity, a base-sized YOLOS model is able to achieve 42 AP on COCO validation 2017 (similar to DETR and more complex frameworks such as Faster R-CNN).

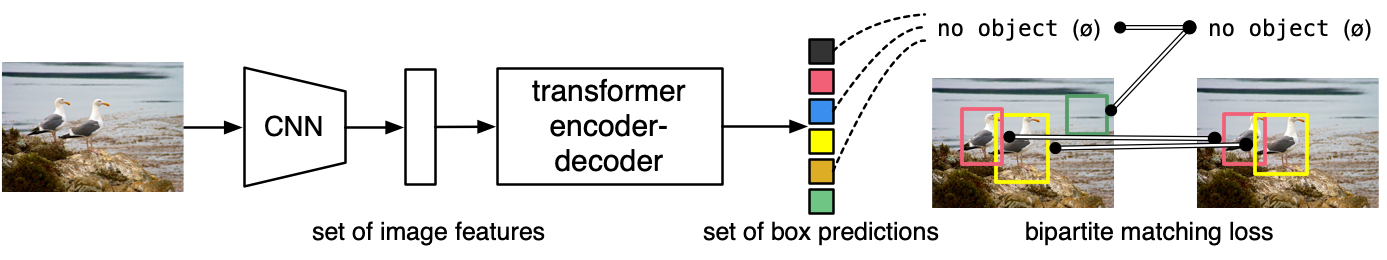

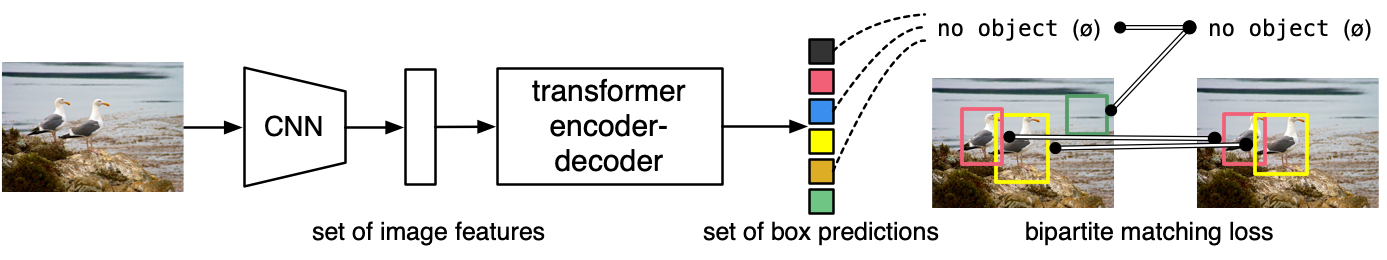

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
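The one-to-one matching step can be illustrated with a toy example. The sketch below rests on several assumptions: the cost matrix is made up, `match_queries` is a hypothetical helper name, and the optimal assignment is found by brute force over permutations rather than by the Hungarian algorithm (which reaches the same optimum far more efficiently).

```python
from itertools import permutations


def match_queries(cost):
    """Return the query-to-target assignment with minimal total cost.

    cost[i][j] is the (made-up) cost of matching query i to target j.
    Brute force is only viable for tiny N; it stands in for the
    Hungarian algorithm here purely for illustration.
    """
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total


# 3 queries vs. 3 targets; the last target plays the role of "no object" padding.
cost = [
    [0.9, 0.1, 0.5],
    [0.2, 0.8, 0.5],
    [0.7, 0.6, 0.5],
]
assignment, total = match_queries(cost)
print(assignment, round(total, 2))
```

Here query 0 is matched to target 1, query 1 to target 0, and query 2 to the padding target, since no other pairing has a lower total cost.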

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=hustvl/yolos) to look for all available YOLOS models.

### How to use

Here is how to use this model:

```python
from transformers import YolosImageProcessor, YolosForObjectDetection
from PIL import Image
import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# YolosImageProcessor supersedes the deprecated YolosFeatureExtractor
image_processor = YolosImageProcessor.from_pretrained('hustvl/yolos-small')
model = YolosForObjectDetection.from_pretrained('hustvl/yolos-small')

inputs = image_processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# model predicts bounding boxes and corresponding COCO classes
logits = outputs.logits
bboxes = outputs.pred_boxes
```

Currently, both the feature extractor and model support PyTorch.
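The raw `pred_boxes` are not pixel coordinates. In the Transformers implementation they are normalized `(center_x, center_y, width, height)` values in [0, 1], so they need converting before they can be drawn on the image. A minimal sketch of that conversion in plain Python follows; `cxcywh_to_xyxy` is a hypothetical helper name, and in practice the processor's `post_process_object_detection` method performs this step.

```python
def cxcywh_to_xyxy(box, image_width, image_height):
    """Convert a normalized (cx, cy, w, h) box to pixel (x_min, y_min, x_max, y_max)."""
    cx, cy, w, h = box
    x_min = (cx - w / 2) * image_width
    y_min = (cy - h / 2) * image_height
    x_max = (cx + w / 2) * image_width
    y_max = (cy + h / 2) * image_height
    return [x_min, y_min, x_max, y_max]


# A box centered in a 640x480 image, a quarter as wide and half as tall as the image.
pixel_box = cxcywh_to_xyxy([0.5, 0.5, 0.25, 0.5], image_width=640, image_height=480)
print(pixel_box)
```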

## Training data

The YOLOS model was pre-trained on [ImageNet-1k](https://huggingface.co/datasets/imagenet2012) and fine-tuned on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### Training

The model was pre-trained for 200 epochs on ImageNet-1k and fine-tuned for 150 epochs on COCO.

## Evaluation results

This model achieves an AP (average precision) of **36.1** on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2106-00666,

author = {Yuxin Fang and

Bencheng Liao and

Xinggang Wang and

Jiemin Fang and

Jiyang Qi and

Rui Wu and

Jianwei Niu and

Wenyu Liu},

title = {You Only Look at One Sequence: Rethinking Transformer in Vision through

Object Detection},

journal = {CoRR},

volume = {abs/2106.00666},

year = {2021},

url = {https://arxiv.org/abs/2106.00666},

eprinttype = {arXiv},

eprint = {2106.00666},

timestamp = {Fri, 29 Apr 2022 19:49:16 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2106-00666.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

nickmuchi/yolos-small-finetuned-license-plate-detection | # YOLOS (small-sized) model

This model is a fine-tuned version of [hustvl/yolos-small](https://huggingface.co/hustvl/yolos-small) on the [license-plate-recognition](https://app.roboflow.com/objectdetection-jhgr1/license-plates-recognition/2) dataset from Roboflow, which contains 5200 images in the training set and 380 in the validation set.

The original YOLOS model was fine-tuned on COCO 2017 object detection (118k annotated images).

## Model description

YOLOS is a Vision Transformer (ViT) trained using the DETR loss. Despite its simplicity, a base-sized YOLOS model is able to achieve 42 AP on COCO validation 2017 (similar to DETR and more complex frameworks such as Faster R-CNN).

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=hustvl/yolos) to look for all available YOLOS models.

### How to use

Here is how to use this model:

```python
from transformers import YolosImageProcessor, YolosForObjectDetection
from PIL import Image
import requests

url = 'https://drive.google.com/uc?id=1p9wJIqRz3W50e2f_A0D8ftla8hoXz4T5'
image = Image.open(requests.get(url, stream=True).raw)

# YolosImageProcessor supersedes the deprecated YolosFeatureExtractor
image_processor = YolosImageProcessor.from_pretrained('nickmuchi/yolos-small-finetuned-license-plate-detection')
model = YolosForObjectDetection.from_pretrained('nickmuchi/yolos-small-finetuned-license-plate-detection')

inputs = image_processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# model predicts bounding boxes and corresponding license plate detection classes
logits = outputs.logits
bboxes = outputs.pred_boxes
```

Currently, both the feature extractor and model support PyTorch.

## Training data

The YOLOS model was pre-trained on [ImageNet-1k](https://huggingface.co/datasets/imagenet2012) and fine-tuned on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### Training

This model was fine-tuned for 200 epochs on the [license-plate-recognition](https://app.roboflow.com/objectdetection-jhgr1/license-plates-recognition/2) dataset.

## Evaluation results

This model achieves an AP (average precision) of **49.0**.

COCO-style evaluation output (IoU metric: bbox):

| Metric | IoU | Area | Max dets | Value |
|---|---|---|---|---|
| Average Precision (AP) | 0.50:0.95 | all | 100 | 0.490 |
| Average Precision (AP) | 0.50 | all | 100 | 0.792 |
| Average Precision (AP) | 0.75 | all | 100 | 0.585 |
| Average Precision (AP) | 0.50:0.95 | small | 100 | 0.167 |
| Average Precision (AP) | 0.50:0.95 | medium | 100 | 0.460 |
| Average Precision (AP) | 0.50:0.95 | large | 100 | 0.824 |
| Average Recall (AR) | 0.50:0.95 | all | 1 | 0.447 |
| Average Recall (AR) | 0.50:0.95 | all | 10 | 0.671 |
| Average Recall (AR) | 0.50:0.95 | all | 100 | 0.676 |
| Average Recall (AR) | 0.50:0.95 | small | 100 | 0.278 |
| Average Recall (AR) | 0.50:0.95 | medium | 100 | 0.641 |
| Average Recall (AR) | 0.50:0.95 | large | 100 | 0.890 |

| [

"name",

"license-plates"

] |

kariver/detr-resnet-50_finetuned_food-roboflow |


# detr-resnet-50_finetuned_food-roboflow

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 40

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.0+cu118

- Tokenizers 0.14.1

| [

"akabare khursani",

"apple",

"artichoke",

"ash gourd -kubhindo-",

"asparagus -kurilo-",

"avocado",

"bacon",

"bamboo shoots -tama-",

"banana",

"beans",

"beaten rice -chiura-",

"beef",

"beetroot",

"bethu ko saag",

"bitter gourd",

"black lentils",

"black beans",

"bottle gourd -lauka-",

"bread",

"brinjal",

"broad beans -bakullo-",

"broccoli",

"buff meat",

"butter",

"cabbage",

"capsicum",

"carrot",

"cassava -ghar tarul-",

"cauliflower",

"chayote-iskus-",

"cheese",

"chicken gizzards",

"chicken",

"chickpeas",

"chili pepper -khursani-",

"chili powder",

"chowmein noodles",

"cinnamon",

"coriander -dhaniya-",

"corn",

"cornflakec",

"crab meat",

"cucumber",

"egg",

"farsi ko munta",

"fiddlehead ferns -niguro-",

"fish",

"garden peas",

"garden cress-chamsur ko saag-",

"garlic",

"ginger",

"green brinjal",

"green lentils",

"green mint -pudina-",

"green peas",

"green soyabean -hariyo bhatmas-",

"gundruk",

"ham",

"ice",

"jack fruit",

"ketchup",

"lapsi -nepali hog plum-",

"lemon -nimbu-",

"lime -kagati-",

"long beans -bodi-",

"masyaura",

"milk",

"minced meat",

"moringa leaves -sajyun ko munta-",

"mushroom",

"mutton",

"nutrela -soya chunks-",

"okra -bhindi-",

"olive oil",

"onion leaves",

"onion",

"orange",

"palak -indian spinach-",

"palungo -nepali spinach-",

"paneer",

"papaya",

"pea",

"pear",

"pointed gourd -chuche karela-",

"pork",

"potato",

"pumpkin -farsi-",

"radish",

"rahar ko daal",

"rayo ko saag",

"red beans",

"red lentils",

"rice -chamal-",

"sajjyun -moringa drumsticks-",

"salt",

"sausage",

"snake gourd -chichindo-",

"soy sauce",

"soyabean -bhatmas-",

"sponge gourd -ghiraula-",

"stinging nettle -sisnu-",

"strawberry",

"sugar",

"sweet potato -suthuni-",

"taro leaves -karkalo-",

"taro root-pidalu-",

"thukpa noodles",

"tofu",

"tomato",

"tori ko saag",

"tree tomato -rukh tamatar-",

"turnip",

"wallnut",

"water melon",

"wheat",

"yellow lentils",

"kimchi",

"mayonnaise",

"noodle",

"seaweed"

] |

ustc-community/dfine-xlarge-obj2coco | ## D-FINE

### **Overview**

The D-FINE model was proposed in [D-FINE: Redefine Regression Task in DETRs as Fine-grained Distribution Refinement](https://arxiv.org/abs/2410.13842) by Yansong Peng, Hebei Li, Peixi Wu, Yueyi Zhang, Xiaoyan Sun, and Feng Wu.

This model was contributed by [VladOS95-cyber](https://github.com/VladOS95-cyber) with the help of [@qubvel-hf](https://huggingface.co/qubvel-hf).

This is the Hugging Face Transformers implementation of D-FINE. The checkpoint name suffix indicates the training data:

- `_coco`: model trained on COCO
- `_obj365`: model trained on Objects365
- `_obj2coco`: model trained on Objects365 and then fine-tuned on COCO

### **Performance**

D-FINE is a powerful real-time object detector that achieves outstanding localization precision by redefining the bounding box regression task in DETR models. It comprises two key components: Fine-grained Distribution Refinement (FDR) and Global Optimal Localization Self-Distillation (GO-LSD).

### **How to use**

```python
import torch
import requests
from PIL import Image
from transformers import DFineForObjectDetection, AutoImageProcessor

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

image_processor = AutoImageProcessor.from_pretrained("ustc-community/dfine-xlarge-obj2coco")
model = DFineForObjectDetection.from_pretrained("ustc-community/dfine-xlarge-obj2coco")

inputs = image_processor(images=image, return_tensors="pt")

with torch.no_grad():
    outputs = model(**inputs)

# target_sizes expects (height, width), hence the reversed PIL size
results = image_processor.post_process_object_detection(outputs, target_sizes=torch.tensor([image.size[::-1]]), threshold=0.3)

for result in results:
    for score, label_id, box in zip(result["scores"], result["labels"], result["boxes"]):
        score, label = score.item(), label_id.item()
        box = [round(i, 2) for i in box.tolist()]
        print(f"{model.config.id2label[label]}: {score:.2f} {box}")
```

### **Training**

D-FINE is trained on COCO (Lin et al. [2014]) train2017 and validated on COCO val2017. We report the standard AP metrics (averaged over uniformly sampled IoU thresholds ranging from 0.50 to 0.95 with a step size of 0.05), and AP<sup>val</sup><sub>5000</sub>, which is commonly used in real scenarios.
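To make the IoU threshold sweep concrete, the sketch below computes the IoU of one predicted box against one ground-truth box and lists the thresholds at which that prediction would count as a match. The boxes are made-up values and `iou` is a hypothetical helper; a real AP computation additionally ranks detections by score and integrates precision over recall, which is not shown here.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds: 0.50, 0.55, ..., 0.95.
thresholds = [round(0.50 + 0.05 * k, 2) for k in range(10)]

prediction = (10, 10, 110, 110)    # made-up predicted box
ground_truth = (20, 20, 120, 120)  # made-up annotated box
overlap = iou(prediction, ground_truth)
matched_at = [t for t in thresholds if overlap >= t]
print(round(overlap, 3), matched_at)
```

A prediction shifted by 10 pixels in each direction still passes the looser thresholds but fails the stricter ones, which is why averaging over the sweep rewards precise localization.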

### **Applications**

D-FINE is ideal for real-time object detection in diverse applications such as **autonomous driving**, **surveillance systems**, **robotics**, and **retail analytics**. Its enhanced flexibility and deployment-friendly design make it suitable for both edge devices and large-scale systems, and it ensures high accuracy and speed in dynamic, real-world environments. | [

"person",

"bicycle",

"car",

"motorbike",

"aeroplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"backpack",

"umbrella",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"sofa",

"pottedplant",

"bed",

"diningtable",

"toilet",

"tvmonitor",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

davanstrien/detr_beyond_words |

# detr_beyond_words (WIP)

[facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) fine-tuned on [Beyond Words](https://github.com/LibraryOfCongress/newspaper-navigator/tree/master/beyond_words_data). | [

"photograph",

"illustration",

"map",

"comics/cartoon",

"editorial cartoon",

"headline",

"advertisement"

] |

facebook/detr-resnet-101-dc5 |

# DETR (End-to-End Object Detection) model with ResNet-101 backbone (dilated C5 stage)

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
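The bounding-box part of this loss can be sketched in plain Python. The example below is a simplification under stated assumptions: both terms are computed on `(x_min, y_min, x_max, y_max)` boxes with positive area, the helper names are hypothetical, and the weights 5 and 2 are illustrative values rather than figures taken from this card.

```python
def box_area(box):
    """Area of an (x_min, y_min, x_max, y_max) box."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def generalized_iou(a, b):
    """Generalized IoU: IoU minus the share of the enclosing box not covered by the union."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    # Smallest axis-aligned box that encloses both inputs.
    enclosing = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return inter / union - (enclosing - union) / enclosing


def box_loss(pred, target, l1_weight=5.0, giou_weight=2.0):
    """Linear combination of L1 distance and (1 - GIoU); weights are assumptions."""
    l1 = sum(abs(p - t) for p, t in zip(pred, target))
    return l1_weight * l1 + giou_weight * (1.0 - generalized_iou(pred, target))


perfect = box_loss((0.1, 0.1, 0.5, 0.5), (0.1, 0.1, 0.5, 0.5))
shifted = box_loss((0.2, 0.2, 0.6, 0.6), (0.1, 0.1, 0.5, 0.5))
print(perfect, round(shifted, 3))
```

Unlike plain IoU, the generalized variant stays informative for boxes that do not overlap at all, because the enclosing-box term still shrinks as the boxes move closer together.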

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python
from transformers import DetrImageProcessor, DetrForObjectDetection
from PIL import Image
import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# DetrImageProcessor supersedes the deprecated DetrFeatureExtractor
image_processor = DetrImageProcessor.from_pretrained('facebook/detr-resnet-101-dc5')
model = DetrForObjectDetection.from_pretrained('facebook/detr-resnet-101-dc5')

inputs = image_processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# model predicts bounding boxes and corresponding COCO classes
logits = outputs.logits
bboxes = outputs.pred_boxes
```

Currently, both the feature extractor and model support PyTorch.
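The raw `logits` hold one score vector per object query, with a trailing "no object" entry in addition to the real classes. A minimal sketch of turning one query's logits into a detection follows; the logit values and the two-class `id2label` mapping are made up for illustration, and `decode_query` is a hypothetical helper rather than a Transformers function.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_query(logits, id2label, threshold=0.9):
    """Return (label, score) for one query, or None if it is not confident enough."""
    probs = softmax(logits)
    class_probs = probs[:-1]  # drop the trailing "no object" probability
    best = max(range(len(class_probs)), key=class_probs.__getitem__)
    score = class_probs[best]
    if score < threshold:
        return None
    return id2label[best], score


id2label = {0: "cat", 1: "remote"}  # toy mapping, not the COCO label set
confident = decode_query([6.0, 0.5, 0.1], id2label)
background = decode_query([0.2, 0.1, 5.0], id2label)
print(confident, background)
```

Most of the 100 queries end up like the second call: their probability mass sits on "no object", so they are discarded.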

## Training data

The DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/google-research/vision_transformer/blob/master/vit_jax/input_pipeline.py).

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).
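The resize and normalization rule can be sketched without any image library. One reading of the rule, assumed here, is that the shortest side is scaled to 800 pixels unless that would push the longest side past 1333, in which case the longest side is scaled to 1333 instead. The helper names are hypothetical and the rounding may differ slightly from the actual implementation.

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def resized_dims(width, height, shortest=800, longest=1333):
    """Target (width, height) that preserves the aspect ratio under the size limits."""
    scale = shortest / min(width, height)
    if max(width, height) * scale > longest:
        scale = longest / max(width, height)
    return round(width * scale), round(height * scale)


def normalize_pixel(rgb):
    """Map an 8-bit RGB pixel to per-channel normalized floats."""
    return tuple((c / 255.0 - m) / s for c, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))


print(resized_dims(640, 480))    # shortest side reaches 800
print(resized_dims(2000, 500))   # longest side capped at 1333
print(normalize_pixel((255, 255, 255)))
```

For very elongated images, such as the second call, the shortest side ends up well below 800 pixels because the cap on the longest side takes precedence.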

### Training

The model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).

## Evaluation results

This model achieves an AP (average precision) of **44.9** on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,

author = {Nicolas Carion and

Francisco Massa and

Gabriel Synnaeve and

Nicolas Usunier and

Alexander Kirillov and

Sergey Zagoruyko},

title = {End-to-End Object Detection with Transformers},

journal = {CoRR},

volume = {abs/2005.12872},

year = {2020},

url = {https://arxiv.org/abs/2005.12872},

archivePrefix = {arXiv},

eprint = {2005.12872},

timestamp = {Thu, 28 May 2020 17:38:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

facebook/detr-resnet-101 |

# DETR (End-to-End Object Detection) model with ResNet-101 backbone

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python
from transformers import DetrImageProcessor, DetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# you can specify the revision tag if you don't want the timm dependency
processor = DetrImageProcessor.from_pretrained("facebook/detr-resnet-101", revision="no_timm")
model = DetrForObjectDetection.from_pretrained("facebook/detr-resnet-101", revision="no_timm")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to COCO API
# let's only keep detections with score > 0.9
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.9)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

This should output (something along the lines of):

```

Detected cat with confidence 0.998 at location [344.06, 24.85, 640.34, 373.74]

Detected remote with confidence 0.997 at location [328.13, 75.93, 372.81, 187.66]

Detected remote with confidence 0.997 at location [39.34, 70.13, 175.56, 118.78]

Detected cat with confidence 0.998 at location [15.36, 51.75, 316.89, 471.16]

Detected couch with confidence 0.995 at location [-0.19, 0.71, 639.73, 474.17]

```

Currently, both the feature extractor and model support PyTorch.

## Training data

The DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/google-research/vision_transformer/blob/master/vit_jax/input_pipeline.py).

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).

### Training

The model was trained for 300 epochs on 16 V100 GPUs. This takes 3 days, with 4 images per GPU (hence a total batch size of 64).

## Evaluation results

This model achieves an AP (average precision) of **43.5** on COCO 2017 validation. For more details regarding evaluation results, we refer to table 1 of the original paper.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,

author = {Nicolas Carion and

Francisco Massa and

Gabriel Synnaeve and

Nicolas Usunier and

Alexander Kirillov and

Sergey Zagoruyko},

title = {End-to-End Object Detection with Transformers},

journal = {CoRR},

volume = {abs/2005.12872},

year = {2020},

url = {https://arxiv.org/abs/2005.12872},

archivePrefix = {arXiv},

eprint = {2005.12872},

timestamp = {Thu, 28 May 2020 17:38:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

facebook/detr-resnet-50-dc5 |

# DETR (End-to-End Object Detection) model with ResNet-50 backbone (dilated C5 stage)

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python
from transformers import DetrImageProcessor, DetrForObjectDetection
from PIL import Image
import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# DetrImageProcessor supersedes the deprecated DetrFeatureExtractor
image_processor = DetrImageProcessor.from_pretrained('facebook/detr-resnet-50-dc5')
model = DetrForObjectDetection.from_pretrained('facebook/detr-resnet-50-dc5')

inputs = image_processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# model predicts bounding boxes and corresponding COCO classes
logits = outputs.logits
bboxes = outputs.pred_boxes
```

Currently, both the feature extractor and model support PyTorch.

## Training data

The DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/google-research/vision_transformer/blob/master/vit_jax/input_pipeline.py).

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).
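As a rough illustration of the resizing and normalization described above, the sketch below computes the target size for an image and normalizes a single pixel. It assumes the common reading of the rule (scale the shorter side to 800 while keeping the aspect ratio, unless that would push the longer side past 1333) and that pixel values are rescaled to [0, 1] before normalization. The helper names are hypothetical.

```python
def resized_size(width, height, shortest=800, longest=1333):
    """Return (new_width, new_height) keeping the aspect ratio."""
    scale = shortest / min(width, height)
    if max(width, height) * scale > longest:
        # Scaling the shorter side to `shortest` would overshoot the cap.
        scale = longest / max(width, height)
    return round(width * scale), round(height * scale)


IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Normalize one RGB pixel given as 0-255 integers."""
    return tuple((c / 255 - m) / s for c, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))


print(resized_size(640, 480))   # shorter side 480 is scaled to 800
print(resized_size(2000, 500))  # limited by the 1333 cap on the longer side
print(normalize_pixel((255, 255, 255)))
```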

### Training

The model was trained for 300 epochs on 16 V100 GPUs. Training took 3 days, with 4 images per GPU (hence a total batch size of 64).

## Evaluation results

This model achieves an AP (average precision) of **43.3** on COCO 2017 validation. For more details on the evaluation results, see Table 1 of the original paper.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,

author = {Nicolas Carion and

Francisco Massa and

Gabriel Synnaeve and

Nicolas Usunier and

Alexander Kirillov and

Sergey Zagoruyko},

title = {End-to-End Object Detection with Transformers},

journal = {CoRR},

volume = {abs/2005.12872},

year = {2020},

url = {https://arxiv.org/abs/2005.12872},

archivePrefix = {arXiv},

eprint = {2005.12872},

timestamp = {Thu, 28 May 2020 17:38:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

facebook/detr-resnet-50 |

# DETR (End-to-End Object Detection) model with ResNet-50 backbone

DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [End-to-End Object Detection with Transformers](https://arxiv.org/abs/2005.12872) by Carion et al. and first released in [this repository](https://github.com/facebookresearch/detr).

Disclaimer: The team releasing DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
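The bounding-box part of the loss can be written out for a single matched pair. The sketch below is an illustration in plain Python, not the training code: it assumes boxes in normalized `(center_x, center_y, width, height)` format, and the weights 5 and 2 for the L1 and generalized IoU terms are assumptions chosen for the example.

```python
def to_corners(box):
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def generalized_iou(a, b):
    """GIoU of two boxes given as (x0, y0, x1, y1) corners."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    # Area of the smallest axis-aligned box enclosing both inputs.
    hull = (max(ax1, bx1) - min(ax0, bx0)) * (max(ay1, by1) - min(ay0, by0))
    return inter / union - (hull - union) / hull


def box_loss(pred, target, l1_weight=5.0, giou_weight=2.0):
    """Linear combination of L1 distance and (1 - GIoU) for one matched pair."""
    l1 = sum(abs(p - t) for p, t in zip(pred, target))
    giou = generalized_iou(to_corners(pred), to_corners(target))
    return l1_weight * l1 + giou_weight * (1.0 - giou)


# Hypothetical boxes: a perfect prediction and a completely disjoint one.
target = (0.8, 0.8, 0.2, 0.2)
print(box_loss(target, target))
print(box_loss((0.2, 0.2, 0.2, 0.2), target))
```

Unlike plain IoU, the generalized IoU term still changes with the distance between boxes that do not overlap, so the disjoint prediction receives a graded penalty rather than a constant one.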

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=facebook/detr) to look for all available DETR models.

### How to use

Here is how to use this model:

```python
from transformers import DetrImageProcessor, DetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# you can specify the revision tag if you don't want the timm dependency
processor = DetrImageProcessor.from_pretrained("facebook/detr-resnet-50", revision="no_timm")
model = DetrForObjectDetection.from_pretrained("facebook/detr-resnet-50", revision="no_timm")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute (x_min, y_min, x_max, y_max) boxes
# let's only keep detections with score > 0.9
target_sizes = torch.tensor([image.size[::-1]])  # PIL size is (width, height); we need (height, width)
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.9)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

This should output:

```

Detected remote with confidence 0.998 at location [40.16, 70.81, 175.55, 117.98]

Detected remote with confidence 0.996 at location [333.24, 72.55, 368.33, 187.66]

Detected couch with confidence 0.995 at location [-0.02, 1.15, 639.73, 473.76]

Detected cat with confidence 0.999 at location [13.24, 52.05, 314.02, 470.93]

Detected cat with confidence 0.999 at location [345.4, 23.85, 640.37, 368.72]

```

Currently, both the image processor (formerly called the feature extractor) and the model support PyTorch.

## Training data

The DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found in the [original DETR repository](https://github.com/facebookresearch/detr).

Images are resized/rescaled such that the shortest side is at least 800 pixels and the largest side at most 1333 pixels, and normalized across the RGB channels with the ImageNet mean (0.485, 0.456, 0.406) and standard deviation (0.229, 0.224, 0.225).

### Training

The model was trained for 300 epochs on 16 V100 GPUs. Training took 3 days, with 4 images per GPU (hence a total batch size of 64).
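The batch-size arithmetic can be spelled out as follows. This is a back-of-the-envelope sketch: the training-set size uses the rounded "118k" figure from this card, and it assumes the last partial batch of each epoch is kept, so the step counts are approximate.

```python
import math

num_gpus = 16
images_per_gpu = 4
train_images = 118_000  # rounded "118k" figure, not the exact image count
epochs = 300

batch_size = num_gpus * images_per_gpu
steps_per_epoch = math.ceil(train_images / batch_size)
total_steps = steps_per_epoch * epochs
print(batch_size, steps_per_epoch, total_steps)
```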

## Evaluation results

This model achieves an AP (average precision) of **42.0** on COCO 2017 validation. For more details on the evaluation results, see Table 1 of the original paper.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,

author = {Nicolas Carion and

Francisco Massa and

Gabriel Synnaeve and

Nicolas Usunier and

Alexander Kirillov and

Sergey Zagoruyko},

title = {End-to-End Object Detection with Transformers},

journal = {CoRR},

volume = {abs/2005.12872},

year = {2020},

url = {https://arxiv.org/abs/2005.12872},

archivePrefix = {arXiv},

eprint = {2005.12872},

timestamp = {Thu, 28 May 2020 17:38:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` | [

"n/a",

"person",

"traffic light",

"fire hydrant",

"street sign",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"bicycle",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"hat",

"backpack",

"umbrella",

"shoe",

"car",

"eye glasses",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"motorcycle",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"plate",

"wine glass",

"cup",

"fork",

"knife",

"airplane",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"bus",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"mirror",

"dining table",

"window",

"desk",

"train",

"toilet",

"door",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"truck",

"toaster",

"sink",

"refrigerator",

"blender",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"boat",

"toothbrush"

] |

SenseTime/deformable-detr-single-scale-dc5 |

# Deformable DETR model with ResNet-50 backbone, single scale + dilation

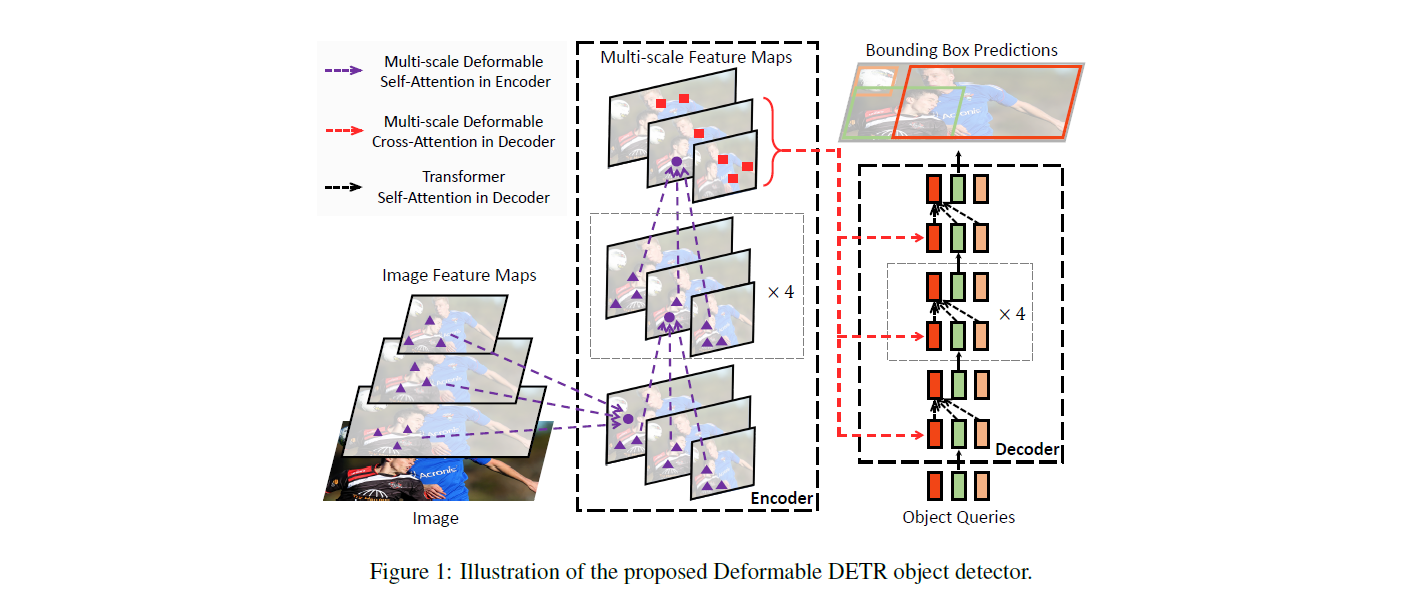

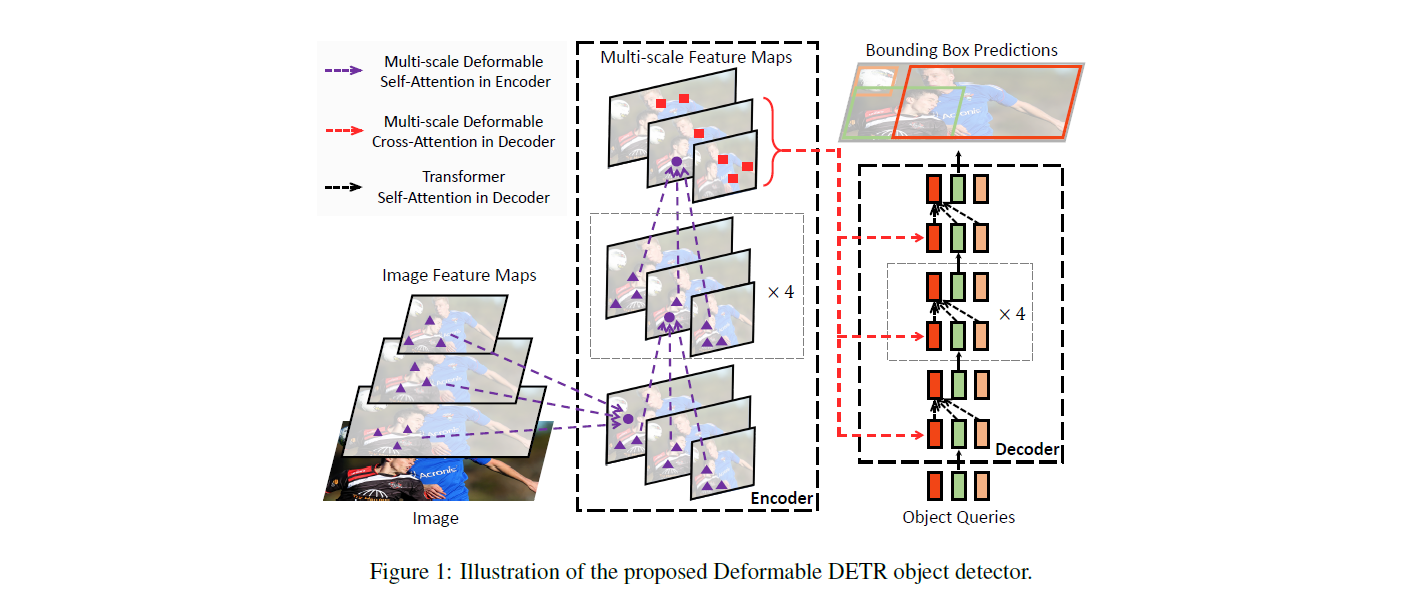

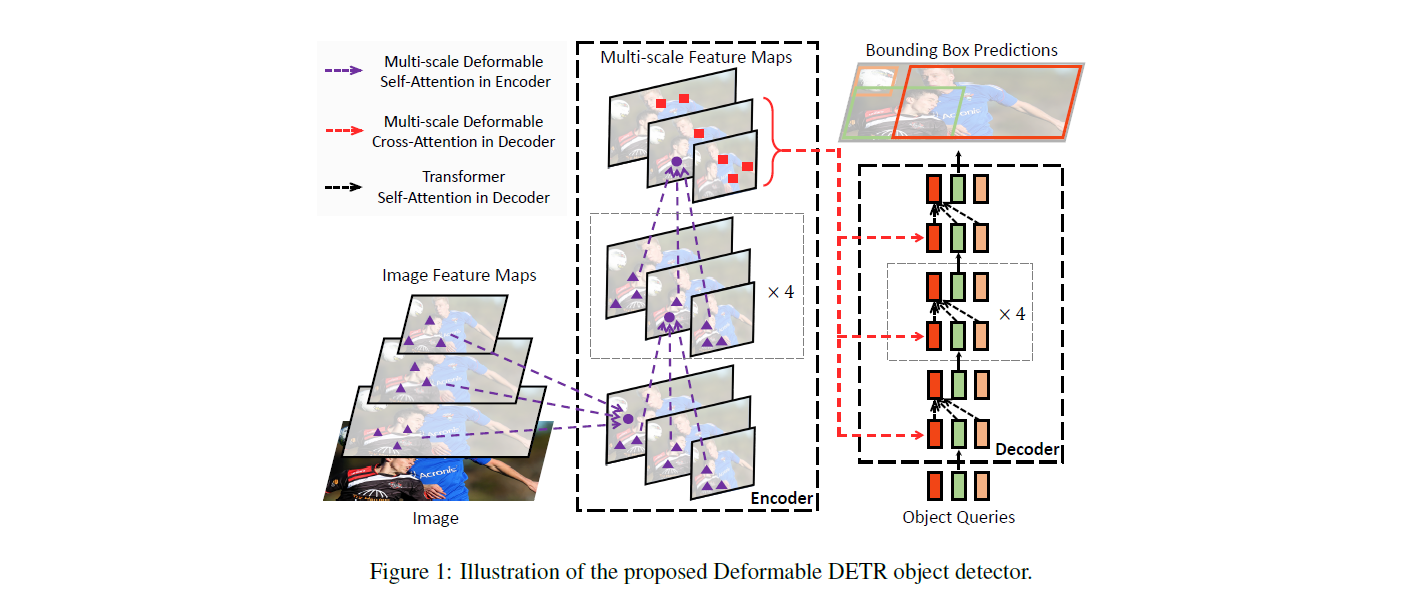

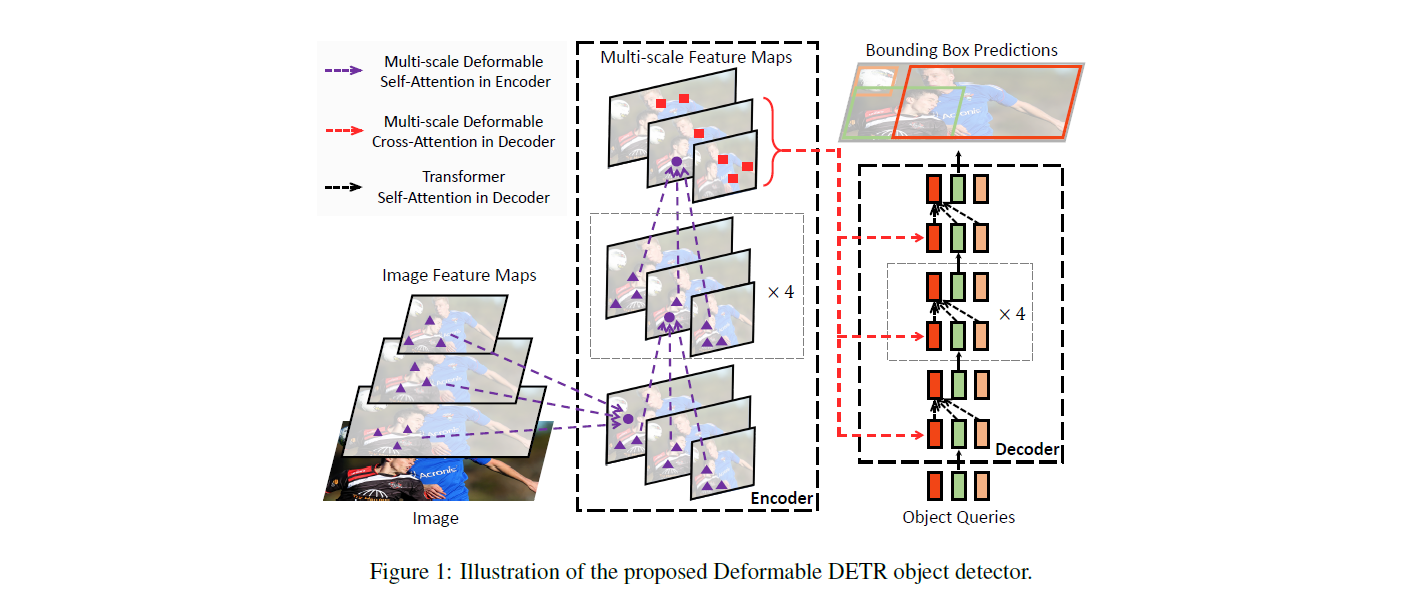

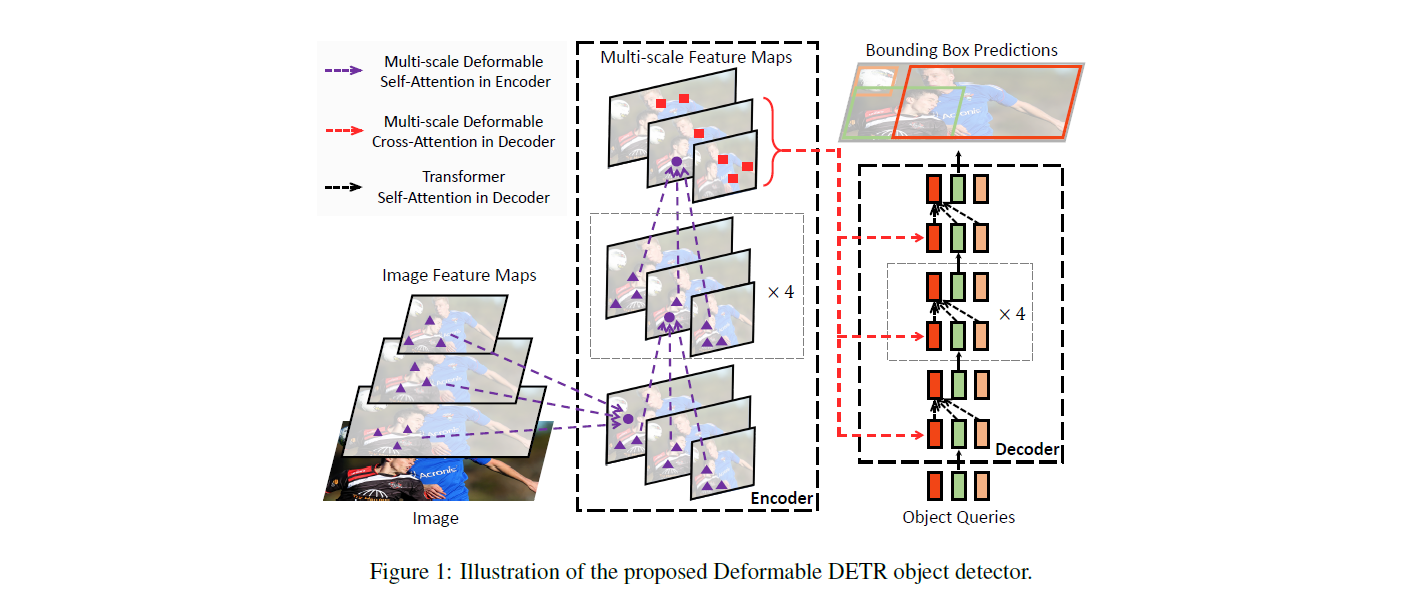

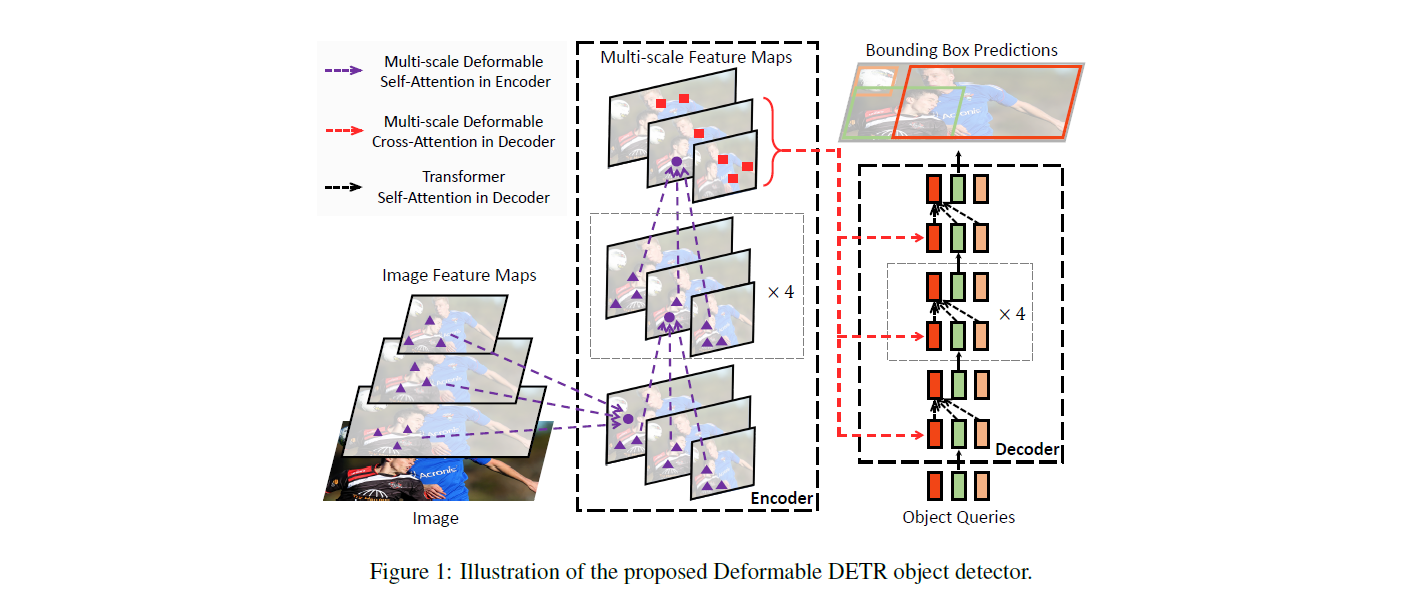

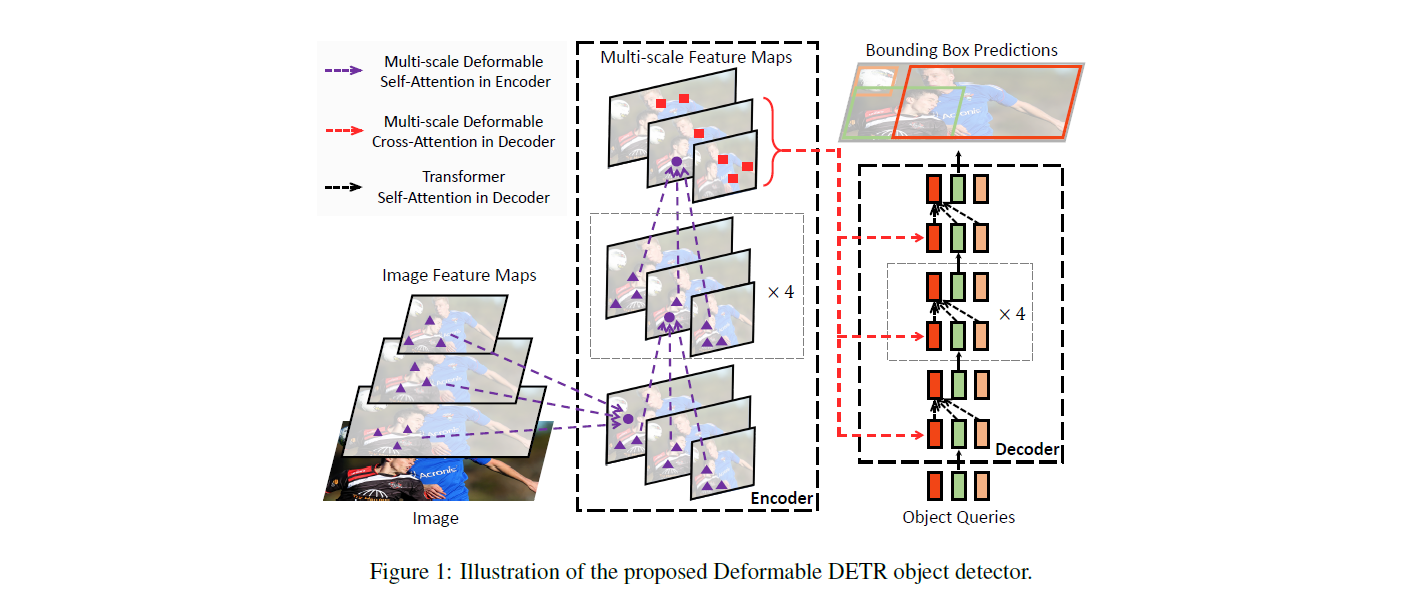

Deformable DEtection TRansformer (DETR) single scale + dilation model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159) by Zhu et al. and first released in [this repository](https://github.com/fundamentalvision/Deformable-DETR).

Disclaimer: The team releasing Deformable DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
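The padding step in the paragraph above can be sketched in a few lines. The example is illustrative only: the annotation format (a class name plus a box tuple) and the `pad_targets` helper are hypothetical, chosen to mirror the "4 objects, 96 padded entries" case.

```python
NO_OBJECT = "no object"


def pad_targets(targets, num_queries=100):
    """Pad a list of (class_name, box) annotations up to num_queries entries."""
    if len(targets) > num_queries:
        raise ValueError("more annotations than object queries")
    padding = [(NO_OBJECT, None)] * (num_queries - len(targets))
    return list(targets) + padding


# Hypothetical image with 4 annotated objects (boxes are made-up values).
targets = [
    ("cat", (0.30, 0.50, 0.40, 0.80)),
    ("cat", (0.75, 0.40, 0.45, 0.70)),
    ("remote", (0.15, 0.20, 0.20, 0.10)),
    ("couch", (0.50, 0.50, 1.00, 1.00)),
]
padded = pad_targets(targets)
num_padding = sum(1 for cls, _ in padded if cls == NO_OBJECT)
print(len(padded), num_padding)
```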

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=sensetime/deformable-detr) to look for all available Deformable DETR models.

### How to use

Here is how to use this model:

```python
from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr-single-scale-dc5")
model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr-single-scale-dc5")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute (x_min, y_min, x_max, y_max) boxes
# let's only keep detections with score > 0.7
target_sizes = torch.tensor([image.size[::-1]])  # PIL size is (width, height); we need (height, width)
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

Currently, both the image processor (formerly called the feature extractor) and the model support PyTorch.

## Training data

The Deformable DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex

@misc{https://doi.org/10.48550/arxiv.2010.04159,

doi = {10.48550/ARXIV.2010.04159},

url = {https://arxiv.org/abs/2010.04159},

author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},

keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},

publisher = {arXiv},

year = {2020},

copyright = {arXiv.org perpetual, non-exclusive license}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

SenseTime/deformable-detr-single-scale |

# Deformable DETR model with ResNet-50 backbone, single scale

Deformable DEtection TRansformer (DETR), single scale model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159) by Zhu et al. and first released in [this repository](https://github.com/fundamentalvision/Deformable-DETR).

Disclaimer: The team releasing Deformable DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.
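The classification part of the loss can be sketched once a matching is given. The example below is a simplified illustration in plain Python: unmatched queries are assigned the "no object" class, and the loss is an unweighted mean cross-entropy over all queries. Any per-class weighting used in real training is omitted, and the function names and numbers are hypothetical.

```python
import math


def cross_entropy(logits, target_index):
    """Cross-entropy of one query's logits against an integer class target."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[target_index]


def classification_loss(all_logits, matched_labels, no_object_index):
    """Mean cross-entropy over queries; unmatched queries target "no object"."""
    losses = []
    for query_index, logits in enumerate(all_logits):
        target = matched_labels.get(query_index, no_object_index)
        losses.append(cross_entropy(logits, target))
    return sum(losses) / len(losses)


# Hypothetical setup: 3 queries, 2 object classes, index 2 is "no object".
all_logits = [
    [0.2, 3.0, 0.1],   # matched to an object of class 1
    [0.0, 0.1, 2.5],   # unmatched, should predict "no object"
    [1.5, 0.2, 0.3],   # unmatched, but leaning towards class 0
]
matched_labels = {0: 1}  # query index -> ground-truth class from the matching
loss = classification_loss(all_logits, matched_labels, no_object_index=2)
print(round(loss, 3))
```

The third query contributes most of the loss here, since it is unmatched but assigns most of its probability to an object class.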

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=sensetime/deformable-detr) to look for all available Deformable DETR models.

### How to use

Here is how to use this model:

```python
from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr-single-scale")
model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr-single-scale")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute (x_min, y_min, x_max, y_max) boxes
# let's only keep detections with score > 0.7
target_sizes = torch.tensor([image.size[::-1]])  # PIL size is (width, height); we need (height, width)
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

Currently, both the image processor (formerly called the feature extractor) and the model support PyTorch.

## Training data

The Deformable DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex

@misc{https://doi.org/10.48550/arxiv.2010.04159,

doi = {10.48550/ARXIV.2010.04159},

url = {https://arxiv.org/abs/2010.04159},

author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},

keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},

publisher = {arXiv},

year = {2020},

copyright = {arXiv.org perpetual, non-exclusive license}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

SenseTime/deformable-detr-with-box-refine-two-stage |

# Deformable DETR model with ResNet-50 backbone, with box refinement and two stage

Deformable DEtection TRansformer (DETR), with box refinement and two stage model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159) by Zhu et al. and first released in [this repository](https://github.com/fundamentalvision/Deformable-DETR).

Disclaimer: The team releasing Deformable DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=sensetime/deformable-detr) to look for all available Deformable DETR models.

### How to use

Here is how to use this model:

```python
from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr-with-box-refine-two-stage")
model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr-with-box-refine-two-stage")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute (x_min, y_min, x_max, y_max) boxes
# let's only keep detections with score > 0.7
target_sizes = torch.tensor([image.size[::-1]])  # PIL size is (width, height); we need (height, width)
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

Currently, both the image processor (formerly called the feature extractor) and the model support PyTorch.

## Training data

The Deformable DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex

@misc{https://doi.org/10.48550/arxiv.2010.04159,

doi = {10.48550/ARXIV.2010.04159},

url = {https://arxiv.org/abs/2010.04159},

author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},

keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},

publisher = {arXiv},

year = {2020},

copyright = {arXiv.org perpetual, non-exclusive license}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

SenseTime/deformable-detr-with-box-refine |

# Deformable DETR model with ResNet-50 backbone, with box refinement

Deformable DEtection TRansformer (DETR), with box refinement trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159) by Zhu et al. and first released in [this repository](https://github.com/fundamentalvision/Deformable-DETR).

Disclaimer: The team releasing Deformable DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=sensetime/deformable-detr) to look for all available Deformable DETR models.

### How to use

Here is how to use this model:

```python
from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr-with-box-refine")
model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr-with-box-refine")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute (x_min, y_min, x_max, y_max) boxes
# let's only keep detections with score > 0.7
target_sizes = torch.tensor([image.size[::-1]])  # PIL size is (width, height); we need (height, width)
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

Currently, both the image processor (formerly called the feature extractor) and the model support PyTorch.

## Training data

The Deformable DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex

@misc{https://doi.org/10.48550/arxiv.2010.04159,

doi = {10.48550/ARXIV.2010.04159},

url = {https://arxiv.org/abs/2010.04159},

author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},

keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},

publisher = {arXiv},

year = {2020},

copyright = {arXiv.org perpetual, non-exclusive license}

}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

SenseTime/deformable-detr |

# Deformable DETR model with ResNet-50 backbone

Deformable DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159) by Zhu et al. and first released in [this repository](https://github.com/fundamentalvision/Deformable-DETR).

Disclaimer: The team releasing Deformable DETR did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs in order to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For COCO, the number of object queries is set to 100.

The model is trained using a "bipartite matching loss": one compares the predicted classes + bounding boxes of each of the N = 100 object queries to the ground truth annotations, padded up to the same length N (so if an image only contains 4 objects, 96 annotations will just have a "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between each of the N queries and each of the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=sensetime/deformable-detr) to look for all available Deformable DETR models.

### How to use

Here is how to use this model:

```python
from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr")
model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to absolute (x_min, y_min, x_max, y_max) boxes
# let's only keep detections with score > 0.7
target_sizes = torch.tensor([image.size[::-1]])  # PIL size is (width, height); we need (height, width)
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

This should output:

```
Detected cat with confidence 0.856 at location [342.19, 24.3, 640.02, 372.25]
Detected remote with confidence 0.739 at location [40.79, 72.78, 176.76, 117.25]
Detected cat with confidence 0.859 at location [16.5, 52.84, 318.25, 470.78]
```
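The locations printed above are absolute `(x_min, y_min, x_max, y_max)` pixel coordinates, whereas DETR-style models predict boxes as normalized `(center_x, center_y, width, height)`. The following minimal sketch shows the kind of conversion and score filtering that the post-processing step performs. The helper names are hypothetical and the code is a plain-Python illustration, not the library's implementation.

```python
def to_absolute_xyxy(box, image_width, image_height):
    """Convert a normalized (cx, cy, w, h) box to absolute (x_min, y_min, x_max, y_max)."""
    cx, cy, w, h = box
    return [
        (cx - w / 2) * image_width,
        (cy - h / 2) * image_height,
        (cx + w / 2) * image_width,
        (cy + h / 2) * image_height,
    ]


def keep_confident(scores, boxes, image_width, image_height, threshold=0.7):
    """Keep (score, absolute box) pairs whose score is strictly above the threshold."""
    return [
        (score, to_absolute_xyxy(box, image_width, image_height))
        for score, box in zip(scores, boxes)
        if score > threshold
    ]


# Hypothetical predictions for a 640x480 image (width x height).
scores = [0.9, 0.4]
boxes = [[0.5, 0.5, 0.5, 0.25], [0.1, 0.1, 0.2, 0.2]]
detections = keep_confident(scores, boxes, image_width=640, image_height=480)
print(detections)
```

Note that `target_sizes` in the snippet above is built from `image.size[::-1]`, that is `(height, width)`, because PIL reports sizes as `(width, height)`.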

Currently, both the image processor and the model support PyTorch.

## Training data

The Deformable DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex

@misc{https://doi.org/10.48550/arxiv.2010.04159,
  doi = {10.48550/ARXIV.2010.04159},
  url = {https://arxiv.org/abs/2010.04159},
  author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},
  keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},
  title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},
  publisher = {arXiv},
  year = {2020},
  copyright = {arXiv.org perpetual, non-exclusive license}
}

``` | [

"n/a",

"person",

"bicycle",

"car",

"motorcycle",

"airplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"n/a",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"n/a",

"backpack",

"umbrella",

"n/a",

"n/a",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"n/a",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"couch",

"potted plant",

"bed",

"n/a",

"dining table",

"n/a",

"n/a",

"toilet",

"n/a",

"tv",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"n/a",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

TahaDouaji/detr-doc-table-detection |

# Model Card for detr-doc-table-detection

# Model Details

detr-doc-table-detection is a model trained to detect both **Bordered** and **Borderless** tables in documents, based on [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50).

- **Developed by:** Taha Douaji

- **Shared by [Optional]:** Taha Douaji

- **Model type:** Object Detection

- **Language(s) (NLP):** More information needed

- **License:** More information needed

- **Parent Model:** [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50)

- **Resources for more information:**

- [Model Demo Space](https://huggingface.co/spaces/trevbeers/pdf-table-extraction)

- [Associated Paper](https://arxiv.org/abs/2005.12872)

# Uses

## Direct Use

This model can be used for the task of object detection.

## Out-of-Scope Use

The model should not be used to intentionally create hostile or alienating environments for people.

# Bias, Risks, and Limitations

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)). Predictions generated by the model may include disturbing and harmful stereotypes across protected classes; identity characteristics; and sensitive, social, and occupational groups.

## Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

# Training Details

## Training Data

The model was trained on the ICDAR2019 Table Dataset.

# Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

# Citation

**BibTeX:**

```bibtex

@article{DBLP:journals/corr/abs-2005-12872,
  author        = {Nicolas Carion and
                   Francisco Massa and
                   Gabriel Synnaeve and
                   Nicolas Usunier and
                   Alexander Kirillov and
                   Sergey Zagoruyko},
  title         = {End-to-End Object Detection with Transformers},
  journal       = {CoRR},
  volume        = {abs/2005.12872},
  year          = {2020},
  url           = {https://arxiv.org/abs/2005.12872},
  archivePrefix = {arXiv},
  eprint        = {2005.12872},
  timestamp     = {Thu, 28 May 2020 17:38:09 +0200},
  biburl        = {https://dblp.org/rec/journals/corr/abs-2005-12872.bib},
  bibsource     = {dblp computer science bibliography, https://dblp.org}
}

```

# Model Card Authors [optional]

Taha Douaji in collaboration with Ezi Ozoani and the Hugging Face team

# Model Card Contact

More information needed

# How to Get Started with the Model

Use the code below to get started with the model.

```python

from transformers import DetrImageProcessor, DetrForObjectDetection
import torch
from PIL import Image
import requests

image = Image.open("IMAGE_PATH")

processor = DetrImageProcessor.from_pretrained("TahaDouaji/detr-doc-table-detection")
model = DetrForObjectDetection.from_pretrained("TahaDouaji/detr-doc-table-detection")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to COCO API
# let's only keep detections with score > 0.9
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.9)[0]