The full dataset viewer is not available. Only showing a preview of the rows.

Error code: DatasetGenerationError

Exception: ParserError

Message: Error tokenizing data. C error: Expected 3 fields in line 9, saw 4

Traceback: Traceback (most recent call last):

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1995, in _prepare_split_single

for _, table in generator:

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/packaged_modules/csv/csv.py", line 195, in _generate_tables

for batch_idx, df in enumerate(csv_file_reader):

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/readers.py", line 1843, in __next__

return self.get_chunk()

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/readers.py", line 1985, in get_chunk

return self.read(nrows=size)

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/readers.py", line 1923, in read

) = self._engine.read( # type: ignore[attr-defined]

File "/src/services/worker/.venv/lib/python3.9/site-packages/pandas/io/parsers/c_parser_wrapper.py", line 234, in read

chunks = self._reader.read_low_memory(nrows)

File "parsers.pyx", line 850, in pandas._libs.parsers.TextReader.read_low_memory

File "parsers.pyx", line 905, in pandas._libs.parsers.TextReader._read_rows

File "parsers.pyx", line 874, in pandas._libs.parsers.TextReader._tokenize_rows

File "parsers.pyx", line 891, in pandas._libs.parsers.TextReader._check_tokenize_status

File "parsers.pyx", line 2061, in pandas._libs.parsers.raise_parser_error

pandas.errors.ParserError: Error tokenizing data. C error: Expected 3 fields in line 9, saw 4

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 1529, in compute_config_parquet_and_info_response

parquet_operations = convert_to_parquet(builder)

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 1154, in convert_to_parquet

builder.download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1027, in download_and_prepare

self._download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1122, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1882, in _prepare_split

for job_id, done, content in self._prepare_split_single(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 2038, in _prepare_split_single

raise DatasetGenerationError("An error occurred while generating the dataset") from e

datasets.exceptions.DatasetGenerationError: An error occurred while generating the dataset
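The `ParserError` above means the CSV reader inferred three columns and then met a line with four fields. The actual file is not shown here, so the following is only a minimal sketch under assumptions: `SAMPLE`, `find_bad_rows`, and `write_rows` are hypothetical names, and the sample data is invented to mimic a row whose unquoted comma adds an extra field. It uses Python's standard `csv` module to locate rows with an unexpected width and to show how quoting keeps such a value in one field.

```python
import csv
import io

# Hypothetical stand-in for the failing CSV (the real file is not shown):
# a 3-column header, seven well-formed rows, then a 9th line whose
# unquoted comma splits it into 4 fields.
SAMPLE = "\n".join(
    ["id,label,text"]
    + [f"{i},0,ok" for i in range(7)]
    + ["7,1,thanks, MIT"]
) + "\n"


def find_bad_rows(text, expected_fields):
    """Return (line_number, field_count) for every row of unexpected width."""
    reader = csv.reader(io.StringIO(text))
    bad = []
    for row in reader:
        if len(row) != expected_fields:
            # reader.line_num is the 1-based count of source lines read so far
            bad.append((reader.line_num, len(row)))
    return bad


def write_rows(rows):
    """Serialize rows so that fields containing commas are quoted."""
    buf = io.StringIO()
    csv.writer(buf, quoting=csv.QUOTE_MINIMAL).writerows(rows)
    return buf.getvalue()


print(find_bad_rows(SAMPLE, 3))  # [(9, 4)]

# Writing the same values through csv.writer quotes the comma-bearing field,
# so the row reads back with three fields.
fixed = write_rows([["id", "label", "text"], ["7", "1", "thanks, MIT"]])
print(find_bad_rows(fixed, 3))  # []
```

If repairing the source file is not an option, `pandas.read_csv` also accepts `on_bad_lines="skip"` (pandas 1.3 and later), which drops rows that have too many fields instead of raising. Whether that trade-off is acceptable for this dataset is a judgment call, since skipped rows are silently lost.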

datasplit (string) | comment (string) | video_id (string) | video_name (string) | playlist_name (string) | annotator_H1_general (float64) | annotator_H1_setup (float64) | annotator_H1_pedagogy (float64) | annotator_H1_confusion (float64) | annotator_H1_gratitude (float64) | annotator_H1_personal_experience (float64) | annotator_H1_clarification (float64) | annotator_H1_bookmark (float64) | annotator_H1_non_english (float64) | annotator_H1_na (float64) | annotator_H2_general (float64) | annotator_H2_setup (float64) | annotator_H2_pedagogy (float64) | annotator_H2_confusion (float64) | annotator_H2_gratitude (float64) | annotator_H2_personal_experience (float64) | annotator_H2_clarification (float64) | annotator_H2_bookmark (float64) | annotator_H2_non_english (float64) | annotator_H2_na (float64) | comment_id (float64) | annotator_openaichat_v1_gratitude (float64) | annotator_openaichat_v0_setup (float64) | annotator_openaichat_v8_confusion (float64) | annotator_openaichat_v47_pedagogy (float64) | annotator_openaichat_v0_non_english (float64) | annotator_openaichat_v8_bookmark (float64) | annotator_openaichat_v1_clarification (float64) | annotator_openaichat_v39_general (float64) | annotator_openaichat_v45_personal_experience (float64) | annotator_openaichat_v3_na (float64) | annotator_openaichat_v46_personal_experience (float64) | annotator_openaichat_v47_personal_experience (float64) | annotator_openaichat_v48_personal_experience (float64) | annotator_openaichat_v49_personal_experience (float64) | annotator_openaichat_v50_personal_experience (float64) | annotator_openaichat_v40_general (float64) | annotator_openaichat_v48_pedagogy (float64) | annotator_openaichat_v49_pedagogy (float64) | annotator_openaichat_v50_pedagogy (float64) | annotator_openaichat_v51_pedagogy (float64) | annotator_openaichat_v52_pedagogy (float64) | annotator_openaichat_v53_pedagogy (float64) | annotator_openaichat_v54_pedagogy (float64) | annotator_openaichat_v55_pedagogy (float64) | annotator_openaichat_v56_pedagogy (float64) | annotator_openaichat_v57_pedagogy (float64) | annotator_openaichat_v58_pedagogy (float64) | annotator_openaichat_v59_pedagogy (float64) | annotator_openaichat_v60_pedagogy (float64) | annotator_openaichat_v61_pedagogy (float64) | annotator_openaichat_v62_pedagogy (float64) | annotator_openaichat_v63_pedagogy (float64) | annotator_openaichat_v64_pedagogy (float64) | annotator_openaichat_v65_pedagogy (float64) | annotator_openaichat_v66_pedagogy (float64) | annotator_openaichat_v67_pedagogy (float64) | annotator_openaichat_v68_pedagogy (float64) | annotator_openaichat_v69_pedagogy (float64) | annotator_openaichat_v70_pedagogy (float64) | annotator_openaichat_v71_pedagogy (float64) | annotator_openaichat_v72_pedagogy (float64) | annotator_openaichat_v73_pedagogy (float64) | 68 (null) | annotator_openaichat_v51_personal_experience (float64) | annotator_openaichat_v74_pedagogy (float64) | annotator_openaichat_v52_personal_experience (float64) | annotator_openaichat_v73b_pedagogy (float64) | annotator_openaichat_v73c_pedagogy (float64) | annotator_openaichat_v73d_pedagogy (float64) | annotator_openaichat_v74_na (float64) | annotator_openaichat_v74_clarification (float64) | annotator_openaichat_v74_non_english (float64) | annotator_openaichat_v74_gratitude (float64) | annotator_openaichat_v74_general (float64) | annotator_openaichat_v74_confusion (float64) | annotator_openaichat_v74_setup (float64) | annotator_openaichat_v75_pedagogy (float64) | annotator_openaichat_v75_clarification (float64) | annotator_openaichat_v75_personal_experience (float64) | annotator_openaichat_v75_confusion (float64) | annotator_openaichat_v75_non_english (float64) | annotator_openaichat_v75_na (float64) | annotator_openaichat_v75_setup (float64) | annotator_openaichat_v75_general (float64) | annotator_openaichat_v75_gratitude (float64) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

validation | Determinant has been determined. Thank you MIT! | srxexLishgY | 18. Properties of Determinants | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 54 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 |

validation | 19:40 if you turn the volume down everything he says changes its meaning | tzoYhe3H5dM | Lec 31: Stokes' theorem | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

validation | "Be assured it works just the same way if you have 10,000 variables" | PxCxlsl_YwY | Lec 1: Dot product | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | I suppose that’s why MIT is not for every student and why it is difficult and costly to get into. This course review requires a special level of mastery of the material, which assumes a great deal of understanding and problem practice throughout the course. When a matrix is not square and doesn’t have a unique solution (independent rows or columns) all kinds of scenarios could happen. Adding to that, whether the matrix is orthogonal, orthonormal, symmetric, positive or semi definite, as well as others involving Eigenvectors, values, pivots and determinants. I suppose that the best way to wrap all that information around the head is to start with some simple 2 by 2 or 3 by 3 matrix and experiment with all different scenarios using a good software package to spit out the output and see how the theory works. I am using scientific notebook. | RWvi4Vx4CDc | 34. Final Course Review | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 86 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | null | 0 | 1 | null | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | 21:12 In fact, if we normalize x1, x2, x3 to q1, q2, q3, then A = 0*q1*q1^T + c*q2*q2^T + 2*q3*q3^T (since q's are orthonormal). Any c, which is not necessarily real, can make A symmetric. ---can anyone help me check this statement? I havent found any comment discussing this yet. | HgC1l_6ySkc | 32. Quiz 3 Review | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

validation | form is pathetic | J7DzL2_Na80 | 1. The Geometry of Linear Equations | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 176 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | 34:43 why "directional second derivative" would not give us a clue of whether it is a min or max? I thought it is a promising way. hmmm. | 15HVevXRsBA | Lec 13: Lagrange multipliers | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 12 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

validation | FCP | hwDRfkPSXng | Lecture 32: ImageNet is a Convolutional Neural Network (CNN), The Convolution Rule | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 58 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

validation | Thank you very much! Amazing lectures! | RWvi4Vx4CDc | 34. Final Course Review | MIT 18.06 Linear Algebra, Spring 2005 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 131 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

validation | @seisdoesmatter The chalk's really awesome. Looks thicker than ordinary chalk. It seems a bit like the chalk kids use to paint on the pavement! | srxexLishgY | 18. Properties of Determinants | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 27 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

validation | His teaching style seems casual and intuitive. I go to a small public college and the course is much more formal and proof driven. These lectures are a great addition to (as well as a nice break from) formal proofs. Thanks MIT! | yjBerM5jWsc | 9. Independence, Basis, and Dimension | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 70 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | null | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 |

validation | really useful, thanks!! | 15HVevXRsBA | Lec 13: Lagrange multipliers | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 191 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

validation | Prerequisites please?? | j9WZyLZCBzs | 1. Probability Models and Axioms | 6.041 Probabilistic Systems Analysis and Applied Probability | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 108 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | Marvellous!!! | k3AiUhwHQ28 | 25. Stochastic Gradient Descent | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 103 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | feels like too many things a squished into one lecture | EObHWIEKGjA | 7. Discrete Random Variables III | 6.041 Probabilistic Systems Analysis and Applied Probability | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 173 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | Keep up the good work🙏 | LY7YmuDbuW0 | Lecture 1: Sets, Set Operations and Mathematical Induction | MIT 18.100A Real Analysis, Fall 2020 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | for 2 by 2 systems the normal method at school is fine but it's too long and it's much quicker to write it in a matrix and the pivots and words like that are just terms to describe what he is doing | QVKj3LADCnA | 2. Elimination with Matrices. | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 174 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | No. | MsIvs_6vC38 | 4. Factorization into A = LU | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 104 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | so substitute infinity minus one and chart the resultant here and now on the time line relax allow the unknown as infinite as well same graph pulse the fibronchi generator chart for rms the rms positive on sine only so return to chart and now condense the univariable into quantum zero | QVKj3LADCnA | 2. Elimination with Matrices. | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 192 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 |

validation | can anyone explain how the 5th column of w8 came out to be? | Xa2jPbURTjQ | 3. Orthonormal Columns in Q Give Q'Q = I | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 169 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

validation | Simply excellent!! The Internet can save the world | YP_B0AapU0c | Lec 16: Double integrals | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 119 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | Prof. Strang. Please dont wink at me. I'm too shy. : P

Kidding! I've grown used to it from your linear algebra series 😅

Thanks for your work and all the enthusiasm. | Cx5Z-OslNWE | Course Introduction of 18.065 by Professor Strang | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 109 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 |

validation | are the online assignments available for this course? | YiqIkSHSmyc | Lecture 1: The Column Space of A Contains All Vectors Ax | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 166 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | Too funny, people need to see this.

acesofclubs

1 year ago

Mafia Mob moment at 11:36 | 5q_3FDOkVRQ | Lec 5 | MIT 18.01 Single Variable Calculus, Fall 2007 | MIT 18.01 Single Variable Calculus, Fall 2006 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 151 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

validation | this lecturer is the best lecturer i've ever had. never encounterd such clear explanations! very recommende :-) | j9WZyLZCBzs | 1. Probability Models and Axioms | 6.041 Probabilistic Systems Analysis and Applied Probability | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 199 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | This class is essentially week one of a Machine Learning class XD | tBUHRpFZy0s | 24. Classical Inference II | 6.041 Probabilistic Systems Analysis and Applied Probability | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 142 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 |

validation | Why the hell does my video freezes when it reaches 00:27 secods it feels like my ethernet service provider is preventing me from lisnening to a great lec! anyways need some coffee... | 7K1sB05pE0A | Lec 1 | MIT 18.01 Single Variable Calculus, Fall 2007 | MIT 18.01 Single Variable Calculus, Fall 2006 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 158 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | the audio is so low even when i turn up all volume. prof needs to put his mike anywhere but his chest plz. specifically talking about parts around 33:18. mans whispering. and you can't even hear what the students are asking. but i guess since this is opencourseware, i can't complain much about free college lectures. | rLlZpnT02ZU | 4. Parametric Inference (cont.) and Maximum Likelihood Estimation | MIT 18.650 Statistics for Applications, Fall 2016 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 196 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 |

validation | Around 34:00 : what about the case there AC - B^2 > 0 but A = 0? I take it that is also a case where we have a local max, since -B^2 is always negative; i'm just sorta surprised no-one noticed the omission? | 3_goGnJm5sA | Lec 10: Second derivative test; boundaries & infinity | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 40 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

validation | Thank you so much for putting analysis online! | LY7YmuDbuW0 | Lecture 1: Sets, Set Operations and Mathematical Induction | MIT 18.100A Real Analysis, Fall 2020 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 127 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

validation | is this course applicable for lab analysis of data using statistical methods ? im a physics student wondering if this is worth the time to learn | VPZD_aij8H0 | 1. Introduction to Statistics | MIT 18.650 Statistics for Applications, Fall 2016 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 186 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | Awesome Teacher Gibert Strang | FX4C-JpTFgY | 3. Multiplication and Inverse Matrices | MIT 18.06 Linear Algebra, Spring 2005 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 42 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | Is a hyperbola in high dimensional spaces called a hyperhyperbola? | vF7eyJ2g3kU | 27. Positive Definite Matrices and Minima | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 90 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

validation | Professore: "How many of you actually knew about vectors before that?"

Class: -______________- ... seriously? | PxCxlsl_YwY | Lec 1: Dot product | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 112 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | This was great. I only need some Harvard friends I can impress. | P7a4bjE6Crk | 12. Iterated Expectations | 6.041 Probabilistic Systems Analysis and Applied Probability | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 150 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | 41:21 it would help if it were in shot | NcPUI7aPFhA | Lecture 8: Norms of Vectors and Matrices | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

validation | The only reason I can see that you'd be opposed to this is perhaps if you think that American workers and businesses cannot keep up? Or just threatened and xenophobic of non-Americans? Well, if rising standards serves as a motivation, then American businesses have to step up their games and become better. Which is better for you.

It's not all about direct national interest. And as an international student who is not going to MIT, I greatly appreciate this gesture (which doesn't cost them much). | Pd2xP5zDsRw | Lec 20 | MIT 18.01 Single Variable Calculus, Fall 2007 | MIT 18.01 Single Variable Calculus, Fall 2006 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 141 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | Thank you, professor Strang and all guys insist on this perfect linear algebra lecture.

I started this lecture on 23/12/2021 and completed it on 08/01/2022 in Hong Kong, just before my new semester. I will remember this 18.06 forever, and professor Strang will be the best linear algebra teacher in my heart! | RWvi4Vx4CDc | 34. Final Course Review | MIT 18.06 Linear Algebra, Spring 2005 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 133 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

validation | why do we need to make the eigen vector as small as possible ? | wrEcHhoJxjM | 23. Accelerating Gradient Descent (Use Momentum) | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 206 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

validation | Thanks for MIT OCW and Prof.Auroux. | 24v9onS9Kcg | Lec 35: Final review (cont.) | MIT 18.02 Multivariable Calculus, Fall 2007 | MIT 18.02 Multivariable Calculus, Fall 2007 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 137 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

validation | With all my endurance I listen to this saga until this lecture just to understand Linear Regression | Y_Ac6KiQ1t0 | 15. Projections onto Subspaces | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 161 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | i dont think this is what youtube was made for ._. | 7K1sB05pE0A | Lec 1 | MIT 18.01 Single Variable Calculus, Fall 2007 | MIT 18.01 Single Variable Calculus, Fall 2006 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 181 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | By the chain rule, (d/dx) f(g) when g is a function of x is g' * f '(g).

In this case, the "g" is (kx) so you can get the answer by using the chain rule. | 9v25gg2qJYE | Lec 6 | MIT 18.01 Single Variable Calculus, Fall 2007 | MIT 18.01 Single Variable Calculus, Fall 2006 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 47 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | null | 0 | 0 | null | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

validation | I admire this person a lot, it shows his passion and dedication. At his advanced age, I hope I have the same love at work as he does. | 7UJ4CFRGd-U | An Interview with Gilbert Strang on Teaching Linear Algebra | MIT 18.06 Linear Algebra, Spring 2005 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 74 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | Engineering students must love this | BSAA0akmPEU | Lec 9 | MIT 18.01 Single Variable Calculus, Fall 2007 | MIT 18.01 Single Variable Calculus, Fall 2006 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 55 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

validation | This man just truly loves linear algebra, and it's fantastic. | or6C4yBk_SY | Lecture 2: Multiplying and Factoring Matrices | MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 148 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | He's such a great instructor | QVKj3LADCnA | 2. Elimination with Matrices. | MIT 18.06 Linear Algebra, Spring 2005 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 69 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | Thank you so much!! | RWvi4Vx4CDc | 34. Final Course Review | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 130 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

validation | Why not Leipzig continuous and just bounded? | 78vN4sO7FVU | Lecture 2: Bounded Linear Operators | MIT 18.102 Introduction to Functional Analysis, Spring 2021 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 157 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

validation | Thanks Professor Gilbert Strang | 7UJ4CFRGd-U | An Interview with Gilbert Strang on Teaching Linear Algebra | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 134 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

validation | He's just showing applications of linear algebra, not teaching them. That's why it seems "sloppy". You just can't teach Fourier Transform in 30 mins. | M0Sa8fLOajA | 26. Complex Matrices; Fast Fourier Transform | MIT 18.06 Linear Algebra, Spring 2005 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 68 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | null | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | null | 1 | null | 0 | 0 | null | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

validation | Wtf, i learnt this when I was 15. How is MIT one of the best uni in the world?? | 7K1sB05pE0A | Lec 1 | MIT 18.01 Single Variable Calculus, Fall 2007 | MIT 18.01 Single Variable Calculus, Fall 2006 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 163 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | null | 0 | null | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

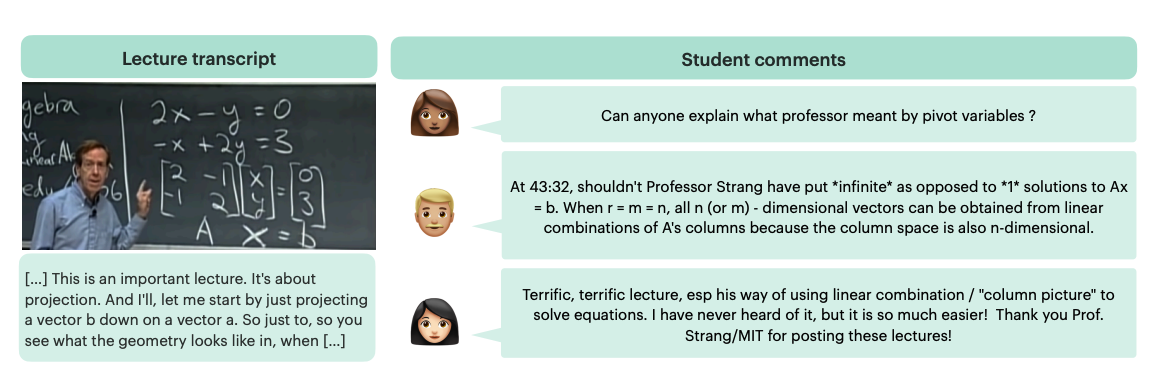

TLDR: MIT OCW Math Lectures with Student Questions

SIGHT: A Large Annotated Dataset on Student Insights Gathered from Higher Education Transcripts

Project Page • Paper • Code • Video

Authors: Rose E. Wang*, Pawan Wirawarn*, Noah Goodman and Dorottya Demszky

*= Equal contributions

In the Proceedings of the 18th Workshop on Innovative Use of NLP for Building Educational Applications (2023)

If you find our work useful or interesting, please consider citing it!

@inproceedings{wang2023sight,

title={SIGHT: A Large Annotated Dataset on Student Insights Gathered from Higher Education Transcripts},

author={Wang, Rose E and Wirawarn, Pawan and Goodman, Noah and Demszky, Dorottya},

year={2023},

month = jun,

booktitle = {18th Workshop on Innovative Use of NLP for Building Educational Applications},

month_numeric = {6}

}

Motivation

Lectures are a learning experience for both students and teachers. Students learn from teachers about the subject material, while teachers learn from students about how to refine their instruction. Unfortunately, online student feedback is unstructured and abundant, making it challenging for teachers to learn and improve. We take a step towards tackling this challenge. First, we contribute a dataset for studying this problem: SIGHT is a large dataset of 288 math lecture transcripts and 15,784 comments collected from the Massachusetts Institute of Technology OpenCourseWare (MIT OCW) YouTube channel. Second, we develop a rubric for categorizing feedback types using qualitative analysis. Qualitative analysis methods are powerful in uncovering domain-specific insights; however, they are costly to apply to large data sources. To overcome this challenge, we propose a set of best practices for using large language models (LLMs) to cheaply classify the comments at scale. We observe a striking correlation between the model's and humans' annotations: categories with consistent human annotations (>$0.9$ inter-rater reliability, IRR) also display higher human-model agreement (>$0.7$), while categories with less consistent human annotations ($0.7$-$0.8$ IRR) correspondingly demonstrate lower human-model agreement ($0.3$-$0.5$). These techniques uncover useful student feedback from thousands of comments, costing around $\$0.002$ per comment. We conclude by discussing exciting future directions on using online student feedback and improving automated annotation techniques for qualitative research.

Repository structure

The script run_analysis.sh replicates the paper's analysis. To replicate the annotations, refer to the prompts directory.

The repo structure:

.
├── data
│   ├── annotations     # Sample (human) and full SIGHT annotations
│   ├── comments        # Per-video comments
│   ├── metadata        # Per-video metadata like playlist ID or video name
│   └── transcripts     # Per-video transcript, transcribed with Whisper Large V2
├── prompts             # Prompts used for annotation
├── results             # Result plots used in paper
├── scripts             # Python scripts for analysis
├── requirements.txt    # Install requirements for running code
├── run_analysis.sh     # Complete analysis script
├── LICENSE
└── README.md

Installation

To install the required libraries:

conda create -n sight python=3

conda activate sight

pip install -r requirements.txt

Experiments

TLDR: Running source run_analysis.sh replicates all the results we report in the paper.

Plots (e.g., the IRR comparison in Figure 3) are saved under results/ as PDF files.

Numbers (e.g., sample data information in Table 2 or IRR values in Table 3) are written to results/ as txt files.

Annotations

The automated annotations provided in this GitHub repository have been scaled on categories with high inter-rater reliability (IRR) scores. While we have made efforts to ensure the reliability of these annotations, the automated annotations may not be completely error-free. We recommend using them as a starting point and validating them through additional human annotation or other means as necessary. By using these annotations, you acknowledge and accept the potential limitations and inherent uncertainties associated with automated annotation methods, such as annotating at scale with GPT-3.5.
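One way to validate the automated annotations is to have a person relabel a small sample and measure agreement with the model's labels. The sketch below computes Cohen's kappa for a single binary category. The choice of Cohen's kappa, the function name cohens_kappa, and the toy label lists are assumptions made for illustration; they are not taken from the repository or its data files, and the paper's own agreement metric may differ.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(labels_a)
    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for one category (1 = category applies to the comment).
human = [1, 0, 0, 1, 1, 0, 0, 0]
model = [1, 0, 0, 1, 0, 0, 0, 1]
print(round(cohens_kappa(human, model), 3))
```

A low kappa on your own sample suggests treating that category's automated labels with extra caution before building on them.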

We welcome any contributions to improve the quality of the annotations in this repository! If you have improved or expanded the annotations, feel free to submit a pull request with your changes. We appreciate all efforts to make these annotations more useful for the education and NLP community!
