| title (string, length 0 to 56) | url (string, length 56 to 142) | markdown (string, length 129 to 213k) | html (string, length 243 to 3.93M) | crawlDate (string, length 24) |
|---|---|---|---|---|
| 🤗 Transformers | https://huggingface.co/docs/transformers/v4.34.0/en/index | `"# 🤗 Transformers\n\nState-of-the-art Machine Learning for [PyTorch](https://pytorch.org/), [Tens(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:32.994Z |
| Quick tour | https://huggingface.co/docs/transformers/v4.34.0/en/quicktour | `"# Quick tour\n\nGet up and running with 🤗 Transformers! Whether you’re a developer or an every(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:34.381Z |
| Installation | https://huggingface.co/docs/transformers/v4.34.0/en/installation | `"# Installation\n\nInstall 🤗 Transformers for whichever deep learning library you’re working wi(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:34.498Z |
| Pipelines for inference | https://huggingface.co/docs/transformers/v4.34.0/en/pipeline_tutorial | `"# Pipelines for inference\n\nThe [pipeline()](/docs/transformers/v4.34.0/en/main_classes/pipelines#(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:35.128Z |
| Load pretrained instances with an AutoClass | https://huggingface.co/docs/transformers/v4.34.0/en/autoclass_tutorial | `"# Load pretrained instances with an AutoClass\n\nWith so many different Transformer architectures, (...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:35.231Z |
| Preprocess | https://huggingface.co/docs/transformers/v4.34.0/en/preprocessing | `"# Preprocess\n\nBefore you can train a model on a dataset, it needs to be preprocessed into the exp(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:36.462Z |
| Fine-tune a pretrained model | https://huggingface.co/docs/transformers/v4.34.0/en/training | `"# Fine-tune a pretrained model\n\n## Train a TensorFlow model with Keras\n\nYou can also train 🤗(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:36.715Z |
| Train with a script | https://huggingface.co/docs/transformers/v4.34.0/en/run_scripts | `"# Train with a script\n\nAlong with the 🤗 Transformers [notebooks](./noteboks/README), there are(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:37.104Z |
| Distributed training with 🤗 Accelerate | https://huggingface.co/docs/transformers/v4.34.0/en/accelerate | `"# Distributed training with 🤗 Accelerate\n\nAs models get bigger, parallelism has emerged as a s(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:37.378Z |
| Load adapters with 🤗 PEFT | https://huggingface.co/docs/transformers/v4.34.0/en/peft | `"# Load adapters with 🤗 PEFT\n\n[Parameter-Efficient Fine Tuning (PEFT)](https://huggingface.co/b(...TRUNCATED)` | `"<!DOCTYPE html><html class=\"\"><head>\n\t\t<meta charset=\"utf-8\">\n\t\t<meta name=\"viewport\" c(...TRUNCATED)` | 2023-10-05T13:30:37.812Z |


Hugging Face Transformers documentation as markdown dataset

This dataset was created using Clipper.js. Clipper is a Node.js command-line tool that clips content from web pages and converts it to Markdown. Under the hood, it uses Mozilla's Readability library to parse the page content and Turndown to convert it to Markdown.

This dataset can be used to build retrieval-augmented generation (RAG) applications that draw on the Transformers documentation.
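As a rough illustration of the RAG use case, the sketch below splits one record's Markdown into heading-delimited chunks that could later be embedded and indexed. It assumes a record is a plain dictionary with the `title`, `url`, and `markdown` columns of this dataset; `Chunk` and `split_by_heading` are hypothetical names chosen for this example, not part of any library, and the sample record is abbreviated by hand.

```python
import re
from dataclasses import dataclass

# Matches ATX-style Markdown headings such as "# Title" or "## Setup".
HEADING = re.compile(r"#{1,6}\s")


@dataclass
class Chunk:
    title: str    # page title, taken from the `title` column
    url: str      # source page, taken from the `url` column
    heading: str  # nearest heading above the chunk
    text: str     # chunk body, including its heading line


def split_by_heading(record: dict) -> list[Chunk]:
    """Split a record's `markdown` field into one chunk per heading.

    Simplification: a line starting with '#' inside a fenced code block
    would also be treated as a heading.
    """
    chunks: list[Chunk] = []
    heading = record["title"]  # fallback for text before the first heading
    lines: list[str] = []

    def flush() -> None:
        text = "\n".join(lines).strip()
        if text:  # skip empty chunks
            chunks.append(Chunk(record["title"], record["url"], heading, text))

    for line in record["markdown"].splitlines():
        if HEADING.match(line):
            flush()          # close the previous chunk under the old heading
            lines = []
            heading = line.lstrip("#").strip()
        lines.append(line)
    flush()                  # close the final chunk
    return chunks


# Hand-abbreviated sample record (assumption: real rows hold the full page).
record = {
    "title": "Load adapters with 🤗 PEFT",
    "url": "https://huggingface.co/docs/transformers/v4.34.0/en/peft",
    "markdown": (
        "# Load adapters with 🤗 PEFT\n\n"
        "Adapters trained with PEFT are usually much smaller than the full model.\n\n"
        "## Setup\n\n"
        "Get started by installing 🤗 PEFT:\n"
    ),
}

chunks = split_by_heading(record)
for chunk in chunks:
    print(chunk.heading, "|", len(chunk.text), "characters")
```

Keeping `title` and `url` on every chunk lets a RAG application cite the source page alongside each retrieved passage. Splitting at headings is only one possible strategy; fixed-size or overlapping windows may suit long sections better.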

Example document: https://huggingface.co/docs/transformers/peft

# Load adapters with 🤗 PEFT

[Parameter-Efficient Fine Tuning (PEFT)](https://huggingface.co/blog/peft) methods freeze the pretrained model parameters during fine-tuning and add a small number of trainable parameters (the adapters) on top of it. The adapters are trained to learn task-specific information. This approach has been shown to be very memory-efficient with lower compute usage while producing results comparable to a fully fine-tuned model.

Adapters trained with PEFT are also usually an order of magnitude smaller than the full model, making it convenient to share, store, and load them.

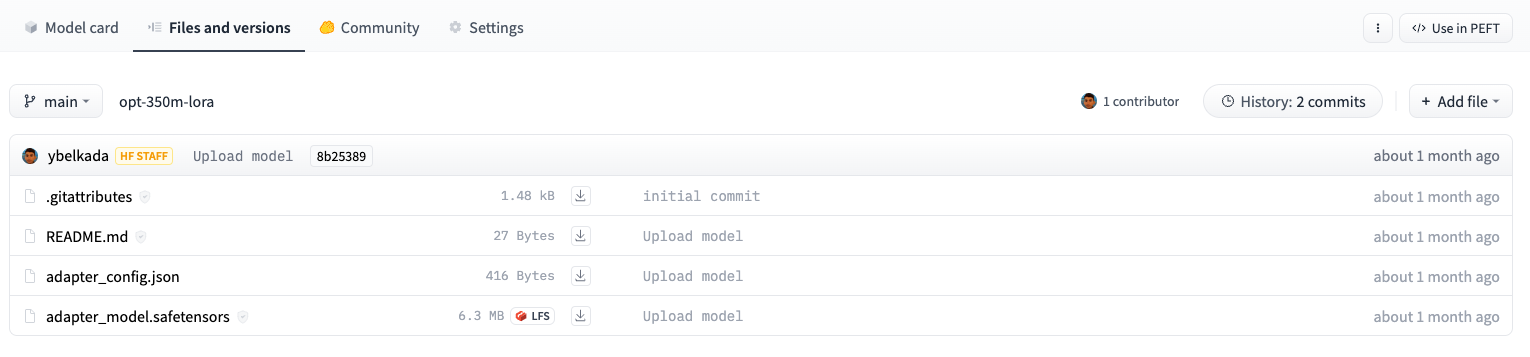

The adapter weights for a OPTForCausalLM model stored on the Hub are only ~6MB compared to the full size of the model weights, which can be ~700MB.

If you’re interested in learning more about the 🤗 PEFT library, check out the [documentation](https://huggingface.co/docs/peft/index).

## Setup

Get started by installing 🤗 PEFT:

If you want to try out the brand new features, you might be interested in installing the library from source:

....
