
SDOML-lite

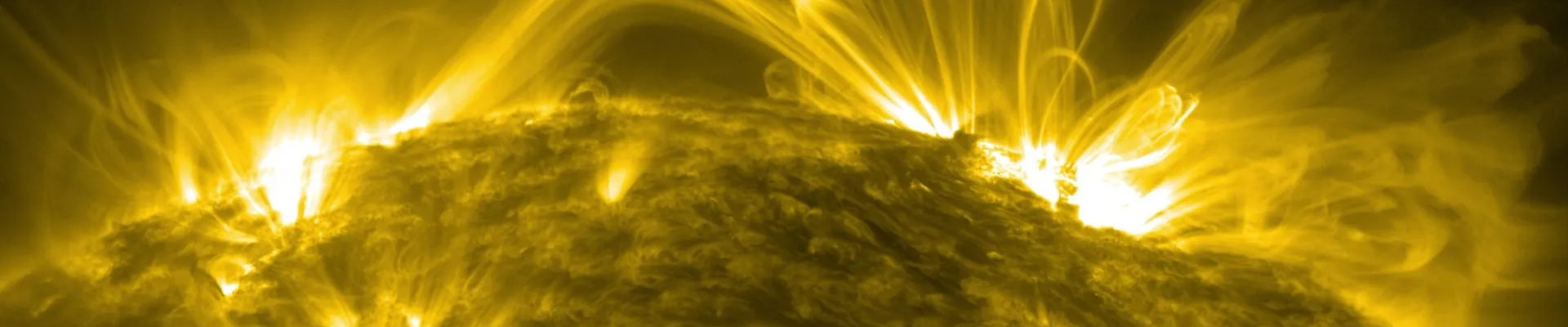

SDOML-lite is a lightweight alternative to the SDOML dataset specifically designed for machine learning applications in solar physics, providing continuous full-disk images of the Sun, including magnetic field data and extreme ultraviolet (EUV) and ultraviolet (UV) images in several wavelengths. The data source is the Solar Dynamics Observatory (SDO) space telescope, a NASA mission that has been in operation since 2010.

NASA’s SDO mission has generated over 20 petabytes of high-resolution solar imagery since its launch in 2010, representing one of the most comprehensive records of science data ever collected. This dataset offers an extraordinary opportunity for scientific discovery and machine learning, enabling advances in space weather forecasting, climate modeling, solar flare and coronal mass ejection (CME) prediction, image-to-image translation, and unsupervised representation learning of solar dynamics. Yet, the sheer scale and complexity of the raw archive have made it largely inaccessible to the broader ML community. SDOML-lite bridges this gap by delivering a curated, normalized, and temporally consistent subset of SDO data, specifically designed for seamless integration into large-scale machine learning pipelines.

IMPORTANT: SDOML and SDOML-lite datasets are different in structure and data distributions. SDOML-lite is inspired by SDOML, but it is not based on SDOML data and there is no compatibility between the two formats.

- Temporal Coverage: 13 May 2010 - 31 July 2024

- Data Source: NASA SDO/AIA (Level 1 FITS files) and SDO/HMI (hmi.M_720s series data)

- Format: WebDataset (one TAR file per day, sdoml-lite-xxxx.tar)

- Total samples: ~500,000 timestamps

- Total Size: ~3 TB

- Number of Chunks (Days): 5194

- Image Resolution: 512x512 pixels for all channels

- Data Type: float32 (NumPy .npy files)

- Channels per sample: 6 (separate .npy files per channel). AIA: 131 Å, 171 Å, 193 Å, 211 Å, 1600 Å (aia_0131, aia_0171, aia_0193, aia_0211, aia_1600). HMI: Line-of-sight magnetogram (hmi_m)

- Cadence: Images are provided every 15 minutes within each daily TAR file. Each TAR file contains up to 96 observation sets.
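As a sanity check on the figures above, the storage footprint follows directly from the resolution, data type, channel count, cadence, and number of days. The sketch below assumes every day is complete (96 observation sets) and ignores the small .npy header overhead, so it gives an upper bound of 498,624 samples and roughly 3.1 TB, consistent with the ~500,000 samples and ~3 TB quoted.

```python
# Back-of-the-envelope storage estimate from the dataset figures.
# Assumption: every day is complete (96 observation sets); real days may have fewer.
HEIGHT = WIDTH = 512          # image resolution in pixels
BYTES_PER_PIXEL = 4           # float32
CHANNELS = 6                  # 5 AIA channels + 1 HMI magnetogram
SETS_PER_DAY = 24 * 60 // 15  # 15-minute cadence -> 96 observation sets
DAYS = 5194                   # number of daily TAR files

bytes_per_image = HEIGHT * WIDTH * BYTES_PER_PIXEL
bytes_per_sample = CHANNELS * bytes_per_image
max_samples = DAYS * SETS_PER_DAY
max_total_bytes = max_samples * bytes_per_sample

print(f"per image:   {bytes_per_image / 2**20:.1f} MiB")
print(f"per sample:  {bytes_per_sample / 2**20:.1f} MiB")
print(f"max samples: {max_samples:,}")
print(f"max total:   {max_total_bytes / 1e12:.2f} TB")
```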

Intended Uses

This dataset is designed for machine learning research at the intersection of solar physics, space weather, and climate science. Potential applications include:

- Generative and self-supervised modeling of solar dynamics.

- Spatiotemporal forecasting of solar flares and coronal mass ejections (CMEs).

- Real-time space weather nowcasting from full-disk EUV and magnetic imagery.

- Tracking and modeling the evolution of active regions and magnetic flux.

- Learning data-driven proxies for solar irradiance for use in climate models.

- Cross-channel image translation and modality alignment.

Dataset Structure

The dataset is organized into 5194 .tar files, with one .tar file per day within the temporal coverage period. Each daily .tar file contains multiple observation sets, one for every 15-minute interval of that day (up to 96 observation sets per day). An observation set consists of 6 NumPy (.npy) files, one for each specified channel.
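Because a daily file holds up to 96 observation sets, it can be useful to know which 15-minute slots are absent on a given day. The following is a minimal sketch, assuming keys are zero-padded YYYY/MM/DD/HHMM strings aligned to :00, :15, :30 and :45; the helper names expected_keys and missing_keys are hypothetical.

```python
from datetime import date, datetime, timedelta
from typing import List, Set

CADENCE = timedelta(minutes=15)
SLOTS_PER_DAY = 96  # 24 h / 15 min


def expected_keys(day: date) -> List[str]:
    """Keys (YYYY/MM/DD/HHMM) that a complete daily file would contain (assumed format)."""
    start = datetime(day.year, day.month, day.day)
    return [(start + i * CADENCE).strftime("%Y/%m/%d/%H%M") for i in range(SLOTS_PER_DAY)]


def missing_keys(day: date, present: Set[str]) -> List[str]:
    """Expected keys for `day` that do not appear in `present`."""
    return [k for k in expected_keys(day) if k not in present]


keys = expected_keys(date(2010, 5, 13))
print(keys[0], keys[-1], len(keys))  # 2010/05/13/0000 2010/05/13/2345 96
```

Passing the set of keys actually seen while iterating one daily file to missing_keys lists the gaps for that day.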

Each .tar file corresponds to one day of data and contains one .npy file per channel, per 15-minute timestamp. For each timestamp, the internal file paths have the following structure:

```
YYYY/MM/DD/HHMM.aia_0131.npy
YYYY/MM/DD/HHMM.aia_0171.npy
YYYY/MM/DD/HHMM.aia_0193.npy
YYYY/MM/DD/HHMM.aia_0211.npy
YYYY/MM/DD/HHMM.aia_1600.npy
YYYY/MM/DD/HHMM.hmi_m.npy
```

In WebDataset convention, the YYYY/MM/DD/HHMM substring of the path becomes the key for each data sample, and the aia_0131.npy, aia_0171.npy, aia_0193.npy, aia_0211.npy, aia_1600.npy, hmi_m.npy substrings become the names of the features for a given sample. Each .npy file stores a single-channel 512×512 image.
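To make the key/feature convention concrete, the following is a simplified re-implementation for illustration only (WebDataset's own grouping logic is more general). It assumes the key is everything before the first "." in the file name, and the helper names split_member_name and group_by_key are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def split_member_name(path: str) -> Tuple[str, str]:
    """Split a tar member path into (key, feature name), WebDataset style:
    the key is the path up to the first '.' in the file name."""
    directory, _, filename = path.rpartition("/")
    stem, dot, feature = filename.partition(".")
    if not dot:
        raise ValueError(f"no extension in {path!r}")
    key = f"{directory}/{stem}" if directory else stem
    return key, feature


def group_by_key(paths: List[str]) -> Dict[str, List[str]]:
    """Group member paths into samples: key -> list of feature names."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for path in paths:
        key, feature = split_member_name(path)
        groups[key].append(feature)
    return dict(groups)


names = [
    "2010/05/13/0000.aia_0131.npy",
    "2010/05/13/0000.hmi_m.npy",
    "2010/05/13/0015.aia_0131.npy",
]
print(group_by_key(names))
# {'2010/05/13/0000': ['aia_0131.npy', 'hmi_m.npy'], '2010/05/13/0015': ['aia_0131.npy']}
```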

Features

Each sample obtained by iterating through the WebDataset corresponds to one solar observation (with 15-minute cadence) and contains:

- `__key__`: (string) The base name YYYY/MM/DD/HHMM (e.g., 2010/05/13/0000).

- `aia_0131.npy`: (numpy.ndarray) Image data for AIA 131 Å. Shape: (512, 512). Dtype: float32.

- `aia_0171.npy`: (numpy.ndarray) Image data for AIA 171 Å. Shape: (512, 512). Dtype: float32.

- `aia_0193.npy`: (numpy.ndarray) Image data for AIA 193 Å. Shape: (512, 512). Dtype: float32.

- `aia_0211.npy`: (numpy.ndarray) Image data for AIA 211 Å. Shape: (512, 512). Dtype: float32.

- `aia_1600.npy`: (numpy.ndarray) Image data for AIA 1600 Å. Shape: (512, 512). Dtype: float32.

- `hmi_m.npy`: (numpy.ndarray) Line-of-sight magnetogram data from HMI. Shape: (512, 512). Dtype: float32.
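A quick way to confirm that a decoded sample matches this specification is to check each feature's presence, shape, and dtype, and to parse the key into a datetime. The sketch below uses hypothetical helper names (key_to_datetime, check_sample) and np.asarray because the decoded value type may depend on the loader (an assumption). The card does not state the time zone of the keys, so the parsed datetime is left naive.

```python
from datetime import datetime
from typing import List

import numpy as np

FEATURES = ["aia_0131.npy", "aia_0171.npy", "aia_0193.npy",
            "aia_0211.npy", "aia_1600.npy", "hmi_m.npy"]


def key_to_datetime(key: str) -> datetime:
    """Parse a YYYY/MM/DD/HHMM key into a naive datetime."""
    return datetime.strptime(key, "%Y/%m/%d/%H%M")


def check_sample(sample: dict) -> List[str]:
    """Return problems found in one decoded sample; an empty list means it matches the spec."""
    problems = []
    for name in FEATURES:
        value = sample.get(name)
        if value is None:
            problems.append(f"missing {name}")
            continue
        arr = np.asarray(value)
        if arr.shape != (512, 512):
            problems.append(f"{name}: shape {arr.shape}")
        if arr.dtype != np.float32:
            problems.append(f"{name}: dtype {arr.dtype}")
    return problems


sample = {"__key__": "2010/05/13/0000"}
sample.update({name: np.zeros((512, 512), dtype=np.float32) for name in FEATURES})
print(key_to_datetime(sample["__key__"]), check_sample(sample))  # 2010-05-13 00:00:00 []
```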

Usage Example

In the SDOML-lite GitHub repository you can find a well-tested PyTorch dataset implementation that is designed to work with a local copy of the dataset.
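If you have downloaded some daily .tar files and only need plain NumPy arrays, Python's standard tarfile module may be enough. The following is a minimal sketch, not the repository's implementation: the function name read_daily_tar is hypothetical, and it assumes member names follow the YYYY/MM/DD/HHMM.<channel>.npy pattern with all files of one timestamp stored consecutively, as the WebDataset layout expects.

```python
import io
import tarfile

import numpy as np


def read_daily_tar(tar_path):
    """Yield (key, {feature_name: array}) for each timestamp in one daily .tar file."""
    current_key, current = None, {}
    with tarfile.open(tar_path, "r") as tar:
        for member in tar:
            if not member.isfile():
                continue  # skip directory entries
            # WebDataset convention: key = path up to the first '.' of the file name
            directory, _, filename = member.name.rpartition("/")
            stem, _, feature = filename.partition(".")
            key = f"{directory}/{stem}" if directory else stem
            # A new key means the previous sample is complete (assumes contiguous members)
            if key != current_key and current:
                yield current_key, current
                current = {}
            current_key = key
            with tar.extractfile(member) as f:
                current[feature] = np.load(io.BytesIO(f.read()))
    if current:
        yield current_key, current
```

Each yielded dictionary maps names such as aia_0171.npy to the decoded arrays. Only one timestamp is held in memory at a time, so a whole day never has to be loaded at once.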

The following is a minimal code example of how to read data from a streaming version of the dataset.

```python
from datasets import load_dataset
import numpy as np
import matplotlib.pyplot as plt

# Stream the dataset from the Hugging Face Hub instead of downloading it
dataset = load_dataset("oxai4science/sdoml-lite", streaming=True)

channels = ['hmi_m', 'aia_0131', 'aia_0171', 'aia_0193', 'aia_0211', 'aia_1600']

def process(data):
    # The WebDataset key (YYYY/MM/DD/HHMM) identifies the observation time
    timestamp = data['__key__']
    d = []
    for c in map(lambda x: x + '.npy', channels):
        # Substitute a float32 zero image if a channel is missing for this timestamp
        if data.get(c) is not None:
            d.append(np.array(data[c], dtype=np.float32))
        else:
            d.append(np.zeros((512, 512), dtype=np.float32))
    # Stack channels into a single array of shape (6, 512, 512)
    return timestamp, np.stack(d, axis=0)

def plot(timestamp, data):
    # One panel per channel in a 2x3 grid
    _, axs = plt.subplots(2, 3, figsize=(15, 10))
    axs = axs.flatten()
    for i, c in enumerate(channels):
        axs[i].imshow(data[i], cmap='gray')
        axs[i].set_title(c)
        axs[i].axis('off')
    plt.tight_layout()
    plt.suptitle(timestamp)
    plt.subplots_adjust(top=0.94)
    plt.show()

# Take the first sample of the 'train' split
sample = next(iter(dataset['train']))
timestamp, data = process(sample)
plot(timestamp, data)
```

The following is another example showing how to put together a minimal PyTorch dataset and dataloader to train with SDOML-lite.

```python
import torch
from torch.utils.data import IterableDataset, DataLoader

# Reuses `dataset` and `process` from the previous example.

# PyTorch dataset wrapping the streaming Hugging Face split
class SDOMLlite(IterableDataset):
    def __init__(self, hf_dataset):
        # Store the iterable (not an iterator) so that every epoch restarts the stream
        self.hf_dataset = hf_dataset

    def __iter__(self):
        for sample in self.hf_dataset:
            t, d = process(sample)
            yield t, torch.tensor(d, dtype=torch.float32)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
batch_size = 8

# Create DataLoader
torch_dataset = SDOMLlite(dataset['train'])
loader = DataLoader(torch_dataset, batch_size=batch_size)

# Training loop
num_epochs = 1
for epoch in range(num_epochs):
    for batch in loader:
        times = batch[0]  # List of timestamp strings
        images = batch[1].to(device)  # Images with shape (batch_size, 6, 512, 512)
        print(f"Batch with shape: {images.shape}")
        # ...
        # training code
        # ...
        break  # stop after one batch for this demo
    break
```

Data Generation and Processing

The SDOML-lite dataset is generated using the pipeline detailed in the sdoml-lite GitHub repository. The download and processing scripts were run in July 2024 using distributed computing resources provided by Google Cloud for FDL-X Heliolab 2024, a public-private partnership AI research initiative with NASA, Google Cloud, NVIDIA, and other leading research organizations.

Data Splits

This dataset is provided as a collection of daily .tar files. No predefined training, validation, or test splits are provided. Users are encouraged to define their own splits according to their specific research requirements, such as by date ranges (e.g., specific years or months of the year for training/validation/testing) or by solar events.
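A date-range split can be expressed as a function of the sample key alone. The following sketch uses hypothetical year boundaries purely for illustration; choose ranges that suit your study. Splitting by contiguous date ranges rather than by random sample keeps strongly correlated neighboring timestamps (only 15 minutes apart) from ending up in both training and test sets.

```python
from datetime import datetime
from typing import Optional

# Hypothetical year boundaries for illustration only; choose ranges that suit your study.
SPLIT_YEARS = {
    "train": range(2010, 2021),  # 2010 through 2020
    "val": range(2021, 2023),    # 2021 through 2022
    "test": range(2023, 2025),   # 2023 through 2024
}


def split_for_key(key: str) -> Optional[str]:
    """Assign a sample to a split based on the year encoded in its YYYY/MM/DD/HHMM key."""
    year = datetime.strptime(key, "%Y/%m/%d/%H%M").year
    for name, years in SPLIT_YEARS.items():
        if year in years:
            return name
    return None  # outside every configured range


print(split_for_key("2010/05/13/0000"), split_for_key("2024/07/31/2345"))  # train test
```

With the streaming dataset from the usage example, a rule like this could be applied per sample, for instance via the Hugging Face IterableDataset.filter method with a predicate on sample['__key__'].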

Data normalization

The data are normalized per channel so that pixel values lie in the range [0, 1], making them ready for machine learning use out of the box.

The HMI source we use is already normalized to the range [0, 1]. We normalize the AIA data using statistics of the actual AIA data processed during dataset generation, in a two-phase pipeline: the first phase computes data statistics and the second phase applies the normalization.
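The card does not specify which statistics are computed or how they are applied, so the following is only a minimal sketch of the general two-phase idea. It assumes per-channel percentile clipping followed by min-max scaling; the function names and the 1st/99th percentile choice are hypothetical, and the actual SDOML-lite transform may differ (for example, by including a logarithmic stretch).

```python
import numpy as np


def phase1_stats(images, low_pct=1.0, high_pct=99.0):
    """Phase 1 (hypothetical): clipping bounds for one channel from a set of raw images."""
    values = np.concatenate([np.ravel(img) for img in images])
    values = values[np.isfinite(values)]  # ignore NaN/inf pixels, e.g. off-disk
    lo, hi = np.percentile(values, [low_pct, high_pct])
    if hi <= lo:
        raise ValueError("degenerate statistics: upper bound must exceed lower bound")
    return float(lo), float(hi)


def phase2_normalize(image, lo, hi):
    """Phase 2 (hypothetical): map raw values to [0, 1] using the phase-1 bounds."""
    scaled = (np.asarray(image, dtype=np.float64) - lo) / (hi - lo)
    return np.clip(scaled, 0.0, 1.0).astype(np.float32)


# Synthetic stand-in for raw AIA intensities (not real data)
rng = np.random.default_rng(0)
raw = [rng.lognormal(3.0, 1.0, size=(512, 512)) for _ in range(3)]
lo, hi = phase1_stats(raw)
normalized = phase2_normalize(raw[0], lo, hi)
```

Separating the phases means the statistics are fixed once over the processed data, so every image of a channel is mapped with the same bounds.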

A note on data quality

The primary motivation for SDOML-lite is to provide a lightweight dataset suitable for use in machine learning pipelines, for example, as input to models that predict Sun-dependent quantities in domains such as space weather, thermospheric density, or radiation exposure.

We believe the dataset is of sufficient quality to serve as input for a broad range of machine learning applications. However, it is not intended for detailed scientific analysis of the HMI or AIA instruments, for which users should consult the original calibrated data products.

Acknowledgments

This work is supported by NASA under award #80NSSC24M0122 and is the research product of FDL-X Heliolab, a public/private partnership between NASA, Trillium Technologies Inc (trillium.tech), and commercial AI partners Google Cloud, NVIDIA, and Pasteur Labs & ISI, developing open science for all humankind.

License
