
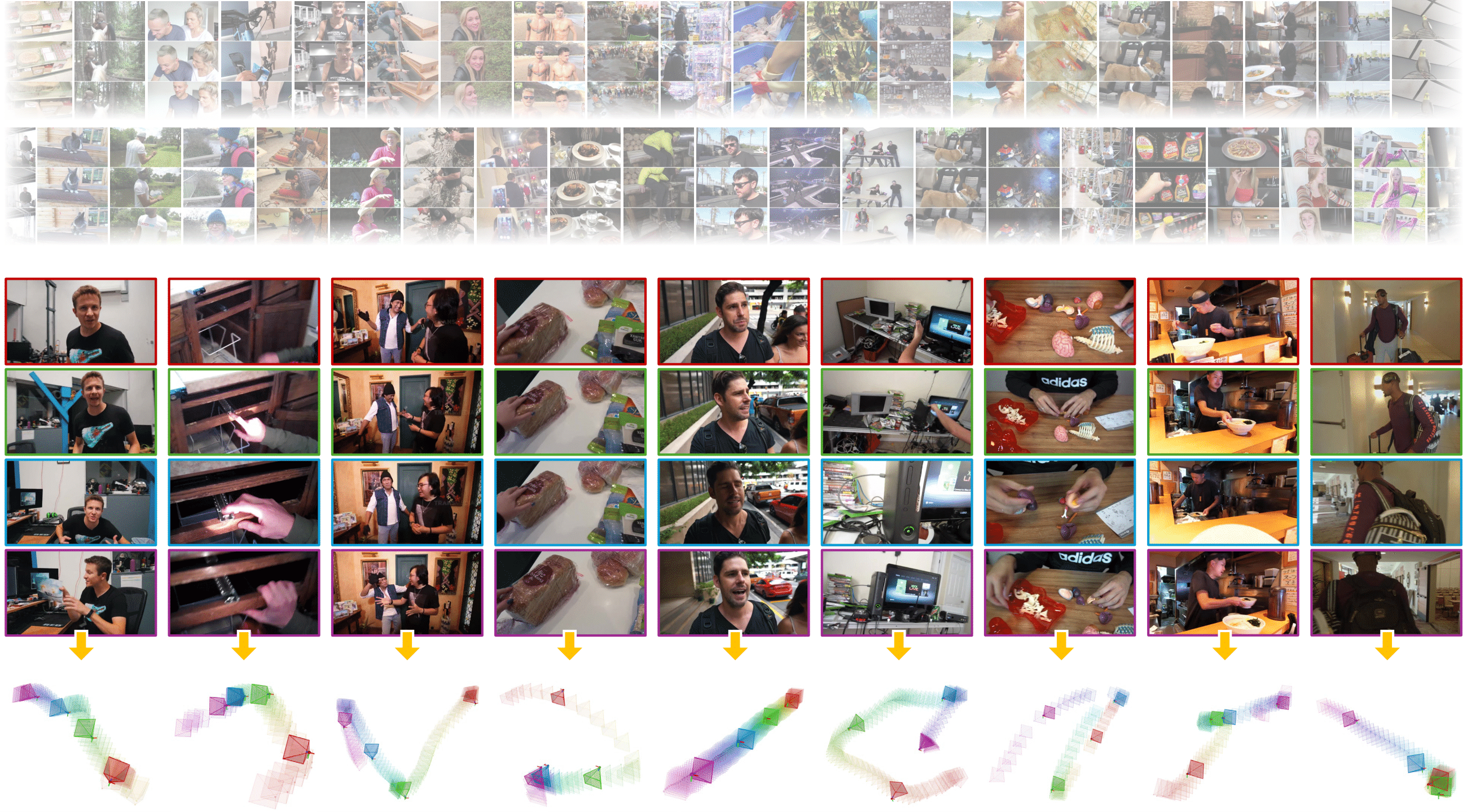

DynPose-100K

Dynamic Camera Poses and Where to Find Them

Chris Rockwell¹,², Joseph Tung³, Tsung-Yi Lin¹, Ming-Yu Liu¹, David F. Fouhey³, Chen-Hsuan Lin¹

¹NVIDIA ²University of Michigan ³New York University

🎉 Updates

- [2025.05] We have released the Lightspeed benchmark, a new dataset with ground-truth camera poses for validating DynPose-100K's pose annotation method. See download instructions below.

- [2025.04] We have made the initial release of DynPose-100K, a large-scale dataset of diverse, dynamic videos with camera annotations. See download instructions below.

Overview

DynPose-100K is a large-scale dataset of diverse, dynamic videos with camera annotations. We curate 100K videos containing dynamic content while ensuring cameras can be accurately estimated (including intrinsics and poses), addressing two key challenges:

- Identifying videos suitable for camera estimation

- Improving camera estimation algorithms for dynamic videos

| Characteristic | Value |
|---|---|
| Size | 100K videos |
| Resolution | 1280×720 (720p) |
| Annotation type | Camera poses (world-to-camera), intrinsics |
| Format | MP4 (videos), PKL (camera data), JPG (frames) |
| Frame rate | 12 fps (extracted frames) |
| Storage | ~200 GB (videos) + ~400 GB (frames) + 0.7 GB (annotations) |
| License | NVIDIA License (for DynPose-100K) |

DynPose-100K Download

DynPose-100K contains diverse Internet videos annotated with state-of-the-art camera pose estimation. Videos were selected from 3.2M candidates through advanced filtering.

1. Camera annotation download (0.7 GB)

git clone https://huggingface.co/datasets/nvidia/dynpose-100k

cd dynpose-100k

unzip dynpose_100k.zip

export DYNPOSE_100K_ROOT=$(pwd)/dynpose_100k

2. Video download (~200 GB for all videos at 720p)

git clone https://github.com/snap-research/Panda-70M.git

pip install -e Panda-70M/dataset_dataloading/video2dataset

- Our experiments use 1280×720 video rather than the default 640×360. To download at this resolution (optional), change the download size to 720 in the config file passed via --config before running the command below.

video2dataset --url_list="${DYNPOSE_100K_ROOT}/metadata.csv" --output_folder="${DYNPOSE_100K_ROOT}/video" \
    --url_col="url" --caption_col="caption" --clip_col="timestamp" \
    --save_additional_columns="[matching_score,desirable_filtering,shot_boundary_detection]" \
    --config="video2dataset/video2dataset/configs/panda70m.yaml"

3. Video frame extraction (~400 GB for 12 fps over all videos at 720p)

python scripts/extract_frames.py --input_video_dir ${DYNPOSE_100K_ROOT}/video \
    --output_frame_parent ${DYNPOSE_100K_ROOT}/frames-12fps \
    --url_list ${DYNPOSE_100K_ROOT}/metadata.csv \
    --uid_mapping ${DYNPOSE_100K_ROOT}/uid_mapping.csv
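The timestamp column passed through --clip_col stores clip boundaries as a stringified nested list, as in the example row shown under Dataset structure. The sketch below parses such a field and gives a rough frame-count estimate at 12 fps, which can help sanity-check an extraction. The helper names are hypothetical, and the assumption that the extracted frame count is close to duration × fps has not been checked against scripts/extract_frames.py.

```python
import ast


def parse_clock(ts):
    """Convert an 'H:MM:SS.mmm' string into seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def clip_durations(timestamp_field):
    """Return the duration in seconds of every [start, end] pair in the field."""
    clips = ast.literal_eval(timestamp_field)  # safely parses the list literal
    return [parse_clock(end) - parse_clock(start) for start, end in clips]


def approx_frame_count(duration_s, fps=12.0):
    """Rough estimate only; the real extractor may round differently."""
    return int(round(duration_s * fps))
```

For the example row, clip_durations("[['0:13:34.029', '0:13:40.035']]") gives a single clip of about 6 seconds, so roughly 72 frames at 12 fps.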

4. Camera pose visualization

Create a conda environment if you haven't done so:

conda env create -f environment.yml

conda activate dynpose-100k

Run the below under the dynpose-100k environment:

python scripts/visualize_pose.py --dset dynpose_100k --dset_parent ${DYNPOSE_100K_ROOT}

Dataset structure

dynpose_100k
├── cameras
|   ├── 00011ee6-cbc1-4ec4-be6f-292bfa698fc6.pkl    {uid}
|   |   ├── poses                {camera poses (all frames) ([N',3,4])}
|   |   ├── intrinsics           {camera intrinsic matrix ([3,3])}
|   |   ├── frame_idxs           {corresponding frame indices ([N']), values within [0,N-1]}
|   |   ├── mean_reproj_error    {average reprojection error from SfM ([N'])}
|   |   ├── num_points           {number of reprojected points ([N'])}
|   |   ├── num_frames           {number of video frames N (scalar)}
|   |   # where N' is number of registered frames
|   ├── 00031466-5496-46fa-a992-77772a118b17.pkl
|   |   ├── poses                {camera poses (all frames) ([N',3,4])}
|   |   └── ...
|   └── ...
├── video
|   ├── 00011ee6-cbc1-4ec4-be6f-292bfa698fc6.mp4    {uid}
|   ├── 00031466-5496-46fa-a992-77772a118b17.mp4
|   └── ...
├── frames-12fps
|   ├── 00011ee6-cbc1-4ec4-be6f-292bfa698fc6    {uid}
|   |   ├── 00001.jpg            {frame id}
|   |   ├── 00002.jpg
|   |   └── ...
|   ├── 00031466-5496-46fa-a992-77772a118b17
|   |   ├── 00001.jpg
|   |   └── ...
|   └── ...
├── metadata.csv    {used to download video & extract frames}
|   ├── uid
|   ├── 00031466-5496-46fa-a992-77772a118b17
|   └── ...
├── uid_mapping.csv    {used to download video & extract frames}
|   ├── videoID,url,timestamp,caption,matching_score,desirable_filtering,shot_boundary_detection
|   ├── --106WvnIhc,https://www.youtube.com/watch?v=--106WvnIhc,"[['0:13:34.029', '0:13:40.035']]",['A man is swimming in a pool with an inflatable mattress.'],[0.44287109375],['desirable'],"[[['0:00:00.000', '0:00:05.989']]]"
|   └── ...
├── viz_list.txt    {used as index for pose visualization}
|   ├── 004cd3b5-8af4-4613-97a0-c51363d80c31    {uid}
|   ├── 0c3e06ae-0d0e-4c41-999a-058b4ea6a831
|   └── ...
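The camera files described in the structure above can be read with a few lines of Python. This is a minimal sketch, assuming each PKL file is a plain pickled dict whose keys match the listed field names; `load_camera` is a hypothetical helper and not part of the released scripts.

```python
import pickle
from pathlib import Path

import numpy as np


def load_camera(root, uid):
    """Load the camera annotation for one video uid (hypothetical helper)."""
    path = Path(root) / "cameras" / f"{uid}.pkl"
    with open(path, "rb") as f:
        cam = pickle.load(f)  # assumption: a pickled dict with the listed keys

    poses = np.asarray(cam["poses"], dtype=np.float64)            # [N', 3, 4]
    intrinsics = np.asarray(cam["intrinsics"], dtype=np.float64)  # [3, 3]
    frame_idxs = np.asarray(cam["frame_idxs"], dtype=np.int64)    # [N']
    num_frames = int(cam["num_frames"])                           # N

    # Sanity checks derived from the documented shapes.
    if poses.ndim != 3 or poses.shape[1:] != (3, 4):
        raise ValueError(f"unexpected poses shape {poses.shape}")
    if intrinsics.shape != (3, 3):
        raise ValueError(f"unexpected intrinsics shape {intrinsics.shape}")
    if len(frame_idxs) != len(poses):
        raise ValueError("poses and frame_idxs differ in length")
    if len(frame_idxs) and (frame_idxs.min() < 0 or frame_idxs.max() >= num_frames):
        raise ValueError("frame_idxs outside [0, N-1]")

    return {
        "poses": poses,
        "intrinsics": intrinsics,
        "frame_idxs": frame_idxs,
        "num_frames": num_frames,
    }

# Example call, using the root exported during download:
# cam = load_camera(os.environ["DYNPOSE_100K_ROOT"], "00011ee6-cbc1-4ec4-be6f-292bfa698fc6")
```

Because `pickle.load` can execute arbitrary code, it should only be run on files obtained from the official repository.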

Lightspeed Benchmark Download

Lightspeed is a challenging, photorealistic benchmark for dynamic pose estimation with ground-truth camera poses. It is used to validate DynPose-100K's pose annotation method.

Original video clips can be found here: https://www.youtube.com/watch?v=AsykNkUMoNU&t=1s

1. Downloading cameras, videos and frames (8.1 GB)

git clone https://huggingface.co/datasets/nvidia/dynpose-100k

cd dynpose-100k

unzip lightspeed.zip

export LIGHTSPEED_PARENT=$(pwd)/lightspeed

2. Dataset structure

lightspeed
├── poses.pkl
|   ├── 0120_LOFT    {id_setting}
|   |   ├── poses    {camera poses (all frames) ([N,3,4])}
|   |   # where N is number of frames
|   ├── 0180_DUST
|   |   ├── poses    {camera poses (all frames) ([N,3,4])}
|   └── ...
├── video
|   ├── 0120_LOFT.mp4    {id_setting}
|   ├── 0180_DUST.mp4
|   └── ...
├── frames-24fps
|   ├── 0120_LOFT/images    {id_setting}
|   |   ├── 00000.png    {frame id}
|   |   ├── 00001.png
|   |   └── ...
|   ├── 0180_DUST/images
|   |   ├── 00000.png
|   |   └── ...
|   └── ...
├── viz_list.txt    {used as index for pose visualization}
|   ├── 0120_LOFT.mp4    {id_setting}
|   ├── 0180_DUST.mp4
|   └── ...
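Unlike DynPose-100K, Lightspeed keeps all ground-truth poses in a single poses.pkl. The following sketch reads it into a dict of arrays, assuming the file is a pickled dict keyed by sequence name (for example 0120_LOFT), each entry holding a poses field of shape [N,3,4]. `load_lightspeed_poses` is a hypothetical helper, and `lightspeed_dir` is assumed to be the directory that directly contains poses.pkl.

```python
import pickle
from pathlib import Path

import numpy as np


def load_lightspeed_poses(lightspeed_dir):
    """Return {sequence name: [N, 3, 4] pose array} (hypothetical helper)."""
    with open(Path(lightspeed_dir) / "poses.pkl", "rb") as f:
        all_entries = pickle.load(f)  # assumption: dict keyed by id_setting

    poses_by_sequence = {}
    for name, entry in all_entries.items():
        poses = np.asarray(entry["poses"], dtype=np.float64)
        if poses.ndim != 3 or poses.shape[1:] != (3, 4):
            raise ValueError(f"{name}: unexpected poses shape {poses.shape}")
        poses_by_sequence[name] = poses
    return poses_by_sequence
```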

3. Camera pose visualization

Create a conda environment if you haven't done so:

conda env create -f environment.yml

conda activate dynpose-100k

Run the below under the dynpose-100k environment:

python scripts/visualize_pose.py --dset lightspeed --dset_parent ${LIGHTSPEED_PARENT}

FAQ

Q: What coordinate system do the camera poses use?

A: Camera poses are world-to-camera and follow the OpenCV "RDF" convention (the same as COLMAP): as seen from the image, the X axis points right, the Y axis points down, and the Z axis points forward.
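Since the stored matrices are world-to-camera, a world point X maps to camera coordinates as R·X + t, and the camera center in world coordinates is −Rᵀt. The sketch below, a generic pinhole-camera illustration rather than code from the release, inverts a batch of [N,3,4] poses and projects a world point to pixels with the 3×3 intrinsics. The function names are hypothetical.

```python
import numpy as np


def invert_poses(poses_w2c):
    """Convert [N, 3, 4] world-to-camera poses into camera-to-world poses."""
    poses_w2c = np.asarray(poses_w2c, dtype=np.float64)
    R = poses_w2c[:, :, :3]                     # [N, 3, 3] rotations
    t = poses_w2c[:, :, 3]                      # [N, 3] translations
    R_inv = np.transpose(R, (0, 2, 1))          # inverse of a rotation is its transpose
    t_inv = -np.einsum("nij,nj->ni", R_inv, t)  # camera centers in world coordinates
    return np.concatenate([R_inv, t_inv[:, :, None]], axis=2)


def project_point(point_world, pose_w2c, intrinsics):
    """Project one 3D world point to pixel coordinates (u, v)."""
    pose_w2c = np.asarray(pose_w2c, dtype=np.float64)
    point_cam = pose_w2c[:, :3] @ np.asarray(point_world, dtype=np.float64) + pose_w2c[:, 3]
    uvw = np.asarray(intrinsics, dtype=np.float64) @ point_cam
    return uvw[:2] / uvw[2]  # only meaningful when the point is in front (z > 0)
```

The last column of each inverted pose is the camera center, which is what a trajectory plot would typically draw.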

Q: How do I map between frame indices and camera poses?

A: The frame_idxs field in each camera PKL file lists the frame index of every registered pose, so the i-th entry of poses belongs to frame frame_idxs[i]. Indices lie in [0, N-1], where N is num_frames.
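Because only N' of the N frames are registered, it can be convenient to expand the poses into a per-frame array with gaps marked explicitly. This is a minimal sketch with a hypothetical helper, `densify_poses`, that uses only the documented poses, frame_idxs and num_frames fields.

```python
import numpy as np


def densify_poses(poses, frame_idxs, num_frames):
    """Scatter [N', 3, 4] poses into an [N, 3, 4] array, NaN where unregistered."""
    poses = np.asarray(poses, dtype=np.float64)
    frame_idxs = np.asarray(frame_idxs, dtype=np.int64)

    dense = np.full((num_frames, 3, 4), np.nan)
    dense[frame_idxs] = poses            # row i of poses goes to frame frame_idxs[i]

    registered = np.zeros(num_frames, dtype=bool)
    registered[frame_idxs] = True        # mask of frames that have a pose
    return dense, registered
```

Note that the files under frames-12fps appear to start at 00001.jpg while frame_idxs starts at 0, so a +1 offset may be needed when building file names. That offset is an assumption and is worth confirming against scripts/visualize_pose.py.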

Q: How can I contribute to this dataset?

A: Please contact the authors for collaboration opportunities.

Citation

If you find this dataset useful in your research, please cite our paper:

@inproceedings{rockwell2025dynpose,
    author = {Rockwell, Chris and Tung, Joseph and Lin, Tsung-Yi and Liu, Ming-Yu and Fouhey, David F. and Lin, Chen-Hsuan},
    title = {Dynamic Camera Poses and Where to Find Them},
    booktitle = {CVPR},
    year = 2025
}

Acknowledgements

We thank Gabriele Leone and the NVIDIA Lightspeed Content Tech team for sharing the original 3D assets and scene data for creating the Lightspeed benchmark. We thank Yunhao Ge, Zekun Hao, Yin Cui, Xiaohui Zeng, Zhaoshuo Li, Hanzi Mao, Jiahui Huang, Justin Johnson, JJ Park and Andrew Owens for invaluable inspirations, discussions and feedback on this project.
