language:

- en

pretty_name: CheckboxQA

tags:

- Checkbox

license: cc-by-nc-4.0

task_categories:

- document-question-answering

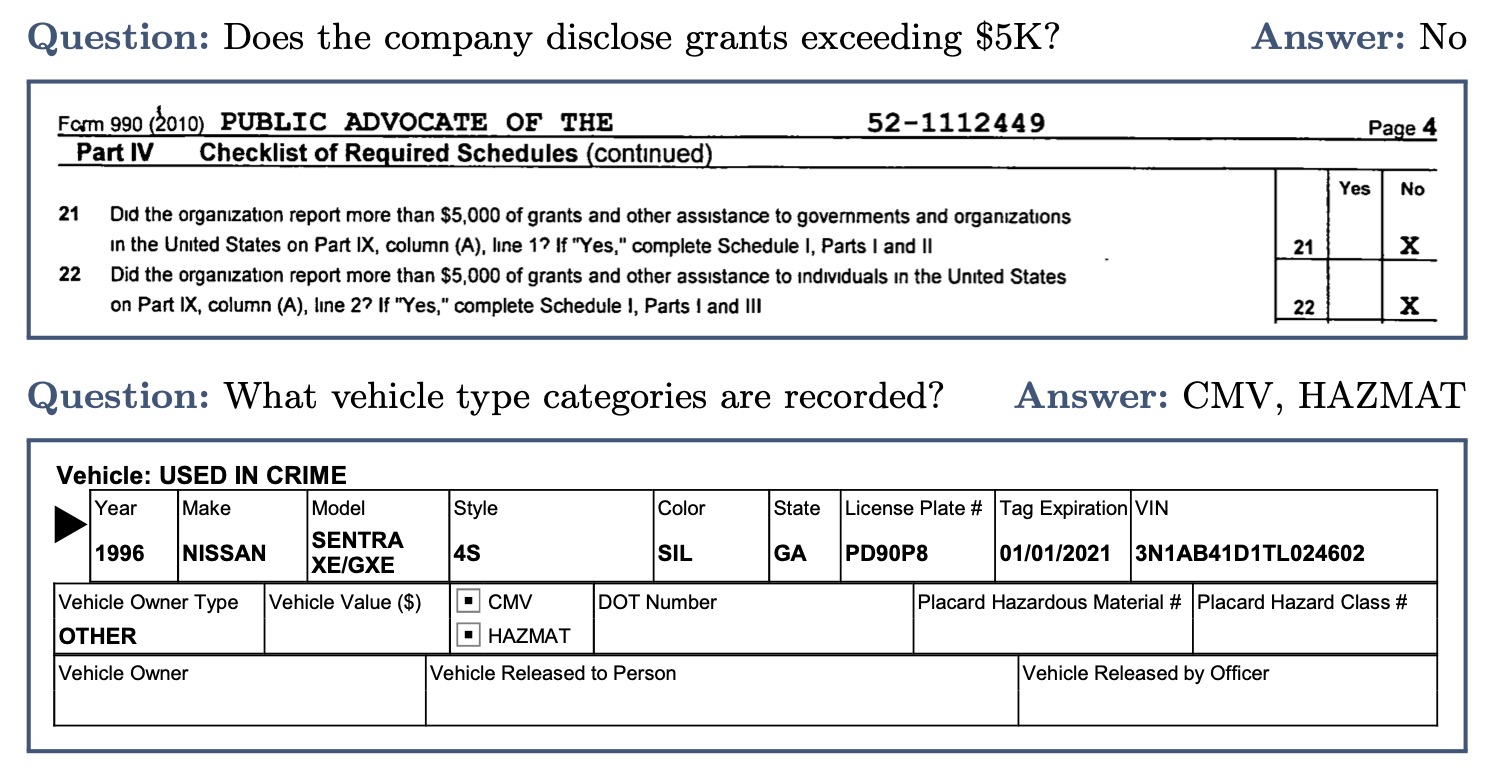

CheckboxQA

CheckboxQA consists of varied questions requiring interpretation of checkable content in the context of visually rich documents. Required answers range from simple yes/no to lists of values. The dataset is intended solely for evaluation purposes.

Data format

Questions and answers for CheckboxQA follow the DUE data format.
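As a rough illustration of reading such a file, the sketch below assumes each line is a JSON record with a `name` field and an `annotations` list, where each annotation has a `key` (the question) and a list of `values` holding a `value` string. These field names are assumptions based on the general DUE layout and should be checked against the actual files. The helper name `iter_questions` and the sample record are hypothetical.

```python
import json
from typing import Iterable, Iterator, List, Tuple


def iter_questions(lines: Iterable[str]) -> Iterator[Tuple[str, str, List[str]]]:
    """Yield (document name, question, gold answers) from JSON Lines input.

    Assumed record layout (verify against the real data):
    {"name": ..., "annotations": [{"key": ..., "values": [{"value": ...}]}]}
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        record = json.loads(line)
        for annotation in record.get("annotations", []):
            answers = [v["value"] for v in annotation.get("values", [])]
            yield record["name"], annotation["key"], answers


# Hypothetical record, made up for illustration only.
sample = [
    '{"name": "doc_001", "annotations": [{"key": "Is the applicant a citizen?", '
    '"values": [{"value": "Yes"}]}]}'
]
rows = list(iter_questions(sample))
```

To read from disk, pass an open file handle instead of the `sample` list, since a text file iterates line by line.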

Evaluation

The evaluation metric for CheckboxQA is ANLS* (Average Normalized Levenshtein Similarity).

You may evaluate your model's predictions using the evaluate.py script from the Snowflake-Labs/CheckboxQA repository.
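To give an intuition for the metric, here is a minimal sketch of plain ANLS for single-string answers. It is not the evaluate.py implementation and does not cover the ANLS* extensions for list-valued answers. The function names, the lowercasing and whitespace stripping, and the 0.5 threshold are assumptions taken from the common ANLS formulation.

```python
from typing import List, Sequence


def levenshtein(a: str, b: str) -> int:
    """Edit distance computed with the classic two-row dynamic program."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def normalized_similarity(prediction: str, gold: str, threshold: float = 0.5) -> float:
    """Return 1 - normalized distance, or 0 when the distance reaches the threshold."""
    p, g = prediction.strip().lower(), gold.strip().lower()
    if not p and not g:
        return 1.0
    distance = levenshtein(p, g) / max(len(p), len(g))
    return 1.0 - distance if distance < threshold else 0.0


def anls(predictions: Sequence[str], gold_answers: Sequence[List[str]],
         threshold: float = 0.5) -> float:
    """Average, over questions, of the best similarity to any accepted gold answer."""
    scores = [
        max(normalized_similarity(pred, gold, threshold) for gold in golds)
        for pred, golds in zip(predictions, gold_answers)
    ]
    return sum(scores) / len(scores) if scores else 0.0
```

The threshold means that a prediction far from every gold answer scores zero instead of receiving partial credit, so near-miss spellings are rewarded while wrong answers are not.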

Citation

Please cite the following paper when using this dataset:

@misc{turski2025uncheckedoverlookedaddressingcheckbox,
      title={Unchecked and Overlooked: Addressing the Checkbox Blind Spot in Large Language Models with CheckboxQA},
      author={Michał Turski and Mateusz Chiliński and Łukasz Borchmann},
      year={2025},
      eprint={2504.10419},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2504.10419},
}

License

This dataset is intended for non-commercial research purposes and is provided under the CC BY-NC 4.0 license.

Please refer to DocumentCloud's terms of service at https://www.documentcloud.org/home/ for information regarding the rights to the underlying documents.