
license: apache-2.0

task_categories:

- text-classification

- text2text-generation

- text-generation

language:

- en

size_categories:

- n>1T

FineFineWeb: A Comprehensive Study on Fine-Grained Domain Web Corpus

arXiv: Coming Soon

Project Page: Coming Soon

Blog: Coming Soon

Data Statistics

| Domain (#tokens/#samples) | Iteration 1 | Iteration 2 | Iteration 3 | Total |

|---|---|---|---|---|

| medical | 140.03B | 813.46M | 4.97B | 145.81B |

| chemistry | 28.69B | 588.92M | 131.46M | 29.41B |

| systems_science | 25.41B | 11.64B | 180.39M | 37.22B |

| history | 45.27B | 1.56B | 1.74B | 48.57B |

| movie | 13.09B | 598.29M | 155.10M | 13.85B |

| design | 96.58B | 3.80B | 450.00M | 100.82B |

| fashion | 18.72B | 977.27M | 264.01M | 19.96B |

| celebrity | 10.29B | 745.94M | 4.48B | 15.52B |

| sociology | 76.34B | 3.59B | 8.88B | 88.82B |

| computer_science_and_technology | 194.46B | 3.95B | 4.76B | 203.16B |

| statistics | 19.59B | 1.15B | 1.70B | 22.44B |

| christianity | 47.72B | 403.68M | 732.55M | 48.86B |

| electronic_science | 31.18B | 8.01B | 482.62M | 39.67B |

| game | 43.47B | 2.36B | 2.68B | 48.51B |

| photo | 6.77B | 1.78B | 41.44M | 8.59B |

| biology | 88.58B | 371.29M | 838.82M | 89.79B |

| transportation_engineering | 13.61B | 6.61B | 972.50M | 21.19B |

| instrument_science | 6.36B | 2.17B | 165.43M | 8.70B |

| entertainment | 152.92B | 1.67B | 5.06B | 159.65B |

| travel | 78.87B | 584.78M | 957.26M | 80.41B |

| food | 59.88B | 136.32M | 1.01B | 61.03B |

| mathematics | 5.70B | 50.33M | 261.65M | 6.01B |

| mining_engineering | 8.35B | 206.05M | 529.02M | 9.08B |

| astronomy | 5.54B | 134.39M | 92.27M | 5.77B |

| artistic | 178.25B | 5.79B | 3.75B | 187.80B |

| automotive | 37.88B | 436.34M | 911.65M | 39.22B |

| music_and_dance | 15.92B | 745.94M | 4.48B | 15.52B |

| geography | 110.18B | 1.16B | 192.67M | 111.53B |

| ocean_science | 2.36B | 483.04M | 229.43M | 3.07B |

| textile_science | 2.59B | 3.00B | 94.56M | 5.69B |

| mechanical_engineering | 86.13B | 1.24B | 129.96M | 87.49B |

| topicality | 34.87M | 5.22M | / | 40.09M |

| psychology | 51.53B | 688.50M | 2.56B | 54.78B |

| philosophy | 47.99B | 121.26M | 335.77M | 48.44B |

| atmospheric_science | 2.84B | 102.04M | 259.25M | 3.21B |

| landscape_architecture | 3.07B | 2.93B | 53.24M | 15.12B |

| sports | 122.47B | 379.18M | 1.83B | 124.68B |

| politics | 79.52B | 253.26M | 930.96M | 80.70B |

| economics | 205.01B | 1.23B | 2.63B | 208.87B |

| environmental_science | 56.98B | 1.48B | 920.77M | 59.37B |

| agronomy | 13.08B | 1.02B | 229.04M | 14.33B |

| news | 328.47B | 12.37B | 11.34B | 352.18B |

| public_administration | 100.13B | 5.54B | 716.81M | 106.39B |

| pet | 12.58B | 154.14M | 307.28M | 13.05B |

| library | 59.00B | 5.01B | 36.56M | 64.05B |

| civil_engineering | 9.46B | 1.36B | 402.91M | 11.22B |

| literature | 73.37B | 7.22B | 69.75B | 150.34B |

| journalism_and_media_communication | 440.98B | 21.00B | 1.55B | 463.53B |

| nuclear_science | 495.51M | 79.89M | 78.79M | 654.19M |

| beauty | 19.75B | 709.15M | 1.01B | 21.47B |

| physics | 22.29B | 372.21M | 191.17M | 22.85B |

| painting | 374.41M | 429.63M | 96.57M | 900.61M |

| hobby | 150.23B | 42.78B | 44.05B | 237.06B |

| health | 191.20B | 427.93M | 18.43B | 210.06B |

| relationship | 21.87B | 3.69B | 129.60M | 25.69B |

| petroleum_and_natural_gas_engineering | 950.08M | 463.65M | 121.56M | 1.54B |

| optical_engineering | 2.54B | 253.06M | 263.99M | 3.06B |

| hydraulic_engineering | 57.36M | 75.40M | 3.65M | 136.41M |

| urban_planning | 12.13B | 2.93B | 53.24M | 15.12B |

| communication_engineering | 9.21B | 3.72B | 327.66M | 13.26B |

| aerospace | 6.15B | 261.63M | 352.68M | 6.77B |

| law | 128.58B | 455.19M | 2.38B | 131.42B |

| finance | 151.07B | 327.45M | 1.13B | 152.53B |

| drama_and_film | 20.44B | 11.22B | 206.27M | 31.86B |

| materials_science | 18.95B | 1.11B | 303.66M | 20.37B |

| weapons_science | 80.62M | 3.51B | 140.89M | 3.73B |

| gamble | 30.12B | 696.52M | 158.48M | 30.98B |

| Total | 4007.48B | 207.39B | 207.99B | 4422.86B |

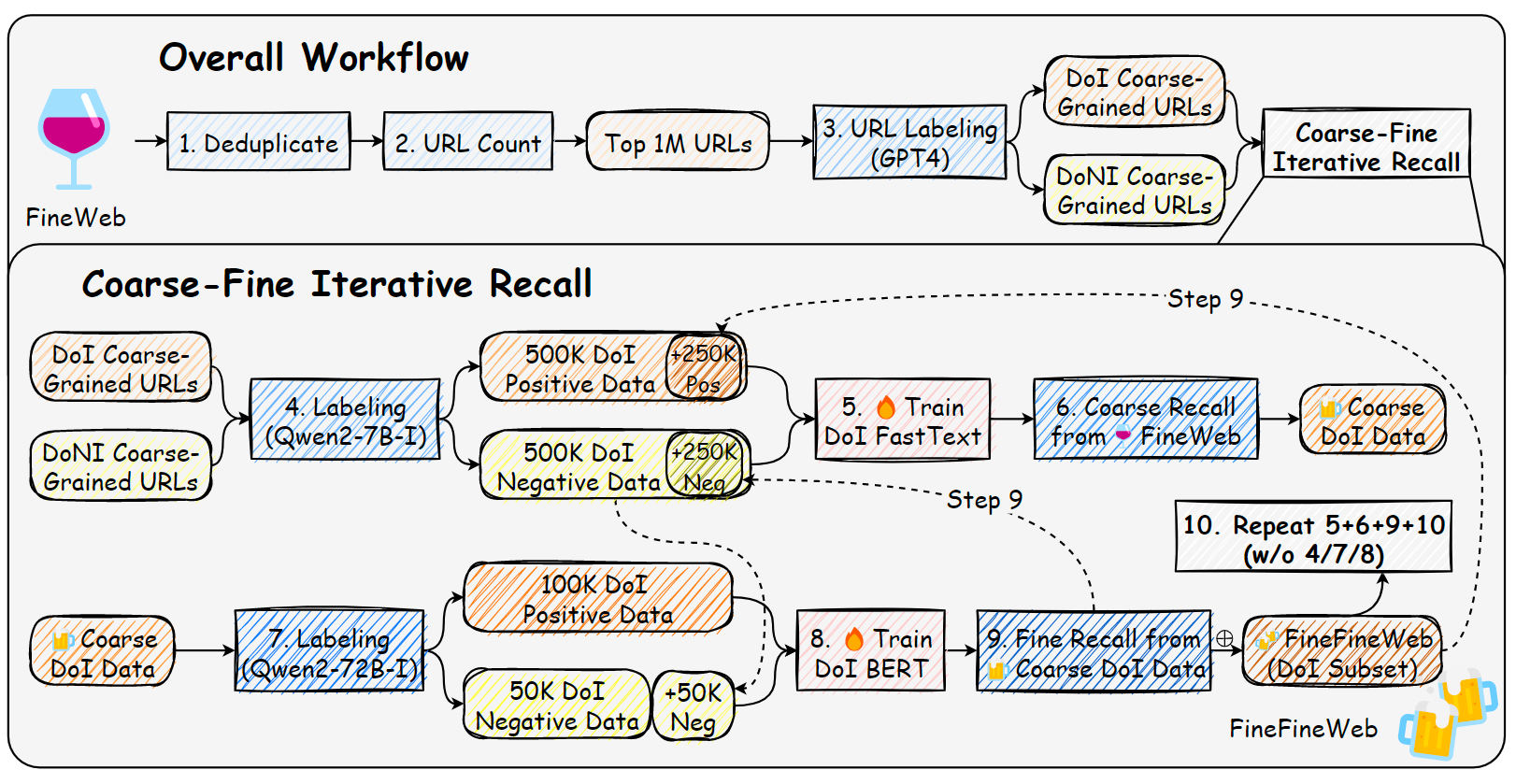

Data Construction Workflow

The data construction workflow can be summarized as follows:

Deduplicate: The FineWeb dataset is deduplicated using exact deduplication and MinHash techniques to remove redundant data.

URL Labeling: Root URLs from FineWeb are counted, and the top 1 million URLs are labeled using GPT-4. This step generates DoI (Domain-of-Interest) Coarse-Grained URLs and DoNI (Domain-of-Non-Interest) Coarse-Grained URLs as seed data sources.

Coarse Recall:

a. Based on the labeled root URLs, data is sampled for each domain.

b. The sampled data is labeled using Qwen2-7B-Instruct, producing 500K DoI Positive Data and 500K DoI Negative Data. For iterations after the first, each set of 500K samples consists of 250K samples drawn from the original seed data and 250K refined samples obtained after Fine Recall.

c. A binary FastText model is trained per domain using the labeled data.

d. The FastText model performs coarse recall on FineWeb, generating Coarse DoI Data.

Fine Recall:

a. The Coarse DoI Data is labeled using Qwen2-72B-Instruct to produce 100K DoI Positive Data and 50K DoI Negative Data, with the latter further augmented with 50K negative samples from earlier FastText training.

b. A BERT model is trained using this labeled data.

c. The BERT model performs fine recall on the Coarse DoI Data, producing a refined dataset, which is the DoI subset of FineFineWeb.

Coarse-Fine Recall Iteration: The workflow of coarse and fine recall iterates for 3 rounds with the following adjustments:

a. FastText is re-trained using updated seed data, which combines BERT-recalled samples, BERT-dropped samples, and previously labeled seed data.

b. The BERT model is kept frozen during subsequent iterations.

c. Steps for training FastText, coarse recall, and fine recall are repeated without re-labeling data with Qwen2-Instruct models.
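The Deduplicate step at the start of the workflow combines exact deduplication with MinHash. The following is a minimal sketch of that idea, not the pipeline's actual implementation: the shingle size, the number of hash functions, the similarity threshold, and every function name are assumptions chosen for illustration. The pairwise comparison is only practical for small inputs, and large-scale systems typically add locality-sensitive hashing on top of the signatures.

```python
import hashlib
import re

NUM_PERM = 64  # number of hash functions per signature (assumed value)
SHINGLE = 3    # words per shingle (assumed value)


def shingles(text, k=SHINGLE):
    """Return the set of k-word shingles of a document."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def seeded_hash(seed, token):
    """Deterministic 64-bit hash of a token under a given seed."""
    digest = hashlib.sha1(f"{seed}:{token}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "little")


def minhash_signature(text, num_perm=NUM_PERM):
    """For each seed, keep the minimum hash value over all shingles."""
    sh = shingles(text)
    return tuple(min(seeded_hash(seed, s) for s in sh) for seed in range(num_perm))


def estimated_jaccard(sig_a, sig_b):
    """The fraction of matching signature slots estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(docs, threshold=0.8):
    """Drop exact duplicates first, then near-duplicates above the threshold."""
    seen_exact = set()
    kept, kept_sigs = [], []
    for doc in docs:
        key = hashlib.sha256(doc.encode("utf-8")).hexdigest()
        if key in seen_exact:
            continue  # exact duplicate
        seen_exact.add(key)
        sig = minhash_signature(doc)
        if any(estimated_jaccard(sig, s) >= threshold for s in kept_sigs):
            continue  # near-duplicate of a document already kept
        kept.append(doc)
        kept_sigs.append(sig)
    return kept


# Toy input: one exact duplicate, one near-duplicate, one unrelated document.
base = " ".join(f"w{i}" for i in range(40))
near = base + " extra"
other = " ".join(f"x{i}" for i in range(40))
unique = deduplicate([base, base, near, other])
```

In this toy input, `near` shares 38 of its 39 shingles with `base`, so its estimated similarity falls well above the assumed threshold of 0.8 and only `base` and `other` are kept.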

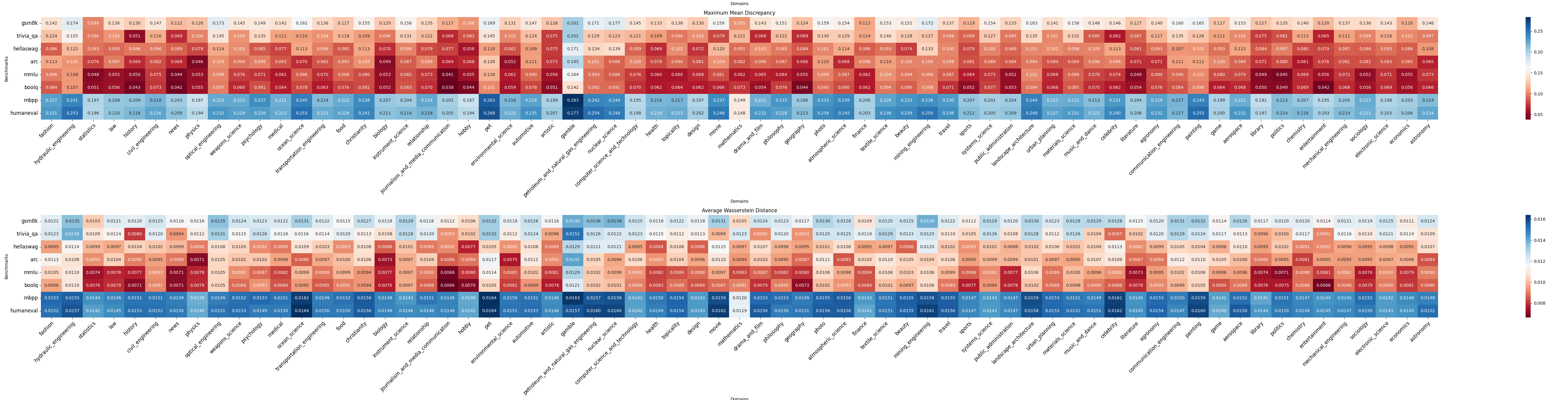
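The coarse-to-fine iteration described in the workflow can be illustrated with a toy loop. This is only a sketch under strong assumptions: `train_coarse` is a hypothetical keyword-based stand-in for the per-domain FastText model, `fine_model` is a hypothetical stand-in for the frozen BERT classifier, and the 250K/250K balancing of seed data is omitted.

```python
def train_coarse(positives, negatives):
    """Hypothetical stand-in for training a per-domain binary FastText model.

    It keeps the words seen in positive seeds but never in negative seeds
    and flags any document that contains one of them.
    """
    pos_vocab = {w for doc in positives for w in doc.split()}
    neg_vocab = {w for doc in negatives for w in doc.split()}
    keywords = pos_vocab - neg_vocab
    return lambda doc: any(w in keywords for w in doc.split())


def coarse_fine_recall(corpus, seed_pos, seed_neg, fine_model, rounds=3):
    """Run several rounds of coarse recall followed by fine recall."""
    positives, negatives = list(seed_pos), list(seed_neg)
    recalled = set()
    for _ in range(rounds):
        coarse_model = train_coarse(positives, negatives)  # retrained every round
        coarse_hits = [doc for doc in corpus if coarse_model(doc)]
        kept = [doc for doc in coarse_hits if fine_model(doc)]  # fine model stays frozen
        dropped = [doc for doc in coarse_hits if not fine_model(doc)]
        recalled.update(kept)
        # Updated seed data: original seeds plus fine-recalled and fine-dropped samples.
        positives = list(seed_pos) + kept
        negatives = list(seed_neg) + dropped
    return recalled


# Toy "frozen fine model": a document is in-domain if it has two medical terms.
MEDICAL = {"aspirin", "dosage", "patient", "surgery"}
fine_model = lambda doc: len(MEDICAL & set(doc.split())) >= 2

corpus = [
    "aspirin dosage for patient",
    "patient recovery after surgery",
    "surgery schedule football",
    "football score tonight",
    "patient football injury",
]
result = coarse_fine_recall(corpus, ["aspirin dosage"], ["football score"], fine_model)
```

In this toy run the first round recalls only the first document. The second round, retrained on the updated seeds, also reaches "patient recovery after surgery", which shows how retraining the coarse model can widen recall while the fine model filters out the off-domain hits.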

Domain-Domain Similarity Analysis

- Sample from the domain subsets in proportion to the sample size of each domain, drawing a total of 1 billion tokens.

- Use the BGE-M3 model to compute the embeddings of the samples in each domain subset, referred to as domain embeddings.

- Use the BGE-M3 model to compute the embeddings of the samples in each benchmark, referred to as benchmark embeddings (bench embeddings).

- Calculate the MMD distance and the Wasserstein distance between the domain embeddings and the benchmark embeddings.

The results above reveal the following observations:

- The two code-related benchmarks, MBPP and HumanEval, exhibit relatively large distances from nearly all domains, indicating that the proportion of code data in the training set is relatively small. Notably, their distance to the mathematics domain is comparatively smaller, suggesting a certain degree of overlap between mathematics data and code data.

- Benchmarks such as HellaSwag, ARC, MMLU, and BoolQ are close to almost all domains except the gamble domain. This indicates that the samples in these benchmarks draw on knowledge from many domains and are widely distributed.

- GSM8K and TriviaQA show significant discrepancies with a small number of domains, suggesting that the distribution differences between domains are more pronounced for samples involving grade-school mathematics and fact-based question answering. Some domains contain a substantial amount of this type of data, while others do not.

- The gamble domain exhibits substantial differences from other domains and has large distances from all benchmarks, indicating that pretraining data related to gambling provides limited benefits for these benchmarks.
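As a rough illustration of the distance computation between domain embeddings and benchmark embeddings, the sketch below estimates the squared MMD between two small sets of vectors. The source does not state which kernel or estimator was used, so the RBF kernel, the `gamma` value, and the biased estimator are assumptions. The vectors are toy 2-D points rather than BGE-M3 embeddings, and the Wasserstein distance is not covered here.

```python
import math


def rbf(u, v, gamma):
    """RBF kernel between two vectors (the kernel choice is an assumption)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def mean_kernel(xs, ys, gamma):
    """Average kernel value over all pairs drawn from xs and ys."""
    return sum(rbf(x, y, gamma) for x in xs for y in ys) / (len(xs) * len(ys))


def mmd_squared(xs, ys, gamma=1.0):
    """Biased estimate of squared MMD: E[k(x,x')] + E[k(y,y')] - 2 E[k(x,y)]."""
    return (
        mean_kernel(xs, xs, gamma)
        + mean_kernel(ys, ys, gamma)
        - 2 * mean_kernel(xs, ys, gamma)
    )


# Toy embeddings standing in for one domain and two benchmarks.
domain = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1)]
near_bench = [(0.05, 0.05), (0.1, 0.1), (0.0, 0.0)]
far_bench = [(3.0, 3.0), (3.1, 3.0), (3.0, 3.1)]

near_distance = mmd_squared(domain, near_bench)
far_distance = mmd_squared(domain, far_bench)
```

A benchmark whose embeddings lie far from a domain, as with the gamble domain in the observations above, would yield a larger value than one whose embeddings overlap with it.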

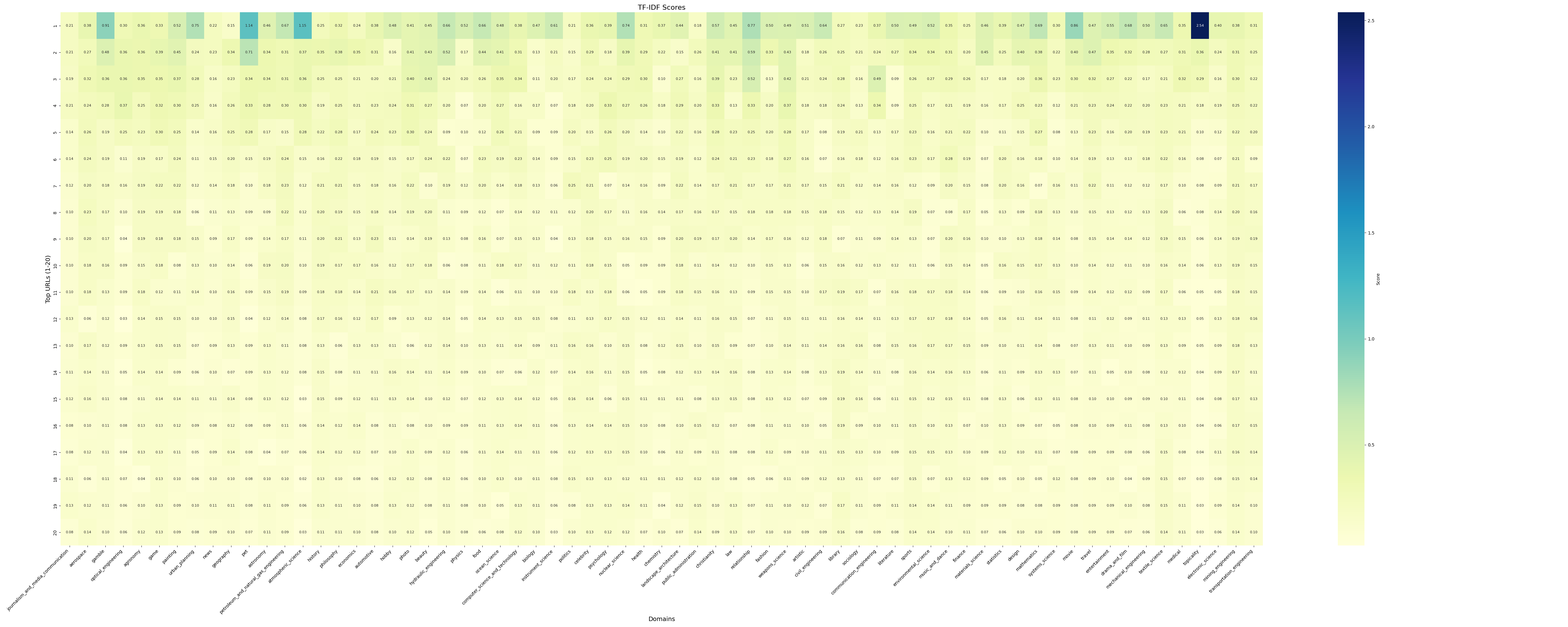

Domain-Domain Duplication

Let $D_1, D_2, \dots, D_N$ represent $N$ distinct domains, where we select the top-20 URLs for each domain $D_i$, denoted as $\{U_{i1}, U_{i2}, \dots, U_{i20}\}$. The total set of URLs across all domains is represented as $\mathcal{U}$, and the total number of URLs is $M = |\mathcal{U}|$.

For each URL $U_k \in \mathcal{U}$, the term frequency (TF) is defined as the proportion of $U_k$ in the total set of URLs:

$\text{TF}(U_k) = \frac{\text{count}(U_k)}{M}$

where $\text{count}(U_k)$ is the number of times $U_k$ appears in $\mathcal{U}$. Additionally, the document frequency $K_k$ of $U_k$ is the number of domains in which $U_k$ appears. Based on this, the inverse document frequency (IDF) is calculated as:

$\text{IDF}(U_k) = \log(\frac{N}{K_k})$

The TF-IDF value for each URL $U_{ij}$ in a specific domain $D_i$ is then computed as:

$\text{TF-IDF}(U_{ij}) = \text{TF}(U_{ij}) \times \text{IDF}(U_{ij})$

Using the TF-IDF values of all URLs within a domain, the domain-domain duplicate rate can be analyzed by comparing the distribution of TF-IDF values across domains. If a domain has many URLs with high TF-IDF values, it indicates that the domain’s URLs are relatively unique and significant within the entire set of URLs. Conversely, if a domain has many URLs with low TF-IDF values, it suggests that the domain's URLs are more common across other domains. Analyzing these values helps assess how similar or redundant a domain's content is in relation to others based on its URL composition.
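The definitions above translate into a few lines of code. The sketch below assumes that $\mathcal{U}$ is the concatenation of all per-domain URL lists, so a URL shared by several domains is counted once per domain. The function name and the toy URLs are hypothetical, and two URLs per domain are used instead of twenty to keep the example short.

```python
import math
from collections import Counter


def url_tfidf(top_urls):
    """Compute TF-IDF per URL; top_urls maps each domain to its top URLs."""
    n_domains = len(top_urls)                                   # N
    all_urls = [u for urls in top_urls.values() for u in urls]  # U, with repeats
    m = len(all_urls)                                           # M = |U|
    count = Counter(all_urls)                                   # count(U_k)
    doc_freq = Counter(u for urls in top_urls.values() for u in set(urls))  # K_k
    return {
        domain: {
            u: (count[u] / m) * math.log(n_domains / doc_freq[u]) for u in urls
        }
        for domain, urls in top_urls.items()
    }


# Toy input: three domains that all share one URL.
top_urls = {
    "pet": ["a.example", "shared.example"],
    "news": ["b.example", "shared.example"],
    "law": ["c.example", "shared.example"],
}
scores = url_tfidf(top_urls)
```

Here `shared.example` appears in all three domains, so its IDF is $\log(3/3) = 0$ and its TF-IDF is zero, while `a.example` appears in only one domain and scores $\frac{1}{6}\log 3$.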

As shown in the figure, most domains have low duplication rates, except for topicality, pet, and atmospheric science.

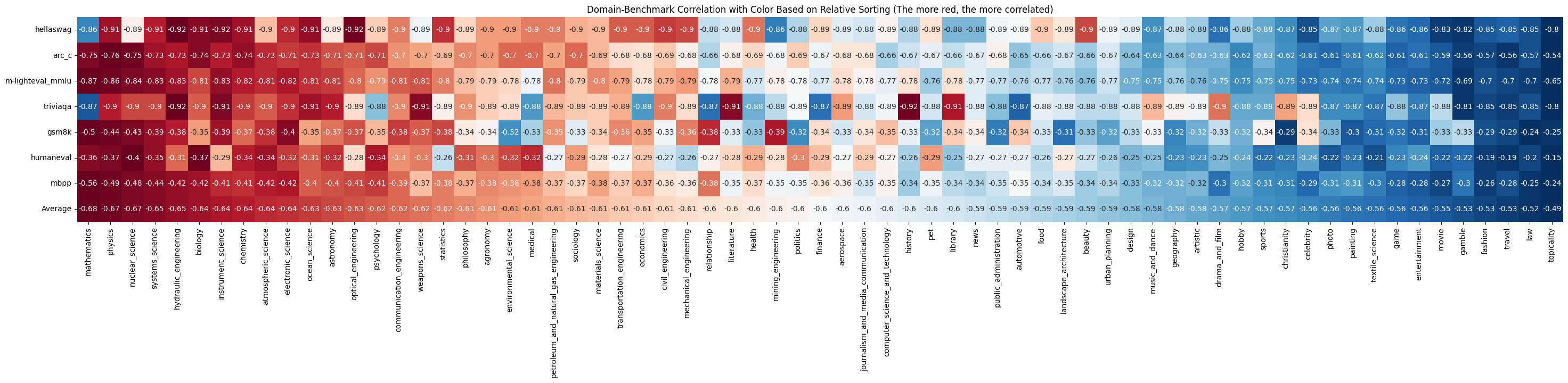

Domain-Benchmark BPC-Acc Correlation

Experimental method: Using 28 models (see the paper), we first calculate BPC for all domains to obtain a model ranking $R_D$. Similarly, we compute scores across all benchmarks to obtain a model ranking $R_M$. We then calculate the Spearman correlation between $R_D$ and $R_M$.

- For benchmarks like ARC, MMLU, GSM8K, HumanEval, and MBPP, STEM-related domains show higher correlation rankings, particularly mathematics, physics, and systems science.

- For TriviaQA, which emphasizes factual knowledge over reasoning, domains rich in world knowledge such as literature, history, and library science demonstrate higher correlation rankings.
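The correlation step of the experimental method can be sketched as a Spearman rank correlation between per-model BPC on a domain and per-model accuracy on a benchmark. The numbers below are made-up toy values for four hypothetical models, not results from the 28 models in the study. BPC is negated before ranking on the assumption that lower BPC and higher accuracy both mean a better model.

```python
import math


def average_ranks(values):
    """Rank values in ascending order, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def spearman(a, b):
    """Spearman correlation is the Pearson correlation of the ranks."""
    return pearson(average_ranks(a), average_ranks(b))


# Toy values for four hypothetical models.
domain_bpc = [0.9, 0.8, 0.7, 0.6]      # lower is better
benchmark_acc = [0.3, 0.5, 0.4, 0.6]   # higher is better

rho = spearman([-b for b in domain_bpc], benchmark_acc)
```

With these toy values two neighboring models swap places between the two rankings, which gives a correlation of 0.8.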

Bibtex

@misc{

title={FineFineWeb: A Comprehensive Study on Fine-grained Domain Web Corpus},

url={https://huggingface.co/datasets/m-a-p/FineFineWeb},

author = {M-A-P, Ge Zhang*, Xinrun Du*, Zhimiao Yu*, Zili Wang*, Zekun Wang, Shuyue Guo, Tianyu Zheng, Kang Zhu, Jerry Liu, Shawn Yue, Binbin Liu, Zhongyuan Peng, Yifan Yao, Jack Yang, Ziming Li, Bingni Zhang, Wenhu Chen, Minghao Liu, Tianyu Liu, Xiaohuan Zhou, Qian Liu, Taifeng Wang+, Wenhao Huang+},

publisher={huggingface},

version={v0.1.0},

month={December},

year={2024}

}