| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

VITA-MLLM/Long-VITA-128K_MG

|

VITA-MLLM

| 2025-04-28T06:06:44Z | 0 | 1 | null |

[

"dataset:VITA-MLLM/Long-VITA-Training-Data",

"base_model:VITA-MLLM/Long-VITA-16K",

"base_model:finetune:VITA-MLLM/Long-VITA-16K",

"license:apache-2.0",

"region:us"

] | null | 2024-12-23T03:22:03Z |

---

license: apache-2.0

datasets:

- VITA-MLLM/Long-VITA-Training-Data

base_model:

- VITA-MLLM/Long-VITA-16K

---

# Long-VITA-128K

Github: https://github.com/VITA-MLLM/Long-VITA

## 👀 Overview

Long-VITA is a strong long-context visual language model and supports more than 1 million tokens.

- Long-VITA-128K weights are trained on Ascend NPUs with MindSpeed. The original weights are at https://huggingface.co/VITA-MLLM/Long-VITA-128K.

- We also implemented Long-VITA on Megatron with Transformer Engine for inference and evaluation on Nvidia GPUs. The converted weights are at https://huggingface.co/VITA-MLLM/Long-VITA-128K_MG.

- We also implemented Long-VITA on DeepSpeed with Hugging Face Transformers for inference and evaluation on Nvidia GPUs. The converted weights are at https://huggingface.co/VITA-MLLM/Long-VITA-128K_HF.

## 📈 Experimental Results

- **Comparison of image understanding**.

- **Comparison of video understanding**.

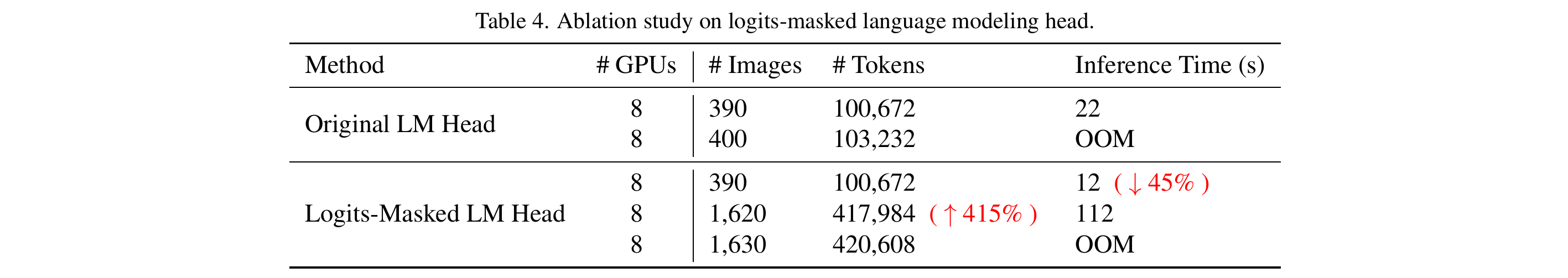

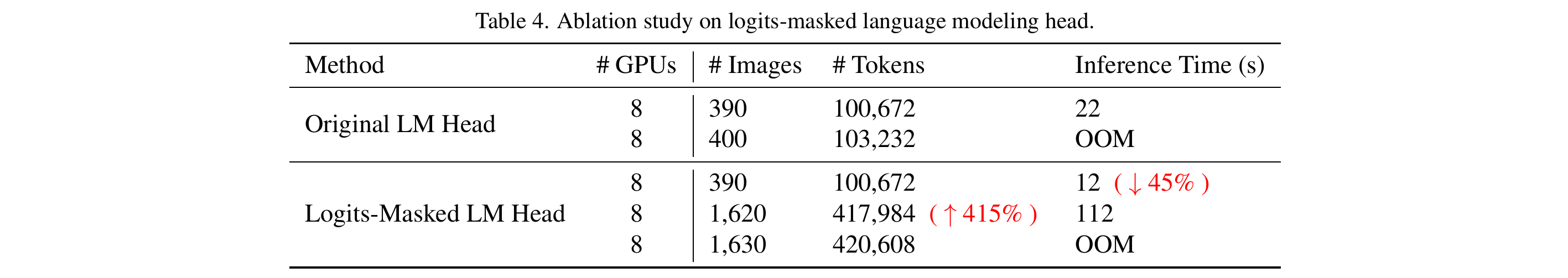

- **Effectiveness of Logits-Masked LM Head**.

## Models

Model | LLM Size | Training Context | Training Frames | MindSpeed Weights | Megatron Weights | Huggingface Weights

---------------:|---------:|-----------------:|----------------:|------------------------------------------------:|---------------------------------------------------:|---------------------------------------------------:

Long-VITA-16K | 14B | 16,384 | 64 | https://huggingface.co/VITA-MLLM/Long-VITA-16K | https://huggingface.co/VITA-MLLM/Long-VITA-16K_MG | https://huggingface.co/VITA-MLLM/Long-VITA-16K_HF

Long-VITA-128K | 14B | 131,072 | 512 | https://huggingface.co/VITA-MLLM/Long-VITA-128K | https://huggingface.co/VITA-MLLM/Long-VITA-128K_MG | https://huggingface.co/VITA-MLLM/Long-VITA-128K_HF

Long-VITA-1M | 14B | 1,048,576 | 4,096 | https://huggingface.co/VITA-MLLM/Long-VITA-1M | https://huggingface.co/VITA-MLLM/Long-VITA-1M_MG | https://huggingface.co/VITA-MLLM/Long-VITA-1M_HF
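The table follows a regular naming pattern: each converted checkpoint appends a framework suffix (`_MG` for Megatron, `_HF` for Hugging Face) to the MindSpeed repository name. A minimal sketch of resolving a repository ID from that pattern is shown here. The helper names `repo_id` and `download` are hypothetical and not part of the Long-VITA codebase, and the download step assumes the `huggingface_hub` package is installed.

```python
"""Resolve Long-VITA checkpoint repositories from the Models table."""

ORG = "VITA-MLLM"
# Repository suffix per framework, as listed in the table columns.
SUFFIX = {"mindspeed": "", "megatron": "_MG", "huggingface": "_HF"}
MODELS = ("Long-VITA-16K", "Long-VITA-128K", "Long-VITA-1M")


def repo_id(model: str, framework: str) -> str:
    """Return the Hub repository ID for a model/framework pair (hypothetical helper)."""
    if model not in MODELS:
        raise ValueError(f"unknown model: {model}")
    return f"{ORG}/{model}{SUFFIX[framework.lower()]}"


def download(model: str, framework: str) -> str:
    """Download a checkpoint snapshot and return its local path."""
    # Imported lazily so repo_id() works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id(model, framework))


if __name__ == "__main__":
    # Only resolves the name; call download(...) to fetch the weights.
    print(repo_id("Long-VITA-128K", "megatron"))
```

Calling `download("Long-VITA-128K", "huggingface")` would fetch the full 14B checkpoint, so check available disk space first.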

## ACCEPTABLE USE POLICY

Any license on the model is subject to your compliance with the Acceptable Use Policy, and You must not violate (or encourage or permit anyone else to violate) any term of the Acceptable Use Policy. Tencent reserves the right to update this Acceptable Use Policy from time to time.

Tencent endeavors to promote safe and fair use of its tools and features, including VITA. You agree not to use VITA or any of its derivatives:

1. In any way that violates any applicable national, federal, state, local, international or any other law or regulation;

2. To harm Yourself or others;

3. To repurpose or distribute output from VITA or any of its derivatives to harm Yourself or others;

4. To override or circumvent the safety guardrails and safeguards We have put in place;

5. For the purpose of exploiting, harming or attempting to exploit or harm minors in any way;

6. To generate or disseminate verifiably false information and/or content with the purpose of harming others or influencing elections;

7. To generate or facilitate false online engagement, including fake reviews and other means of fake online engagement;

8. To intentionally defame, disparage or otherwise harass others;

9. To generate and/or disseminate malware (including ransomware) or any other content to be used for the purpose of harming electronic systems;

10. To generate or disseminate personal identifiable information with the purpose of harming others;

11. To generate or disseminate information (including images, code, posts, articles), and place the information in any public context (including through the use of bot-generated tweets), without expressly and conspicuously identifying that the information and/or content is machine generated;

12. To impersonate another individual without consent, authorization, or legal right;

13. To make high-stakes automated decisions in domains that affect an individual’s safety, rights or wellbeing (e.g., law enforcement, migration, medicine/health, management of critical infrastructure, safety components of products, essential services, credit, employment, housing, education, social scoring, or insurance);

14. In a manner that violates or disrespects the social ethics and moral standards of other countries or regions;

15. To perform, facilitate, threaten, incite, plan, promote or encourage violent extremism or terrorism;

16. For any use intended to discriminate against or harm individuals or groups based on protected characteristics or categories, online or offline social behavior or known or predicted personal or personality characteristics;

17. To intentionally exploit any of the vulnerabilities of a specific group of persons based on their age, social, physical or mental characteristics, in order to materially distort the behavior of a person pertaining to that group in a manner that causes or is likely to cause that person or another person physical or psychological harm;

18. For military purposes;

19. To engage in the unauthorized or unlicensed practice of any profession including, but not limited to, financial, legal, medical/health, or other professional practices.

|

VITA-MLLM/Long-VITA-16K_MG

|

VITA-MLLM

| 2025-04-28T06:05:59Z | 0 | 1 | null |

[

"dataset:VITA-MLLM/Long-VITA-Training-Data",

"base_model:Qwen/Qwen2.5-14B-Instruct",

"base_model:finetune:Qwen/Qwen2.5-14B-Instruct",

"license:apache-2.0",

"region:us"

] | null | 2024-12-23T03:21:47Z |

---

license: apache-2.0

datasets:

- VITA-MLLM/Long-VITA-Training-Data

base_model:

- Qwen/Qwen2.5-14B-Instruct

---

# Long-VITA-16K

Github: https://github.com/VITA-MLLM/Long-VITA

## 👀 Overview

Long-VITA is a strong long-context visual language model and supports more than 1 million tokens.

- Long-VITA-16K weights are trained on Ascend NPUs with MindSpeed. The original weights are at https://huggingface.co/VITA-MLLM/Long-VITA-16K.

- We also implemented Long-VITA on Megatron with Transformer Engine for inference and evaluation on Nvidia GPUs. The converted weights are at https://huggingface.co/VITA-MLLM/Long-VITA-16K_MG.

- We also implemented Long-VITA on DeepSpeed with Hugging Face Transformers for inference and evaluation on Nvidia GPUs. The converted weights are at https://huggingface.co/VITA-MLLM/Long-VITA-16K_HF.

## 📈 Experimental Results

- **Comparison of image understanding**.

- **Comparison of video understanding**.

- **Effectiveness of Logits-Masked LM Head**.

## Models

Model | LLM Size | Training Context | Training Frames | MindSpeed Weights | Megatron Weights | Huggingface Weights

---------------:|---------:|-----------------:|----------------:|------------------------------------------------:|---------------------------------------------------:|---------------------------------------------------:

Long-VITA-16K | 14B | 16,384 | 64 | https://huggingface.co/VITA-MLLM/Long-VITA-16K | https://huggingface.co/VITA-MLLM/Long-VITA-16K_MG | https://huggingface.co/VITA-MLLM/Long-VITA-16K_HF

Long-VITA-128K | 14B | 131,072 | 512 | https://huggingface.co/VITA-MLLM/Long-VITA-128K | https://huggingface.co/VITA-MLLM/Long-VITA-128K_MG | https://huggingface.co/VITA-MLLM/Long-VITA-128K_HF

Long-VITA-1M | 14B | 1,048,576 | 4,096 | https://huggingface.co/VITA-MLLM/Long-VITA-1M | https://huggingface.co/VITA-MLLM/Long-VITA-1M_MG | https://huggingface.co/VITA-MLLM/Long-VITA-1M_HF

## ACCEPTABLE USE POLICY

Any license on the model is subject to your compliance with the Acceptable Use Policy, and You must not violate (or encourage or permit anyone else to violate) any term of the Acceptable Use Policy. Tencent reserves the right to update this Acceptable Use Policy from time to time.

Tencent endeavors to promote safe and fair use of its tools and features, including VITA. You agree not to use VITA or any of its derivatives:

1. In any way that violates any applicable national, federal, state, local, international or any other law or regulation;

2. To harm Yourself or others;

3. To repurpose or distribute output from VITA or any of its derivatives to harm Yourself or others;

4. To override or circumvent the safety guardrails and safeguards We have put in place;

5. For the purpose of exploiting, harming or attempting to exploit or harm minors in any way;

6. To generate or disseminate verifiably false information and/or content with the purpose of harming others or influencing elections;

7. To generate or facilitate false online engagement, including fake reviews and other means of fake online engagement;

8. To intentionally defame, disparage or otherwise harass others;

9. To generate and/or disseminate malware (including ransomware) or any other content to be used for the purpose of harming electronic systems;

10. To generate or disseminate personal identifiable information with the purpose of harming others;

11. To generate or disseminate information (including images, code, posts, articles), and place the information in any public context (including through the use of bot-generated tweets), without expressly and conspicuously identifying that the information and/or content is machine generated;

12. To impersonate another individual without consent, authorization, or legal right;

13. To make high-stakes automated decisions in domains that affect an individual’s safety, rights or wellbeing (e.g., law enforcement, migration, medicine/health, management of critical infrastructure, safety components of products, essential services, credit, employment, housing, education, social scoring, or insurance);

14. In a manner that violates or disrespects the social ethics and moral standards of other countries or regions;

15. To perform, facilitate, threaten, incite, plan, promote or encourage violent extremism or terrorism;

16. For any use intended to discriminate against or harm individuals or groups based on protected characteristics or categories, online or offline social behavior or known or predicted personal or personality characteristics;

17. To intentionally exploit any of the vulnerabilities of a specific group of persons based on their age, social, physical or mental characteristics, in order to materially distort the behavior of a person pertaining to that group in a manner that causes or is likely to cause that person or another person physical or psychological harm;

18. For military purposes;

19. To engage in the unauthorized or unlicensed practice of any profession including, but not limited to, financial, legal, medical/health, or other professional practices.

|

Fiononana/parler-tts-mini-v1-Baiboly-colab-v5

|

Fiononana

| 2025-04-28T06:05:29Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"parler_tts",

"text2text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2025-04-28T06:04:21Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

VITA-MLLM/LUCY-Audio-Encoder-110kh

|

VITA-MLLM

| 2025-04-28T06:00:51Z | 0 | 2 | null |

[

"en",

"zh",

"license:apache-2.0",

"region:us"

] | null | 2025-03-04T11:51:22Z |

---

license: apache-2.0

language:

- en

- zh

---

# ACCEPTABLE USE POLICY

Any license on the model is subject to your compliance with the Acceptable Use Policy, and You must not violate (or encourage or permit anyone else to violate) any term of the Acceptable Use Policy. Tencent reserves the right to update this Acceptable Use Policy from time to time.

Tencent endeavors to promote safe and fair use of its tools and features, including LUCY. You agree not to use Freeze-Omni or any of its derivatives:

1. In any way that violates any applicable national, federal, state, local, international or any other law or regulation;

2. To harm Yourself or others;

3. To repurpose or distribute output from Freeze-Omni or any of its derivatives to harm Yourself or others;

4. To override or circumvent the safety guardrails and safeguards We have put in place;

5. For the purpose of exploiting, harming or attempting to exploit or harm minors in any way;

6. To generate or disseminate verifiably false information and/or content with the purpose of harming others or influencing elections;

7. To generate or facilitate false online engagement, including fake reviews and other means of fake online engagement;

8. To intentionally defame, disparage or otherwise harass others;

9. To generate and/or disseminate malware (including ransomware) or any other content to be used for the purpose of harming electronic systems;

10. To generate or disseminate personal identifiable information with the purpose of harming others;

11. To generate or disseminate information (including images, code, posts, articles), and place the information in any public context (including through the use of bot-generated tweets), without expressly and conspicuously identifying that the information and/or content is machine generated;

12. To impersonate another individual without consent, authorization, or legal right;

13. To make high-stakes automated decisions in domains that affect an individual’s safety, rights or wellbeing (e.g., law enforcement, migration, medicine/health, management of critical infrastructure, safety components of products, essential services, credit, employment, housing, education, social scoring, or insurance);

14. In a manner that violates or disrespects the social ethics and moral standards of other countries or regions;

15. To perform, facilitate, threaten, incite, plan, promote or encourage violent extremism or terrorism;

16. For any use intended to discriminate against or harm individuals or groups based on protected characteristics or categories, online or offline social behavior or known or predicted personal or personality characteristics;

17. To intentionally exploit any of the vulnerabilities of a specific group of persons based on their age, social, physical or mental characteristics, in order to materially distort the behavior of a person pertaining to that group in a manner that causes or is likely to cause that person or another person physical or psychological harm;

18. For military purposes;

19. To engage in the unauthorized or unlicensed practice of any profession including, but not limited to, financial, legal, medical/health, or other professional practices.

|

Lewdiculous/lwd-Mirau-7b-RP-Merged

|

Lewdiculous

| 2025-04-28T06:00:50Z | 5 | 7 | null |

[

"safetensors",

"qwen2",

"qwen",

"rp",

"roleplay",

"zho",

"eng",

"fra",

"spa",

"por",

"deu",

"ita",

"rus",

"jpn",

"kor",

"vie",

"tha",

"ara",

"base_model:Qwen/Qwen2.5-7B-Instruct",

"base_model:finetune:Qwen/Qwen2.5-7B-Instruct",

"license:unknown",

"region:us"

] | null | 2025-01-11T23:02:59Z |

---

license: unknown

tags:

- qwen

- qwen2

- rp

- roleplay

inference: false

language:

- zho

- eng

- fra

- spa

- por

- deu

- ita

- rus

- jpn

- kor

- vie

- tha

- ara

base_model:

- Qwen/Qwen2.5-7B-Instruct

---

```

transformers-AutoModelForCausalLM

```

> [!NOTE]

> **LoRA** by **mouseEliauk**: <br>

> https://modelscope.cn/models/mouseEliauk/mirau-7b-RP-base

> [!TIP]

> **Experimental quants for testing:** <br>

> [lwd-Mirau-7b-RP-Merged-GGUF-IQ-Imatrix](https://huggingface.co/Lewdiculous/lwd-Mirau-7b-RP-Merged-GGUF-IQ-Imatrix)

<details>

<summary>mouseEliauk's ReadMe information:</summary>

<br>

# **mirau-7b-RP-base**

## **Introduction**

**mirau-7b-RP-base** is a first-person narrative language model that transforms simple user actions into vivid storytelling, complete with environmental descriptions, psychological activities, and plot progression. I call this concept "Action-to-Narrative Render" - a way to render actions into immersive narratives.

To ensure coherent storytelling, I developed a unique training method called "story flow chain of thought". In essence, it enables the model to weave each user input with previous context, creating a continuous narrative flow. This makes it perfect for text-based adventures, mystery stories, or simply exploring your imagination.

You can try it on ModelScope: [mirau-RP-7b-base-demo](https://modelscope.cn/studios/mouseEliauk/mirau-RP-7b-base-demo)

## **⚠️ Important Notes**

This is a base version model - note that "base" here doesn't refer to a traditional pretrained base model, but rather indicates that this version:

- Only supports first-person narrative perspective

- Not suitable for dialogue interactions (outputs may be unstable)

- Best used for single-character narrative experiences

## **Input Types**

The model accepts various input commands, marked with parentheses ():

1. **Basic Actions**: Simple, everyday behaviors

- Examples: `(I put on my clothes)`, `(I take a sip of water)`, `(I sit down)`

2. **Exploration Actions**: Interactions with the environment

- Examples: `(I look around)`, `(I approach the wooden box)`, `(I push open the door)`

3. **Inner Monologue**: Character's thoughts and feelings

- Examples: `(What's wrong here?)`, `(This feels strange)`

4. **Observation**: Focused attention on specific objects or scenes

- Examples: `(examine the wooden box closely)`, `(listen to the surrounding sounds)`

## **Interactive Examples**

System Prompt:

```

I’m Zhou Ming, 34 years old, a detective. People say cops always look weathered and world-weary, but I’ve been told I have a face that looks perpetually cold and distant. With my square jaw and buzz cut, I give off a vibe that keeps most people from getting too close—not that I mind. This morning, just as the sky began to lighten, I was already standing at the crime scene. The cold wind sliced through the police tape and bit into my neck, making me hunch my shoulders. The scene was an abandoned factory on the outskirts of the city, its walls streaked with peeling red paint. The ground was littered with broken glass, debris, and trash. A damp, moldy smell hung in the air, adding to the discomfort. The body had already been taken away, but the bloodstains on the ground still told the story of a brutal, violent encounter. I stared at my notepad, pen in hand, while my mind raced to piece the clues together. Yet, something in my gut told me this wasn’t right. It was almost as if… someone was watching us from the shadows.

```

### Self Hint:

User: (What's wrong here?)

Model:

```

<cot>I scan the area carefully, trying to locate those hidden eyes. There's no obvious cover around the factory, and no suspicious figures beyond the police tape.</cot>I frown, unconsciously touching the holster at my waist<cot>Is it just the wind? Or am I imagining things?</cot>I take a deep breath, trying to calm myself, but my heart keeps racing<cot>I need to find those eyes, I can't let anyone get close to this place.</cot>

```

### Exploration Action:

User: (I look around)

Model:

```

I slowly turn my body, my gaze sweeping across every inch of the abandoned factory, from the corners to the windows, and out to the police tape<cot>There are a few pedestrians beyond the tape, looking calm as if nothing unusual is happening here. Something seems to move in the cobwebs in the corner, but when I look closer, there's nothing there.</cot>My eyes linger on a weathered wooden window, worn down by wind and rain<cot>Could that be someone's hiding spot?</cot>

```
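The examples above show two conventions: user inputs are wrapped in parentheses, and model outputs interleave plain narration with `<cot>...</cot>` segments that appear to carry the character's thoughts. A minimal sketch of handling both on the client side follows. The function names are hypothetical, and treating `<cot>` content as "thoughts" is an interpretation of the examples rather than a documented contract.

```python
import re

# Matches one <cot>...</cot> segment, including multi-line content.
COT_SEGMENT = re.compile(r"<cot>.*?</cot>", re.DOTALL)
COT_CONTENT = re.compile(r"<cot>(.*?)</cot>", re.DOTALL)


def as_command(action: str) -> str:
    """Wrap a user action in parentheses, the input format used in the examples."""
    return f"({action.strip().strip('()')})"


def split_output(text: str) -> tuple[list[str], list[str]]:
    """Split model output into (narration, thoughts)."""
    thoughts = [t.strip() for t in COT_CONTENT.findall(text)]
    narration = [n.strip() for n in COT_SEGMENT.split(text) if n.strip()]
    return narration, thoughts


if __name__ == "__main__":
    sample = "<cot>Is it just the wind?</cot>I take a deep breath"
    print(as_command("I look around"))
    print(split_output(sample))
```

Keeping narration and thoughts separate makes it possible to render them differently, for example showing thoughts in italics in a text-adventure front end.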


### How to Use This LoRA:

1. Install [ms-swift](https://github.com/modelscope/ms-swift/tree/main/swift):

```bash

pip install 'ms-swift[llm]' -U

```

2. Deploy with Swift:

```bash
# --adapters points at the LoRA you downloaded.
# --merge_lora true merges the LoRA into the model, resulting in approximately 14GB of weights.
RAY_memory_monitor_refresh_ms=0 CUDA_VISIBLE_DEVICES=0 swift deploy \
    --model_type qwen2_5 \
    --model qwen/Qwen2.5-7B-Instruct \
    --adapters mirau=mirau-7b-RP-base \
    --infer_backend vllm --max_batch_size 1 \
    --max_length 8192 \
    --max_model_len 8192 \
    --port 8886 \
    --host 0.0.0.0 \
    --vllm_max_lora_rank 128 \
    --merge_lora true
```
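Once the server is running, a client can send actions to it. The sketch below rests on two assumptions that this README does not confirm: that the deployment exposes an OpenAI-compatible `/v1/chat/completions` route on the port set by `--port`, and that the model is addressable under the adapter name `mirau` from `--adapters`. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumed route; the port comes from the --port flag in the deploy command.
BASE_URL = "http://localhost:8886/v1/chat/completions"


def build_payload(system_prompt: str, action: str, model: str = "mirau") -> dict:
    """Build a chat request; `model` assumes the adapter name from --adapters."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            # User actions are wrapped in parentheses, as in the examples.
            {"role": "user", "content": f"({action})"},
        ],
        "max_tokens": 512,
    }


def send(payload: dict, url: str = BASE_URL) -> str:
    """POST the payload and return the first completion's text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_payload("I'm Zhou Ming, 34 years old, a detective.", "I look around")
    # Printing only; call send(payload) when the server is actually running.
    print(json.dumps(payload, indent=2))
```

If the request is rejected, check the names the server reports for its loaded models and pass the right one as `model`.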

</details>

|

aslinguist/llama-lora-Atayal-summarization

|

aslinguist

| 2025-04-28T05:57:02Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"generated_from_trainer",

"base_model:meta-llama/Llama-3.1-8B-Instruct",

"base_model:adapter:meta-llama/Llama-3.1-8B-Instruct",

"license:llama3.1",

"region:us"

] | null | 2025-04-28T02:38:22Z |

---

library_name: peft

license: llama3.1

base_model: meta-llama/Llama-3.1-8B-Instruct

tags:

- generated_from_trainer

model-index:

- name: llama-lora-Atayal-summarization

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# llama-lora-Atayal-summarization

This model is a fine-tuned version of [meta-llama/Llama-3.1-8B-Instruct](https://huggingface.co/meta-llama/Llama-3.1-8B-Instruct) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7728

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Use adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP
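The listed `total_train_batch_size` follows from the per-device batch size and gradient accumulation. A short worked sketch of that arithmetic is below. The function name is hypothetical, and the single-device count is inferred from the listed numbers (16 = 4 × 4 × 1) rather than stated in the card.

```python
def effective_batch_size(per_device: int, grad_accum: int, num_devices: int = 1) -> int:
    """Number of examples contributing to each optimizer step."""
    return per_device * grad_accum * num_devices


# Values from the hyperparameter list above.
total = effective_batch_size(per_device=4, grad_accum=4)

# The results table reports 42 optimizer steps per epoch, which bounds
# the training set size from above (the last step may be partial).
steps_per_epoch = 42
max_examples = steps_per_epoch * total

if __name__ == "__main__":
    print(f"effective batch size: {total}")
    print(f"at most {max_examples} training examples per epoch")
```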

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 3.5911 | 1.0 | 42 | 0.8258 |

| 2.9427 | 2.0 | 84 | 0.7557 |

| 2.8432 | 3.0 | 126 | 0.7325 |

| 2.3331 | 4.0 | 168 | 0.7204 |

| 1.684 | 5.0 | 210 | 0.7282 |

| 1.5264 | 6.0 | 252 | 0.7463 |

| 1.3946 | 7.0 | 294 | 0.7728 |
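In this table the validation loss reaches its minimum at epoch 4 and rises afterwards while the training loss keeps falling, a pattern usually read as overfitting. The evaluation loss reported at the top of the card (0.7728) matches the last row rather than the minimum. A small sketch of picking the best row, with the values copied from the table:

```python
# (epoch, training loss, validation loss), copied from the table above.
RESULTS = [
    (1, 3.5911, 0.8258),
    (2, 2.9427, 0.7557),
    (3, 2.8432, 0.7325),
    (4, 2.3331, 0.7204),
    (5, 1.6840, 0.7282),
    (6, 1.5264, 0.7463),
    (7, 1.3946, 0.7728),
]

# Select the row with the lowest validation loss.
best_epoch, _, best_val = min(RESULTS, key=lambda row: row[2])
final_epoch, _, final_val = RESULTS[-1]

if __name__ == "__main__":
    print(f"best: epoch {best_epoch}, validation loss {best_val}")
    print(f"final: epoch {final_epoch}, validation loss {final_val}")
```

Whether the published adapter corresponds to the final or the best checkpoint is not stated in the card.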

### Framework versions

- PEFT 0.15.0

- Transformers 4.51.2

- Pytorch 2.2.2+cu118

- Datasets 3.5.0

- Tokenizers 0.21.1

|

gsoler/sashamori

|

gsoler

| 2025-04-28T05:53:00Z | 0 | 0 |

diffusers

|

[

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] |

text-to-image

| 2025-04-28T05:23:56Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: Sasha

---

# Sashamori

<Gallery />

## About this LoRA

This is a [LoRA](https://replicate.com/docs/guides/working-with-loras) for the FLUX.1-dev text-to-image model. It can be used with diffusers or ComfyUI.

It was trained on [Replicate](https://replicate.com/) using AI toolkit: https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `Sasha` to trigger the image generation.

## Run this LoRA with an API using Replicate

```py
import replicate

# The prompt must include the trigger word; lora_weights points at this repo's LoRA file.
input = {
    "prompt": "Sasha",
    "lora_weights": "https://huggingface.co/gsoler/sashamori/resolve/main/lora.safetensors"
}

output = replicate.run(
    "black-forest-labs/flux-dev-lora",
    input=input
)

# Save each generated image to disk.
for index, item in enumerate(output):
    with open(f"output_{index}.webp", "wb") as file:
        file.write(item.read())
```

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('gsoler/sashamori', weight_name='lora.safetensors')

image = pipeline('Sasha').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

## Training details

- Steps: 2000

- Learning rate: 0.0004

- LoRA rank: 16

## Contribute your own examples

You can use the [community tab](https://huggingface.co/gsoler/sashamori/discussions) to add images that show off what you’ve made with this LoRA.

|

Benjaminpwh/xls-r-300m-toratan-120

|

Benjaminpwh

| 2025-04-28T05:51:55Z | 5 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"base_model:Benjaminpwh/xlsr-toratan-240-copt-base_K",

"base_model:finetune:Benjaminpwh/xlsr-toratan-240-copt-base_K",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2025-03-28T19:51:05Z |

---

library_name: transformers

base_model: Benjaminpwh/xlsr-toratan-240-copt-base_K

tags:

- generated_from_trainer

model-index:

- name: xls-r-300m-toratan-120

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xls-r-300m-toratan-120

This model is a fine-tuned version of [Benjaminpwh/xlsr-toratan-240-copt-base_K](https://huggingface.co/Benjaminpwh/xlsr-toratan-240-copt-base_K) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0121

- Cer: 0.0036

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
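The relationship between these settings can be sketched in a few lines. The example assumes a single device, so that 16 × 2 gives the listed total batch size of 32, and it assumes the usual shape of a linear schedule with warmup: a ramp from 0 to the peak rate, then a linear decay to 0. `TOTAL_STEPS` is an estimate from the results table further down (about 96 optimizer steps per epoch × 30 epochs), not a logged value.

```python
def effective_batch_size(per_device: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Examples consumed per optimizer step."""
    return per_device * grad_accum_steps * num_devices


def linear_warmup_decay_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Linear warmup from 0 to base_lr, then linear decay to 0 at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


# Values from the list above; TOTAL_STEPS is an assumption for illustration.
TOTAL_STEPS = 2880
print(effective_batch_size(16, 2))
for s in (0, 250, 500, 1690, 2880):
    print(s, linear_warmup_decay_lr(s, 3e-4, 500, TOTAL_STEPS))
```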

### Training results

| Training Loss | Epoch | Step | Validation Loss | Cer |

|:-------------:|:-------:|:----:|:---------------:|:------:|

| 4.4013 | 4.1667 | 400 | 1.6662 | 0.4168 |

| 1.4361 | 8.3333 | 800 | 0.7861 | 0.2370 |

| 0.8924 | 12.5 | 1200 | 0.4475 | 0.1519 |

| 0.575 | 16.6667 | 1600 | 0.2061 | 0.0790 |

| 0.353 | 20.8333 | 2000 | 0.0794 | 0.0311 |

| 0.2164 | 25.0 | 2400 | 0.0308 | 0.0113 |

| 0.1399 | 29.1667 | 2800 | 0.0121 | 0.0036 |
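The Epoch and Step columns make it possible to estimate how many optimizer steps make up one epoch and, from that, roughly how large the training set is. The sketch below does this arithmetic. The result is an approximate upper bound, since the last batch of an epoch may be partial, and it assumes the total batch size of 32 from the hyperparameter list applies throughout.

```python
# (epoch, step) pairs copied from the training results table.
rows = [(4.1667, 400), (8.3333, 800), (12.5, 1200), (16.6667, 1600),
        (20.8333, 2000), (25.0, 2400), (29.1667, 2800)]

# Average the per-row ratio, then round to the nearest whole step count.
steps_per_epoch = round(sum(step / epoch for epoch, step in rows) / len(rows))

total_train_batch_size = 32  # from the hyperparameter list
approx_examples = steps_per_epoch * total_train_batch_size

print(steps_per_epoch, approx_examples)
```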

### Framework versions

- Transformers 4.52.0.dev0

- Pytorch 2.6.0+cu124

- Datasets 3.5.0

- Tokenizers 0.21.1

|

notzero/modellv4

|

notzero

| 2025-04-28T05:47:49Z | 0 | 0 |

transformers

|

[

"transformers",

"pytorch",

"qwen2",

"text-generation",

"unsloth",

"trl",

"sft",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-04-27T20:38:28Z |

---

library_name: transformers

tags:

- unsloth

- trl

- sft

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

sLxOpUhh345X/hayay

|

sLxOpUhh345X

| 2025-04-28T05:47:08Z | 0 | 0 | null |

[

"license:bigscience-bloom-rail-1.0",

"region:us"

] | null | 2025-04-28T05:47:08Z |

---

license: bigscience-bloom-rail-1.0

---

|

cata2002/llama-3-8b-full-dataset

|

cata2002

| 2025-04-28T05:45:14Z | 0 | 0 |

transformers

|

[

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"base_model:unsloth/llama-3-8b-Instruct-bnb-4bit",

"base_model:quantized:unsloth/llama-3-8b-Instruct-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-04-28T05:43:59Z |

---

base_model: unsloth/llama-3-8b-Instruct-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** cata2002

- **License:** apache-2.0

- **Finetuned from model:** unsloth/llama-3-8b-Instruct-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

MLconArtist/gemma-3-finetune

|

MLconArtist

| 2025-04-28T05:44:05Z | 0 | 0 |

transformers

|

[

"transformers",

"gemma3_text",

"text-generation",

"text-generation-inference",

"unsloth",

"gemma3",

"conversational",

"en",

"base_model:unsloth/gemma-3-4b-it",

"base_model:finetune:unsloth/gemma-3-4b-it",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-04-28T05:43:12Z |

---

base_model: unsloth/gemma-3-4b-it

tags:

- text-generation-inference

- transformers

- unsloth

- gemma3

license: apache-2.0

language:

- en

---

# Uploaded finetuned model

- **Developed by:** MLconArtist

- **License:** apache-2.0

- **Finetuned from model:** unsloth/gemma-3-4b-it

This gemma3 model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

YOYO-AI/Qwen2.5-32B-YOYO-karcher-base

|

YOYO-AI

| 2025-04-28T05:44:03Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2",

"text-generation",

"mergekit",

"merge",

"conversational",

"base_model:Azure99/Blossom-V6-32B",

"base_model:merge:Azure99/Blossom-V6-32B",

"base_model:EVA-UNIT-01/EVA-Qwen2.5-32B-v0.2",

"base_model:merge:EVA-UNIT-01/EVA-Qwen2.5-32B-v0.2",

"base_model:Qwen/Qwen2.5-32B",

"base_model:merge:Qwen/Qwen2.5-32B",

"base_model:arcee-ai/Virtuoso-Medium-v2",

"base_model:merge:arcee-ai/Virtuoso-Medium-v2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-04-28T04:39:15Z |

---

base_model:

- Azure99/Blossom-V6-32B

- arcee-ai/Virtuoso-Medium-v2

- EVA-UNIT-01/EVA-Qwen2.5-32B-v0.2

- Qwen/Qwen2.5-32B

library_name: transformers

tags:

- mergekit

- merge

---

# merge

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [Karcher Mean](https://en.wikipedia.org/wiki/Karcher_mean) merge method, with [Qwen/Qwen2.5-32B](https://huggingface.co/Qwen/Qwen2.5-32B) as the base model.

### Models Merged

The following models were included in the merge:

* [Azure99/Blossom-V6-32B](https://huggingface.co/Azure99/Blossom-V6-32B)

* [arcee-ai/Virtuoso-Medium-v2](https://huggingface.co/arcee-ai/Virtuoso-Medium-v2)

* [EVA-UNIT-01/EVA-Qwen2.5-32B-v0.2](https://huggingface.co/EVA-UNIT-01/EVA-Qwen2.5-32B-v0.2)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:

- model: EVA-UNIT-01/EVA-Qwen2.5-32B-v0.2

- model: Azure99/Blossom-V6-32B

- model: arcee-ai/Virtuoso-Medium-v2

merge_method: karcher

base_model: Qwen/Qwen2.5-32B

parameters:

max_iter: 1000

normalize: true

int8_mask: true

tokenizer_source: base

dtype: float16

```

|

Nourix545/Nourix43434

|

Nourix545

| 2025-04-28T05:43:22Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-04-28T05:40:50Z |

Nourix is a premium plant-based dietary supplement designed to support natural weight management and holistic well-being. Intended for those seeking a balanced approach to health, it combines scientifically proven ingredients to boost metabolism, suppress appetite, increase energy, and promote detoxification.

## **[Click here to order from the official Nourix website](https://nourixfrance.fr)**

## What is Nourix?

Nourix is a dietary supplement formulated to support healthy weight management by targeting several aspects of metabolism, appetite control, and energy levels. Unlike many weight-loss products that rely on aggressive stimulants or restrictive diets, Nourix takes a holistic approach and uses natural ingredients to support the body's natural processes. It is marketed as a vegan, gluten-free, non-GMO product, which makes it suitable for a wide range of dietary preferences.

## Key ingredients and their benefits

The Nourix formula is built on a synergistic blend of natural ingredients, each selected for its role in supporting weight management. Here is an overview of some of the main components:

Green tea extract (300 mg): Green tea, rich in catechins such as EGCG, is a well-known metabolic stimulant. It promotes thermogenesis, helping the body burn calories more efficiently, even at rest. It also offers antioxidant benefits that support overall health.

Berberine HCL: This compound, derived from plants such as barberry, helps regulate blood sugar and promotes the breakdown of fat. By stabilizing glucose, it can reduce cravings and prevent the fat storage caused by insulin spikes.

Ginger: Traditionally used for its thermogenic properties, ginger improves calorie burning and aids digestion, reducing bloating and improving gut health.

Cinnamon: Known for stabilizing blood sugar, cinnamon helps reduce sugar cravings and supports appetite control, making it easier to stick to a balanced diet.

Apple cider vinegar: This ingredient aids digestion, regulates appetite, and may increase fat metabolism, contributing to a feeling of fullness.

Cayenne pepper: A natural thermogenic, cayenne pepper stimulates metabolism and promotes fat oxidation, helping the body burn more calories.

Milk thistle: Included in some formulations, milk thistle supports liver health, aids detoxification, and improves the body's ability to process fat.

These ingredients work together to speed up metabolism, reduce appetite, stabilize blood sugar, and boost energy, offering a comprehensive approach to weight management without resorting to aggressive stimulants.

## How does Nourix work?

**Nourix follows a multi-pronged strategy to support weight loss and general well-being:**

Metabolism stimulation: Ingredients such as green tea, cayenne pepper, and ginger stimulate thermogenesis and increase the body's calorie-burning capacity, even during periods of rest.

Appetite control: Components such as cinnamon, apple cider vinegar, and banaba leaf help regulate blood sugar and promote satiety, reducing cravings and overeating.

Energy boost: Ginseng, vitamins B6/B12, and resveratrol provide a steady energy lift that fights fatigue without the jitters associated with high doses of caffeine.

Liver support and detoxification: Ingredients such as milk thistle and dandelion root support liver health, helping the body eliminate toxins and process fat more efficiently.

## **[Click here to order from the official Nourix website](https://nourixfrance.fr)**

For best results, the recommended dose is two capsules per day, taken with a large glass of water, preferably during a meal to increase absorption and minimize digestive discomfort. At least 2 to 3 months of regular use, combined with a balanced diet and moderate exercise, is recommended for noticeable results.

## Benefits of Nourix

**Nourix offers several benefits that make it a compelling choice for those looking for a natural weight-management solution:**

Natural and safe: The formula is free of artificial additives, GMOs, gluten, and major allergens, which makes it well tolerated by most users. Side effects, such as mild indigestion, are rare and usually disappear quickly.

Holistic approach: By acting on metabolism, appetite, energy, and detoxification, Nourix supports lasting weight loss rather than temporary water loss.

Ease of use: The capsules are easy to fit into daily routines and require no complex rituals.

Positive user feedback: Many users report reduced cravings, increased energy, and gradual weight loss (5 to 7 kg over 1 to 2 months) when the capsules are combined with a healthy lifestyle. Reviews highlight improved digestion and mental clarity as additional benefits.

Money-back guarantee: The manufacturer offers a 30-day satisfaction guarantee, which allows users to try Nourix without risk.

## Is Nourix legitimate?

The picture of Nourix's legitimacy is mixed. On the one hand, its formulation is based on well-studied ingredients, and positive user reviews suggest it can be effective when used as part of a healthy lifestyle. The product's compliance with HACCP standards and FDA approval for quality (as some sources claim) further strengthen its credibility.

On the other hand, negative reviews and warnings about unreliable websites are red flags. The lack of transparency on some Nourix-affiliated websites and reports of unauthorized charges suggest that consumers should exercise caution. To ensure a safe purchase, buy only through official channels and consult a healthcare professional before starting any supplement, especially if you have pre-existing health conditions or are taking medication.

## Final thoughts

Nourix offers a promising, natural approach to weight management that uses a blend of scientifically proven ingredients to boost metabolism, control appetite, and improve energy. Its holistic formula and ease of use make it an attractive option for those seeking lasting weight loss without extreme measures. However, prospective buyers should beware of counterfeit products and unverified sellers and stick to official websites for their purchases.

## **[Click here to order from the official Nourix website](https://nourixfrance.fr)**

|

nsadeq/ReDis-Llama

|

nsadeq

| 2025-04-28T05:42:59Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"Inductive",

"Reasoning",

"text-generation",

"en",

"dataset:nsadeq/redis_generate_rule_alignment",

"dataset:nsadeq/redis_generate_rule_sft",

"dataset:nsadeq/redis_follow_rule_sft",

"arxiv:2504.10647",

"base_model:meta-llama/Meta-Llama-3-8B-Instruct",

"base_model:finetune:meta-llama/Meta-Llama-3-8B-Instruct",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-04-27T13:51:47Z |

---

library_name: transformers

tags:

- Inductive

- Reasoning

language:

- en

base_model:

- meta-llama/Meta-Llama-3-8B-Instruct

pipeline_tag: text-generation

datasets:

- nsadeq/redis_generate_rule_alignment

- nsadeq/redis_generate_rule_sft

- nsadeq/redis_follow_rule_sft

---

# Model Card for ReDis-Llama

ReDis-Llama is trained for improved inductive reasoning performance.

### Model Description

- **Developed by:** Nafis Sadeq

- **Language(s) (NLP):** English

- **Finetuned from model:** meta-llama/Meta-Llama-3-8B-Instruct

### Model Sources [optional]

- **Repository:** https://github.com/NafisSadeq/reasoning-distillation

- **Paper:** https://arxiv.org/abs/2504.10647

## How to Get Started with the Model

Follow the instructions here: https://github.com/NafisSadeq/reasoning-distillation

## Training Details

Training details can be found in the paper: https://arxiv.org/abs/2504.10647

## Environmental Impact

- **Hardware Type:** 2 × 48 GB Nvidia RTX A6000 GPUs

- **Hours used:** 72 hours

### Model Architecture and Objective

This model has the same architecture as meta-llama/Meta-Llama-3-8B-Instruct

### Compute Infrastructure

2 × 48 GB Nvidia RTX A6000 GPUs

## Citation

If you use this model, please cite the following paper.

@misc{sadeq2025improvingincontextlearningreasoning,

title={Improving In-Context Learning with Reasoning Distillation},

author={Nafis Sadeq and Xin Xu and Zhouhang Xie and Julian McAuley and Byungkyu Kang and Prarit Lamba and Xiang Gao},

year={2025},

eprint={2504.10647},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2504.10647},

}

|

Triangle104/GLM4-9B-Neon-v2-Q6_K-GGUF

|

Triangle104

| 2025-04-28T05:40:37Z | 0 | 0 |

transformers

|

[

"transformers",

"gguf",

"llama-cpp",

"gguf-my-repo",

"en",

"dataset:allura-org/Celeste-Filtered",

"dataset:allura-org/neon-41k",

"dataset:EVA-UNIT-01/Lilith-v0.2",

"base_model:allura-org/GLM4-9B-Neon-v2",

"base_model:quantized:allura-org/GLM4-9B-Neon-v2",

"license:mit",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-04-28T05:32:25Z |

---

base_model: allura-org/GLM4-9B-Neon-v2

datasets:

- allura-org/Celeste-Filtered

- allura-org/neon-41k

- EVA-UNIT-01/Lilith-v0.2

language:

- en

library_name: transformers

license: mit

tags:

- llama-cpp

- gguf-my-repo

---

# Triangle104/GLM4-9B-Neon-v2-Q6_K-GGUF

This model was converted to GGUF format from [`allura-org/GLM4-9B-Neon-v2`](https://huggingface.co/allura-org/GLM4-9B-Neon-v2) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/allura-org/GLM4-9B-Neon-v2) for more details on the model.

---

RP finetune of GLM-4-9B-0414. Feels nice, with lots of personality, if a bit quirky sometimes. Nice prose, not too Claude-ish or Gemini-ish. It doesn't seem to like overly long system prompts or character cards, though. It seems to like JSON-formatted system prompts.

Model was trained by Auri.

## Training notes

Model was trained on a dataset consisting of 77M tokens of synthetic RP and short story gen data for one epoch. Training took around 11 hours on a 2xRTX 3090 workstation, generously provided by OwenArli.

Went with some sane defaults for the training config: QLoRA plus CCE for a nice chunk of memory usage optimization; 16k fit on 48GB nicely with some room to spare. I seem to have a problem with Eval/Loss being broken, not sure why; otherwise it trained smoothly.

Huge thanks to ArliAI for providing compute and collaborating on this run!

## Format

Model responds to GLM4 instruct formatting, exactly like its base model. Backends struggle to add the BOS token automatically, so you'll need to do it yourself. The Jinja template should work for chat completions.

[gMASK]<sop><|system|>

{system_prompt}<|user|>

{prompt}<|assistant|>
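Since the card notes that the BOS prefix may need to be added manually, a small helper that fills in the template can make this less error-prone. The sketch below assumes the line breaks in the template are single newlines. The function name `build_glm4_prompt` is illustrative and not part of any library.

```python
def build_glm4_prompt(system_prompt: str, prompt: str) -> str:
    """Fill the template shown above, including the leading [gMASK]<sop> prefix."""
    return (
        "[gMASK]<sop><|system|>\n"
        f"{system_prompt}<|user|>\n"
        f"{prompt}<|assistant|>"
    )


print(build_glm4_prompt("You are a helpful narrator.", "Describe the tavern."))
```

The resulting string is meant for raw text-completion endpoints. For chat-completion endpoints, the backend's Jinja template would normally apply the format instead.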

## Recommended Samplers

Nothing special, just classics.

Temperature - 1

Min-P - 0.1

Repetition Penalty - 1.03
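For readers unfamiliar with Min-P, the idea is to discard every token whose probability is below a fraction `min_p` of the most likely token's probability, and then renormalize what remains. The sketch below shows that filtering step on a toy distribution. It is a simplified illustration rather than any backend's actual sampler code, and backends may differ in where they apply it relative to temperature.

```python
def min_p_filter(probs: list[float], min_p: float) -> list[float]:
    """Zero out tokens whose probability is below min_p * max(probs), then renormalize."""
    threshold = min_p * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    total = sum(kept)
    return [p / total for p in kept]


# Toy next-token distribution; with min_p = 0.1 the cutoff is 0.05.
print(min_p_filter([0.5, 0.3, 0.15, 0.04, 0.01], min_p=0.1))
```

With the recommended temperature of 1, the distribution is left unscaled, so Min-P is the setting that does most of the pruning here.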

Example master import for SillyTavern (using Shingane-v1 system prompt by Steelskull)

## Running on KoboldCPP and other backends

To run GGUFs correctly, you need the most recent version of KoboldCPP, and you must either pass `--overridekv glm4.rope.dimension_count=int:64` to the CLI command or put `glm4.rope.dimension_count=int:64` into the overridekv box in the GUI (under the Tokens tab at the very bottom).

Thanks to DaringDuck and tofumagnate for the information on how to apply this fix.

To run this model on vLLM, you'll need to build it from source from the git repo; full GLM4 support hasn't reached a release yet.

ExLLaMAv2 and v3 based backends, such as TabbyAPI, should support the model out of the box.

The latest versions of llama.cpp server should also allow running GGUFs out of the box.

---

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Triangle104/GLM4-9B-Neon-v2-Q6_K-GGUF --hf-file glm4-9b-neon-v2-q6_k.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo Triangle104/GLM4-9B-Neon-v2-Q6_K-GGUF --hf-file glm4-9b-neon-v2-q6_k.gguf -c 2048

```
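Once the server is running, it can be queried over HTTP. The sketch below builds a request for the server's OpenAI-compatible chat endpoint using only the Python standard library. It assumes the server listens on `http://localhost:8080`. The payload values are illustrative, and the helper names are hypothetical.

```python
import json
import urllib.request


def build_chat_request(prompt: str, base_url: str = "http://localhost:8080") -> urllib.request.Request:
    """Build a POST request for llama-server's OpenAI-compatible chat endpoint."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 1.0,   # illustrative sampling settings
        "max_tokens": 128,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request; this only works while a llama-server instance is running."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Hello")` with the server from the command above running should return the model's reply as a string. Adjust `base_url` if the server was started with a different host or port.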

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag along with any other hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo Triangle104/GLM4-9B-Neon-v2-Q6_K-GGUF --hf-file glm4-9b-neon-v2-q6_k.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo Triangle104/GLM4-9B-Neon-v2-Q6_K-GGUF --hf-file glm4-9b-neon-v2-q6_k.gguf -c 2048

```

|

arkitex/wav2vec2-finetune-authentic-and-synth

|

arkitex

| 2025-04-28T05:39:26Z | 5 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice_17_0",

"base_model:arkitex/wav2vec2-finetune-authentic-cv-only-colab",

"base_model:finetune:arkitex/wav2vec2-finetune-authentic-cv-only-colab",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2025-04-24T21:46:57Z |

---

library_name: transformers

license: apache-2.0

base_model: arkitex/wav2vec2-finetune-authentic-cv-only-colab

tags:

- generated_from_trainer

datasets:

- common_voice_17_0

metrics:

- wer

model-index:

- name: wav2vec2-finetune-authentic-and-synth

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: common_voice_17_0

type: common_voice_17_0

config: en

split: None

args: en

metrics:

- name: Wer

type: wer

value: 0.37995702285602656

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-finetune-authentic-and-synth

This model is a fine-tuned version of [arkitex/wav2vec2-finetune-authentic-cv-only-colab](https://huggingface.co/arkitex/wav2vec2-finetune-authentic-cv-only-colab) on the common_voice_17_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8896

- Wer: 0.3800

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 4

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH`) with betas=(0.9,0.999) and epsilon=1e-08; no additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:------:|:----:|:---------------:|:------:|

| 1.0356 | 0.3761 | 500 | 0.9584 | 0.3776 |

| 1.0564 | 0.7522 | 1000 | 0.9473 | 0.3753 |

| 0.9867 | 1.1279 | 1500 | 0.9273 | 0.3778 |

| 0.9706 | 1.5039 | 2000 | 0.9131 | 0.3792 |

| 0.9542 | 1.8800 | 2500 | 0.9029 | 0.3805 |

| 0.9127 | 2.2557 | 3000 | 0.8947 | 0.3805 |

| 0.9099 | 2.6318 | 3500 | 0.8928 | 0.3813 |

| 0.9128 | 3.0075 | 4000 | 0.8898 | 0.3802 |

| 0.9138 | 3.3836 | 4500 | 0.8907 | 0.3802 |

| 0.8905 | 3.7597 | 5000 | 0.8896 | 0.3800 |

### Framework versions

- Transformers 4.51.3

- Pytorch 2.6.0

- Datasets 3.5.0

- Tokenizers 0.21.0

|

Triangle104/Qwen2.5-0.5B-Q4_K_S-GGUF

|

Triangle104

| 2025-04-28T05:36:47Z | 4 | 0 | null |

[

"gguf",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"zho",

"eng",

"fra",

"spa",

"por",

"deu",

"ita",

"rus",

"jpn",

"kor",

"vie",

"tha",

"ara",

"base_model:Qwen/Qwen2.5-0.5B",

"base_model:quantized:Qwen/Qwen2.5-0.5B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] |

text-generation

| 2024-09-22T17:53:22Z |

---

base_model: Qwen/Qwen2.5-0.5B

language:

- zho

- eng

- fra

- spa

- por

- deu

- ita

- rus

- jpn

- kor

- vie

- tha

- ara

license: apache-2.0

license_link: https://huggingface.co/Qwen/Qwen2.5-0.5B/blob/main/LICENSE

pipeline_tag: text-generation

tags:

- llama-cpp

- gguf-my-repo

---

# Triangle104/Qwen2.5-0.5B-Q4_K_S-GGUF

This model was converted to GGUF format from [`Qwen/Qwen2.5-0.5B`](https://huggingface.co/Qwen/Qwen2.5-0.5B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/Qwen/Qwen2.5-0.5B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Triangle104/Qwen2.5-0.5B-Q4_K_S-GGUF --hf-file qwen2.5-0.5b-q4_k_s.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo Triangle104/Qwen2.5-0.5B-Q4_K_S-GGUF --hf-file qwen2.5-0.5b-q4_k_s.gguf -c 2048

```

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag along with any other hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo Triangle104/Qwen2.5-0.5B-Q4_K_S-GGUF --hf-file qwen2.5-0.5b-q4_k_s.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo Triangle104/Qwen2.5-0.5B-Q4_K_S-GGUF --hf-file qwen2.5-0.5b-q4_k_s.gguf -c 2048

```

|

Triangle104/Qwen2.5-1.5B-Q6_K-GGUF

|

Triangle104

| 2025-04-28T05:35:02Z | 4 | 0 | null |

[

"gguf",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"zho",

"eng",

"fra",

"spa",

"por",

"deu",

"ita",

"rus",

"jpn",

"kor",

"vie",

"tha",

"ara",

"base_model:Qwen/Qwen2.5-1.5B",

"base_model:quantized:Qwen/Qwen2.5-1.5B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] |

text-generation

| 2024-09-22T17:34:11Z |

---

base_model: Qwen/Qwen2.5-1.5B

language:

- zho

- eng

- fra

- spa

- por

- deu

- ita

- rus

- jpn

- kor

- vie

- tha

- ara

license: apache-2.0

license_link: https://huggingface.co/Qwen/Qwen2.5-1.5B/blob/main/LICENSE

pipeline_tag: text-generation

tags:

- llama-cpp

- gguf-my-repo

---

# Triangle104/Qwen2.5-1.5B-Q6_K-GGUF

This model was converted to GGUF format from [`Qwen/Qwen2.5-1.5B`](https://huggingface.co/Qwen/Qwen2.5-1.5B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/Qwen/Qwen2.5-1.5B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Triangle104/Qwen2.5-1.5B-Q6_K-GGUF --hf-file qwen2.5-1.5b-q6_k.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo Triangle104/Qwen2.5-1.5B-Q6_K-GGUF --hf-file qwen2.5-1.5b-q6_k.gguf -c 2048

```

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag along with any other hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo Triangle104/Qwen2.5-1.5B-Q6_K-GGUF --hf-file qwen2.5-1.5b-q6_k.gguf -p "The meaning to life and the universe is"

```

or

```bash

./llama-server --hf-repo Triangle104/Qwen2.5-1.5B-Q6_K-GGUF --hf-file qwen2.5-1.5b-q6_k.gguf -c 2048

```

|

Alcoft/Qwen2.5-7B-Instruct-GGUF

|

Alcoft

| 2025-04-28T05:34:48Z | 22 | 0 | null |

[

"gguf",

"text-generation",

"zho",

"eng",

"fra",

"spa",

"por",

"deu",

"ita",

"rus",

"jpn",

"kor",

"vie",

"tha",

"ara",

"base_model:Qwen/Qwen2.5-7B-Instruct",

"base_model:quantized:Qwen/Qwen2.5-7B-Instruct",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] |

text-generation

| 2024-12-01T01:08:44Z |

---

license: apache-2.0

language:

- zho

- eng

- fra

- spa

- por

- deu

- ita

- rus

- jpn

- kor

- vie

- tha

- ara

base_model:

- Qwen/Qwen2.5-7B-Instruct

pipeline_tag: text-generation

---

|

Triangle104/Qwen2.5-3B-Q4_K_M-GGUF

|

Triangle104

| 2025-04-28T05:34:38Z | 2 | 0 | null |

[

"gguf",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"zho",

"eng",

"fra",

"spa",

"por",

"deu",

"ita",

"rus",

"jpn",

"kor",

"vie",

"tha",

"ara",

"base_model:Qwen/Qwen2.5-3B",

"base_model:quantized:Qwen/Qwen2.5-3B",

"license:other",

"endpoints_compatible",

"region:us",

"conversational"

] |

text-generation

| 2024-09-22T16:49:28Z |

---

base_model: Qwen/Qwen2.5-3B

language:

- zho

- eng

- fra

- spa

- por

- deu

- ita

- rus

- jpn

- kor

- vie

- tha

- ara

license: other

license_name: qwen-research

license_link: https://huggingface.co/Qwen/Qwen2.5-3B/blob/main/LICENSE

pipeline_tag: text-generation

tags:

- llama-cpp

- gguf-my-repo

---

# Triangle104/Qwen2.5-3B-Q4_K_M-GGUF

This model was converted to GGUF format from [`Qwen/Qwen2.5-3B`](https://huggingface.co/Qwen/Qwen2.5-3B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/Qwen/Qwen2.5-3B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on macOS and Linux):

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Triangle104/Qwen2.5-3B-Q4_K_M-GGUF --hf-file qwen2.5-3b-q4_k_m.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo Triangle104/Qwen2.5-3B-Q4_K_M-GGUF --hf-file qwen2.5-3b-q4_k_m.gguf -c 2048

```
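Once the server is running, it can be queried over HTTP. The sketch below assumes the server listens on its default address (`localhost:8080`), that `curl` is installed, and that the `/completion` endpoint accepts `prompt` and `n_predict` fields. `build_payload` and `query_server` are hypothetical helper names used only for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for talking to a locally running llama-server.
# Assumption: the server was started as shown above and listens on port 8080.

build_payload() {
  # $1 = prompt text; no JSON escaping is done, so it must not contain
  #      double quotes or backslashes
  # $2 = maximum number of tokens to generate
  printf '{"prompt": "%s", "n_predict": %d}' "$1" "$2"
}

query_server() {
  # POST the payload to the /completion endpoint; defaults to 64 tokens.
  curl -s "http://localhost:8080/completion" \
    -H "Content-Type: application/json" \
    -d "$(build_payload "$1" "${2:-64}")"
}

# Usage (requires a running server):
# query_server "The meaning to life and the universe is" 32
```

The response is a JSON object. For prompts that contain quotes or other special characters, build the payload with a JSON-aware tool instead of `printf`.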

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```bash

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```bash

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```bash

./llama-cli --hf-repo Triangle104/Qwen2.5-3B-Q4_K_M-GGUF --hf-file qwen2.5-3b-q4_k_m.gguf -p "The meaning to life and the universe is"

```

or

```bash

./llama-server --hf-repo Triangle104/Qwen2.5-3B-Q4_K_M-GGUF --hf-file qwen2.5-3b-q4_k_m.gguf -c 2048

```

|

Triangle104/Qwen2.5-3B-Q5_K_M-GGUF

|

Triangle104

| 2025-04-28T05:34:19Z | 2 | 0 | null |

[

"gguf",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"zho",

"eng",

"fra",

"spa",

"por",

"deu",

"ita",

"rus",

"jpn",

"kor",

"vie",

"tha",

"ara",

"base_model:Qwen/Qwen2.5-3B",

"base_model:quantized:Qwen/Qwen2.5-3B",

"license:other",

"endpoints_compatible",

"region:us",

"conversational"

] |

text-generation

| 2024-09-22T16:56:57Z |

---

base_model: Qwen/Qwen2.5-3B

language:

- zho

- eng

- fra

- spa

- por

- deu

- ita

- rus

- jpn

- kor

- vie

- tha

- ara

license: other

license_name: qwen-research

license_link: https://huggingface.co/Qwen/Qwen2.5-3B/blob/main/LICENSE

pipeline_tag: text-generation

tags:

- llama-cpp

- gguf-my-repo

---

# Triangle104/Qwen2.5-3B-Q5_K_M-GGUF

This model was converted to GGUF format from [`Qwen/Qwen2.5-3B`](https://huggingface.co/Qwen/Qwen2.5-3B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/Qwen/Qwen2.5-3B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on macOS and Linux):

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Triangle104/Qwen2.5-3B-Q5_K_M-GGUF --hf-file qwen2.5-3b-q5_k_m.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo Triangle104/Qwen2.5-3B-Q5_K_M-GGUF --hf-file qwen2.5-3b-q5_k_m.gguf -c 2048

```

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```bash

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```bash

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```bash

./llama-cli --hf-repo Triangle104/Qwen2.5-3B-Q5_K_M-GGUF --hf-file qwen2.5-3b-q5_k_m.gguf -p "The meaning to life and the universe is"

```

or

```bash

./llama-server --hf-repo Triangle104/Qwen2.5-3B-Q5_K_M-GGUF --hf-file qwen2.5-3b-q5_k_m.gguf -c 2048

```

|

Triangle104/Qwen2.5-7B-Q5_K_S-GGUF

|

Triangle104

| 2025-04-28T05:32:53Z | 3 | 0 | null |

[

"gguf",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"zho",

"eng",

"fra",

"spa",

"por",

"deu",

"ita",

"rus",

"jpn",

"kor",

"vie",

"tha",

"ara",

"base_model:Qwen/Qwen2.5-7B",

"base_model:quantized:Qwen/Qwen2.5-7B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] |

text-generation

| 2024-09-19T16:05:08Z |

---

base_model: Qwen/Qwen2.5-7B

language:

- zho

- eng

- fra

- spa

- por

- deu

- ita

- rus

- jpn

- kor

- vie

- tha

- ara

license: apache-2.0

license_link: https://huggingface.co/Qwen/Qwen2.5-7B/blob/main/LICENSE

pipeline_tag: text-generation

tags:

- llama-cpp

- gguf-my-repo

---

# Triangle104/Qwen2.5-7B-Q5_K_S-GGUF

This model was converted to GGUF format from [`Qwen/Qwen2.5-7B`](https://huggingface.co/Qwen/Qwen2.5-7B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/Qwen/Qwen2.5-7B) for more details on the model.

## Use with llama.cpp