modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-08-07 15:50:20

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 491

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-08-07 15:48:55

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

ubergarm/GLM-4.5-Air-GGUF

|

ubergarm

| 2025-08-07T12:25:38Z | 5,259 | 15 | null |

[

"gguf",

"imatrix",

"conversational",

"ik_llama.cpp",

"text-generation",

"base_model:zai-org/GLM-4.5-Air",

"base_model:quantized:zai-org/GLM-4.5-Air",

"license:mit",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-02T06:05:33Z |

---

quantized_by: ubergarm

pipeline_tag: text-generation

base_model: zai-org/GLM-4.5-Air

license: mit

base_model_relation: quantized

tags:

- imatrix

- conversational

- ik_llama.cpp

---

## `ik_llama.cpp` imatrix Quantizations of zai-org/GLM-4.5-Air

This quant collection **REQUIRES** [ik_llama.cpp](https://github.com/ikawrakow/ik_llama.cpp/) fork to support the ik's latest SOTA quants and optimizations! Do **not** download these big files and expect them to run on mainline vanilla llama.cpp, ollama, LM Studio, KoboldCpp, etc!

*NOTE* `ik_llama.cpp` can also run your existing GGUFs from bartowski, unsloth, mradermacher, etc if you want to try it out before downloading my quants.

Some of ik's new quants are supported with [Nexesenex/croco.cpp](https://github.com/Nexesenex/croco.cpp) fork of KoboldCPP with Windows builds for CUDA 12.9. Also check for [Windows builds by Thireus here.](https://github.com/Thireus/ik_llama.cpp/releases) which have been CUDA 12.8.

These quants provide best in class perplexity for the given memory footprint.

## Big Thanks

Shout out to Wendell and the **Level1Techs** crew, the community [Forums](https://forum.level1techs.com/t/deepseek-deep-dive-r1-at-home/225826), [YouTube Channel](https://www.youtube.com/@Level1Techs)! **BIG thanks** for providing **BIG hardware** expertise and access to run these experiments and make these great quants available to the community!!!

Also thanks to all the folks in the quanting and inferencing community on [BeaverAI Club Discord](https://huggingface.co/BeaverAI) and on [r/LocalLLaMA](https://www.reddit.com/r/LocalLLaMA/) for tips and tricks helping each other run, test, and benchmark all the fun new models!

## Quant Collection

Perplexity computed against *wiki.test.raw*.

These first two are just test quants for baseline perplexity comparison:

* `BF16` 205.811 GiB (16.004 BPW)

- Final estimate: PPL = 4.5704 +/- 0.02796

* `Q8_0` 109.381 GiB (8.505 BPW)

- Final estimate: PPL = 4.5798 +/- 0.02804

## IQ5_K 77.704 GiB (6.042 BPW)

Final estimate: PPL = 4.5867 +/- 0.02806

<details>

<summary>👈 Secret Recipe</summary>

```bash

#!/usr/bin/env bash

custom="

# 47 Repeating Layers [0-46]

# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.

# Attention

blk\..*\.attn_q.*=q8_0

blk\..*\.attn_k.*=q8_0

blk\..*\.attn_v.*=q8_0

blk\..*\.attn_output.*=q8_0

# First 1 Dense Layers [0]

blk\..*\.ffn_down\.weight=q8_0

blk\..*\.ffn_(gate|up)\.weight=q8_0

# Shared Expert Layers [1-46]

blk\..*\.ffn_down_shexp\.weight=q8_0

blk\..*\.ffn_(gate|up)_shexp\.weight=q8_0

# Routed Experts Layers [1-46]

blk\.(1)\.ffn_down_exps\.weight=q8_0

blk\.(1)\.ffn_(gate|up)_exps\.weight=q8_0

blk\..*\.ffn_down_exps\.weight=q6_0

blk\..*\.ffn_(gate|up)_exps\.weight=iq5_k

# NextN MTP Layer [46]

blk\..*\.nextn\.embed_tokens\.weight=iq5_ks

blk\..*\.nextn\.shared_head_head\.weight=iq5_ks

blk\..*\.nextn\.eh_proj\.weight=q8_0

# Non-Repeating Layers

token_embd\.weight=iq6_k

output\.weight=iq6_k

"

custom=$(

echo "$custom" | grep -v '^#' | \

sed -Ez 's:\n+:,:g;s:,$::;s:^,::'

)

numactl -N 0 -m 0 \

./build/bin/llama-quantize \

--custom-q "$custom" \

--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ5_K.gguf \

IQ5_K \

192

```

</details>

## IQ5_KS 72.855 GiB (5.665 BPW)

Final estimate: PPL = 4.5948 +/- 0.02815

<details>

<summary>👈 Secret Recipe</summary>

```bash

#!/usr/bin/env bash

custom="

# 47 Repeating Layers [0-46]

# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.

# Attention

blk\..*\.attn_q.*=iq5_ks

blk\..*\.attn_k.*=q8_0

blk\..*\.attn_v.*=q8_0

blk\..*\.attn_output.*=iq5_ks

# First 1 Dense Layers [0]

blk\..*\.ffn_down\.weight=q6_0

blk\..*\.ffn_(gate|up)\.weight=iq5_ks

# Shared Expert Layers [1-46]

blk\..*\.ffn_down_shexp\.weight=q6_0

blk\..*\.ffn_(gate|up)_shexp\.weight=iq5_ks

# Routed Experts Layers [1-46]

blk\..*\.ffn_down_exps\.weight=q6_0

blk\..*\.ffn_(gate|up)_exps\.weight=iq5_ks

# NextN MTP Layer [46]

blk\..*\.nextn\.embed_tokens\.weight=iq5_ks

blk\..*\.nextn\.shared_head_head\.weight=iq5_ks

blk\..*\.nextn\.eh_proj\.weight=q8_0

# Non-Repeating Layers

token_embd\.weight=iq4_k

output\.weight=iq6_k

"

custom=$(

echo "$custom" | grep -v '^#' | \

sed -Ez 's:\n+:,:g;s:,$::;s:^,::'

)

numactl -N 0 -m 0 \

./build/bin/llama-quantize \

--custom-q "$custom" \

--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ5_KS.gguf \

IQ5_KS \

192

```

</details>

## IQ4_K 62.910 GiB (4.892 BPW)

Final estimate: PPL = 4.6273 +/- 0.02839

<details>

<summary>👈 Secret Recipe</summary>

```bash

#!/usr/bin/env bash

custom="

# 47 Repeating Layers [0-46]

# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.

# Attention

blk\..*\.attn_q.*=iq5_ks

blk\..*\.attn_k.*=q8_0

blk\..*\.attn_v.*=q8_0

blk\..*\.attn_output.*=iq5_ks

# First 1 Dense Layers [0]

blk\..*\.ffn_down\.weight=q6_0

blk\..*\.ffn_(gate|up)\.weight=iq5_ks

# Shared Expert Layers [1-46]

blk\..*\.ffn_down_shexp\.weight=q6_0

blk\..*\.ffn_(gate|up)_shexp\.weight=iq5_ks

# Routed Experts Layers [1-46]

blk\..*\.ffn_down_exps\.weight=q5_0

blk\..*\.ffn_(gate|up)_exps\.weight=iq4_k

# NextN MTP Layer [46]

blk\..*\.nextn\.embed_tokens\.weight=iq5_ks

blk\..*\.nextn\.shared_head_head\.weight=iq5_ks

blk\..*\.nextn\.eh_proj\.weight=q8_0

# Non-Repeating Layers

token_embd\.weight=iq4_k

output\.weight=iq6_k

"

custom=$(

echo "$custom" | grep -v '^#' | \

sed -Ez 's:\n+:,:g;s:,$::;s:^,::'

)

numactl -N 1 -m 1 \

./build/bin/llama-quantize \

--custom-q "$custom" \

--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ4_K.gguf \

IQ4_K \

192

```

</details>

## IQ4_KSS 54.801 GiB (4.261 BPW)

Final estimate: PPL = 4.7056 +/- 0.02909

<details>

<summary>👈 Secret Recipe</summary>

```bash

#!/usr/bin/env bash

custom="

# 47 Repeating Layers [0-46]

# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.

# Attention

blk\.(0|1)\.attn_q.*=q8_0

blk\.(0|1)\.attn_k.*=q8_0

blk\.(0|1)\.attn_v.*=q8_0

blk\.(0|1)\.attn_output.*=q8_0

blk\..*\.attn_q.*=iq5_ks

blk\..*\.attn_k.*=iq5_ks

blk\..*\.attn_v.*=iq5_ks

blk\..*\.attn_output.*=iq5_ks

# First 1 Dense Layers [0]

blk\..*\.ffn_down\.weight=q6_0

blk\..*\.ffn_(gate|up)\.weight=iq5_ks

# Shared Expert Layers [1-46]

blk\..*\.ffn_down_shexp\.weight=q6_0

blk\..*\.ffn_(gate|up)_shexp\.weight=iq5_ks

# Routed Experts Layers [1-46]

#blk\.(1|46)\.ffn_down_exps\.weight=q8_0

#blk\.(1|46)\.ffn_(gate|up)_exps\.weight=q8_0

blk\..*\.ffn_down_exps\.weight=iq4_nl

blk\..*\.ffn_(gate|up)_exps\.weight=iq4_kss

# NextN MTP Layer [46]

blk\..*\.nextn\.embed_tokens\.weight=iq5_ks

blk\..*\.nextn\.shared_head_head\.weight=iq5_ks

blk\..*\.nextn\.eh_proj\.weight=q8_0

# Non-Repeating Layers

token_embd\.weight=iq4_k

output\.weight=iq6_k

"

custom=$(

echo "$custom" | grep -v '^#' | \

sed -Ez 's:\n+:,:g;s:,$::;s:^,::'

)

numactl -N 0 -m 0 \

./build/bin/llama-quantize \

--custom-q "$custom" \

--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ4_KSS.gguf \

IQ4_KSS \

192

```

</details>

## IQ2_KL 43.870 GiB (3.411 BPW)

Final estimate: PPL = 5.0697 +/- 0.03166

<details>

<summary>👈 Secret Recipe</summary>

```bash

#!/usr/bin/env bash

custom="

# 47 Repeating Layers [0-46]

# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.

# Attention

blk\..*\.attn_q.*=iq4_ks

blk\..*\.attn_k.*=iq5_ks

blk\..*\.attn_v.*=iq5_ks

blk\..*\.attn_output.*=iq4_ks

# First 1 Dense Layers [0]

blk\..*\.ffn_down\.weight=iq4_nl

blk\..*\.ffn_(gate|up)\.weight=iq4_kss

# Shared Expert Layers [1-46]

blk\..*\.ffn_down_shexp\.weight=iq4_nl

blk\..*\.ffn_(gate|up)_shexp\.weight=iq4_kss

# Routed Experts Layers [1-46]

blk\.(1)\.ffn_down_exps\.weight=iq4_nl

blk\.(1)\.ffn_(gate|up)_exps\.weight=iq4_kss

blk\..*\.ffn_down_exps\.weight=iq4_nl

blk\..*\.ffn_(gate|up)_exps\.weight=iq2_kl

# NextN MTP Layer [46]

blk\..*\.nextn\.embed_tokens\.weight=iq4_ks

blk\..*\.nextn\.shared_head_head\.weight=iq4_ks

blk\..*\.nextn\.eh_proj\.weight=q6_0

# Non-Repeating Layers

token_embd\.weight=iq4_k

output\.weight=iq6_k

"

custom=$(

echo "$custom" | grep -v '^#' | \

sed -Ez 's:\n+:,:g;s:,$::;s:^,::'

)

numactl -N 0 -m 0 \

./build/bin/llama-quantize \

--custom-q "$custom" \

--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ2_KL.gguf \

IQ2_KL \

192

```

</details>

## IQ1_KT 36.039 GiB (2.802 BPW)

Final estimate: PPL = 5.8214 +/- 0.03767

<details>

<summary>👈 Secret Recipe</summary>

```bash

#!/usr/bin/env bash

custom="

# 47 Repeating Layers [0-46]

# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.

# Attention

blk\..*\.attn_q.*=iq4_kt

blk\..*\.attn_k.*=iq4_kt

blk\..*\.attn_v.*=iq4_kt

blk\..*\.attn_output.*=iq4_kt

# First 1 Dense Layers [0]

blk\..*\.ffn_down\.weight=iq4_nl

blk\..*\.ffn_(gate|up)\.weight=iq4_kt

# Shared Expert Layers [1-46]

blk\..*\.ffn_down_shexp\.weight=iq4_nl

blk\..*\.ffn_(gate|up)_shexp\.weight=iq4_kt

# Routed Experts Layers [1-46]

blk\..*\.ffn_down_exps\.weight=iq4_nl

blk\..*\.ffn_(gate|up)_exps\.weight=iq1_kt

# NextN MTP Layer [46]

blk\..*\.nextn\.embed_tokens\.weight=iq4_kt

blk\..*\.nextn\.shared_head_head\.weight=iq4_kt

blk\..*\.nextn\.eh_proj\.weight=q8_0

# Non-Repeating Layers

token_embd\.weight=iq4_k

output\.weight=iq6_k

"

custom=$(

echo "$custom" | grep -v '^#' | \

sed -Ez 's:\n+:,:g;s:,$::;s:^,::'

)

numactl -N 1 -m 1 \

./build/bin/llama-quantize \

--custom-q "$custom" \

--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \

/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ1_KT.gguf \

IQ1_KT \

192

```

</details>

## Quick Start

If you want to disable thinking, add `/nothink` (correct, no underscore) at the *end* of your prompt.

```bash

# Clone and checkout

$ git clone https://github.com/ikawrakow/ik_llama.cpp

$ cd ik_llama.cpp

# Build for hybrid CPU+CUDA

$ cmake -B build -DCMAKE_BUILD_TYPE=Release -DGGML_CUDA=ON -DGGML_BLAS=OFF -DGGML_SCHED_MAX_COPIES=1

$ cmake --build build --config Release -j $(nproc)

# Run API server

$ ./build/bin/llama-server \

--model GLM-4.5-Air-IQ4_KSS-00001-of-00002.gguf \

--alias ubergarm/GLM-4.5-Air-IQ4_KSS \

--chat-template chatglm4 \

--ctx-size 32768 \

-fa -fmoe \

-ctk q8_0 -ctv q8_0 \

-ub 4096 -b 4096 \

-ngl 99 \

-ot exps=CPU \

--parallel 1 \

--threads 8 \

--host 127.0.0.1 \

--port 8080 \

--no-mmap

```

## References

* [ik_llama.cpp](https://github.com/ikawrakow/ik_llama.cpp)

* [Getting Started Guide (already out of date lol)](https://github.com/ikawrakow/ik_llama.cpp/discussions/258)

* [ubergarm-imatrix-calibration-corpus-v02.txt](https://gist.github.com/ubergarm/edfeb3ff9c6ec8b49e88cdf627b0711a?permalink_comment_id=5682584#gistcomment-5682584)

* [Mainline llama.cpp Draft PR14939](https://github.com/ggml-org/llama.cpp/pull/14939)

* [ik_llama.cpp GLM-4.5 MoE PR668](https://github.com/ikawrakow/ik_llama.cpp/pull/668)

|

lastmass/MedGemma-GRPO

|

lastmass

| 2025-08-07T09:40:01Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gemma3",

"image-text-to-text",

"text-generation-inference",

"unsloth",

"vllm",

"conversational",

"en",

"base_model:unsloth/medgemma-4b-it",

"base_model:finetune:unsloth/medgemma-4b-it",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-text-to-text

| 2025-08-07T08:08:13Z |

---

base_model: unsloth/medgemma-4b-it

tags:

- text-generation-inference

- transformers

- unsloth

- gemma3

- vllm

license: apache-2.0

language:

- en

---

# MedGemma-GRPO

## Model Description

This model is a fine-tuned version of the MEDGemma model, adapted for tasks involving clinical case reasoning.

The goal of this project was to explore the effects of combining Supervised Fine-Tuning (SFT) with Group Relative Policy Optimization (GRPO) to guide the model's ability to follow a logical diagnostic path.

The model was trained on a synthetic dataset of medical case studies,

which, due to the nature of the large models used for generation, has a notable bias towards complex cardiovascular, thrombotic, and autoimmune diseases (e.g., Lupus, Pericarditis).

## Fine-tuning Procedure

The model was first adapted to the medical domain using Supervised Fine-Tuning (SFT) on a synthetic dataset. Subsequently,

Group Relative Policy Optimization (GRPO) was applied, utilizing a custom rule-based reward function.

This reward function was designed to guide the model towards accurate diagnoses and logically structured reasoning, while also penalizing overly verbose outputs.

# Using with Unsloth

```

from unsloth import FastModel

import torch

max_seq_length=1024

model, tokenizer = FastModel.from_pretrained(

model_name = "lastmass/MedGemma-GRPO",

max_seq_length = max_seq_length,

load_in_4bit = False,

load_in_8bit = False,

full_finetuning = False,

)

reasoning_start = "<start_working_out>"

reasoning_end = "<end_working_out>"

solution_start = "<SOLUTION>"

solution_end = "</SOLUTION>"

system_prompt = \

f"""You are given a problem.

Think about the problem and provide your working out.

Place it between {reasoning_start} and {reasoning_end}.

Then, provide your solution between {solution_start}{solution_end}"""

user_input=”“

messages = [{

"role": "user",

"content": [{"type" : "text", "text" :system_prompt + user_input + reasoning_start,}]

}]

text = tokenizer.apply_chat_template(

messages,

add_generation_prompt = True,

)

from transformers import TextStreamer

_ = model.generate(

**tokenizer([text], return_tensors = "pt").to("cuda"),

max_new_tokens =max_seq_length,

# Recommended Gemma-3 settings!

temperature = 0.0,

streamer = TextStreamer(tokenizer, skip_prompt = True),

)

```

# Intended Use & Limitations

This model is intended for research purposes only, as an exploration into enhancing the reasoning capabilities of smaller language models (4B) in a specialized domain.

# ⚠️ Disclaimer:

This model is NOT a medical device and should NEVER be used for actual medical diagnosis, treatment, or advice.

The outputs are generated by an AI and may contain inaccuracies or hallucinations, and they reflect the significant biases present in the training data.

Always consult a qualified healthcare professional for any medical concerns.

# Example Prompts and Outputs

Here are a couple of examples demonstrating the model's reasoning capabilities on complex cases with diagnostic traps.

## Question

```

A 58-year-old overweight male with a history of hypertension presents to the emergency department with a 12-hour history of excruciating pain, swelling, and redness in his right great toe. He states the pain started abruptly overnight and is so severe that even the bedsheet touching his toe is unbearable. He admits to drinking several beers and eating a large steak dinner the night before. His medications include hydrochlorothiazide for his blood pressure.

On examination, he has a low-grade fever of 38.1°C (100.6°F). The first metatarsophalangeal (MTP) joint of his right foot is markedly swollen, erythematous, warm, and exquisitely tender to palpation.

Initial laboratory results show:

CBC: White blood cell count is elevated at 13.5 x 10^9/L.

Serum Uric Acid: Markedly elevated.

CRP: Elevated.

What is the most likely diagnosis? What specific findings from his history and medications are significant risk factors for this condition? Finally, what is the gold standard for a definitive diagnosis, and what would it reveal?

```

## Answer

```

<start_working_out>

Alright, let's think this through. We have a 58-year-old man who's overweight and has a history of hypertension.

He's come in with excruciating pain, swelling, and redness in his right great toe.

The pain started abruptly overnight and is so severe that even the bedsheet touching his toe is unbearable.

He also mentions drinking several beers and eating a large steak dinner the night before.On examination,

he has a low-grade fever of 38.1°C and the first metatarsophalangeal (MTP) joint of his right foot is markedly swollen, erythematous, warm, and exquisitely tender to palpation.

His lab results show an elevated white blood cell count, a markedly elevated serum uric acid, and an elevated CRP.

These findings are pointing towards an inflammatory process, likely related to uric acid.Given his symptoms and lab results, the most likely diagnosis is gout.

Gout is characterized by the deposition of monosodium urate crystals in joints, often affecting the first MTP joint.

The sudden onset of severe pain, the presence of swelling and redness, and the elevated uric acid levels are all classic signs of gout.

Now, let's consider the risk factors. His history of hypertension and being overweight are significant risk factors for gout.

Obesity and hypertension are both associated with increased uric acid levels, which can lead to the formation of uric acid crystals and subsequent gout attacks.

The gold standard for a definitive diagnosis of gout is a joint aspiration. This procedure involves taking a sample of synovial fluid from the affected joint and examining it under a microscope for the presence of monosodium urate crystals.

The presence of these crystals confirms the diagnosis of gout.So, in summary, the most likely diagnosis is gout, and the joint aspiration would reveal the presence of monosodium urate crystals, confirming the diagnosis.

<end_working_out>

<SOLUTION>The most likely diagnosis for the 58-year-old male patient is gout.

The presentation of excruciating pain, swelling, redness, and warmth in the first metatarsophalangeal (MTP) joint of the right foot,

along with the abrupt onset of symptoms, elevated white blood cell count, markedly elevated serum uric acid, and elevated CRP,

are all characteristic of gout.Significant risk factors for this condition include his history of hypertension and being overweight.

Obesity and hypertension are both associated with increased uric acid levels, which can lead to the formation of uric acid crystals and subsequent gout attacks.

The gold standard for a definitive diagnosis of gout is a joint aspiration. This procedure involves taking a sample of synovial fluid from the affected joint and examining it under a microscope for the presence of monosodium urate crystals.

The presence of these crystals confirms the diagnosis of gout.In a joint aspiration, the presence of monosodium urate crystals would be revealed,

confirming the diagnosis of gout.

</SOLUTION>

```

## Question

```

A 48-year-old female presents to her primary care physician stating, "I just feel empty." She reports a 4-month period of pervasive low mood. She has lost interest in her hobbies, including gardening and book club, which she used to love.

She describes significant fatigue, stating she feels "exhausted all the time" despite sleeping more than usual.

She has difficulty concentrating at her job as an accountant and has made several simple errors recently. Her appetite has decreased, and she has had an unintentional weight loss of 10 lbs. She expresses feelings of worthlessness, saying "I feel like a failure as a wife and mother."

She denies any specific plans to harm herself but admits to sometimes "wishing she wouldn't wake up in the morning."

Her past medical history is unremarkable. A physical examination and basic metabolic panel are entirely within normal limits.

What is the most likely diagnosis for this patient? Based on the DSM-5 criteria, list at least five key symptoms from her history that support this diagnosis.

Finally, what is a crucial next step in assessing this patient's immediate safety?

```

## Answer

```

<start_working_out>

Alright, let's think about this. A 48-year-old woman is feeling empty and low in mood for the past four months.

She's lost interest in things she used to enjoy, like gardening and book club. That's a big red flag for depression.

She's also feeling really tired, even though she's sleeping more.

That's another classic symptom of depression.

And she's having trouble concentrating at work, which is impacting her performance.

She's lost her appetite and has lost weight unintentionally.

That's concerning and could be a sign of depression.She's feeling worthless and says she feels like a failure as a wife and mother.

That's a sign of low self-esteem, which is often associated with depression.She mentions wishing she wouldn't wake up in the morning.

That's a sign of suicidal ideation, which is a serious concern.

Her past medical history is unremarkable, and her physical exam and basic metabolic panel are normal.

This suggests the symptoms are likely psychological rather than physical.Based on the DSM-5 criteria,

she has five key symptoms: persistent low mood, loss of interest in activities, fatigue, difficulty concentrating, and feelings of worthlessness.

Given these symptoms, the most likely diagnosis is Major Depressive Disorder.Now, it's crucial to assess her immediate safety.

She admits to suicidal thoughts, which is a significant risk factor.The next step is to conduct a thorough safety assessment.

This involves asking direct questions about her suicidal thoughts, plans, and intent.

It's important to have a safety plan in place and to ensure she has access to mental health support.

This patient needs immediate attention to ensure her safety and well-being.

<end_working_out>

<SOLUTION>The most likely diagnosis for this patient is Major Depressive Disorder, based on the DSM-5 criteria.

The patient presents with several key symptoms that align with this diagnosis:

1. **Persistent low mood:** The patient reports feeling "empty" and experiencing pervasive low mood for the past four months.

2. **Loss of interest in activities:** She has lost interest in her hobbies, including gardening and book club, which she used to enjoy.

3. **Fatigue:** She describes feeling "exhausted all the time," even though she is sleeping more than usual.

4. **Difficulty concentrating:** She has difficulty concentrating at her job as an accountant, leading to simple errors.

5. **Feelings of worthlessness:** She expresses feelings of worthlessness, stating "I feel like a failure as a wife and mother."

In addition to these symptoms, the patient's unintentional weight loss of 10 lbs and suicidal ideation (wishing she wouldn't wake up in the morning) further support the diagnosis of Major Depressive Disorder.

A crucial next step in assessing this patient's immediate safety is to conduct a thorough safety assessment.

This involves asking direct questions about her suicidal thoughts, plans, and intent.

It is also important to have a safety plan in place and to ensure she has access to mental health support.

This assessment will help determine the level of risk and guide appropriate interventions to ensure her safety and well-being.

</SOLUTION>

```

# Uploaded finetuned model

- **Developed by:** lastmass

- **License:** apache-2.0

- **Finetuned from model :** unsloth/medgemma-4b-it

This gemma3 model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

InfiX-ai/InfiGFusion-14B

|

InfiX-ai

| 2025-08-07T09:01:09Z | 0 | 1 | null |

[

"pytorch",

"phi3",

"custom_code",

"arxiv:2505.13893",

"license:mit",

"region:us"

] | null | 2025-06-01T14:27:41Z |

---

license: mit

---

<h1 align="center">

InfiGFusion: Graph-on-Logits Distillation via Efficient Gromov-Wasserstein for Model Fusion

</h1>

<h4 align="center">

[](https://arxiv.org/pdf/2505.13893)

[](https://github.com/Reallm-Labs/InfiGFusion/edit/main/README.md)

[](https://huggingface.co/papers/2505.13893)

</h4>

**InfiGFusion** is the first structure-aware fusion framework for large language models that models semantic dependencies among logits using feature-level graphs. We introduce a novel Graph-on-Logits Distillation (GLD) loss that captures cross-dimension interactions via co-activation graphs and aligns them using an efficient, provable approximation of Gromov-Wasserstein distance (reducing complexity from O(n^4) to O(nlogn)). Our released **InfiGFusion-14B** model consistently shows better performance, achieving +35.6 on Multistep Arithmetic and +37.06 on Causal Judgement over SFT, demonstrating superior multi-step and complex logic inference.

## 🎉 News

🎉 The ckpt model, InfiGFusion-14B, has been released on Huggingface! ! !

## 🎨 Fusion Framework

## 📕 Model Summary

| | |

|-------------------------|-------------------------------------------------------------------------------|

| **Developers** | Reallm-Labs |

| **Description** | InfiGFusion is an open fusion model series designed to fuse multiple domain LLMs into a single LLM. It excels in multi-step and relational inference, enabling robust performance across complex reasoning tasks.|

| **Architecture** | 14B parameters, dense decoder-only Transformer model |

| **Inputs** | Text, best suited for prompts in the chat format |

| **Max Context length** | 16K tokens |

| **Fusing input length** | 4K tokens |

| **Fusing time** | 195 hours |

| **Fusing data** | 520M tokens |

| **Outputs** | Generated text in response to input |

| **Status** | Static model trained on an offline dataset |

| **License** | MIT |

## 🩺 Intended Use

| | |

| -------------------------- | ------------------|

| **Primary Use Cases** | `InfiGFusion` is designed to accelerate research on language model fusion and serve as a foundation for generative AI-powered features. It is suitable for building general-purpose AI systems and applications (primarily in English), especially in scenarios that require:<br><br>1. Operation in memory- or compute-constrained environments.<br>2. Low-latency inference.<br>3. Advanced reasoning and logical inference.|

| **Out-of-Scope Use Cases** | `InfiGFusion` is not specifically optimized or evaluated for all downstream tasks. As such:<br><br>1. Developers should consider the general limitations of language models and carefully evaluate performance, safety, and fairness before deploying in sensitive or high-stakes applications.<br>2. Use of the model must comply with all applicable laws and regulations (e.g., data privacy, export controls), particularly given its English-language focus.<br>3. This Model Card does not alter or restrict the terms of the model’s open-source license. |

## 💼 Data Overview

### 📚 Training Data

We construct a novel multi-task training dataset comprising **130k curated examples** across three major domains: **general reasoning**, **mathematics**, and **code generation**.

1. **General Reasoning (52K samples)**

Samples are sourced from the [Infinity-Instruct](https://huggingface.co/datasets/BAAI/Infinity-Instruct) dataset, a high-quality instruction-following corpus created through expert filtering.

2. **Mathematics (39K samples)**

Questions are drawn from the [NuminaMath-1.5](https://huggingface.co/datasets/AI-MO/NuminaMath-1.5) dataset—an advanced benchmark for competition-level math spanning Algebra, Geometry, Combinatorics, Calculus, Inequalities, Logic & Puzzles, and Number Theory.

Answers are distilled from the [DeepSeek-R1-671B](https://huggingface.co/datasets/a-m-team/AM-DeepSeek-R1-Distilled-1.4M) model by the AM team.

3. **Code Generation (39K samples)**

We used [KodCode-V1-SFT-R1](https://huggingface.co/mlfoundations-dev/KodCode-V1-SFT-R1_300k_batch_size_512), a dataset with 268K code samples. Each example was processed by our pivot model to generate five completions. These were sandbox-evaluated, and samples where at least one generation failed were flagged. From these, we filtered and distilled 39K high-quality examples.

| **Type** | **General** | **Math** | **Code** |

| ----------------- | ----------------- | -------------- | ----------------- |

| **Dataset** | Infinity-Instruct | NuminaMath-1.5 | KodCode-V1-SFT-R1 |

| **Original Size** | 1.4M | 1.4M | 268K |

| **Filtered Size** | 52K | 39K | 39K |

#### Benchmark evaluation

To enhance the robustness of answer extraction under the regex-based evaluation framework of[OpenCompass](https://github.com/open-compass/opencompass) and [EvalPlus](https://github.com/evalplus/evalplus), we systematically refine the prompts used in several benchmark datasets. These tailored prompt formats are designed to facilitate precise output matching, mitigating ambiguities that often arise from model generations. The revised prompt templates corresponding to each dataset are presented in the following Table, which details how task instructions and answer formats are standardized to align with OpenCompass's automatic evaluation pipeline.

For datasets such as TheoremQA and HumanEval, we retain the original prompt configurations, adhering to their respective community-adopted evaluation protocols. This ensures consistency with prior works and preserves the validity of established benchmarks.

For MBPP, we utilize EvalPlus for a more rigorous assessment of LLM-generated code, providing enhanced reliability in functional correctness evaluation, more specifically:

| **Benchmark** | **Prompt Format** |

| ------------- | ----------------------------------|

| **IFEval** | `{prompt}\nPlease directly give the correct answer:` |

| **ARC-C** | `Question: {question}\nA. {textA}\nB. {textB}\nC. {textC}\nD. {textD}\nDirectly give me the correct answer option, and then explain:` |

| **Hellaswag** | `{ctx}\nQuestion: Which ending makes the most sense?\nDirectly give me the correct choice, you can further explain it or not.\nA. {A}\nB. {B}\nC. {C}\nD. {D}\nYou may choose from 'A', 'B', 'C', 'D'.\nAnswer:` |

| **BBH** | `Follow the given examples and answer the question.\n{_hint}\nQ: {input}\nA: Let's think step by step.` |

| **DROP** | `You will be asked to read a passage and answer a question. Some examples of passages and Q&A are provided below.\n{drop_examples}\n\n# Your Task\n---\n{prompt}\nThink step by step, then write a line of the form "Answer: $ANSWER" at the end of your response.` |

| **MMLU** | `{_hint}\nQuestion: {input}\nA. {A}\nB. {B}\nC. {C}\nD. {D}\n\nFor simple problems:\nDirectly provide the answer with minimal explanation.\n\nFor complex problems:\nUse this step-by-step format:\n## Step 1: [Concise description]\n[Brief explanation]\n## Step 2: [Concise description]\n[Brief explanation]\n\nRegardless of the approach, always conclude with:\nThe answer is [the_answer_letter].\nwhere the [the_answer_letter] is one of A, B, C or D.\n\nLet's think step by step.` |

| **GSM8K** | `{question}\nPlease reason step by step, and put your final answer within \boxed{}.` |

| **MATH** | `{problem}\nPlease reason step by step, and put your final answer within \boxed{}.` |

## 🚀 Usage

### Input Formats

Our fusion process uses [phi-4](https://huggingface.co/microsoft/phi-4) as the **pivot model**, and thus **InfiGFusion shares the same prompt format and usage style** as `phi-4`.

Given the nature of the training data, `InfiGFusion` performs best when used with prompts in the following chat-style format:

```bash

<|im_start|>system<|im_sep|>

You are a medieval knight and must provide explanations to modern people.<|im_end|>

<|im_start|>user<|im_sep|>

How should I explain the Internet?<|im_end|>

<|im_start|>assistant<|im_sep|>

```

### With `transformers`

```python

import transformers

pipeline = transformers.pipeline(

"text-generation",

model="InfiGFusion", # replace with actual model path

model_kwargs={"torch_dtype": "auto"},

device_map="auto",

)

messages = [

{"role": "system", "content": "You are a medieval knight and must provide explanations to modern people."},

{"role": "user", "content": "How should I explain the Internet?"},

]

outputs = pipeline(messages, max_new_tokens=1024)

print(outputs[0]["generated_text"][-1])

```

> 📌 *Note: Since InfiGFusion uses `phi-4` as its pivot model during fusion, it inherits many of its usage patterns and prompt compatibility features.*

## 🎯 Model Quality

## ✅ Responsible AI Considerations

Like other large language models, `InfiGFusion` may exhibit behaviors that raise concerns around fairness, safety, and reliability. While our fusion framework enhances reasoning and relational inference, the foundation models it integrates—including the pivot model `phi-4`—carry inherited limitations. Users should be aware of the following considerations:

* **Language Coverage & Bias:**

InfiGFusion is primarily trained and evaluated on English datasets. Its performance on non-English inputs may be degraded. Moreover, any biases or stereotypes present in the underlying models or datasets may be preserved or amplified through the fusion process.

* **Representation of Harms & Perpetuation of Stereotypes:**

The fused models may over- or under-represent certain groups or reinforce societal stereotypes. Although quality filters and alignment procedures are used, they cannot fully eliminate harmful representations due to real-world imbalances in the data sources.

* **Content Safety:**

The model may generate inappropriate, offensive, or unsafe content, especially in unconstrained or adversarial inputs. It is not recommended for use in sensitive domains (e.g., mental health, legal advice) without additional safeguards.

* **Fused Behavior Complexity:**

Due to the fusion of multiple distinct models, `InfiGFusion` may exhibit complex or emergent behaviors not present in any single model. This makes interpretability and debugging more challenging, especially in high-risk applications.

* **Factuality & Hallucination:**

Like other generative models, `InfiGFusion` may produce convincing yet factually incorrect or outdated content. Developers should not rely on model output as a source of truth and are encouraged to integrate verification mechanisms such as Retrieval-Augmented Generation (RAG).

* **Code Generation Limitations:**

While `InfiGFusion` includes training on code-related datasets, it may still produce invalid, unsafe, or incomplete code snippets. Outputs involving unfamiliar libraries or languages should be carefully reviewed before use.

---

### Developer Responsibilities

Developers using `InfiGFusion` are encouraged to:

* **Evaluate outputs contextually**, particularly for fairness, accuracy, and safety.

* **Follow all applicable laws and regulations**, including those relating to privacy, trade compliance, and data use.

* **Avoid deployment in high-stakes decision-making** (e.g., employment, finance, law enforcement) without extensive validation and domain-specific safeguards.

* **Clearly disclose to users** that they are interacting with an AI system, following transparency and responsible AI best practices.

By using this model, you agree to evaluate and manage risks responsibly and ensure your applications align with ethical and regulatory expectations.

## 🗒️ BibTex Citation

If you find this work helpful, feel free to give us a cite.

```bigquery

@article{wang2025infigfusion,

title={InfiGFusion: Graph-on-Logits Distillation via Efficient Gromov-Wasserstein for Model Fusion},

author={Wang, Yuanyi and Yan, Zhaoyi and Zhang, Yiming and Zhou, Qi and Gu, Yanggan and Wu, Fei and Yang, Hongxia},

journal={arXiv preprint arXiv:2505.13893},

year={2025}

}

```

|

Anzhc/MS-LC-EQ-D-VR_VAE

|

Anzhc

| 2025-08-07T08:26:03Z | 2,745 | 30 |

diffusers

|

[

"diffusers",

"arxiv:2502.09509",

"arxiv:2506.07863",

"base_model:stabilityai/sdxl-vae",

"base_model:finetune:stabilityai/sdxl-vae",

"region:us"

] | null | 2025-07-15T22:12:46Z |

---

base_model:

- stabilityai/sdxl-vae

library_name: diffusers

---

# MS-LC-EQ-D-VR VAE: another reproduction of EQ-VAE on variable VAEs and then some

### Current VAEs present:

- SDXL VAE

- FLUX VAE

EQ-VAE paper: https://arxiv.org/abs/2502.09509 <br>

VIVAT paper: https://arxiv.org/pdf/2506.07863v1 <br>

Thanks to Kohaku and his reproduction that made me look into this: https://huggingface.co/KBlueLeaf/EQ-SDXL-VAE <br>

Latent to PCA <br>

**IMPORTANT**: This VAE requires reflection padding on conv layers. It should be added both in your trainer, and your webui.

You can do it with this function on VAE model:

```

for module in self.model.modules():

if isinstance(module, nn.Conv2d):

pad_h, pad_w = module.padding if isinstance(module.padding, tuple) else (module.padding, module.padding)

if pad_h > 0 or pad_w > 0:

module.padding_mode = "reflect"

```

If you have trained without this - don't worry, just add this modification and do a small tune to fix up artefacts on edges.

(left - padded, right - not)

## Introduction

Refer to https://huggingface.co/KBlueLeaf/EQ-SDXL-VAE for introduction to EQ-VAE.

This implementation additionally utilizes some of fixes proposed in VIVAT paper, and custom in-house regularization techniques, as well as training implementation.

For additional examples and more information refer to: https://arcenciel.io/articles/20 and https://arcenciel.io/models/10994

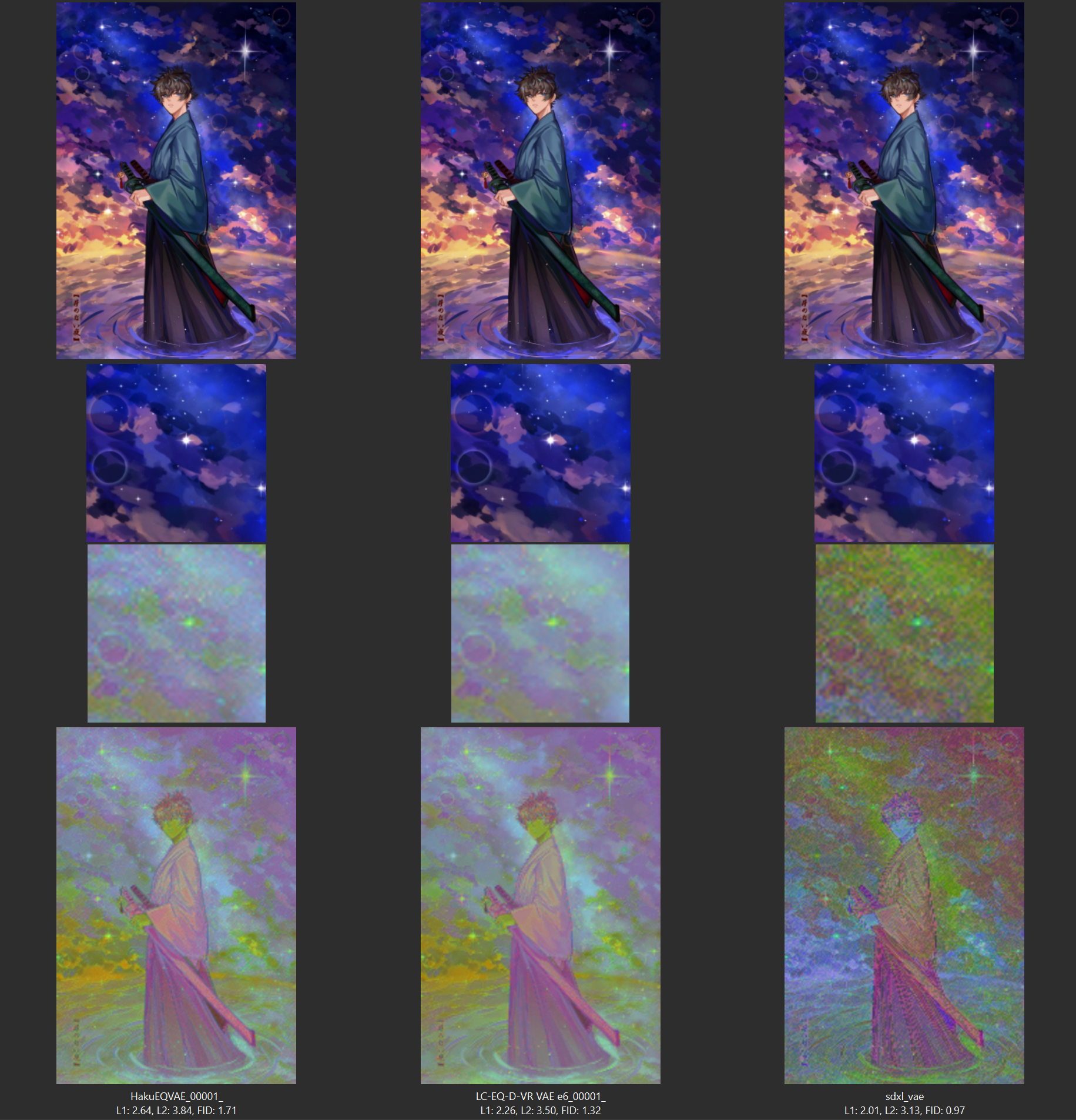

## Visual Examples

## Usage

This is a finetuned SDXL VAE, adapted with new regularization, and other techniques. You can use this with your existing SDXL model, but image will be quite artefacting, particularly - oversharpening and ringing.

This VAE is supposed ot be used for finetune, after that images will become normal. But be aware, compatibility with old VAEs, that are not EQ, will be lost(They will become blurry).

## Training Setup

#### Base SDXL:

* Base Model: [SDXL-VAE](https://huggingface.co/stabilityai/sdxl-vae)

* Resolution: 256

* Dataset: ~12.8k anime images

* Batch Size: 128 (bs 8, grad acc 16)

* Samples Seen: ~75k

* Loss Weights:

* L1: 0.3

* L2: 0.5

* SSIM: 0.5

* LPIPS: 0.5

* KL: 0.000001

* Consistency Loss: 0.75

Both Encoder and Decoder were trained.

**Training Time**: ~8-10 hours on **4060Ti**

#### B2:

* Base Model: First version

* Resolution: 256

* Dataset: 87.8k anime images

* Batch Size: 128 (bs 8, grad acc 16)

* Samples Seen: ~150k

* Loss Weights:

* L1: 0.2

* L2: 0.4

* SSIM: 0.6

* LPIPS: 0.8

* KL: 0.000001

* Consistency Loss: 0.75

Both Encoder and Decoder were trained.

**Training Time**: ~16 hours on **4060Ti**

#### B3:

* Base Model: B2

* Resolution: 256

* Dataset: 162.8k anime images

* Batch Size: 128 (bs 8, grad acc 16)

* Samples Seen: ~225k

* Loss Weights:

* L1: 0.2

* L2: 0.4

* SSIM: 0.6

* LPIPS: 0.8

* KL: 0.000001

* Consistency Loss: 0.75

Both Encoder and Decoder were trained.

**Training Time**: ~24 hours on **4060Ti**

#### B4:

* Base Model: B3

* Resolution: 320

* Dataset: ~237k anime images

* Batch Size: 72 (bs 6, grad acc 12)

* Samples Seen: ~300k

* Loss Weights:

* L1: 0.5

* L2: 0.9

* SSIM: 0.6

* LPIPS: 0.7

* KL: 0.000001

* Consistency Loss: 0.75

* wavelet: 0.3

Both Encoder and Decoder were trained.

**Total Training Time**: ~33 hours on **4060Ti**

B2 is a direct continuation of base version, stats displayed are cumulative across multiple runs.

I took batch of 75k images, so samples seen never repeated.

B3 repeats B2 for another batch of data and further solidifies cleaner latents. Minor tweaks were done to training code for better regularization.

B4 changes mixture a bit, to concentrate more on reconstruction quality. Additionally, resolution was increased to 320. Wavelet loss was added at low values(but it's effect is yet to be studied).

---

#### Base FLUX:

* Base Model: [FLUX-VAE](https://huggingface.co/black-forest-labs/FLUX.1-dev/tree/main/vae)

* Dataset: ~12.8k anime images

* Batch Size: 128 (bs 8, grad acc 16)

* Samples Seen: ~62.5k

* Loss Weights:

* L1: 0.3

* L2: 0.4

* SSIM: 0.6

* LPIPS: 0.6

* KL: 0.000001

* Consistency Loss: 0.75

Both Encoder and Decoder were trained.

**Training Time**: ~6 hours on **4060Ti**

## Evaluation Results

Im using small test set i have on me, separated into anime(434) and photo(500) images. Additionally, im measuring noise in latents. Sorgy for no larger test sets.

### Results on small benchmark of 500 photos

| VAE SDXL | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS-SSIM ↑ | KL ↓ | RFID ↓ |

|---------------------------------------|----------------------------------|-------------------------------------|-------------------------------------|-------------------------------------|-------------------------------------|-------------------------------------|--------------------------------------|

| sdxl_vae | 6.282 | 10.534 | 29.278 | <span style="color:Crimson">0.063</span> | 0.947 | <span style="color:Crimson">31.216</span> | <span style="color:Crimson">4.819</span> |

| Kohaku EQ-VAE | 6.423 | 10.428 | 29.140 | <span style="color:Orange">*0.082*</span> | 0.945 | 43.236 | 6.202 |

| Anzhc MS-LC-EQ-D-VR VAE | <span style="color:Orange">*5.975*</span> | <span style="color:Orange">*10.096*</span> | <span style="color:Orange">*29.526*</span> | 0.106 | <span style="color:Orange">*0.952*</span> | <span style="color:Orange">*33.176*</span> | 5.578 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | 6.082 | 10.214 | 29.432 | 0.103 | 0.951 | 33.535 | 5.509 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | 6.066 | 10.151 | 29.475 | 0.104 | 0.951 | 34.341 | 5.538 |

| Anzhc MS-LC-EQ-D-VR VAE B4 | <span style="color:Crimson">5.839</span> | <span style="color:Crimson">9.818</span> | <span style="color:Crimson">29.788</span> | 0.112 | <span style="color:Crimson">0.954</span> | 35.762 | <span style="color:Orange">*5.260*</span> |

| VAE FLUX | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS‑SSIM ↑ | KL ↓ | rFID ↓ |

|---|---|---|---|---|---|---|---|

| FLUX VAE | <span style="color:Orange">*4.147* | <span style="color:Orange">*6.294* | <span style="color:Orange">*33.389* | <span style="color:Crimson">0.021 | <span style="color:Crimson">0.987 | <span style="color:Orange">*12.146* | <span style="color:Crimson">0.565 |

| MS‑LC‑EQ‑D‑VR VAE FLUX | <span style="color:Crimson">3.799 | <span style="color:Crimson">6.077 | <span style="color:Crimson">33.807 | <span style="color:Orange">*0.032* | <span style="color:Orange">*0.986* | <span style="color:Crimson">10.992 | <span style="color:Orange">*1.692* |

#### Noise in latents

| VAE SDXL | Noise ↓ |

|-----------------------------------------|------------------------------------|

| sdxl_vae | 27.508 |

| Kohaku EQ-VAE | 17.395 |

| Anzhc MS-LC-EQ-D-VR VAE | 15.527 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | 13.914 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | <span style="color:Orange">*13.124*</span> |

| Anzhc MS-LC-EQ-D-VR VAE B4 | <span style="color:Crimson">12.354</span> |

| VAE FLUX | Noise ↓ |

|---|---|

| FLUX VAE | <span style="color:Orange">*10.499* |

| MS‑LC‑EQ‑D‑VR VAE FLUX | <span style="color:Crimson">7.635 |

---

### Results on a small benchmark of 434 anime arts

| VAE SDXL | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS-SSIM ↑ | KL ↓ | RFID ↓ |

|-----------------------------------------|----------------------------------|---------------------------------------|---------------------------------------|-------------------------------------|--------------------------------------|--------------------------------------|------------------------------------|

| sdxl_vae | 4.369 | 7.905 | <span style="color:Orange">*31.080*</span> | <span style="color:Crimson">0.038</span> | 0.969 | <span style="color:Crimson">35.057</span> | <span style="color:Crimson">5.088</span> |

| Kohaku EQ-VAE | 4.818 | 8.332 | 30.462 | <span style="color:Orange">*0.048*</span> | 0.967 | 50.022 | 7.264 |

| Anzhc MS-LC-EQ-D-VR VAE | 4.351 | <span style="color:Orange">*7.902*</span> | 30.956 | 0.062 | 0.970 | <span style="color:Orange">*36.724*</span> | 6.239 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | <span style="color:Orange">*4.313*</span> | 7.935 | 30.951 | 0.059 | <span style="color:Orange">*0.970*</span> | 36.963 | 6.147 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | 4.323 | 7.910 | 30.977 | 0.058 | 0.970 | 37.809 | 6.075 |

| Anzhc MS-LC-EQ-D-VR VAE B4 | <span style="color:Crimson">4.140</span> | <span style="color:Crimson">7.617</span> | <span style="color:Crimson">31.343</span> | 0.058 | <span style="color:Crimson">0.971</span> | 39.057 | <span style="color:Orange">*5.670*</span> |

| VAE FLUX | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS‑SSIM ↑ | KL ↓ | rFID ↓ |

|---|---|---|---|---|---|---|---|

| FLUX VAE | <span style="color:Orange">*3.060* | <span style="color:Crimson">4.775 | <span style="color:Crimson">35.440 | <span style="color:Crimson">0.011 | <span style="color:Crimson">0.991 | <span style="color:Orange">*12.472* | <span style="color:Crimson">0.670 |

| MS‑LC‑EQ‑D‑VR VAE FLUX | <span style="color:Crimson">2.933 | <span style="color:Orange">*4.856* | <span style="color:Orange">*35.251* | <span style="color:Orange">*0.018* | <span style="color:Orange">*0.990* | <span style="color:Crimson">11.225 | <span style="color:Orange">*1.561* |

#### Noise in latents

| VAE SDXL | Noise ↓ |

|-----------------------------------------|------------------------------------|

| sdxl_vae | 26.359 |

| Kohaku EQ-VAE | 17.314 |

| Anzhc MS-LC-EQ-D-VR VAE | 14.976 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | 13.649 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | <span style="color:Orange">*13.247*</span> |

| Anzhc MS-LC-EQ-D-VR VAE B4 | <span style="color:Crimson">12.652</span> |

| VAE FLUX | Noise ↓ |

|---|---|

| FLUX VAE | <span style="color:Orange">*9.913* |

| MS‑LC‑EQ‑D‑VR VAE FLUX | <span style="color:Crimson">7.723 |

KL loss suggests that this VAE implementation is much closer to SDXL, and likely will be a better candidate for further finetune, but that is just a theory.

B2 further improves latent clarity, while maintaining same or better performance. Particularly improved very fine texture handling, which previously would be overcorrected into smooth surface. Performs better in such cases now.

B3 cleans them up ever more, but at that point visually they are +- same.

## References

[1] [[2502.09509] EQ-VAE: Equivariance Regularized Latent Space for Improved Generative Image Modeling](https://arxiv.org/abs/2502.09509)

[2] [[2506.07863] VIVAT: VIRTUOUS IMPROVING VAE TRAINING THROUGH ARTIFACT MITIGATION](https://arxiv.org/abs/2506.07863v1)

[3] [sdxl-vae](https://huggingface.co/stabilityai/sdxl-vae)

## Cite

```bibtex

@misc{anzhc_ms-lc-eq-d-vr_vae,

author = {Anzhc},

title = {MS-LC-EQ-D-VR VAE: another reproduction of EQ-VAE on cariable VAEs and then some},

year = {2025},

howpublished = {Hugging Face model card},

url = {https://huggingface.co/Anzhc/MS-LC-EQ-D-VR_VAE},

note = {Finetuned SDXL-VAE with EQ regularization and more, for improved latent representation.}

}

```

## Acknowledgement

My friend Bluvoll, for no particular reason.

|

TAUR-dev/M-test_all_parts-sft

|

TAUR-dev

| 2025-08-07T08:14:31Z | 35 | 0 | null |

[

"safetensors",

"qwen2",

"region:us"

] | null | 2025-08-05T11:20:51Z |

# M-test_all_parts-sft

This model was created as part of the **test_all_parts** experiment using the SkillFactory experiment management system.

## Model Details

- **Training Method**: LLaMAFactory SFT (Supervised Fine-Tuning)

- **Stage Name**: sft

- **Experiment**: test_all_parts

## Training Configuration

{"model_name_or_path": "Qwen/Qwen2.5-1.5B-Instruct", "trust_remote_code": true, "stage": "sft", "do_train": true, "finetuning_type": "full", "deepspeed": "/datastor1/mwadhwa/code/skill-factory/thirdparty/LLaMA-Factory/examples/deepspeed/ds_z2_config.json", "dataset": "TAUR_dev__D_SFT_C_cd3arg_Qwen2_5_1_5B_Instruct_AnsRev_think", "template": "qwen", "cutoff_len": 16384, "max_samples": 50, "overwrite_cache": true, "preprocessing_num_workers": 1, "dataloader_num_workers": 0, "disable_tqdm": false, "output_dir": "/datastor1/mwadhwa/tmp/sf/llamafactory/checkpoints", "logging_steps": 10, "save_steps": 5, "plot_loss": true, "overwrite_output_dir": true, "per_device_train_batch_size": 1, "gradient_accumulation_steps": 1, "learning_rate": 1e-06, "num_train_epochs": 1, "lr_scheduler_type": "cosine", "warmup_ratio": 0.05, "weight_decay": 0.0001, "adam_beta1": 0.9, "adam_beta2": 0.95, "bf16": true, "ddp_timeout": 180000000, "gradient_checkpointing": true, "save_only_model": true, "enable_masked_ranges": false, "save_strategy": "steps", "save_total_limit": 5, "sf_tracker_dataset_id": "TAUR-dev/D-ExpTracker__test_all_parts__v1", "sf_eval_before_training": false, "sf_wandb_project": "test_all_parts_sft", "sf_eval_steps": null, "run_name": "test_all_parts_sft"}

## Experiment Tracking

🔗 **View complete experiment details**: [Experiment Tracker Dataset](https://huggingface.co/datasets/TAUR-dev/D-ExpTracker__test_all_parts__v1)

## Usage

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("TAUR-dev/M-test_all_parts-sft")

model = AutoModelForCausalLM.from_pretrained("TAUR-dev/M-test_all_parts-sft")

```

|

medoxz543/hate-speech

|

medoxz543

| 2025-08-07T07:08:01Z | 41 | 0 | null |

[

"pytorch",

"roberta",

"text-classification",

"en",

"dataset:Machlovi/Hatebase",

"dataset:manueltonneau/english-hate-speech-superset",

"base_model:vinai/bertweet-base",

"base_model:finetune:vinai/bertweet-base",

"license:apache-2.0",

"region:us"

] |

text-classification

| 2025-04-24T06:51:39Z |

---

license: apache-2.0

datasets:

- Machlovi/Hatebase

- manueltonneau/english-hate-speech-superset

language:

- en

metrics:

- accuracy

base_model:

- vinai/bertweet-base

pipeline_tag: text-classification

---

|

overfit-brothers/KRAG-SOTA

|

overfit-brothers

| 2025-08-07T05:56:32Z | 2 | 0 | null |

[

"safetensors",

"llama",

"region:us"

] | null | 2025-08-04T01:48:46Z |

# 입력 텍스트 예시

아래는 저희 팀에서 구축한 RAG 파이프라인으로 완성되는 모델 입력 텍스트의 예시입니다.

```python

"""다음 글은 어문 규범에 맞는 표현을 고르거나 어문 규범에 따라 문장을 교정하고 그 이유를 설명할 수 있는 자료이다

2. 자음 뒤에 [w]가 올 때에는 두 음절로 갈라 적되, [gw], [hw], [kw]는 한 음절로 붙여

적는다.

- swing[swiŋ] 스윙, twist[twist] 트위스트, penguin[peŋgwin] 펭귄, whistle[hwisl] 휘슬,quarter[kwɔːtə] 쿼터

다음은 질문 유형과 답변 형식을 이해하는 데 도움이 되는 여러 예시입니다. 이 예시들의 내용에 얽매이지 않고, 주어진 질문에 대해 가장 적절한 답변을 생성하세요.

question:""네덜란드의 {헤이그/하흐}에 가 봤다."" 가운데 올바른 것을 선택하고, 그 이유를 설명하세요.

answer:""네덜란드의 헤이그에 가 봤다.""가 옳다. 원지음이 아닌 제3국의 발음으로 통용되고 있는 것은 관용을 따른다. 따라서 '헤이그'로 쓰는 것이 적절하다.

**위 'answer:' 뒤의 출력 형식을 엄격히 준수하여 답변을 생성하십시오.**

이제 질문을 시작합니다.

question:""{히치하이크/힛치하이크}를 하다."" 가운데 올바른 것을 선택하고, 그 이유를 설명하세요."""

```

---

base_model: []

library_name: transformers

tags:

- mergekit

- merge

---

# 0725_5-merge

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [SLERP](https://en.wikipedia.org/wiki/Slerp) merge method.

### Models Merged

The following models were included in the merge:

* /home/infidea/rebirth-hjun/KRAG_2025/modle_merge/kanana-1.5-8b-instruct-2505-lora-20250715-1532

* /home/infidea/rebirth-hjun/KRAG_2025/modle_merge/0725_4-merge

### Configuration

The following YAML configuration was used to produce this model:

```yaml

base_model: /home/infidea/rebirth-hjun/KRAG_2025/modle_merge/0725_4-merge

dtype: bfloat16

merge_method: slerp

modules:

default:

slices:

- sources:

- layer_range: [0, 32]

model: /home/infidea/rebirth-hjun/KRAG_2025/modle_merge/0725_4-merge

- layer_range: [0, 32]

model: /home/infidea/rebirth-hjun/KRAG_2025/modle_merge/kanana-1.5-8b-instruct-2505-lora-20250715-1532

parameters:

t:

- filter: self_attn

value: [0.0, 0.5, 0.7, 0.9, 1.0]

- filter: mlp

value: [1.0, 0.5, 0.7, 0.5, 0.0]

- value: 0.8

```

|

Fugaki/RecurrentGemma_IndonesiaSummarizerNews

|

Fugaki

| 2025-08-07T05:52:27Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-07T05:33:18Z |

---

license: apache-2.0

---

|

meandyou200175/intent_1tg_fix

|

meandyou200175

| 2025-08-07T04:25:22Z | 0 | 0 |

transformers

|

[

"transformers",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-08-07T03:49:44Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Thireus/GLM-4.5-THIREUS-IQ1_KT-SPECIAL_SPLIT

|

Thireus

| 2025-08-07T04:16:20Z | 7 | 0 | null |

[

"gguf",

"arxiv:2505.23786",

"license:mit",

"endpoints_compatible",

"region:us",

"imatrix",

"conversational"

] | null | 2025-08-03T09:02:17Z |

---

license: mit

---

## ⚠️ Cautionary Notice

Due to changes in the GLM-4.5 PR the GGUF files of this repository have changed. Any older version of these GGUFs are no longer compatible with the latest version of `llama.cpp` and `ik_llama.cpp`. Please download the latest GGUF files of this repository and make sure to use the latest version of `llama.cpp` or `ik_llama.cpp`.

- **For `llama.cpp`** – see the discussion in [PR #14939](https://github.com/ggml-org/llama.cpp/pull/14939).

- **For `ik_llama.cpp`** – refer to [ikawrakow/ik_llama.cpp#668](https://github.com/ikawrakow/ik_llama.cpp/pull/668).

**Unless you are confident in what you're doing, and until support is officially confirmed (PR merged),**

> 🔒 **Do not use these quantized models for production**

> 🔬 **Do not use them to assess the quality of the GLM-4.5 models**

Proceed with caution and keep an eye on the upstream PRs for any updates that could affect compatibility or performance.

---

# GLM-4.5

## 🤔 What is this [HuggingFace repository](https://huggingface.co/Thireus/GLM-4.5-THIREUS-BF16-SPECIAL_SPLIT/) about?

This repository provides **GGUF-quantized tensors** for the GLM-4.5 model (official repo: https://huggingface.co/zai-org/GLM-4.5). These GGUF shards are designed to be used with **Thireus’ GGUF Tool Suite** (https://gguf.thireus.com), a collection of tools that automatically finds the perplexity-optimal mix of quantizations for any given VRAM and RAM target. With the Tool Suite, you can generate and download custom quantization “recipes” effortlessly.

- 📖 Read more: https://github.com/Thireus/GGUF-Tool-Suite

- 🔍 Example quant mixes: https://github.com/Thireus/GGUF-Tool-Suite/tree/main/recipe_examples

- 🛠️ Create your own recipe: https://colab.research.google.com/github/Thireus/GGUF-Tool-Suite/blob/main/quant_recipe_pipeline.ipynb

- 📂 Browse available quant shards: https://huggingface.co/Thireus/collections

*tl;dr: Expand the details section below*

<details>

```

cd ~

# Make sure to install all ik_llama.cpp compilation dependencies...

apt install python3-dev python3-pip python3-venv python3-wheel python3-setuptools git acl netcat-openbsd cmake # pipx

# Obtain ik_llama's Thireus version - Windows builds available at https://github.com/Thireus/ik_llama.cpp/releases

git clone https://github.com/Thireus/ik_llama.cpp

cd ik_llama.cpp

git pull

# Build ik_llama.cpp

cmake -B build -DGGML_AVX=ON -DGGML_AVX2=ON -DLLAMA_CURL=OFF -DGGML_MAX_CONTEXTS=2048

cmake --build build --config Release -j16

cd ..

# Obtain Thireus' GGUF-Tool-Suite

git clone https://github.com/Thireus/GGUF-Tool-Suite

# Download model quant mix from recipe file:

cd GGUF-Tool-Suite

rm -f download.conf # Make sure to copy the relevant download.conf for the model before running quant_assign.py

cp -f models/GLM-4.5/download.conf . # Use the download.conf of the chosen model

mkdir -p kitchen && cd kitchen

../quant_downloader.sh ../recipe_examples/GLM-4.5.ROOT-3.6910bpw-3.2785ppl.153GB-GGUF_19GB-GPU_134GB-CPU.68f915c_9c7682b.recipe

# Launch ik_llama's llama-cli:

ulimit -n 99999 # Lifts "too many open files" limitation on Linux

~/ik_llama.cpp/build/bin/llama-cli \

-m GLM-4.5-THIREUS-BF16-SPECIAL_TENSOR-00001-of-01148.gguf \

-fa -amb 512 -fmoe -ctk f16 -c 4096 -ngl 99 \

-ot "blk\.(3|4|5|6)\.ffn_.*=CUDA0" \

-ot "blk\.(7|8|9|10)\.ffn_.*=CUDA1" \

-ot exps=CPU -b 2048 -ub 1024 --warmup-batch --no-mmap --threads 36 \

--main-gpu 0 \

-p '<|begin▁of▁sentence|><|User|>What is the solution of x+5=-2?<|Assistant|><think>\n'

```

</details>

---

## ❓ Why does this Tool Suite exist?

1. **Compatibility & Speed** – [unsloth](https://huggingface.co/unsloth)’s dynamic quants may not always work optimally with `ik_llama.cpp`.

2. **Custom Rig Fit** – No off-the-shelf GGUF model perfectly matched my VRAM/RAM setup, so I built a way to tailor models and leverage extra VRAM/RAM to reduce perplexity.

3. **Automated PPL-Optimal Quantization** – To my knowledge, there was no flexible, automated method to minimize perplexity for any bits-per-weight (bpw) target—so I created one with excellent results!

---

## 📊 How does it compare to other GGUFs?

Here’s how DeepSeek-R1-0528 quantized with **Thireus’ GGUF Tool Suite** stacks up against other quantizers (lower perplexity = better at equal or lower bpw):

> _Note: The `recipe_examples` files illustrate good recipes. The Tool Suite computes the optimal ppl/bpw curve for you — just specify your target RAM, VRAM, and quant types, and `quant_assign.py` finds the best mix._

More perplexity/bpw graphs for other supported models: https://github.com/Thireus/GGUF-Tool-Suite/tree/main/ppl_graphs

---

## 🚀 How do I get started?

Check out the [GGUF Tool Suite README](https://github.com/Thireus/GGUF-Tool-Suite) — focus on these sections:

1. ⚠️ **Requirements** – Which `ik_llama.cpp` (or `llama.cpp`) version to use and how to compile.

- Windows binaries (no patching needed) at: https://github.com/Thireus/ik_llama.cpp/releases

2. 📥 **Download Model Shards** – Use `quant_downloader.sh` to fetch GGUF shards from any recipe.

- Recipe examples: https://github.com/Thireus/GGUF-Tool-Suite/tree/main/recipe_examples

3. 🧠 **Run a Downloaded Model** – Sample usage with `llama-cli`.

4. 🛠️ **Generate a Custom Recipe** – Produce recipes tailored to your rig for optimal perplexity.

---

## ✅ Supported Models

Supported models are listed under `models/` in the [Tool Suite Github repo](https://github.com/Thireus/GGUF-Tool-Suite/tree/main/models). Presence of `ppl_results.csv` indicates official support and compatibility with `quant_assign.py`.

---

## 🤷♂️ Will I release pre-cooked GGUF files?

No, because I believe in **tailored quantization** for each user’s hardware. If you prefer ready-made shards, you are welcome to merge them via `llama-gguf-split --merge`, or request someone to publish them.

Instead, I prefer to share examples of recipes so users can see exactly how they were produced (command included inside these recipe files) and tweak them for their own rigs. The `quant_downloader.sh` script handles automatic fetching and verification of each shard. Recipes provided by [Ubergarm](https://huggingface.co/ubergarm) on his model cards are also compatible with `quant_downloader.sh`.

Users who don’t trust the GGUF shards on HuggingFace can also quantize their own by passing recipe lines to `llama-quantize --custom-q` ([see example](https://github.com/Thireus/GGUF-Tool-Suite/blob/main/models/DeepSeek-R1-0528/DeepSeek-R1-0528-THIREUS-ANY-SPECIAL.sh#L482-L486)). Run `llama-quantize --help` to list compatible quants for `quant_assign.py`. This approach is especially useful if you prefer `llama.cpp` over `ik_llama.cpp`.

---

## 📦 What’s in this repository?

- **00001 GGUF header shard** – Contains metadata (tokens, chat template, tensor count, etc.). This metadata can be explored directly from the HuggingFace web interface after clicking on that shard.

- **Tensor shards** – Each shard holds one tensor; see `tensors.map` for names, quant types, sizes, SHA-256 hash, shard IDs, etc.

- **GPG-signed files** – `tensors.map` and header shard are signed with the key in [trusted-keys.asc](https://github.com/Thireus/GGUF-Tool-Suite/blob/main/trusted-keys.asc) for tamper detection.

- **Security note** – Some papers about various ways to attack GGUFs and LLMs are available online, such as https://arxiv.org/abs/2505.23786, and there are also more classic security exploits like CVE-2024-23496 and CVE-2024-25664 through CVE-2024-25668. Only use GGUFs from reputable, trusted authors—or alternatively self-quantize—to avoid potential exploits.

---

## 💡 Pro Tips

You can download the BF16 model version to quantize your own shards:

```

mkdir kitchen

echo '.*=bf16' > kitchen/bf16.recipe

cd kitchen

../quant_downloader.sh bf16.recipe

```

Enjoy optimized quantization! 🎉

|

x2bee/Polar-oss-20B

|

x2bee

| 2025-08-07T03:19:55Z | 1 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gpt_oss",

"text-generation",

"vllm",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"8-bit",

"mxfp4",

"region:us"

] |

text-generation

| 2025-08-07T03:09:42Z |

---

license: apache-2.0

pipeline_tag: text-generation

library_name: transformers

tags:

- vllm

---

<p align="center">

<img alt="gpt-oss-20b" src="https://raw.githubusercontent.com/openai/gpt-oss/main/docs/gpt-oss-20b.svg">

</p>

<p align="center">

<a href="https://gpt-oss.com"><strong>Try gpt-oss</strong></a> ·

<a href="https://cookbook.openai.com/topic/gpt-oss"><strong>Guides</strong></a> ·

<a href="https://openai.com/index/gpt-oss-model-card"><strong>Model card</strong></a> ·

<a href="https://openai.com/index/introducing-gpt-oss/"><strong>OpenAI blog</strong></a>

</p>

<br>

Welcome to the gpt-oss series, [OpenAI’s open-weight models](https://openai.com/open-models) designed for powerful reasoning, agentic tasks, and versatile developer use cases.

We’re releasing two flavors of these open models:

- `gpt-oss-120b` — for production, general purpose, high reasoning use cases that fit into a single H100 GPU (117B parameters with 5.1B active parameters)

- `gpt-oss-20b` — for lower latency, and local or specialized use cases (21B parameters with 3.6B active parameters)

Both models were trained on our [harmony response format](https://github.com/openai/harmony) and should only be used with the harmony format as it will not work correctly otherwise.

> [!NOTE]

> This model card is dedicated to the smaller `gpt-oss-20b` model. Check out [`gpt-oss-120b`](https://huggingface.co/openai/gpt-oss-120b) for the larger model.

# Highlights

* **Permissive Apache 2.0 license:** Build freely without copyleft restrictions or patent risk—ideal for experimentation, customization, and commercial deployment.

* **Configurable reasoning effort:** Easily adjust the reasoning effort (low, medium, high) based on your specific use case and latency needs.

* **Full chain-of-thought:** Gain complete access to the model’s reasoning process, facilitating easier debugging and increased trust in outputs. It’s not intended to be shown to end users.

* **Fine-tunable:** Fully customize models to your specific use case through parameter fine-tuning.

* **Agentic capabilities:** Use the models’ native capabilities for function calling, [web browsing](https://github.com/openai/gpt-oss/tree/main?tab=readme-ov-file#browser), [Python code execution](https://github.com/openai/gpt-oss/tree/main?tab=readme-ov-file#python), and Structured Outputs.

* **Native MXFP4 quantization:** The models are trained with native MXFP4 precision for the MoE layer, making `gpt-oss-120b` run on a single H100 GPU and the `gpt-oss-20b` model run within 16GB of memory.

---

# Inference examples

## Transformers

You can use `gpt-oss-120b` and `gpt-oss-20b` with Transformers. If you use the Transformers chat template, it will automatically apply the [harmony response format](https://github.com/openai/harmony). If you use `model.generate` directly, you need to apply the harmony format manually using the chat template or use our [openai-harmony](https://github.com/openai/harmony) package.

To get started, install the necessary dependencies to setup your environment:

```

pip install -U transformers kernels torch

```

Once, setup you can proceed to run the model by running the snippet below:

```py

from transformers import pipeline

import torch

model_id = "openai/gpt-oss-20b"

pipe = pipeline(

"text-generation",

model=model_id,

torch_dtype="auto",

device_map="auto",

)

messages = [

{"role": "user", "content": "Explain quantum mechanics clearly and concisely."},

]

outputs = pipe(

messages,

max_new_tokens=256,

)

print(outputs[0]["generated_text"][-1])

```

Alternatively, you can run the model via [`Transformers Serve`](https://huggingface.co/docs/transformers/main/serving) to spin up a OpenAI-compatible webserver:

```

transformers serve

transformers chat localhost:8000 --model-name-or-path openai/gpt-oss-20b

```

[Learn more about how to use gpt-oss with Transformers.](https://cookbook.openai.com/articles/gpt-oss/run-transformers)

## vLLM

vLLM recommends using [uv](https://docs.astral.sh/uv/) for Python dependency management. You can use vLLM to spin up an OpenAI-compatible webserver. The following command will automatically download the model and start the server.

```bash

uv pip install --pre vllm==0.10.1+gptoss \

--extra-index-url https://wheels.vllm.ai/gpt-oss/ \

--extra-index-url https://download.pytorch.org/whl/nightly/cu128 \

--index-strategy unsafe-best-match

vllm serve openai/gpt-oss-20b

```

[Learn more about how to use gpt-oss with vLLM.](https://cookbook.openai.com/articles/gpt-oss/run-vllm)

## PyTorch / Triton

To learn about how to use this model with PyTorch and Triton, check out our [reference implementations in the gpt-oss repository](https://github.com/openai/gpt-oss?tab=readme-ov-file#reference-pytorch-implementation).

## Ollama

If you are trying to run gpt-oss on consumer hardware, you can use Ollama by running the following commands after [installing Ollama](https://ollama.com/download).

```bash

# gpt-oss-20b

ollama pull gpt-oss:20b

ollama run gpt-oss:20b

```

[Learn more about how to use gpt-oss with Ollama.](https://cookbook.openai.com/articles/gpt-oss/run-locally-ollama)

#### LM Studio

If you are using [LM Studio](https://lmstudio.ai/) you can use the following commands to download.