problem

stringlengths 1.19k

65.4k

| solution

stringlengths 1.19k

67.5k

| topic

stringlengths 5

80

|

|---|---|---|

---

title

intersecting_segments

---

# Search for a pair of intersecting segments

Given $n$ line segments on the plane. It is required to check whether at least two of them intersect with each other.

If the answer is yes, then print this pair of intersecting segments; it is enough to choose any of them among several answers.

The naive solution algorithm is to iterate over all pairs of segments in $O(n^2)$ and check for each pair whether they intersect or not. This article describes an algorithm with the runtime time $O(n \log n)$, which is based on the **sweep line algorithm**.

## Algorithm

Let's draw a vertical line $x = -\infty$ mentally and start moving this line to the right.

In the course of its movement, this line will meet with segments, and at each time a segment intersect with our line it intersects in exactly one point (we will assume that there are no vertical segments).

<center></center>

Thus, for each segment, at some point in time, its point will appear on the sweep line, then with the movement of the line, this point will move, and finally, at some point, the segment will disappear from the line.

We are interested in the **relative order of the segments** along the vertical.

Namely, we will store a list of segments crossing the sweep line at a given time, where the segments will be sorted by their $y$-coordinate on the sweep line.

<center></center>

This order is interesting because intersecting segments will have the same $y$-coordinate at least at one time:

<center></center>

We formulate key statements:

- To find an intersecting pair, it is sufficient to consider **only adjacent segments** at each fixed position of the sweep line.

- It is enough to consider the sweep line not in all possible real positions $(-\infty \ldots +\infty)$, but **only in those positions when new segments appear or old ones disappear**. In other words, it is enough to limit yourself only to the positions equal to the abscissas of the end points of the segments.

- When a new line segment appears, it is enough to **insert** it to the desired location in the list obtained for the previous sweep line. We should only check for the intersection of the **added segment with its immediate neighbors in the list above and below**.

- If the segment disappears, it is enough to **remove** it from the current list. After that, it is necessary **check for the intersection of the upper and lower neighbors in the list**.

- Other changes in the sequence of segments in the list, except for those described, do not exist. No other intersection checks are required.

To understand the truth of these statements, the following remarks are sufficient:

- Two disjoint segments never change their **relative order**.<br>

In fact, if one segment was first higher than the other, and then became lower, then between these two moments there was an intersection of these two segments.

- Two non-intersecting segments also cannot have the same $y$-coordinates.

- From this it follows that at the moment of the segment appearance we can find the position for this segment in the queue, and we will not have to rearrange this segment in the queue any more: **its order relative to other segments in the queue will not change**.

- Two intersecting segments at the moment of their intersection point will be neighbors of each other in the queue.

- Therefore, for finding pairs of intersecting line segments is sufficient to check the intersection of all and only those pairs of segments that sometime during the movement of the sweep line at least once were neighbors to each other. <br>

It is easy to notice that it is enough only to check the added segment with its upper and lower neighbors, as well as when removing the segment — its upper and lower neighbors (which after removal will become neighbors of each other).<br>

- It should be noted that at a fixed position of the sweep line, we must **first add all the segments** that start at this x-coordinate, and only **then remove all the segments** that end here.<br>

Thus, we do not miss the intersection of segments on the vertex: i.e. such cases when two segments have a common vertex.

- Note that **vertical segments** do not actually affect the correctness of the algorithm.<br>

These segments are distinguished by the fact that they appear and disappear at the same time. However, due to the previous comment, we know that all segments will be added to the queue first, and only then they will be deleted. Therefore, if the vertical segment intersects with some other segment opened at that moment (including the vertical one), it will be detected.<br>

**In what place of the queue to place vertical segments?** After all, a vertical segment does not have one specific $y$-coordinate, it extends for an entire segment along the $y$-coordinate. However, it is easy to understand that any coordinate from this segment can be taken as a $y$-coordinate.

Thus, the entire algorithm will perform no more than $2n$ tests on the intersection of a pair of segments, and will perform $O(n)$ operations with a queue of segments ($O(1)$ operations at the time of appearance and disappearance of each segment).

The final **asymptotic behavior of the algorithm** is thus $O(n \log n)$.

## Implementation

We present the full implementation of the described algorithm:

```cpp

const double EPS = 1E-9;

struct pt {

double x, y;

};

struct seg {

pt p, q;

int id;

double get_y(double x) const {

if (abs(p.x - q.x) < EPS)

return p.y;

return p.y + (q.y - p.y) * (x - p.x) / (q.x - p.x);

}

};

bool intersect1d(double l1, double r1, double l2, double r2) {

if (l1 > r1)

swap(l1, r1);

if (l2 > r2)

swap(l2, r2);

return max(l1, l2) <= min(r1, r2) + EPS;

}

int vec(const pt& a, const pt& b, const pt& c) {

double s = (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);

return abs(s) < EPS ? 0 : s > 0 ? +1 : -1;

}

bool intersect(const seg& a, const seg& b)

{

return intersect1d(a.p.x, a.q.x, b.p.x, b.q.x) &&

intersect1d(a.p.y, a.q.y, b.p.y, b.q.y) &&

vec(a.p, a.q, b.p) * vec(a.p, a.q, b.q) <= 0 &&

vec(b.p, b.q, a.p) * vec(b.p, b.q, a.q) <= 0;

}

bool operator<(const seg& a, const seg& b)

{

double x = max(min(a.p.x, a.q.x), min(b.p.x, b.q.x));

return a.get_y(x) < b.get_y(x) - EPS;

}

struct event {

double x;

int tp, id;

event() {}

event(double x, int tp, int id) : x(x), tp(tp), id(id) {}

bool operator<(const event& e) const {

if (abs(x - e.x) > EPS)

return x < e.x;

return tp > e.tp;

}

};

set<seg> s;

vector<set<seg>::iterator> where;

set<seg>::iterator prev(set<seg>::iterator it) {

return it == s.begin() ? s.end() : --it;

}

set<seg>::iterator next(set<seg>::iterator it) {

return ++it;

}

pair<int, int> solve(const vector<seg>& a) {

int n = (int)a.size();

vector<event> e;

for (int i = 0; i < n; ++i) {

e.push_back(event(min(a[i].p.x, a[i].q.x), +1, i));

e.push_back(event(max(a[i].p.x, a[i].q.x), -1, i));

}

sort(e.begin(), e.end());

s.clear();

where.resize(a.size());

for (size_t i = 0; i < e.size(); ++i) {

int id = e[i].id;

if (e[i].tp == +1) {

set<seg>::iterator nxt = s.lower_bound(a[id]), prv = prev(nxt);

if (nxt != s.end() && intersect(*nxt, a[id]))

return make_pair(nxt->id, id);

if (prv != s.end() && intersect(*prv, a[id]))

return make_pair(prv->id, id);

where[id] = s.insert(nxt, a[id]);

} else {

set<seg>::iterator nxt = next(where[id]), prv = prev(where[id]);

if (nxt != s.end() && prv != s.end() && intersect(*nxt, *prv))

return make_pair(prv->id, nxt->id);

s.erase(where[id]);

}

}

return make_pair(-1, -1);

}

```

The main function here is `solve()`, which returns the number of found intersecting segments, or $(-1, -1)$, if there are no intersections.

Checking for the intersection of two segments is carried out by the `intersect ()` function, using an **algorithm based on the oriented area of the triangle**.

The queue of segments is the global variable `s`, a `set<event>`. Iterators that specify the position of each segment in the queue (for convenient removal of segments from the queue) are stored in the global array `where`.

Two auxiliary functions `prev()` and `next()` are also introduced, which return iterators to the previous and next elements (or `end()`, if one does not exist).

The constant `EPS` denotes the error of comparing two real numbers (it is mainly used when checking two segments for intersection).

|

---

title

intersecting_segments

---

# Search for a pair of intersecting segments

Given $n$ line segments on the plane. It is required to check whether at least two of them intersect with each other.

If the answer is yes, then print this pair of intersecting segments; it is enough to choose any of them among several answers.

The naive solution algorithm is to iterate over all pairs of segments in $O(n^2)$ and check for each pair whether they intersect or not. This article describes an algorithm with the runtime time $O(n \log n)$, which is based on the **sweep line algorithm**.

## Algorithm

Let's draw a vertical line $x = -\infty$ mentally and start moving this line to the right.

In the course of its movement, this line will meet with segments, and at each time a segment intersect with our line it intersects in exactly one point (we will assume that there are no vertical segments).

<center></center>

Thus, for each segment, at some point in time, its point will appear on the sweep line, then with the movement of the line, this point will move, and finally, at some point, the segment will disappear from the line.

We are interested in the **relative order of the segments** along the vertical.

Namely, we will store a list of segments crossing the sweep line at a given time, where the segments will be sorted by their $y$-coordinate on the sweep line.

<center></center>

This order is interesting because intersecting segments will have the same $y$-coordinate at least at one time:

<center></center>

We formulate key statements:

- To find an intersecting pair, it is sufficient to consider **only adjacent segments** at each fixed position of the sweep line.

- It is enough to consider the sweep line not in all possible real positions $(-\infty \ldots +\infty)$, but **only in those positions when new segments appear or old ones disappear**. In other words, it is enough to limit yourself only to the positions equal to the abscissas of the end points of the segments.

- When a new line segment appears, it is enough to **insert** it to the desired location in the list obtained for the previous sweep line. We should only check for the intersection of the **added segment with its immediate neighbors in the list above and below**.

- If the segment disappears, it is enough to **remove** it from the current list. After that, it is necessary **check for the intersection of the upper and lower neighbors in the list**.

- Other changes in the sequence of segments in the list, except for those described, do not exist. No other intersection checks are required.

To understand the truth of these statements, the following remarks are sufficient:

- Two disjoint segments never change their **relative order**.<br>

In fact, if one segment was first higher than the other, and then became lower, then between these two moments there was an intersection of these two segments.

- Two non-intersecting segments also cannot have the same $y$-coordinates.

- From this it follows that at the moment of the segment appearance we can find the position for this segment in the queue, and we will not have to rearrange this segment in the queue any more: **its order relative to other segments in the queue will not change**.

- Two intersecting segments at the moment of their intersection point will be neighbors of each other in the queue.

- Therefore, for finding pairs of intersecting line segments is sufficient to check the intersection of all and only those pairs of segments that sometime during the movement of the sweep line at least once were neighbors to each other. <br>

It is easy to notice that it is enough only to check the added segment with its upper and lower neighbors, as well as when removing the segment — its upper and lower neighbors (which after removal will become neighbors of each other).<br>

- It should be noted that at a fixed position of the sweep line, we must **first add all the segments** that start at this x-coordinate, and only **then remove all the segments** that end here.<br>

Thus, we do not miss the intersection of segments on the vertex: i.e. such cases when two segments have a common vertex.

- Note that **vertical segments** do not actually affect the correctness of the algorithm.<br>

These segments are distinguished by the fact that they appear and disappear at the same time. However, due to the previous comment, we know that all segments will be added to the queue first, and only then they will be deleted. Therefore, if the vertical segment intersects with some other segment opened at that moment (including the vertical one), it will be detected.<br>

**In what place of the queue to place vertical segments?** After all, a vertical segment does not have one specific $y$-coordinate, it extends for an entire segment along the $y$-coordinate. However, it is easy to understand that any coordinate from this segment can be taken as a $y$-coordinate.

Thus, the entire algorithm will perform no more than $2n$ tests on the intersection of a pair of segments, and will perform $O(n)$ operations with a queue of segments ($O(1)$ operations at the time of appearance and disappearance of each segment).

The final **asymptotic behavior of the algorithm** is thus $O(n \log n)$.

## Implementation

We present the full implementation of the described algorithm:

```cpp

const double EPS = 1E-9;

struct pt {

double x, y;

};

struct seg {

pt p, q;

int id;

double get_y(double x) const {

if (abs(p.x - q.x) < EPS)

return p.y;

return p.y + (q.y - p.y) * (x - p.x) / (q.x - p.x);

}

};

bool intersect1d(double l1, double r1, double l2, double r2) {

if (l1 > r1)

swap(l1, r1);

if (l2 > r2)

swap(l2, r2);

return max(l1, l2) <= min(r1, r2) + EPS;

}

int vec(const pt& a, const pt& b, const pt& c) {

double s = (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);

return abs(s) < EPS ? 0 : s > 0 ? +1 : -1;

}

bool intersect(const seg& a, const seg& b)

{

return intersect1d(a.p.x, a.q.x, b.p.x, b.q.x) &&

intersect1d(a.p.y, a.q.y, b.p.y, b.q.y) &&

vec(a.p, a.q, b.p) * vec(a.p, a.q, b.q) <= 0 &&

vec(b.p, b.q, a.p) * vec(b.p, b.q, a.q) <= 0;

}

bool operator<(const seg& a, const seg& b)

{

double x = max(min(a.p.x, a.q.x), min(b.p.x, b.q.x));

return a.get_y(x) < b.get_y(x) - EPS;

}

struct event {

double x;

int tp, id;

event() {}

event(double x, int tp, int id) : x(x), tp(tp), id(id) {}

bool operator<(const event& e) const {

if (abs(x - e.x) > EPS)

return x < e.x;

return tp > e.tp;

}

};

set<seg> s;

vector<set<seg>::iterator> where;

set<seg>::iterator prev(set<seg>::iterator it) {

return it == s.begin() ? s.end() : --it;

}

set<seg>::iterator next(set<seg>::iterator it) {

return ++it;

}

pair<int, int> solve(const vector<seg>& a) {

int n = (int)a.size();

vector<event> e;

for (int i = 0; i < n; ++i) {

e.push_back(event(min(a[i].p.x, a[i].q.x), +1, i));

e.push_back(event(max(a[i].p.x, a[i].q.x), -1, i));

}

sort(e.begin(), e.end());

s.clear();

where.resize(a.size());

for (size_t i = 0; i < e.size(); ++i) {

int id = e[i].id;

if (e[i].tp == +1) {

set<seg>::iterator nxt = s.lower_bound(a[id]), prv = prev(nxt);

if (nxt != s.end() && intersect(*nxt, a[id]))

return make_pair(nxt->id, id);

if (prv != s.end() && intersect(*prv, a[id]))

return make_pair(prv->id, id);

where[id] = s.insert(nxt, a[id]);

} else {

set<seg>::iterator nxt = next(where[id]), prv = prev(where[id]);

if (nxt != s.end() && prv != s.end() && intersect(*nxt, *prv))

return make_pair(prv->id, nxt->id);

s.erase(where[id]);

}

}

return make_pair(-1, -1);

}

```

The main function here is `solve()`, which returns the number of found intersecting segments, or $(-1, -1)$, if there are no intersections.

Checking for the intersection of two segments is carried out by the `intersect ()` function, using an **algorithm based on the oriented area of the triangle**.

The queue of segments is the global variable `s`, a `set<event>`. Iterators that specify the position of each segment in the queue (for convenient removal of segments from the queue) are stored in the global array `where`.

Two auxiliary functions `prev()` and `next()` are also introduced, which return iterators to the previous and next elements (or `end()`, if one does not exist).

The constant `EPS` denotes the error of comparing two real numbers (it is mainly used when checking two segments for intersection).

## Problems

* [TIMUS 1469 No Smoking!](https://acm.timus.ru/problem.aspx?space=1&num=1469)

|

Search for a pair of intersecting segments

|

---

title

segments_intersection_checking

---

# Check if two segments intersect

You are given two segments $(a, b)$ and $(c, d)$.

You have to check if they intersect.

Of course, you may find their intersection and check if it isn't empty, but this can't be done in integers for segments with integer coordinates.

The approach described here can work in integers.

## Algorithm

Firstly, consider the case when the segments are part of the same line.

In this case it is sufficient to check if their projections on $Ox$ and $Oy$ intersect.

In the other case $a$ and $b$ must not lie on the same side of line $(c, d)$, and $c$ and $d$ must not lie on the same side of line $(a, b)$.

It can be checked with a couple of cross products.

## Implementation

The given algorithm is implemented for integer points. Of course, it can be easily modified to work with doubles.

```{.cpp file=check-segments-inter}

struct pt {

long long x, y;

pt() {}

pt(long long _x, long long _y) : x(_x), y(_y) {}

pt operator-(const pt& p) const { return pt(x - p.x, y - p.y); }

long long cross(const pt& p) const { return x * p.y - y * p.x; }

long long cross(const pt& a, const pt& b) const { return (a - *this).cross(b - *this); }

};

int sgn(const long long& x) { return x >= 0 ? x ? 1 : 0 : -1; }

bool inter1(long long a, long long b, long long c, long long d) {

if (a > b)

swap(a, b);

if (c > d)

swap(c, d);

return max(a, c) <= min(b, d);

}

bool check_inter(const pt& a, const pt& b, const pt& c, const pt& d) {

if (c.cross(a, d) == 0 && c.cross(b, d) == 0)

return inter1(a.x, b.x, c.x, d.x) && inter1(a.y, b.y, c.y, d.y);

return sgn(a.cross(b, c)) != sgn(a.cross(b, d)) &&

sgn(c.cross(d, a)) != sgn(c.cross(d, b));

}

```

|

---

title

segments_intersection_checking

---

# Check if two segments intersect

You are given two segments $(a, b)$ and $(c, d)$.

You have to check if they intersect.

Of course, you may find their intersection and check if it isn't empty, but this can't be done in integers for segments with integer coordinates.

The approach described here can work in integers.

## Algorithm

Firstly, consider the case when the segments are part of the same line.

In this case it is sufficient to check if their projections on $Ox$ and $Oy$ intersect.

In the other case $a$ and $b$ must not lie on the same side of line $(c, d)$, and $c$ and $d$ must not lie on the same side of line $(a, b)$.

It can be checked with a couple of cross products.

## Implementation

The given algorithm is implemented for integer points. Of course, it can be easily modified to work with doubles.

```{.cpp file=check-segments-inter}

struct pt {

long long x, y;

pt() {}

pt(long long _x, long long _y) : x(_x), y(_y) {}

pt operator-(const pt& p) const { return pt(x - p.x, y - p.y); }

long long cross(const pt& p) const { return x * p.y - y * p.x; }

long long cross(const pt& a, const pt& b) const { return (a - *this).cross(b - *this); }

};

int sgn(const long long& x) { return x >= 0 ? x ? 1 : 0 : -1; }

bool inter1(long long a, long long b, long long c, long long d) {

if (a > b)

swap(a, b);

if (c > d)

swap(c, d);

return max(a, c) <= min(b, d);

}

bool check_inter(const pt& a, const pt& b, const pt& c, const pt& d) {

if (c.cross(a, d) == 0 && c.cross(b, d) == 0)

return inter1(a.x, b.x, c.x, d.x) && inter1(a.y, b.y, c.y, d.y);

return sgn(a.cross(b, c)) != sgn(a.cross(b, d)) &&

sgn(c.cross(d, a)) != sgn(c.cross(d, b));

}

```

|

Check if two segments intersect

|

---

title

- Original

---

# Convex hull trick and Li Chao tree

Consider the following problem. There are $n$ cities. You want to travel from city $1$ to city $n$ by car. To do this you have to buy some gasoline. It is known that a liter of gasoline costs $cost_k$ in the $k^{th}$ city. Initially your fuel tank is empty and you spend one liter of gasoline per kilometer. Cities are located on the same line in ascending order with $k^{th}$ city having coordinate $x_k$. Also you have to pay $toll_k$ to enter $k^{th}$ city. Your task is to make the trip with minimum possible cost. It's obvious that the solution can be calculated via dynamic programming:

$$dp_i = toll_i+\min\limits_{j<i}(cost_j \cdot (x_i - x_j)+dp_j)$$

Naive approach will give you $O(n^2)$ complexity which can be improved to $O(n \log n)$ or $O(n \log [C \varepsilon^{-1}])$ where $C$ is largest possible $|x_i|$ and $\varepsilon$ is precision with which $x_i$ is considered ($\varepsilon = 1$ for integers which is usually the case). To do this one should note that the problem can be reduced to adding linear functions $k \cdot x + b$ to the set and finding minimum value of the functions in some particular point $x$. There are two main approaches one can use here.

## Convex hull trick

The idea of this approach is to maintain a lower convex hull of linear functions.

Actually it would be a bit more convenient to consider them not as linear functions, but as points $(k;b)$ on the plane such that we will have to find the point which has the least dot product with a given point $(x;1)$, that is, for this point $kx+b$ is minimized which is the same as initial problem.

Such minimum will necessarily be on lower convex envelope of these points as can be seen below:

<center>  </center>

One has to keep points on the convex hull and normal vectors of the hull's edges.

When you have a $(x;1)$ query you'll have to find the normal vector closest to it in terms of angles between them, then the optimum linear function will correspond to one of its endpoints.

To see that, one should note that points having a constant dot product with $(x;1)$ lie on a line which is orthogonal to $(x;1)$, so the optimum linear function will be the one in which tangent to convex hull which is collinear with normal to $(x;1)$ touches the hull.

This point is the one such that normals of edges lying to the left and to the right of it are headed in different sides of $(x;1)$.

This approach is useful when queries of adding linear functions are monotone in terms of $k$ or if we work offline, i.e. we may firstly add all linear functions and answer queries afterwards.

So we cannot solve the cities/gasoline problems using this way.

That would require handling online queries.

When it comes to deal with online queries however, things will go tough and one will have to use some kind of set data structure to implement a proper convex hull.

Online approach will however not be considered in this article due to its hardness and because second approach (which is Li Chao tree) allows to solve the problem way more simply.

Worth mentioning that one can still use this approach online without complications by square-root-decomposition.

That is, rebuild convex hull from scratch each $\sqrt n$ new lines.

To implement this approach one should begin with some geometric utility functions, here we suggest to use the C++ complex number type.

```cpp

typedef int ftype;

typedef complex<ftype> point;

#define x real

#define y imag

ftype dot(point a, point b) {

return (conj(a) * b).x();

}

ftype cross(point a, point b) {

return (conj(a) * b).y();

}

```

Here we will assume that when linear functions are added, their $k$ only increases and we want to find minimum values.

We will keep points in vector $hull$ and normal vectors in vector $vecs$.

When we add a new point, we have to look at the angle formed between last edge in convex hull and vector from last point in convex hull to new point.

This angle has to be directed counter-clockwise, that is the dot product of the last normal vector in the hull (directed inside hull) and the vector from the last point to the new one has to be non-negative.

As long as this isn't true, we should erase the last point in the convex hull alongside with the corresponding edge.

```cpp

vector<point> hull, vecs;

void add_line(ftype k, ftype b) {

point nw = {k, b};

while(!vecs.empty() && dot(vecs.back(), nw - hull.back()) < 0) {

hull.pop_back();

vecs.pop_back();

}

if(!hull.empty()) {

vecs.push_back(1i * (nw - hull.back()));

}

hull.push_back(nw);

}

```

Now to get the minimum value in some point we will find the first normal vector in the convex hull that is directed counter-clockwise from $(x;1)$. The left endpoint of such edge will be the answer. To check if vector $a$ is not directed counter-clockwise of vector $b$, we should check if their cross product $[a,b]$ is positive.

```cpp

int get(ftype x) {

point query = {x, 1};

auto it = lower_bound(vecs.begin(), vecs.end(), query, [](point a, point b) {

return cross(a, b) > 0;

});

return dot(query, hull[it - vecs.begin()]);

}

```

## Li Chao tree

Assume you're given a set of functions such that each two can intersect at most once. Let's keep in each vertex of a segment tree some function in such way, that if we go from root to the leaf it will be guaranteed that one of the functions we met on the path will be the one giving the minimum value in that leaf. Let's see how to construct it.

Assume we're in some vertex corresponding to half-segment $[l,r)$ and the function $f_{old}$ is kept there and we add the function $f_{new}$. Then the intersection point will be either in $[l;m)$ or in $[m;r)$ where $m=\left\lfloor\tfrac{l+r}{2}\right\rfloor$. We can efficiently find that out by comparing the values of the functions in points $l$ and $m$. If the dominating function changes, then it is in $[l;m)$ otherwise it is in $[m;r)$. Now for the half of the segment with no intersection we will pick the lower function and write it in the current vertex. You can see that it will always be the one which is lower in point $m$. After that we recursively go to the other half of the segment with the function which was the upper one. As you can see this will keep correctness on the first half of segment and in the other one correctness will be maintained during the recursive call. Thus we can add functions and check the minimum value in the point in $O(\log [C\varepsilon^{-1}])$.

Here is the illustration of what is going on in the vertex when we add new function:

<center></center>

Let's go to implementation now. Once again we will use complex numbers to keep linear functions.

```{.cpp file=lichaotree_line_definition}

typedef long long ftype;

typedef complex<ftype> point;

#define x real

#define y imag

ftype dot(point a, point b) {

return (conj(a) * b).x();

}

ftype f(point a, ftype x) {

return dot(a, {x, 1});

}

```

We will keep functions in the array $line$ and use binary indexing of the segment tree. If you want to use it on large numbers or doubles, you should use a dynamic segment tree.

The segment tree should be initialized with default values, e.g. with lines $0x + \infty$.

```{.cpp file=lichaotree_addline}

const int maxn = 2e5;

point line[4 * maxn];

void add_line(point nw, int v = 1, int l = 0, int r = maxn) {

int m = (l + r) / 2;

bool lef = f(nw, l) < f(line[v], l);

bool mid = f(nw, m) < f(line[v], m);

if(mid) {

swap(line[v], nw);

}

if(r - l == 1) {

return;

} else if(lef != mid) {

add_line(nw, 2 * v, l, m);

} else {

add_line(nw, 2 * v + 1, m, r);

}

}

```

Now to get the minimum in some point $x$ we simply choose the minimum value along the path to the point.

```{.cpp file=lichaotree_getminimum}

ftype get(int x, int v = 1, int l = 0, int r = maxn) {

int m = (l + r) / 2;

if(r - l == 1) {

return f(line[v], x);

} else if(x < m) {

return min(f(line[v], x), get(x, 2 * v, l, m));

} else {

return min(f(line[v], x), get(x, 2 * v + 1, m, r));

}

}

```

|

---

title

- Original

---

# Convex hull trick and Li Chao tree

Consider the following problem. There are $n$ cities. You want to travel from city $1$ to city $n$ by car. To do this you have to buy some gasoline. It is known that a liter of gasoline costs $cost_k$ in the $k^{th}$ city. Initially your fuel tank is empty and you spend one liter of gasoline per kilometer. Cities are located on the same line in ascending order with $k^{th}$ city having coordinate $x_k$. Also you have to pay $toll_k$ to enter $k^{th}$ city. Your task is to make the trip with minimum possible cost. It's obvious that the solution can be calculated via dynamic programming:

$$dp_i = toll_i+\min\limits_{j<i}(cost_j \cdot (x_i - x_j)+dp_j)$$

Naive approach will give you $O(n^2)$ complexity which can be improved to $O(n \log n)$ or $O(n \log [C \varepsilon^{-1}])$ where $C$ is largest possible $|x_i|$ and $\varepsilon$ is precision with which $x_i$ is considered ($\varepsilon = 1$ for integers which is usually the case). To do this one should note that the problem can be reduced to adding linear functions $k \cdot x + b$ to the set and finding minimum value of the functions in some particular point $x$. There are two main approaches one can use here.

## Convex hull trick

The idea of this approach is to maintain a lower convex hull of linear functions.

Actually it would be a bit more convenient to consider them not as linear functions, but as points $(k;b)$ on the plane such that we will have to find the point which has the least dot product with a given point $(x;1)$, that is, for this point $kx+b$ is minimized which is the same as initial problem.

Such minimum will necessarily be on lower convex envelope of these points as can be seen below:

<center>  </center>

One has to keep points on the convex hull and normal vectors of the hull's edges.

When you have a $(x;1)$ query you'll have to find the normal vector closest to it in terms of angles between them, then the optimum linear function will correspond to one of its endpoints.

To see that, one should note that points having a constant dot product with $(x;1)$ lie on a line which is orthogonal to $(x;1)$, so the optimum linear function will be the one in which tangent to convex hull which is collinear with normal to $(x;1)$ touches the hull.

This point is the one such that normals of edges lying to the left and to the right of it are headed in different sides of $(x;1)$.

This approach is useful when queries of adding linear functions are monotone in terms of $k$ or if we work offline, i.e. we may firstly add all linear functions and answer queries afterwards.

So we cannot solve the cities/gasoline problems using this way.

That would require handling online queries.

When it comes to deal with online queries however, things will go tough and one will have to use some kind of set data structure to implement a proper convex hull.

Online approach will however not be considered in this article due to its hardness and because second approach (which is Li Chao tree) allows to solve the problem way more simply.

Worth mentioning that one can still use this approach online without complications by square-root-decomposition.

That is, rebuild convex hull from scratch each $\sqrt n$ new lines.

To implement this approach one should begin with some geometric utility functions, here we suggest to use the C++ complex number type.

```cpp

typedef int ftype;

typedef complex<ftype> point;

#define x real

#define y imag

ftype dot(point a, point b) {

return (conj(a) * b).x();

}

ftype cross(point a, point b) {

return (conj(a) * b).y();

}

```

Here we will assume that when linear functions are added, their $k$ only increases and we want to find minimum values.

We will keep points in vector $hull$ and normal vectors in vector $vecs$.

When we add a new point, we have to look at the angle formed between last edge in convex hull and vector from last point in convex hull to new point.

This angle has to be directed counter-clockwise, that is the dot product of the last normal vector in the hull (directed inside hull) and the vector from the last point to the new one has to be non-negative.

As long as this isn't true, we should erase the last point in the convex hull alongside with the corresponding edge.

```cpp

vector<point> hull, vecs;

void add_line(ftype k, ftype b) {

point nw = {k, b};

while(!vecs.empty() && dot(vecs.back(), nw - hull.back()) < 0) {

hull.pop_back();

vecs.pop_back();

}

if(!hull.empty()) {

vecs.push_back(1i * (nw - hull.back()));

}

hull.push_back(nw);

}

```

Now to get the minimum value in some point we will find the first normal vector in the convex hull that is directed counter-clockwise from $(x;1)$. The left endpoint of such edge will be the answer. To check if vector $a$ is not directed counter-clockwise of vector $b$, we should check if their cross product $[a,b]$ is positive.

```cpp

int get(ftype x) {

point query = {x, 1};

auto it = lower_bound(vecs.begin(), vecs.end(), query, [](point a, point b) {

return cross(a, b) > 0;

});

return dot(query, hull[it - vecs.begin()]);

}

```

## Li Chao tree

Assume you're given a set of functions such that each two can intersect at most once. Let's keep in each vertex of a segment tree some function in such way, that if we go from root to the leaf it will be guaranteed that one of the functions we met on the path will be the one giving the minimum value in that leaf. Let's see how to construct it.

Assume we're in some vertex corresponding to half-segment $[l,r)$ and the function $f_{old}$ is kept there and we add the function $f_{new}$. Then the intersection point will be either in $[l;m)$ or in $[m;r)$ where $m=\left\lfloor\tfrac{l+r}{2}\right\rfloor$. We can efficiently find that out by comparing the values of the functions in points $l$ and $m$. If the dominating function changes, then it is in $[l;m)$ otherwise it is in $[m;r)$. Now for the half of the segment with no intersection we will pick the lower function and write it in the current vertex. You can see that it will always be the one which is lower in point $m$. After that we recursively go to the other half of the segment with the function which was the upper one. As you can see this will keep correctness on the first half of segment and in the other one correctness will be maintained during the recursive call. Thus we can add functions and check the minimum value in the point in $O(\log [C\varepsilon^{-1}])$.

Here is the illustration of what is going on in the vertex when we add new function:

<center></center>

Let's go to implementation now. Once again we will use complex numbers to keep linear functions.

```{.cpp file=lichaotree_line_definition}

typedef long long ftype;

typedef complex<ftype> point;

#define x real

#define y imag

ftype dot(point a, point b) {

return (conj(a) * b).x();

}

ftype f(point a, ftype x) {

return dot(a, {x, 1});

}

```

We will keep functions in the array $line$ and use binary indexing of the segment tree. If you want to use it on large numbers or doubles, you should use a dynamic segment tree.

The segment tree should be initialized with default values, e.g. with lines $0x + \infty$.

```{.cpp file=lichaotree_addline}

const int maxn = 2e5;

point line[4 * maxn];

void add_line(point nw, int v = 1, int l = 0, int r = maxn) {

int m = (l + r) / 2;

bool lef = f(nw, l) < f(line[v], l);

bool mid = f(nw, m) < f(line[v], m);

if(mid) {

swap(line[v], nw);

}

if(r - l == 1) {

return;

} else if(lef != mid) {

add_line(nw, 2 * v, l, m);

} else {

add_line(nw, 2 * v + 1, m, r);

}

}

```

Now to get the minimum in some point $x$ we simply choose the minimum value along the path to the point.

```{.cpp file=lichaotree_getminimum}

ftype get(int x, int v = 1, int l = 0, int r = maxn) {

int m = (l + r) / 2;

if(r - l == 1) {

return f(line[v], x);

} else if(x < m) {

return min(f(line[v], x), get(x, 2 * v, l, m));

} else {

return min(f(line[v], x), get(x, 2 * v + 1, m, r));

}

}

```

## Problems

* [Codebreaker - TROUBLES](https://codeforces.com/gym/103536/problem/B) (simple application of Convex Hull Trick after a couple of observations)

* [CS Academy - Squared Ends](https://csacademy.com/contest/archive/task/squared-ends)

* [Codeforces - Escape Through Leaf](http://codeforces.com/contest/932/problem/F)

* [CodeChef - Polynomials](https://www.codechef.com/NOV17/problems/POLY)

* [Codeforces - Kalila and Dimna in the Logging Industry](https://codeforces.com/problemset/problem/319/C)

* [Codeforces - Product Sum](https://codeforces.com/problemset/problem/631/E)

* [Codeforces - Bear and Bowling 4](https://codeforces.com/problemset/problem/660/F)

* [APIO 2010 - Commando](https://dmoj.ca/problem/apio10p1)

|

Convex hull trick and Li Chao tree

|

---

title

- Original

---

# Basic Geometry

In this article we will consider basic operations on points in Euclidean space which maintains the foundation of the whole analytical geometry.

We will consider for each point $\mathbf r$ the vector $\vec{\mathbf r}$ directed from $\mathbf 0$ to $\mathbf r$.

Later we will not distinguish between $\mathbf r$ and $\vec{\mathbf r}$ and use the term **point** as a synonym for **vector**.

## Linear operations

Both 2D and 3D points maintain linear space, which means that for them sum of points and multiplication of point by some number are defined. Here are those basic implementations for 2D:

```{.cpp file=point2d}

struct point2d {

ftype x, y;

point2d() {}

point2d(ftype x, ftype y): x(x), y(y) {}

point2d& operator+=(const point2d &t) {

x += t.x;

y += t.y;

return *this;

}

point2d& operator-=(const point2d &t) {

x -= t.x;

y -= t.y;

return *this;

}

point2d& operator*=(ftype t) {

x *= t;

y *= t;

return *this;

}

point2d& operator/=(ftype t) {

x /= t;

y /= t;

return *this;

}

point2d operator+(const point2d &t) const {

return point2d(*this) += t;

}

point2d operator-(const point2d &t) const {

return point2d(*this) -= t;

}

point2d operator*(ftype t) const {

return point2d(*this) *= t;

}

point2d operator/(ftype t) const {

return point2d(*this) /= t;

}

};

point2d operator*(ftype a, point2d b) {

return b * a;

}

```

And 3D points:

```{.cpp file=point3d}

struct point3d {

ftype x, y, z;

point3d() {}

point3d(ftype x, ftype y, ftype z): x(x), y(y), z(z) {}

point3d& operator+=(const point3d &t) {

x += t.x;

y += t.y;

z += t.z;

return *this;

}

point3d& operator-=(const point3d &t) {

x -= t.x;

y -= t.y;

z -= t.z;

return *this;

}

point3d& operator*=(ftype t) {

x *= t;

y *= t;

z *= t;

return *this;

}

point3d& operator/=(ftype t) {

x /= t;

y /= t;

z /= t;

return *this;

}

point3d operator+(const point3d &t) const {

return point3d(*this) += t;

}

point3d operator-(const point3d &t) const {

return point3d(*this) -= t;

}

point3d operator*(ftype t) const {

return point3d(*this) *= t;

}

point3d operator/(ftype t) const {

return point3d(*this) /= t;

}

};

point3d operator*(ftype a, point3d b) {

return b * a;

}

```

Here `ftype` is some type used for coordinates, usually `int`, `double` or `long long`.

## Dot product

### Definition

The dot (or scalar) product $\mathbf a \cdot \mathbf b$ for vectors $\mathbf a$ and $\mathbf b$ can be defined in two identical ways.

Geometrically it is product of the length of the first vector by the length of the projection of the second vector onto the first one.

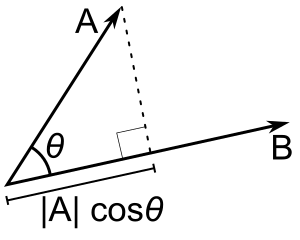

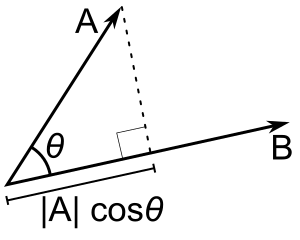

As you may see from the image below this projection is nothing but $|\mathbf a| \cos \theta$ where $\theta$ is the angle between $\mathbf a$ and $\mathbf b$. Thus $\mathbf a\cdot \mathbf b = |\mathbf a| \cos \theta \cdot |\mathbf b|$.

<center></center>

The dot product holds some notable properties:

1. $\mathbf a \cdot \mathbf b = \mathbf b \cdot \mathbf a$

2. $(\alpha \cdot \mathbf a)\cdot \mathbf b = \alpha \cdot (\mathbf a \cdot \mathbf b)$

3. $(\mathbf a + \mathbf b)\cdot \mathbf c = \mathbf a \cdot \mathbf c + \mathbf b \cdot \mathbf c$

I.e. it is a commutative function which is linear with respect to both arguments.

Let's denote the unit vectors as

$$\mathbf e_x = \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix}, \mathbf e_y = \begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix}, \mathbf e_z = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix}.$$

With this notation we can write the vector $\mathbf r = (x;y;z)$ as $r = x \cdot \mathbf e_x + y \cdot \mathbf e_y + z \cdot \mathbf e_z$.

And since for unit vectors

$$\mathbf e_x\cdot \mathbf e_x = \mathbf e_y\cdot \mathbf e_y = \mathbf e_z\cdot \mathbf e_z = 1,\\

\mathbf e_x\cdot \mathbf e_y = \mathbf e_y\cdot \mathbf e_z = \mathbf e_z\cdot \mathbf e_x = 0$$

we can see that in terms of coordinates for $\mathbf a = (x_1;y_1;z_1)$ and $\mathbf b = (x_2;y_2;z_2)$ holds

$$\mathbf a\cdot \mathbf b = (x_1 \cdot \mathbf e_x + y_1 \cdot\mathbf e_y + z_1 \cdot\mathbf e_z)\cdot( x_2 \cdot\mathbf e_x + y_2 \cdot\mathbf e_y + z_2 \cdot\mathbf e_z) = x_1 x_2 + y_1 y_2 + z_1 z_2$$

That is also the algebraic definition of the dot product.

From this we can write functions which calculate it.

```{.cpp file=dotproduct}

ftype dot(point2d a, point2d b) {

return a.x * b.x + a.y * b.y;

}

ftype dot(point3d a, point3d b) {

return a.x * b.x + a.y * b.y + a.z * b.z;

}

```

When solving problems one should use algebraic definition to calculate dot products, but keep in mind geometric definition and properties to use it.

### Properties

We can define many geometrical properties via the dot product.

For example

1. Norm of $\mathbf a$ (squared length): $|\mathbf a|^2 = \mathbf a\cdot \mathbf a$

2. Length of $\mathbf a$: $|\mathbf a| = \sqrt{\mathbf a\cdot \mathbf a}$

3. Projection of $\mathbf a$ onto $\mathbf b$: $\dfrac{\mathbf a\cdot\mathbf b}{|\mathbf b|}$

4. Angle between vectors: $\arccos \left(\dfrac{\mathbf a\cdot \mathbf b}{|\mathbf a| \cdot |\mathbf b|}\right)$

5. From the previous point we may see that the dot product is positive if the angle between them is acute, negative if it is obtuse and it equals zero if they are orthogonal, i.e. they form a right angle.

Note that all these functions do not depend on the number of dimensions, hence they will be the same for the 2D and 3D case:

```{.cpp file=dotproperties}

ftype norm(point2d a) {

return dot(a, a);

}

double abs(point2d a) {

return sqrt(norm(a));

}

double proj(point2d a, point2d b) {

return dot(a, b) / abs(b);

}

double angle(point2d a, point2d b) {

return acos(dot(a, b) / abs(a) / abs(b));

}

```

To see the next important property we should take a look at the set of points $\mathbf r$ for which $\mathbf r\cdot \mathbf a = C$ for some fixed constant $C$.

You can see that this set of points is exactly the set of points for which the projection onto $\mathbf a$ is the point $C \cdot \dfrac{\mathbf a}{|\mathbf a|}$ and they form a hyperplane orthogonal to $\mathbf a$.

You can see the vector $\mathbf a$ alongside with several such vectors having same dot product with it in 2D on the picture below:

<center></center>

In 2D these vectors will form a line, in 3D they will form a plane.

Note that this result allows us to define a line in 2D as $\mathbf r\cdot \mathbf n=C$ or $(\mathbf r - \mathbf r_0)\cdot \mathbf n=0$ where $\mathbf n$ is vector orthogonal to the line and $\mathbf r_0$ is any vector already present on the line and $C = \mathbf r_0\cdot \mathbf n$.

In the same manner a plane can be defined in 3D.

## Cross product

### Definition

Assume you have three vectors $\mathbf a$, $\mathbf b$ and $\mathbf c$ in 3D space joined in a parallelepiped as in the picture below:

<center></center>

How would you calculate its volume?

From school we know that we should multiply the area of the base with the height, which is projection of $\mathbf a$ onto direction orthogonal to base.

That means that if we define $\mathbf b \times \mathbf c$ as the vector which is orthogonal to both $\mathbf b$ and $\mathbf c$ and which length is equal to the area of the parallelogram formed by $\mathbf b$ and $\mathbf c$ then $|\mathbf a\cdot (\mathbf b\times\mathbf c)|$ will be equal to the volume of the parallelepiped.

For integrity we will say that $\mathbf b\times \mathbf c$ will be always directed in such way that the rotation from the vector $\mathbf b$ to the vector $\mathbf c$ from the point of $\mathbf b\times \mathbf c$ is always counter-clockwise (see the picture below).

<center></center>

This defines the cross (or vector) product $\mathbf b\times \mathbf c$ of the vectors $\mathbf b$ and $\mathbf c$ and the triple product $\mathbf a\cdot(\mathbf b\times \mathbf c)$ of the vectors $\mathbf a$, $\mathbf b$ and $\mathbf c$.

Some notable properties of cross and triple products:

1. $\mathbf a\times \mathbf b = -\mathbf b\times \mathbf a$

2. $(\alpha \cdot \mathbf a)\times \mathbf b = \alpha \cdot (\mathbf a\times \mathbf b)$

3. For any $\mathbf b$ and $\mathbf c$ there is exactly one vector $\mathbf r$ such that $\mathbf a\cdot (\mathbf b\times \mathbf c) = \mathbf a\cdot\mathbf r$ for any vector $\mathbf a$. <br>Indeed if there are two such vectors $\mathbf r_1$ and $\mathbf r_2$ then $\mathbf a\cdot (\mathbf r_1 - \mathbf r_2)=0$ for all vectors $\mathbf a$ which is possible only when $\mathbf r_1 = \mathbf r_2$.

4. $\mathbf a\cdot (\mathbf b\times \mathbf c) = \mathbf b\cdot (\mathbf c\times \mathbf a) = -\mathbf a\cdot( \mathbf c\times \mathbf b)$

5. $(\mathbf a + \mathbf b)\times \mathbf c = \mathbf a\times \mathbf c + \mathbf b\times \mathbf c$.

Indeed for all vectors $\mathbf r$ the chain of equations holds:

\[\mathbf r\cdot( (\mathbf a + \mathbf b)\times \mathbf c) = (\mathbf a + \mathbf b) \cdot (\mathbf c\times \mathbf r) = \mathbf a \cdot(\mathbf c\times \mathbf r) + \mathbf b\cdot(\mathbf c\times \mathbf r) = \mathbf r\cdot (\mathbf a\times \mathbf c) + \mathbf r\cdot(\mathbf b\times \mathbf c) = \mathbf r\cdot(\mathbf a\times \mathbf c + \mathbf b\times \mathbf c)\]

Which proves $(\mathbf a + \mathbf b)\times \mathbf c = \mathbf a\times \mathbf c + \mathbf b\times \mathbf c$ due to point 3.

6. $|\mathbf a\times \mathbf b|=|\mathbf a| \cdot |\mathbf b| \sin \theta$ where $\theta$ is angle between $\mathbf a$ and $\mathbf b$, since $|\mathbf a\times \mathbf b|$ equals to the area of the parallelogram formed by $\mathbf a$ and $\mathbf b$.

Given all this and that the following equation holds for the unit vectors

$$\mathbf e_x\times \mathbf e_x = \mathbf e_y\times \mathbf e_y = \mathbf e_z\times \mathbf e_z = \mathbf 0,\\

\mathbf e_x\times \mathbf e_y = \mathbf e_z,~\mathbf e_y\times \mathbf e_z = \mathbf e_x,~\mathbf e_z\times \mathbf e_x = \mathbf e_y$$

we can calculate the cross product of $\mathbf a = (x_1;y_1;z_1)$ and $\mathbf b = (x_2;y_2;z_2)$ in coordinate form:

$$\mathbf a\times \mathbf b = (x_1 \cdot \mathbf e_x + y_1 \cdot \mathbf e_y + z_1 \cdot \mathbf e_z)\times (x_2 \cdot \mathbf e_x + y_2 \cdot \mathbf e_y + z_2 \cdot \mathbf e_z) =$$

$$(y_1 z_2 - z_1 y_2)\mathbf e_x + (z_1 x_2 - x_1 z_2)\mathbf e_y + (x_1 y_2 - y_1 x_2)$$

Which also can be written in the more elegant form:

$$\mathbf a\times \mathbf b = \begin{vmatrix}\mathbf e_x & \mathbf e_y & \mathbf e_z \\ x_1 & y_1 & z_1 \\ x_2 & y_2 & z_2 \end{vmatrix},~a\cdot(b\times c) = \begin{vmatrix} x_1 & y_1 & z_1 \\ x_2 & y_2 & z_2 \\ x_3 & y_3 & z_3 \end{vmatrix}$$

Here $| \cdot |$ stands for the determinant of a matrix.

Some kind of cross product (namely the pseudo-scalar product) can also be implemented in the 2D case.

If we would like to calculate the area of parallelogram formed by vectors $\mathbf a$ and $\mathbf b$ we would compute $|\mathbf e_z\cdot(\mathbf a\times \mathbf b)| = |x_1 y_2 - y_1 x_2|$.

Another way to obtain the same result is to multiply $|\mathbf a|$ (base of parallelogram) with the height, which is the projection of vector $\mathbf b$ onto vector $\mathbf a$ rotated by $90^\circ$ which in turn is $\widehat{\mathbf a}=(-y_1;x_1)$.

That is, to calculate $|\widehat{\mathbf a}\cdot\mathbf b|=|x_1y_2 - y_1 x_2|$.

If we will take the sign into consideration then the area will be positive if the rotation from $\mathbf a$ to $\mathbf b$ (i.e. from the view of the point of $\mathbf e_z$) is performed counter-clockwise and negative otherwise.

That defines the pseudo-scalar product.

Note that it also equals $|\mathbf a| \cdot |\mathbf b| \sin \theta$ where $\theta$ is angle from $\mathbf a$ to $\mathbf b$ count counter-clockwise (and negative if rotation is clockwise).

Let's implement all this stuff!

```{.cpp file=crossproduct}

point3d cross(point3d a, point3d b) {

return point3d(a.y * b.z - a.z * b.y,

a.z * b.x - a.x * b.z,

a.x * b.y - a.y * b.x);

}

ftype triple(point3d a, point3d b, point3d c) {

return dot(a, cross(b, c));

}

ftype cross(point2d a, point2d b) {

return a.x * b.y - a.y * b.x;

}

```

### Properties

As for the cross product, it equals to the zero vector iff the vectors $\mathbf a$ and $\mathbf b$ are collinear (they form a common line, i.e. they are parallel).

The same thing holds for the triple product, it is equal to zero iff the vectors $\mathbf a$, $\mathbf b$ and $\mathbf c$ are coplanar (they form a common plane).

From this we can obtain universal equations defining lines and planes.

A line can be defined via its direction vector $\mathbf d$ and an initial point $\mathbf r_0$ or by two points $\mathbf a$ and $\mathbf b$.

It is defined as $(\mathbf r - \mathbf r_0)\times\mathbf d=0$ or as $(\mathbf r - \mathbf a)\times (\mathbf b - \mathbf a) = 0$.

As for planes, it can be defined by three points $\mathbf a$, $\mathbf b$ and $\mathbf c$ as $(\mathbf r - \mathbf a)\cdot((\mathbf b - \mathbf a)\times (\mathbf c - \mathbf a))=0$ or by initial point $\mathbf r_0$ and two direction vectors lying in this plane $\mathbf d_1$ and $\mathbf d_2$: $(\mathbf r - \mathbf r_0)\cdot(\mathbf d_1\times \mathbf d_2)=0$.

In 2D the pseudo-scalar product also may be used to check the orientation between two vectors because it is positive if the rotation from the first to the second vector is clockwise and negative otherwise.

And, of course, it can be used to calculate areas of polygons, which is described in a different article.

A triple product can be used for the same purpose in 3D space.

## Exercises

### Line intersection

There are many possible ways to define a line in 2D and you shouldn't hesitate to combine them.

For example we have two lines and we want to find their intersection points.

We can say that all points from first line can be parameterized as $\mathbf r = \mathbf a_1 + t \cdot \mathbf d_1$ where $\mathbf a_1$ is initial point, $\mathbf d_1$ is direction and $t$ is some real parameter.

As for second line all its points must satisfy $(\mathbf r - \mathbf a_2)\times \mathbf d_2=0$. From this we can easily find parameter $t$:

$$(\mathbf a_1 + t \cdot \mathbf d_1 - \mathbf a_2)\times \mathbf d_2=0 \quad\Rightarrow\quad t = \dfrac{(\mathbf a_2 - \mathbf a_1)\times\mathbf d_2}{\mathbf d_1\times \mathbf d_2}$$

Let's implement function to intersect two lines.

```{.cpp file=basic_line_intersection}

point2d intersect(point2d a1, point2d d1, point2d a2, point2d d2) {

return a1 + cross(a2 - a1, d2) / cross(d1, d2) * d1;

}

```

### Planes intersection

However sometimes it might be hard to use some geometric insights.

For example, you're given three planes defined by initial points $\mathbf a_i$ and directions $\mathbf d_i$ and you want to find their intersection point.

You may note that you just have to solve the system of equations:

$$\begin{cases}\mathbf r\cdot \mathbf n_1 = \mathbf a_1\cdot \mathbf n_1, \\ \mathbf r\cdot \mathbf n_2 = \mathbf a_2\cdot \mathbf n_2, \\ \mathbf r\cdot \mathbf n_3 = \mathbf a_3\cdot \mathbf n_3\end{cases}$$

Instead of thinking on geometric approach, you can work out an algebraic one which can be obtained immediately.

For example, given that you already implemented a point class, it will be easy for you to solve this system using Cramer's rule because the triple product is simply the determinant of the matrix obtained from the vectors being its columns:

```{.cpp file=plane_intersection}

point3d intersect(point3d a1, point3d n1, point3d a2, point3d n2, point3d a3, point3d n3) {

point3d x(n1.x, n2.x, n3.x);

point3d y(n1.y, n2.y, n3.y);

point3d z(n1.z, n2.z, n3.z);

point3d d(dot(a1, n1), dot(a2, n2), dot(a3, n3));

return point3d(triple(d, y, z),

triple(x, d, z),

triple(x, y, d)) / triple(n1, n2, n3);

}

```

Now you may try to find out approaches for common geometric operations yourself to get used to all this stuff.

|

---

title

- Original

---

# Basic Geometry

In this article we will consider basic operations on points in Euclidean space which maintains the foundation of the whole analytical geometry.

We will consider for each point $\mathbf r$ the vector $\vec{\mathbf r}$ directed from $\mathbf 0$ to $\mathbf r$.

Later we will not distinguish between $\mathbf r$ and $\vec{\mathbf r}$ and use the term **point** as a synonym for **vector**.

## Linear operations

Both 2D and 3D points maintain linear space, which means that for them sum of points and multiplication of point by some number are defined. Here are those basic implementations for 2D:

```{.cpp file=point2d}

struct point2d {

ftype x, y;

point2d() {}

point2d(ftype x, ftype y): x(x), y(y) {}

point2d& operator+=(const point2d &t) {

x += t.x;

y += t.y;

return *this;

}

point2d& operator-=(const point2d &t) {

x -= t.x;

y -= t.y;

return *this;

}

point2d& operator*=(ftype t) {

x *= t;

y *= t;

return *this;

}

point2d& operator/=(ftype t) {

x /= t;

y /= t;

return *this;

}

point2d operator+(const point2d &t) const {

return point2d(*this) += t;

}

point2d operator-(const point2d &t) const {

return point2d(*this) -= t;

}

point2d operator*(ftype t) const {

return point2d(*this) *= t;

}

point2d operator/(ftype t) const {

return point2d(*this) /= t;

}

};

point2d operator*(ftype a, point2d b) {

return b * a;

}

```

And 3D points:

```{.cpp file=point3d}

struct point3d {

ftype x, y, z;

point3d() {}

point3d(ftype x, ftype y, ftype z): x(x), y(y), z(z) {}

point3d& operator+=(const point3d &t) {

x += t.x;

y += t.y;

z += t.z;

return *this;

}

point3d& operator-=(const point3d &t) {

x -= t.x;

y -= t.y;

z -= t.z;

return *this;

}

point3d& operator*=(ftype t) {

x *= t;

y *= t;

z *= t;

return *this;

}

point3d& operator/=(ftype t) {

x /= t;

y /= t;

z /= t;

return *this;

}

point3d operator+(const point3d &t) const {

return point3d(*this) += t;

}

point3d operator-(const point3d &t) const {

return point3d(*this) -= t;

}

point3d operator*(ftype t) const {

return point3d(*this) *= t;

}

point3d operator/(ftype t) const {

return point3d(*this) /= t;

}

};

point3d operator*(ftype a, point3d b) {

return b * a;

}

```

Here `ftype` is some type used for coordinates, usually `int`, `double` or `long long`.

## Dot product

### Definition

The dot (or scalar) product $\mathbf a \cdot \mathbf b$ for vectors $\mathbf a$ and $\mathbf b$ can be defined in two identical ways.

Geometrically it is product of the length of the first vector by the length of the projection of the second vector onto the first one.

As you may see from the image below this projection is nothing but $|\mathbf a| \cos \theta$ where $\theta$ is the angle between $\mathbf a$ and $\mathbf b$. Thus $\mathbf a\cdot \mathbf b = |\mathbf a| \cos \theta \cdot |\mathbf b|$.

<center></center>

The dot product holds some notable properties:

1. $\mathbf a \cdot \mathbf b = \mathbf b \cdot \mathbf a$

2. $(\alpha \cdot \mathbf a)\cdot \mathbf b = \alpha \cdot (\mathbf a \cdot \mathbf b)$

3. $(\mathbf a + \mathbf b)\cdot \mathbf c = \mathbf a \cdot \mathbf c + \mathbf b \cdot \mathbf c$

I.e. it is a commutative function which is linear with respect to both arguments.

Let's denote the unit vectors as

$$\mathbf e_x = \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix}, \mathbf e_y = \begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix}, \mathbf e_z = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix}.$$

With this notation we can write the vector $\mathbf r = (x;y;z)$ as $r = x \cdot \mathbf e_x + y \cdot \mathbf e_y + z \cdot \mathbf e_z$.

And since for unit vectors

$$\mathbf e_x\cdot \mathbf e_x = \mathbf e_y\cdot \mathbf e_y = \mathbf e_z\cdot \mathbf e_z = 1,\\

\mathbf e_x\cdot \mathbf e_y = \mathbf e_y\cdot \mathbf e_z = \mathbf e_z\cdot \mathbf e_x = 0$$

we can see that in terms of coordinates for $\mathbf a = (x_1;y_1;z_1)$ and $\mathbf b = (x_2;y_2;z_2)$ holds

$$\mathbf a\cdot \mathbf b = (x_1 \cdot \mathbf e_x + y_1 \cdot\mathbf e_y + z_1 \cdot\mathbf e_z)\cdot( x_2 \cdot\mathbf e_x + y_2 \cdot\mathbf e_y + z_2 \cdot\mathbf e_z) = x_1 x_2 + y_1 y_2 + z_1 z_2$$

That is also the algebraic definition of the dot product.

From this we can write functions which calculate it.

```{.cpp file=dotproduct}

ftype dot(point2d a, point2d b) {

return a.x * b.x + a.y * b.y;

}

ftype dot(point3d a, point3d b) {

return a.x * b.x + a.y * b.y + a.z * b.z;

}

```

When solving problems one should use algebraic definition to calculate dot products, but keep in mind geometric definition and properties to use it.

### Properties

We can define many geometrical properties via the dot product.

For example

1. Norm of $\mathbf a$ (squared length): $|\mathbf a|^2 = \mathbf a\cdot \mathbf a$

2. Length of $\mathbf a$: $|\mathbf a| = \sqrt{\mathbf a\cdot \mathbf a}$

3. Projection of $\mathbf a$ onto $\mathbf b$: $\dfrac{\mathbf a\cdot\mathbf b}{|\mathbf b|}$

4. Angle between vectors: $\arccos \left(\dfrac{\mathbf a\cdot \mathbf b}{|\mathbf a| \cdot |\mathbf b|}\right)$

5. From the previous point we may see that the dot product is positive if the angle between them is acute, negative if it is obtuse and it equals zero if they are orthogonal, i.e. they form a right angle.

Note that all these functions do not depend on the number of dimensions, hence they will be the same for the 2D and 3D case:

```{.cpp file=dotproperties}

ftype norm(point2d a) {

return dot(a, a);

}

double abs(point2d a) {

return sqrt(norm(a));

}

double proj(point2d a, point2d b) {

return dot(a, b) / abs(b);

}

double angle(point2d a, point2d b) {

return acos(dot(a, b) / abs(a) / abs(b));

}

```

To see the next important property we should take a look at the set of points $\mathbf r$ for which $\mathbf r\cdot \mathbf a = C$ for some fixed constant $C$.

You can see that this set of points is exactly the set of points for which the projection onto $\mathbf a$ is the point $C \cdot \dfrac{\mathbf a}{|\mathbf a|}$ and they form a hyperplane orthogonal to $\mathbf a$.

You can see the vector $\mathbf a$ alongside with several such vectors having same dot product with it in 2D on the picture below:

<center></center>

In 2D these vectors will form a line, in 3D they will form a plane.

Note that this result allows us to define a line in 2D as $\mathbf r\cdot \mathbf n=C$ or $(\mathbf r - \mathbf r_0)\cdot \mathbf n=0$ where $\mathbf n$ is vector orthogonal to the line and $\mathbf r_0$ is any vector already present on the line and $C = \mathbf r_0\cdot \mathbf n$.

In the same manner a plane can be defined in 3D.

## Cross product

### Definition

Assume you have three vectors $\mathbf a$, $\mathbf b$ and $\mathbf c$ in 3D space joined in a parallelepiped as in the picture below:

<center></center>

How would you calculate its volume?

From school we know that we should multiply the area of the base with the height, which is projection of $\mathbf a$ onto direction orthogonal to base.

That means that if we define $\mathbf b \times \mathbf c$ as the vector which is orthogonal to both $\mathbf b$ and $\mathbf c$ and which length is equal to the area of the parallelogram formed by $\mathbf b$ and $\mathbf c$ then $|\mathbf a\cdot (\mathbf b\times\mathbf c)|$ will be equal to the volume of the parallelepiped.

For integrity we will say that $\mathbf b\times \mathbf c$ will be always directed in such way that the rotation from the vector $\mathbf b$ to the vector $\mathbf c$ from the point of $\mathbf b\times \mathbf c$ is always counter-clockwise (see the picture below).

<center></center>

This defines the cross (or vector) product $\mathbf b\times \mathbf c$ of the vectors $\mathbf b$ and $\mathbf c$ and the triple product $\mathbf a\cdot(\mathbf b\times \mathbf c)$ of the vectors $\mathbf a$, $\mathbf b$ and $\mathbf c$.

Some notable properties of cross and triple products:

1. $\mathbf a\times \mathbf b = -\mathbf b\times \mathbf a$

2. $(\alpha \cdot \mathbf a)\times \mathbf b = \alpha \cdot (\mathbf a\times \mathbf b)$

3. For any $\mathbf b$ and $\mathbf c$ there is exactly one vector $\mathbf r$ such that $\mathbf a\cdot (\mathbf b\times \mathbf c) = \mathbf a\cdot\mathbf r$ for any vector $\mathbf a$. <br>Indeed if there are two such vectors $\mathbf r_1$ and $\mathbf r_2$ then $\mathbf a\cdot (\mathbf r_1 - \mathbf r_2)=0$ for all vectors $\mathbf a$ which is possible only when $\mathbf r_1 = \mathbf r_2$.

4. $\mathbf a\cdot (\mathbf b\times \mathbf c) = \mathbf b\cdot (\mathbf c\times \mathbf a) = -\mathbf a\cdot( \mathbf c\times \mathbf b)$

5. $(\mathbf a + \mathbf b)\times \mathbf c = \mathbf a\times \mathbf c + \mathbf b\times \mathbf c$.

Indeed for all vectors $\mathbf r$ the chain of equations holds:

\[\mathbf r\cdot( (\mathbf a + \mathbf b)\times \mathbf c) = (\mathbf a + \mathbf b) \cdot (\mathbf c\times \mathbf r) = \mathbf a \cdot(\mathbf c\times \mathbf r) + \mathbf b\cdot(\mathbf c\times \mathbf r) = \mathbf r\cdot (\mathbf a\times \mathbf c) + \mathbf r\cdot(\mathbf b\times \mathbf c) = \mathbf r\cdot(\mathbf a\times \mathbf c + \mathbf b\times \mathbf c)\]

Which proves $(\mathbf a + \mathbf b)\times \mathbf c = \mathbf a\times \mathbf c + \mathbf b\times \mathbf c$ due to point 3.

6. $|\mathbf a\times \mathbf b|=|\mathbf a| \cdot |\mathbf b| \sin \theta$ where $\theta$ is angle between $\mathbf a$ and $\mathbf b$, since $|\mathbf a\times \mathbf b|$ equals to the area of the parallelogram formed by $\mathbf a$ and $\mathbf b$.

Given all this and that the following equation holds for the unit vectors

$$\mathbf e_x\times \mathbf e_x = \mathbf e_y\times \mathbf e_y = \mathbf e_z\times \mathbf e_z = \mathbf 0,\\

\mathbf e_x\times \mathbf e_y = \mathbf e_z,~\mathbf e_y\times \mathbf e_z = \mathbf e_x,~\mathbf e_z\times \mathbf e_x = \mathbf e_y$$

we can calculate the cross product of $\mathbf a = (x_1;y_1;z_1)$ and $\mathbf b = (x_2;y_2;z_2)$ in coordinate form:

$$\mathbf a\times \mathbf b = (x_1 \cdot \mathbf e_x + y_1 \cdot \mathbf e_y + z_1 \cdot \mathbf e_z)\times (x_2 \cdot \mathbf e_x + y_2 \cdot \mathbf e_y + z_2 \cdot \mathbf e_z) =$$

$$(y_1 z_2 - z_1 y_2)\mathbf e_x + (z_1 x_2 - x_1 z_2)\mathbf e_y + (x_1 y_2 - y_1 x_2)$$

Which also can be written in the more elegant form:

$$\mathbf a\times \mathbf b = \begin{vmatrix}\mathbf e_x & \mathbf e_y & \mathbf e_z \\ x_1 & y_1 & z_1 \\ x_2 & y_2 & z_2 \end{vmatrix},~a\cdot(b\times c) = \begin{vmatrix} x_1 & y_1 & z_1 \\ x_2 & y_2 & z_2 \\ x_3 & y_3 & z_3 \end{vmatrix}$$

Here $| \cdot |$ stands for the determinant of a matrix.

Some kind of cross product (namely the pseudo-scalar product) can also be implemented in the 2D case.

If we would like to calculate the area of parallelogram formed by vectors $\mathbf a$ and $\mathbf b$ we would compute $|\mathbf e_z\cdot(\mathbf a\times \mathbf b)| = |x_1 y_2 - y_1 x_2|$.

Another way to obtain the same result is to multiply $|\mathbf a|$ (base of parallelogram) with the height, which is the projection of vector $\mathbf b$ onto vector $\mathbf a$ rotated by $90^\circ$ which in turn is $\widehat{\mathbf a}=(-y_1;x_1)$.

That is, to calculate $|\widehat{\mathbf a}\cdot\mathbf b|=|x_1y_2 - y_1 x_2|$.

If we will take the sign into consideration then the area will be positive if the rotation from $\mathbf a$ to $\mathbf b$ (i.e. from the view of the point of $\mathbf e_z$) is performed counter-clockwise and negative otherwise.

That defines the pseudo-scalar product.

Note that it also equals $|\mathbf a| \cdot |\mathbf b| \sin \theta$ where $\theta$ is angle from $\mathbf a$ to $\mathbf b$ count counter-clockwise (and negative if rotation is clockwise).

Let's implement all this stuff!

```{.cpp file=crossproduct}

point3d cross(point3d a, point3d b) {

return point3d(a.y * b.z - a.z * b.y,

a.z * b.x - a.x * b.z,

a.x * b.y - a.y * b.x);

}

ftype triple(point3d a, point3d b, point3d c) {

return dot(a, cross(b, c));

}

ftype cross(point2d a, point2d b) {

return a.x * b.y - a.y * b.x;

}

```

### Properties

As for the cross product, it equals to the zero vector iff the vectors $\mathbf a$ and $\mathbf b$ are collinear (they form a common line, i.e. they are parallel).

The same thing holds for the triple product, it is equal to zero iff the vectors $\mathbf a$, $\mathbf b$ and $\mathbf c$ are coplanar (they form a common plane).

From this we can obtain universal equations defining lines and planes.

A line can be defined via its direction vector $\mathbf d$ and an initial point $\mathbf r_0$ or by two points $\mathbf a$ and $\mathbf b$.

It is defined as $(\mathbf r - \mathbf r_0)\times\mathbf d=0$ or as $(\mathbf r - \mathbf a)\times (\mathbf b - \mathbf a) = 0$.

As for planes, it can be defined by three points $\mathbf a$, $\mathbf b$ and $\mathbf c$ as $(\mathbf r - \mathbf a)\cdot((\mathbf b - \mathbf a)\times (\mathbf c - \mathbf a))=0$ or by initial point $\mathbf r_0$ and two direction vectors lying in this plane $\mathbf d_1$ and $\mathbf d_2$: $(\mathbf r - \mathbf r_0)\cdot(\mathbf d_1\times \mathbf d_2)=0$.

In 2D the pseudo-scalar product also may be used to check the orientation between two vectors because it is positive if the rotation from the first to the second vector is clockwise and negative otherwise.

And, of course, it can be used to calculate areas of polygons, which is described in a different article.

A triple product can be used for the same purpose in 3D space.

## Exercises

### Line intersection

There are many possible ways to define a line in 2D and you shouldn't hesitate to combine them.

For example we have two lines and we want to find their intersection points.

We can say that all points from first line can be parameterized as $\mathbf r = \mathbf a_1 + t \cdot \mathbf d_1$ where $\mathbf a_1$ is initial point, $\mathbf d_1$ is direction and $t$ is some real parameter.

As for second line all its points must satisfy $(\mathbf r - \mathbf a_2)\times \mathbf d_2=0$. From this we can easily find parameter $t$:

$$(\mathbf a_1 + t \cdot \mathbf d_1 - \mathbf a_2)\times \mathbf d_2=0 \quad\Rightarrow\quad t = \dfrac{(\mathbf a_2 - \mathbf a_1)\times\mathbf d_2}{\mathbf d_1\times \mathbf d_2}$$

Let's implement function to intersect two lines.

```{.cpp file=basic_line_intersection}

point2d intersect(point2d a1, point2d d1, point2d a2, point2d d2) {

return a1 + cross(a2 - a1, d2) / cross(d1, d2) * d1;

}

```

### Planes intersection

However sometimes it might be hard to use some geometric insights.

For example, you're given three planes defined by initial points $\mathbf a_i$ and directions $\mathbf d_i$ and you want to find their intersection point.

You may note that you just have to solve the system of equations:

$$\begin{cases}\mathbf r\cdot \mathbf n_1 = \mathbf a_1\cdot \mathbf n_1, \\ \mathbf r\cdot \mathbf n_2 = \mathbf a_2\cdot \mathbf n_2, \\ \mathbf r\cdot \mathbf n_3 = \mathbf a_3\cdot \mathbf n_3\end{cases}$$

Instead of thinking on geometric approach, you can work out an algebraic one which can be obtained immediately.

For example, given that you already implemented a point class, it will be easy for you to solve this system using Cramer's rule because the triple product is simply the determinant of the matrix obtained from the vectors being its columns:

```{.cpp file=plane_intersection}

point3d intersect(point3d a1, point3d n1, point3d a2, point3d n2, point3d a3, point3d n3) {

point3d x(n1.x, n2.x, n3.x);

point3d y(n1.y, n2.y, n3.y);

point3d z(n1.z, n2.z, n3.z);

point3d d(dot(a1, n1), dot(a2, n2), dot(a3, n3));

return point3d(triple(d, y, z),

triple(x, d, z),

triple(x, y, d)) / triple(n1, n2, n3);

}

```

Now you may try to find out approaches for common geometric operations yourself to get used to all this stuff.

|

Basic Geometry

|

---

title

circles_intersection

---

# Circle-Circle Intersection

You are given two circles on a 2D plane, each one described as coordinates of its center and its radius. Find the points of their intersection (possible cases: one or two points, no intersection or circles coincide).

## Solution

Let's reduce this problem to the [circle-line intersection problem](circle-line-intersection.md).

Assume without loss of generality that the first circle is centered at the origin (if this is not true, we can move the origin to the center of the first circle and adjust the coordinates of intersection points accordingly at output time). We have a system of two equations:

$$x^2+y^2=r_1^2$$

$$(x - x_2)^2 + (y - y_2)^2 = r_2^2$$

Subtract the first equation from the second one to get rid of the second powers of variables:

$$x^2+y^2=r_1^2$$

$$x \cdot (-2x_2) + y \cdot (-2y_2) + (x_2^2+y_2^2+r_1^2-r_2^2) = 0$$

Thus, we've reduced the original problem to the problem of finding intersections of the first circle and a line:

$$Ax + By + C = 0$$

$$\begin{align}

A &= -2x_2 \\

B &= -2y_2 \\

C &= x_2^2+y_2^2+r_1^2-r_2^2

\end{align}$$

And this problem can be solved as described in the [corresponding article](circle-line-intersection.md).

The only degenerate case we need to consider separately is when the centers of the circles coincide. In this case $x_2=y_2=0$, and the line equation will be $C = r_1^2-r_2^2 = 0$. If the radii of the circles are the same, there are infinitely many intersection points, if they differ, there are no intersections.

|

---

title

circles_intersection

---

# Circle-Circle Intersection

You are given two circles on a 2D plane, each one described as coordinates of its center and its radius. Find the points of their intersection (possible cases: one or two points, no intersection or circles coincide).

## Solution

Let's reduce this problem to the [circle-line intersection problem](circle-line-intersection.md).

Assume without loss of generality that the first circle is centered at the origin (if this is not true, we can move the origin to the center of the first circle and adjust the coordinates of intersection points accordingly at output time). We have a system of two equations:

$$x^2+y^2=r_1^2$$

$$(x - x_2)^2 + (y - y_2)^2 = r_2^2$$

Subtract the first equation from the second one to get rid of the second powers of variables:

$$x^2+y^2=r_1^2$$

$$x \cdot (-2x_2) + y \cdot (-2y_2) + (x_2^2+y_2^2+r_1^2-r_2^2) = 0$$

Thus, we've reduced the original problem to the problem of finding intersections of the first circle and a line:

$$Ax + By + C = 0$$

$$\begin{align}

A &= -2x_2 \\

B &= -2y_2 \\

C &= x_2^2+y_2^2+r_1^2-r_2^2

\end{align}$$

And this problem can be solved as described in the [corresponding article](circle-line-intersection.md).

The only degenerate case we need to consider separately is when the centers of the circles coincide. In this case $x_2=y_2=0$, and the line equation will be $C = r_1^2-r_2^2 = 0$. If the radii of the circles are the same, there are infinitely many intersection points, if they differ, there are no intersections.

## Practice Problems

- [RadarFinder](https://community.topcoder.com/stat?c=problem_statement&pm=7766)

- [Runaway to a shadow - Codeforces Round #357](http://codeforces.com/problemset/problem/681/E)

- [ASC 1 Problem F "Get out!"](http://codeforces.com/gym/100199/problem/F)

- [SPOJ: CIRCINT](http://www.spoj.com/problems/CIRCINT/)

- [UVA - 10301 - Rings and Glue](https://uva.onlinejudge.org/index.php?option=onlinejudge&page=show_problem&problem=1242)

- [Codeforces 933C A Colorful Prospect](https://codeforces.com/problemset/problem/933/C)

- [TIMUS 1429 Biscuits](https://acm.timus.ru/problem.aspx?space=1&num=1429)

|

Circle-Circle Intersection

|

---

title

segments_intersection

---

# Finding intersection of two segments

You are given two segments AB and CD, described as pairs of their endpoints. Each segment can be a single point if its endpoints are the same.

You have to find the intersection of these segments, which can be empty (if the segments don't intersect), a single point or a segment (if the given segments overlap).

## Solution

We can find the intersection point of segments in the same way as [the intersection of lines](lines-intersection.md):

reconstruct line equations from the segments' endpoints and check whether they are parallel.

If the lines are not parallel, we need to find their point of intersection and check whether it belongs to both segments

(to do this it's sufficient to verify that the intersection point belongs to each segment projected on X and Y axes).

In this case the answer will be either "no intersection" or the single point of lines' intersection.