Datasets:

license: apache-2.0

dataset_info:

- config_name: human_object_interactions

features:

- name: video_id

dtype: string

- name: video_path

dtype: string

- name: question

dtype: string

- name: answer

dtype: string

- name: candidates

dtype: string

- name: source

dtype: string

splits:

- name: mini

num_bytes: 1749322

num_examples: 5724

- name: full

num_bytes: 4074313

num_examples: 14046

download_size: 594056

dataset_size: 5823635

- config_name: intuitive_physics

features:

- name: video_id

dtype: string

- name: video_path

dtype: string

- name: question

dtype: string

- name: answer

dtype: string

- name: candidates

dtype: string

- name: source

dtype: string

splits:

- name: mini

num_bytes: 6999824

num_examples: 8680

- name: full

num_bytes: 10570407

num_examples: 12220

download_size: 805372

dataset_size: 17570231

- config_name: robot_object_interactions

features:

- name: video_id

dtype: string

- name: video_path

dtype: string

- name: question

dtype: string

- name: answer

dtype: string

- name: candidates

dtype: string

- name: source

dtype: string

splits:

- name: mini

num_bytes: 771052

num_examples: 2000

- name: full

num_bytes: 9954084

num_examples: 25796

download_size: 1547348

dataset_size: 10725136

- config_name: temporal_reasoning

features:

- name: video_id

dtype: string

- name: video_path

dtype: string

- name: question

dtype: string

- name: answer

dtype: string

- name: candidates

dtype: string

- name: source

dtype: string

splits:

- name: mini

num_bytes: 611241

num_examples: 2012

- name: full

num_bytes: 783157

num_examples: 2766

download_size: 254438

dataset_size: 1394398

configs:

- config_name: human_object_interactions

data_files:

- split: mini

path: human_object_interactions/mini-*

- split: full

path: human_object_interactions/full-*

- config_name: intuitive_physics

data_files:

- split: mini

path: intuitive_physics/mini-*

- split: full

path: intuitive_physics/full-*

- config_name: robot_object_interactions

data_files:

- split: mini

path: robot_object_interactions/mini-*

- split: full

path: robot_object_interactions/full-*

- config_name: temporal_reasoning

data_files:

- split: mini

path: temporal_reasoning/mini-*

- split: full

path: temporal_reasoning/full-*

task_categories:

- question-answering

- video-text-to-text

language:

- en

size_categories:

- 10K<n<100K

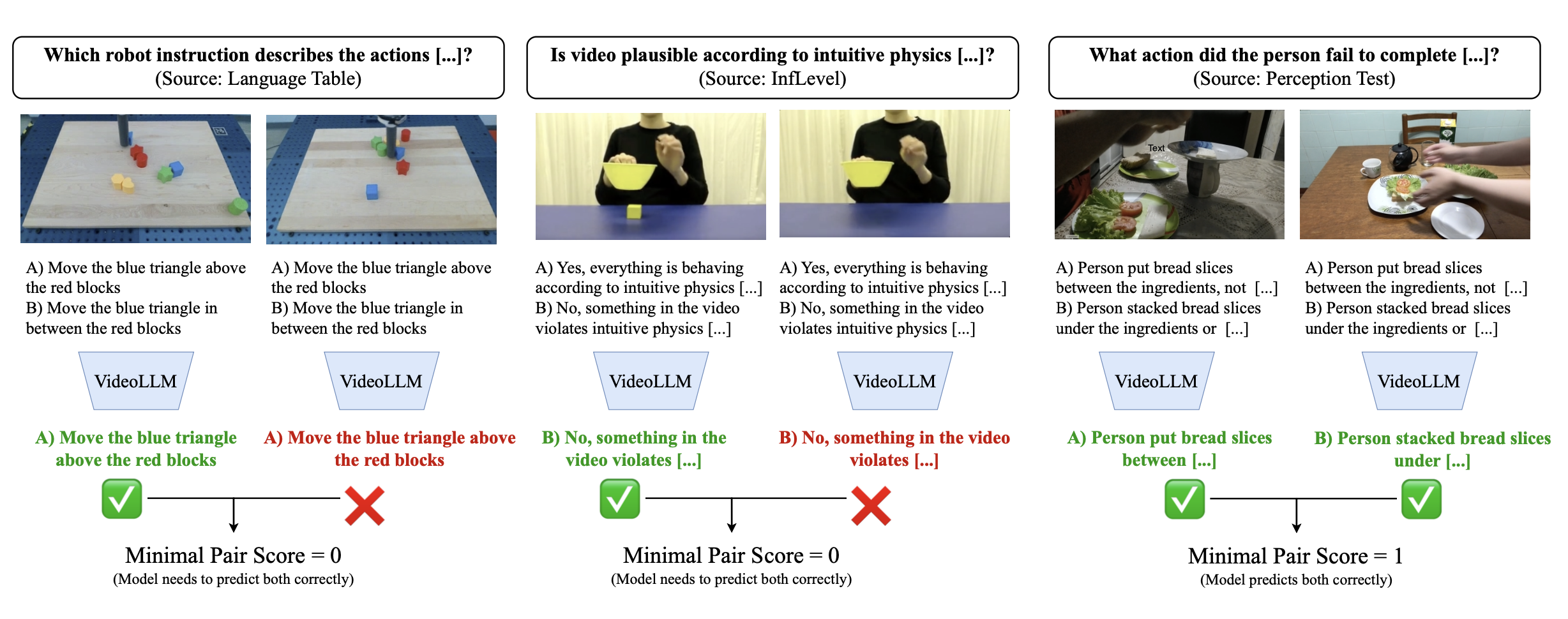

Minimal Video Pairs

A shortcut-aware benchmark for spatio-temporal and intuitive physics video understanding (VideoQA) using minimally different video pairs.

For legal reasons, we are unable to upload the videos directly to Huggingface. However, we provide scripts in this repository for downloading the videos in our github repository. Our benchmark is built on top of videos source from 9 domains:

| Subset | Data sources |

|---|---|

| Human object interactions | PerceptionTest, SomethingSomethingV2 |

| Robot object interactions | Language Table |

| Intuitive Physics and collisions | IntPhys, InfLevel, GRASP, CLEVRER |

| Temporal Reasoning | STAR, Vinoground |

Run evaluation

To enable reproducible evaluation, we utilize the lmms-eval library. We have provided the task files you need to run mvp and mvp_mini. mvp_mini is essentially a smaller, balanced evaluation set with 9k examples for enabling faster evaluations.

Please follow the instructions on our Github repository for running reproducible evals.

Leaderboard

We also release our Physical Reasoning Leaderboard, where you can submit your model outputs for this dataset. Use the data_name as mvp and mvp_mini for submissions against full and mini split respectvely.

Citation and acknowledgements

We are grateful to the datasets listed above for utilizing their videos to create this benchmark. Please cite us if you use the benchmark or any other part of the paper:

@article{krojer2025shortcut,

title={A Shortcut-aware Video-QA Benchmark for Physical Understanding via Minimal Video Pairs}

author={Benno Krojer and Mojtaba Komeili and Candace Ross and Quentin Garrido and Koustuv Sinha and Nicolas Ballas and Mahmoud Assran},

journal={arXiv},

year={2025}

}