
pretty_name: Wikipedia Comments
language:
- da
license: cc0-1.0
license_name: CC-0
size_categories:
- 100k-1M
task_categories:
- text-generation
- fill-mask
task_ids:
- language-modeling
domains:
- Encyclopedic

Dataset Card for Wikipedia Comments

Text from the comments sections of the Danish Wikipedia.

You can read more about Danish Wikipedia on its about page. Rather than the article pages themselves, this dataset contains meta-discussion: discussions about the content of specific pages, but also questions about how to achieve certain things. An example page can be found at: https://da.wikipedia.org/wiki/Wikipedia:Landsbybr%C3%B8nden/Vejledning_efterlyses. This version uses the Wikipedia dump from 20250720; the dump date can easily be updated in the create.py script.

Dataset Description

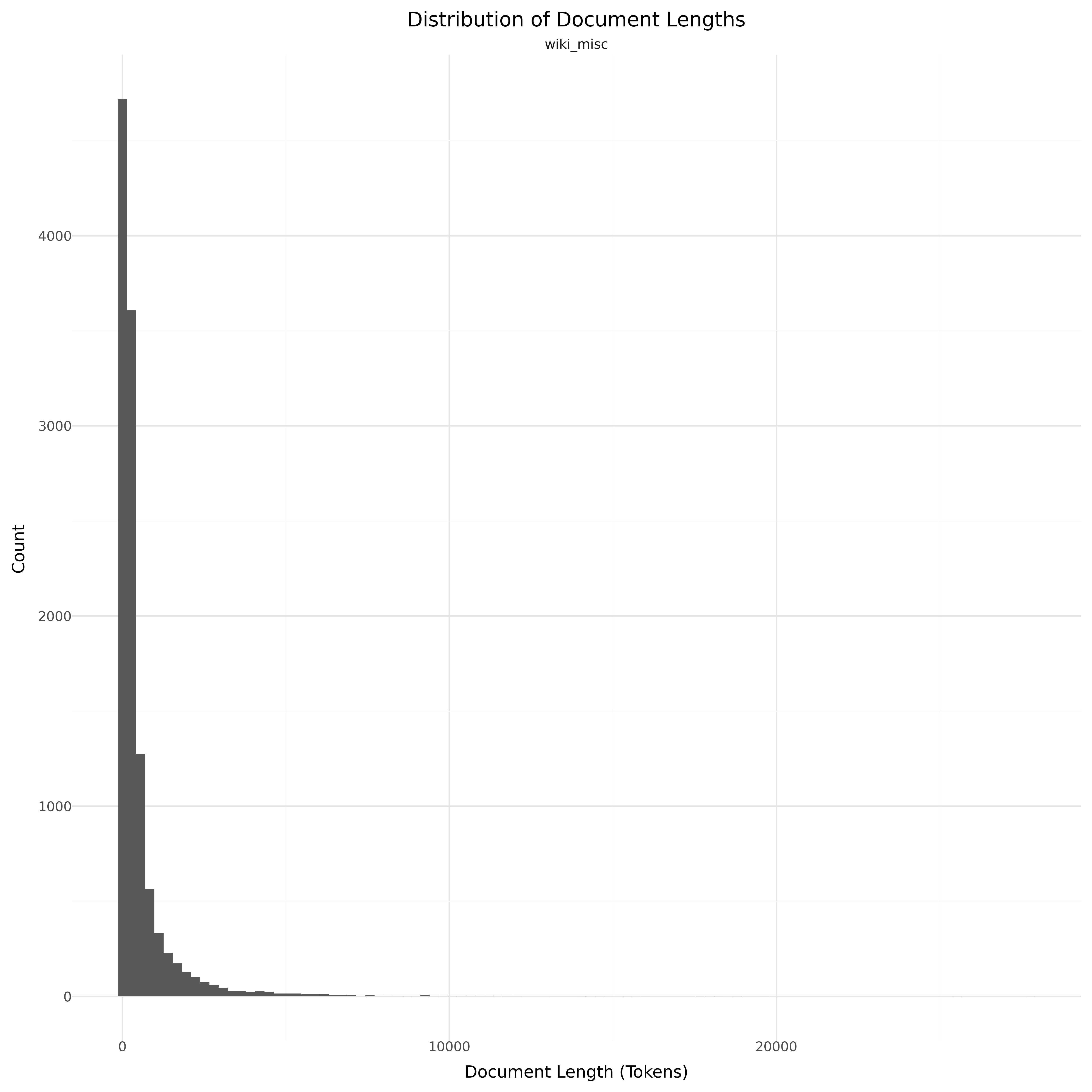

- Number of samples: 11.59K

- Number of tokens (Llama 3): 6.14M

- Average document length in tokens (min, max): 529.26 (4, 27.78K)
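The figures above are aggregates of the per-document token_count field. As a minimal sketch (summarize and human are hypothetical helper names, not functions from create.py), they could be recomputed from a list of token counts and formatted in the card's K/M notation like this:

```python
from statistics import fmean


def summarize(token_counts: list[int]) -> dict[str, float]:
    """Aggregate per-document token counts into card-style statistics (hypothetical helper)."""
    if not token_counts:
        raise ValueError("need at least one document")
    return {
        "n_samples": len(token_counts),
        "n_tokens": sum(token_counts),
        "mean": fmean(token_counts),  # always a float
        "min": min(token_counts),
        "max": max(token_counts),
    }


def human(n: float) -> str:
    """Format a number with a K/M suffix and two decimals, as used in the card."""
    for div, suffix in ((1e6, "M"), (1e3, "K")):
        if abs(n) >= div:
            return f"{n / div:.2f}{suffix}"
    # Small values: keep integers as-is, show floats with two decimals.
    return f"{n:.2f}" if isinstance(n, float) else str(n)
```

For example, human(11590) gives "11.59K" and human(6_140_000) gives "6.14M", matching the notation in the list above.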

Dataset Structure

An example from the dataset looks as follows.

{
  "id": "wikicomment_0",
  "text": "## Vejledning efterlyses.\nJeg har ledt og ledt alle steder efter tip om, hvordan man kan bruge et bi[...]",
  "source": "wiki_misc",
  "added": "2025-07-21",
  "created": "2002-02-01, 2025-07-20",
  "token_count": 385
}

Data Fields

An entry in the dataset consists of the following fields:

- id (str): A unique identifier for each document.

- text (str): The content of the document.

- source (str): The source of the document (see Source Data).

- added (str): The date on which the document was added to this collection.

- created (str): The date range in which the document was originally created.

- token_count (int): The number of tokens in the sample, computed using the Llama 3 8B tokenizer.
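The fields above can be captured as a typed record. This is a hypothetical sketch (Record, parse_created and validate are illustrative names, not part of the dataset tooling) that assumes created always holds two ISO dates separated by a comma, as in the example record:

```python
from datetime import date
from typing import TypedDict


class Record(TypedDict):
    """One document, mirroring the fields listed above."""
    id: str
    text: str
    source: str
    added: str        # ISO date, e.g. "2025-07-21"
    created: str      # date range "start, end", e.g. "2002-02-01, 2025-07-20"
    token_count: int


def parse_created(created: str) -> tuple[date, date]:
    """Split the 'created' range into (start, end).

    Assumption: the value is always "YYYY-MM-DD, YYYY-MM-DD" as in the example.
    """
    start, end = (part.strip() for part in created.split(","))
    return date.fromisoformat(start), date.fromisoformat(end)


def validate(record: Record) -> None:
    """Raise if a record lacks a field or has a malformed or reversed date range."""
    missing = set(Record.__annotations__) - set(record)
    if missing:
        raise KeyError(f"missing fields: {sorted(missing)}")
    start, end = parse_created(record["created"])
    if start > end:
        raise ValueError("created range is reversed")
    date.fromisoformat(record["added"])  # raises ValueError if malformed
```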

Dataset Statistics

Additional Information

This dataset was collected using an adapted version of WikiExtractor. Rob van der Goot created a fork that can extract additional text from wikis; the fork can be found here: WikiExtractor.

Inspecting the extractor output reveals several categories of pages, which are most easily distinguished by the title field. Below, I list the categories, their size (number of pages), and what they appear to contain based on manual inspection.

- "Kategori:" (71,472 pages): category overview pages

- "Wikipedia:" (19,992 pages): comments, but also daily articles

- "Portal:" (2,379 pages): monthly articles, plus some lists/calendars

- "MediaWiki:" (1,360 pages): pages about files; contains almost no natural language

- "Modul:" (726 pages): technical content; contains almost no (Danish) text

- "Hjælp:" (171 pages): help pages; info and comments
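Because the categorization depends only on the title prefix, page counts like these can be reproduced with a simple counter. A minimal sketch, assuming the extractor output has already been read into a list of page titles (the reading step is not shown, and category and count_categories are hypothetical names):

```python
from collections import Counter
from typing import Iterable

# Title prefixes observed in the extractor output, taken from the list above.
PREFIXES = ("Kategori:", "Wikipedia:", "Portal:", "MediaWiki:", "Modul:", "Hjælp:")


def category(title: str) -> str:
    """Return the matching title prefix, or 'other' if none of the known prefixes match."""
    for prefix in PREFIXES:
        if title.startswith(prefix):
            return prefix
    return "other"


def count_categories(titles: Iterable[str]) -> Counter:
    """Count pages per title prefix."""
    return Counter(category(t) for t in titles)
```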

The current version of the dataset uses only pages whose titles start with "Wikipedia:", and it removes the daily articles by excluding titles that start with "Wikipedia:Dagens".
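The selection rule amounts to two prefix tests on the title. A minimal sketch, assuming pages are available as (title, text) pairs; keep_title and select_pages are hypothetical names, not functions from create.py:

```python
from typing import Iterable, Iterator


def keep_title(title: str) -> bool:
    """Keep 'Wikipedia:' meta pages, but drop the daily articles ('Wikipedia:Dagens...')."""
    return title.startswith("Wikipedia:") and not title.startswith("Wikipedia:Dagens")


def select_pages(pages: Iterable[tuple[str, str]]) -> Iterator[tuple[str, str]]:
    """Yield only the (title, text) pairs whose title passes keep_title."""
    for title, text in pages:
        if keep_title(title):
            yield title, text
```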

The resulting data includes comments in which people discuss, for example, the content of pages, writing style, and which pages or information to include or exclude. It also includes pages written for Wikipedia contributors.

Opportunities for Improvement

WikiExtractor outputs some incomplete sentences in which entities appear to be missing. This is a known issue (https://github.com/attardi/wikiextractor/issues/33). In the Wikipedia section of DynaWord, other extractors seem to have given better results, so it would be worthwhile to adapt them to extract comments as well. We have chosen to remove entire lines when we know that words are missing, to avoid disrupting the context.
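The card does not specify how missing words are detected, so the heuristic below is purely an illustrative assumption; only the policy of dropping whole flagged lines, rather than patching them, comes from the text above. The detector is passed in as a parameter so a better one can be swapped in (looks_incomplete and drop_incomplete_lines are hypothetical names):

```python
import re
from typing import Callable

# HYPOTHETICAL heuristic, not from the dataset pipeline: flag lines with empty
# brackets or whitespace directly before punctuation, patterns that can remain
# when markup containing an entity has been stripped out.
_SUSPICIOUS = re.compile(r"\(\s*\)|\[\s*\]|\s[,.;:]")


def looks_incomplete(line: str) -> bool:
    """Return True if the line matches the (assumed) missing-word patterns."""
    return bool(_SUSPICIOUS.search(line))


def drop_incomplete_lines(
    text: str, is_incomplete: Callable[[str], bool] = looks_incomplete
) -> str:
    """Remove every line flagged by is_incomplete, keeping the others in order."""
    return "\n".join(line for line in text.split("\n") if not is_incomplete(line))
```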

Language filtering is done with a pre-trained fastText model. This is not a perfect solution, but the majority of the text should already be Danish, and fastText should distinguish English from Danish reasonably well.
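A minimal sketch of document-level filtering, assuming the public fastText language-identification model lid.176.bin and a probability threshold of 0.5. Both are assumptions: the card names neither the model file, the granularity (document or line), nor a threshold. The predictor is injected so the filter can be exercised without downloading the model:

```python
from typing import Callable, Iterable, Iterator

# A predictor maps a text to (language code, probability).
Predictor = Callable[[str], tuple[str, float]]


def fasttext_predictor(model_path: str = "lid.176.bin") -> Predictor:
    """Wrap a pre-trained fastText language-ID model (needs the `fasttext` package).

    Assumption: the model file name; the card does not say which model is used.
    """
    import fasttext  # imported lazily so filter_danish can be tested without it

    model = fasttext.load_model(model_path)

    def predict(text: str) -> tuple[str, float]:
        # fastText's predict() rejects newlines, so classify the text as one line.
        labels, probs = model.predict(text.replace("\n", " "), k=1)
        return labels[0].removeprefix("__label__"), float(probs[0])

    return predict


def filter_danish(
    texts: Iterable[str], predict: Predictor, threshold: float = 0.5
) -> Iterator[str]:
    """Yield texts whose top predicted language is Danish ('da') with prob >= threshold."""
    for text in texts:
        lang, prob = predict(text)
        if lang == "da" and prob >= threshold:
            yield text
```

Usage would look like list(filter_danish(texts, fasttext_predictor())). Keeping the threshold as a parameter makes it easy to trade recall for precision if mixed-language comments turn out to be common.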