
---

license: cc-by-nc-nd-4.0
task_categories:
  - audio-classification
language:
  - zh
  - en
tags:
  - music
  - art
pretty_name: Guzheng Technique 99 Dataset
size_categories:
  - n<1K
dataset_info:
  - config_name: default
    features:
      - name: audio
        dtype:
          audio:
            sampling_rate: 44100
      - name: mel
        dtype: image
      - name: label
        sequence:
          - name: onset_time
            dtype: float32
          - name: offset_time
            dtype: float32
          - name: IPT
            dtype:
              class_label:
                names:
                  '0': Vibrato
                  '1': Plucks
                  '2': Upward_Portamento
                  '3': Downward_Portamento
                  '4': Glissando
                  '5': Tremolo
                  '6': Point_Note
          - name: note
            dtype: int8
    splits:
      - name: train
        num_bytes: 242218
        num_examples: 79
      - name: validation
        num_bytes: 32229
        num_examples: 10
      - name: test
        num_bytes: 31038
        num_examples: 10
    download_size: 683115163
    dataset_size: 305485
  - config_name: eval
    features:
      - name: mel
        dtype:
          array3_d:
            dtype: float32
            shape:
              - 128
              - 258
              - 1
      - name: cqt
        dtype:
          array3_d:
            dtype: float32
            shape:
              - 88
              - 258
              - 1
      - name: chroma
        dtype:
          array3_d:
            dtype: float32
            shape:
              - 12
              - 258
              - 1
      - name: label
        dtype:
          array2_d:
            dtype: float32
            shape:
              - 7
              - 258
    splits:
      - name: train
        num_bytes: 1190227192
        num_examples: 2486
      - name: validation
        num_bytes: 133098616
        num_examples: 278
      - name: test
        num_bytes: 148898092
        num_examples: 311
    download_size: 667607870
    dataset_size: 1472223900
configs:
  - config_name: default
    data_files:
      - split: train
        path: default/train/data-*.arrow
      - split: validation
        path: default/validation/data-*.arrow
      - split: test
        path: default/test/data-*.arrow
  - config_name: eval
    data_files:
      - split: train
        path: eval/train/data-*.arrow
      - split: validation
        path: eval/validation/data-*.arrow
      - split: test
        path: eval/test/data-*.arrow

---

# Dataset Card for Guzheng Technique 99 Dataset

## Original Content

This dataset was created and used by [[1]](https://arxiv.org/pdf/2303.13272) for frame-level Guzheng playing technique detection. The original dataset comprises 99 solo Guzheng compositions recorded by professional musicians in a studio. Every note in each composition is annotated with its onset, offset, pitch, and playing technique. This differs from GZ IsoTech, which is annotated at the clip level. The playing technique categories also differ slightly; there are seven in total: _Vibrato (chanyin 颤音), Plucks (boxian 拨弦), Upward Portamento (shanghua 上滑), Downward Portamento (xiahua 下滑), Glissando (huazhi/guazou/lianmo/liantuo 花指/刮奏/连抹/连托), Tremolo (yaozhi 摇指), and Point Note (dianyin 点音)_. This note-level annotation yields a total of 63,352 annotated labels.

## Integration

In the original dataset, the labels were stored in a separate CSV file. This made the data harder to use, because researchers had to parse the CSV and align the labels with the audio themselves. After our integration, the data structure is streamlined into three columns: audio sampled at 44,100 Hz, a pre-processed mel spectrogram, and a dictionary. The dictionary contains the onset, offset, numeric technique label, and pitch of each note. The number of data entries after integration remains 99, with a cumulative duration of 151.08 minutes and an average audio duration of 91.56 seconds.
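As an illustration of working with the dictionary column, the sketch below converts it into one record per note. It assumes the dictionary arrives as parallel lists keyed by `onset_time`, `offset_time`, `IPT`, and `note` (the field names in this card's metadata); the helper name `label_to_notes` and the example values are hypothetical.

```python
# Technique names, in the order of the class_label list in this card's metadata.
TECHNIQUES = [
    "Vibrato", "Plucks", "Upward_Portamento", "Downward_Portamento",
    "Glissando", "Tremolo", "Point_Note",
]


def label_to_notes(label):
    """Turn the column-wise label dictionary into one record per note.

    Assumes `label` maps each field name to a list, all of equal length.
    """
    keys = ("onset_time", "offset_time", "IPT", "note")
    if len({len(label[k]) for k in keys}) != 1:
        raise ValueError("label columns have different lengths")
    notes = []
    for onset, offset, ipt, pitch in zip(*(label[k] for k in keys)):
        notes.append({
            "onset": onset,
            "offset": offset,
            "technique": TECHNIQUES[ipt],  # numeric label -> readable name
            "pitch": pitch,
        })
    return notes


# Hypothetical values, made up for illustration only.
example_label = {
    "onset_time": [0.10, 0.75],
    "offset_time": [0.70, 1.30],
    "IPT": [1, 0],
    "note": [62, 69],
}
notes = label_to_notes(example_label)
print(notes[0])
```

With a loaded dataset, the same helper could be applied to the `label` field of an item, provided the field layout matches the assumption above.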

We processed the data to construct the [default subset](#default-subset) of the current integrated version of the dataset; its data structure can be inspected in the [viewer](https://huggingface.co/datasets/ccmusic-database/Guzheng_Tech99/viewer). Because the dataset has been cited and evaluated in a published article, we transcribe here the processing used in that article's evaluation: each audio clip is a 3-second segment sampled at 44,100 Hz, which is then converted into a log Constant-Q Transform (CQT) spectrogram. A CQT paired with a label constitutes a single data entry, forming the first and second columns, respectively. The CQT is a 3-dimensional array of shape 88x258x1, representing the frequency-time structure of the audio. The label is a 2-dimensional array of shape 7x258, indicating the presence of each of the seven techniques in each time frame. Finally, since the original dataset was already divided into train, validation, and test sets, we integrated the feature extraction method from that article's evaluation into the API, thereby constructing the [eval subset](#eval-subset), which is not covered in our paper.
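The 7x258 label can be pictured as a rasterization of note annotations onto time frames. The following is a minimal sketch under stated assumptions, not the article's implementation: it maps time to frames linearly (258 frames over 3 seconds), treats the offset frame as inclusive, and uses a hypothetical function name `frame_labels`.

```python
import math

NUM_TECHNIQUES = 7     # rows of the label array
NUM_FRAMES = 258       # columns of the label array
SEGMENT_SECONDS = 3.0  # duration of one audio segment


def frame_labels(notes, segment_start=0.0):
    """Build a 7x258 matrix of 0.0/1.0 values from note annotations.

    `notes` is an iterable of (onset_s, offset_s, technique_index) tuples.
    Assumption: frames are evenly spaced over the segment.
    """
    frames_per_second = NUM_FRAMES / SEGMENT_SECONDS
    matrix = [[0.0] * NUM_FRAMES for _ in range(NUM_TECHNIQUES)]
    for onset, offset, technique in notes:
        first = math.floor((onset - segment_start) * frames_per_second)
        last = math.floor((offset - segment_start) * frames_per_second)
        # Clip the note to the segment; notes fully outside mark nothing.
        first = max(first, 0)
        last = min(last, NUM_FRAMES - 1)
        for frame in range(first, last + 1):
            matrix[technique][frame] = 1.0
    return matrix


# Hypothetical notes: a pluck (index 1) and a tremolo (index 5) that
# runs past the end of the segment.
labels = frame_labels([(0.0, 1.0, 1), (2.0, 4.0, 5)])
print(len(labels), len(labels[0]))
```

Because each technique has its own row, overlapping notes with different techniques can be active in the same frame, which is what makes the target multi-label.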

## Statistics

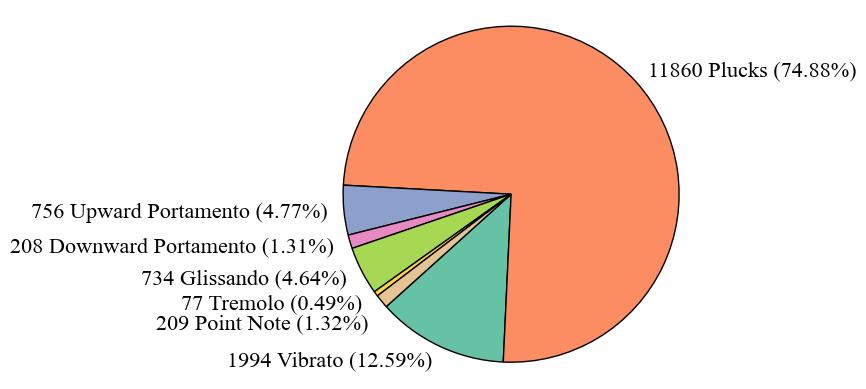

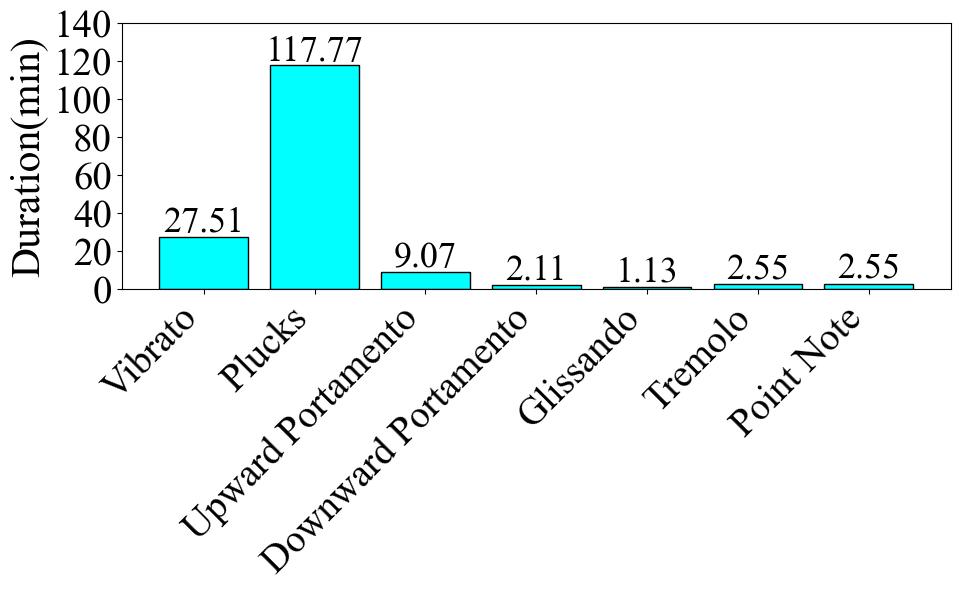

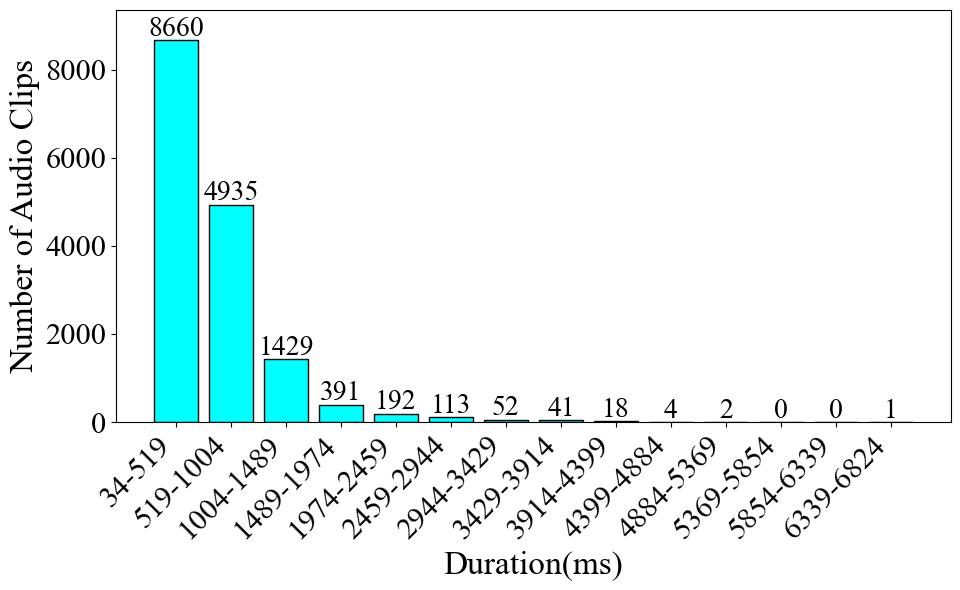

In this part, we present statistics at the label-level. The number of audio clips is equivalent to the count of either onset or offset occurrences. The duration of an audio clip is determined by calculating the offset time minus the onset time. At this level, the number of clips is 15,838, and the total duration is 162.69 minutes.

|  |  |  |

| :--------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: |

| **Fig. 1** | **Fig. 2** | **Fig. 3** |

At the label level, the average clip duration is 0.62 seconds, with the shortest being 0.03 seconds and the longest 6.82 seconds. **Fig. 1** shows the number and proportion of audio clips in each category. *Plucks* accounts for a far larger proportion than any other category, comprising 74.88% of the dataset. The smallest category is *Tremolo*, at only 0.49%, a difference of 74.39 percentage points between the largest and smallest categories. **Fig. 2** presents the total audio duration of each category. *Plucks* again dominates, with a total duration of 117.77 minutes. The category with the shortest total duration is *Glissando*, at only 1.13 minutes; this differs from the pie chart, where the smallest category was *Tremolo*. The difference between the longest and shortest total durations is 116.64 minutes. Both the pie chart and the duration chart show that this dataset suffers from severe class imbalance. Finally, **Fig. 3** gives the number of audio clips across duration intervals. Most clips are concentrated in the 34-1004 millisecond range: 8,660 clips fall within 34-519 ms, 4,935 within 519-1004 ms, and only 2,243 exceed 1004 ms.
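The per-category counts, proportions, and total durations discussed above could be computed along the following lines. This is a sketch on made-up toy data, with a hypothetical helper name `clip_statistics`; it is not the script used to produce the figures.

```python
from collections import Counter, defaultdict


def clip_statistics(clips):
    """Summarize label-level clips.

    `clips` is a list of (technique_name, onset_s, offset_s) tuples.
    Returns per-technique counts, proportions in percent, and total
    durations in seconds (duration = offset - onset).
    """
    counts = Counter()
    durations = defaultdict(float)
    for technique, onset, offset in clips:
        counts[technique] += 1
        durations[technique] += offset - onset
    total = sum(counts.values())
    proportions = {t: 100.0 * c / total for t, c in counts.items()}
    return counts, proportions, dict(durations)


# Toy data, made up for illustration: three short plucks, one long tremolo.
toy_clips = [
    ("Plucks", 0.0, 0.5),
    ("Plucks", 0.5, 1.0),
    ("Plucks", 1.0, 1.5),
    ("Tremolo", 1.5, 3.5),
]
counts, proportions, durations = clip_statistics(toy_clips)
print(proportions)
print(durations)
```

In the toy data the rarest category by count has the longest total duration, which mirrors why the smallest category can differ between a count-based chart and a duration-based one.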

| Statistical items | Values |
| :------------------------------------------: | :------------------: |
| Total audio count | `99` |
| Total audio duration (s) | `9064.61260770975` |
| Avg audio duration (s) | `91.5617435122197` |
| Shortest audio duration (s) | `32.888004535147395` |
| Longest audio duration (s) | `327.7973469387755` |
| Total data count | `15838` |
| Total data duration (s) | `9760.579138441011` |
| Mean data duration (ms) | `616.2759905569524` |
| Min data duration (ms) | `34.81292724609375` |
| Max data duration (ms) | `6823.249816894531` |
| Class in the longest audio duration interval | `boxian` |

## Dataset Structure

<https://huggingface.co/datasets/ccmusic-database/Guzheng_Tech99/viewer>

### Data Instances

.zip(.flac, .csv)

### Data Fields

The dataset comprises 99 Guzheng solo compositions, recorded by professionals in a studio, totaling 9064.6 seconds. Each note is annotated with its onset, offset, pitch, and playing technique (vibrato, point note, upward portamento, downward portamento, plucks, glissando, or tremolo), resulting in 63,352 annotated labels. The dataset is divided into 79, 10, and 10 songs for the training, validation, and test sets, respectively.

### Data Splits

train, validation, test

## Dataset Description

### Dataset Summary

The integrated version provides the original content and the spectrograms generated in the experimental part of the paper cited above. For the latter, the pre-processing in the paper is replicated. Each audio clip is a 3-second segment sampled at 44,100 Hz, which is subsequently converted into a log Constant-Q Transform (CQT) spectrogram. A CQT paired with a label constitutes a single data entry, forming the first and second columns, respectively. The CQT is a 3-dimensional array of shape 88 x 258 x 1, representing the frequency-time structure of the audio. The label is a 2-dimensional array of shape 7 x 258, which indicates the presence of each of the seven techniques in each time frame. Finally, since the raw dataset was already split into train, validation, and test sets, the integrated dataset keeps the same split. This dataset can be used for frame-level Guzheng playing technique detection.
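To make the segmentation step concrete, the sketch below computes the sample ranges of consecutive 3-second segments at 44,100 Hz. How the final, shorter remainder of a recording is handled is not stated here, so the `pad_last` switch and the function name `segment_bounds` are assumptions for illustration only.

```python
SAMPLE_RATE = 44100   # Hz, as stated for this dataset
SEGMENT_SECONDS = 3   # segment length described above


def segment_bounds(num_samples, pad_last=True):
    """Return (start, end) sample indices of consecutive 3-second segments.

    Assumption: with pad_last=True the final partial segment is kept and
    would need padding up to `end`; with pad_last=False it is dropped.
    """
    seg_len = SAMPLE_RATE * SEGMENT_SECONDS  # 132300 samples per segment
    bounds = []
    for start in range(0, num_samples, seg_len):
        end = start + seg_len
        if end > num_samples and not pad_last:
            break
        bounds.append((start, end))
    return bounds


# A hypothetical 10-second recording.
ten_seconds = 10 * SAMPLE_RATE
print(segment_bounds(ten_seconds))
print(len(segment_bounds(ten_seconds, pad_last=False)))
```

Each resulting range would then be transformed into a spectrogram and paired with its frame-level label.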

### Supported Tasks and Leaderboards

MIR, audio frame-level detection, Guzheng playing technique detection

### Languages

Chinese, English

## Usage

### Default Subset

```python
from datasets import load_dataset

# Load the "default" subset (audio, mel spectrogram, note-level labels)
ds = load_dataset("ccmusic-database/Guzheng_Tech99", name="default")

for item in ds["train"]:
    print(item)

for item in ds["validation"]:
    print(item)

for item in ds["test"]:
    print(item)
```

### Eval Subset

```python
from datasets import load_dataset

# Load the "eval" subset (mel, CQT, chroma features and frame-level labels)
ds = load_dataset("ccmusic-database/Guzheng_Tech99", name="eval")

for item in ds["train"]:
    print(item)

for item in ds["validation"]:
    print(item)

for item in ds["test"]:
    print(item)
```

## Maintenance

```bash
GIT_LFS_SKIP_SMUDGE=1 git clone git@hf.co:datasets/ccmusic-database/Guzheng_Tech99
cd Guzheng_Tech99
```

## Mirror

<https://www.modelscope.cn/datasets/ccmusic-database/Guzheng_Tech99>

## Additional Information

### Dataset Curators

Dichucheng Li

### Evaluation

[1] [Dichucheng Li, Mingjin Che, Wenwu Meng, Yulun Wu, Yi Yu, Fan Xia and Wei Li. "Frame-Level Multi-Label Playing Technique Detection Using Multi-Scale Network and Self-Attention Mechanism", in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2023).](https://arxiv.org/pdf/2303.13272.pdf)<br>

[2] <https://huggingface.co/ccmusic-database/Guzheng_Tech99>

### Citation Information

```bibtex
@inproceedings{LiCMWYXL23,
  author    = {Dichucheng Li and Mingjin Che and Wenwu Meng and Yulun Wu and Yi Yu and Fan Xia and Wei Li},
  title     = {Frame-Level Multi-Label Playing Technique Detection Using Multi-Scale Network and Self-Attention Mechanism},
  booktitle = {{IEEE} International Conference on Acoustics, Speech and Signal Processing {ICASSP} 2023, Rhodes Island, Greece, June 4-10, 2023},
  pages     = {1-5},
  publisher = {{IEEE}},
  year      = {2023}
}
```

### Contributions

A dataset for Guzheng playing technique frame-level detection