Datasets:

The full dataset viewer is not available (click to read why). Only showing a preview of the rows.

Error code: JobManagerCrashedError

Need help to make the dataset viewer work? Make sure to review how to configure the dataset viewer, and open a discussion for direct support.

image

image |

|---|

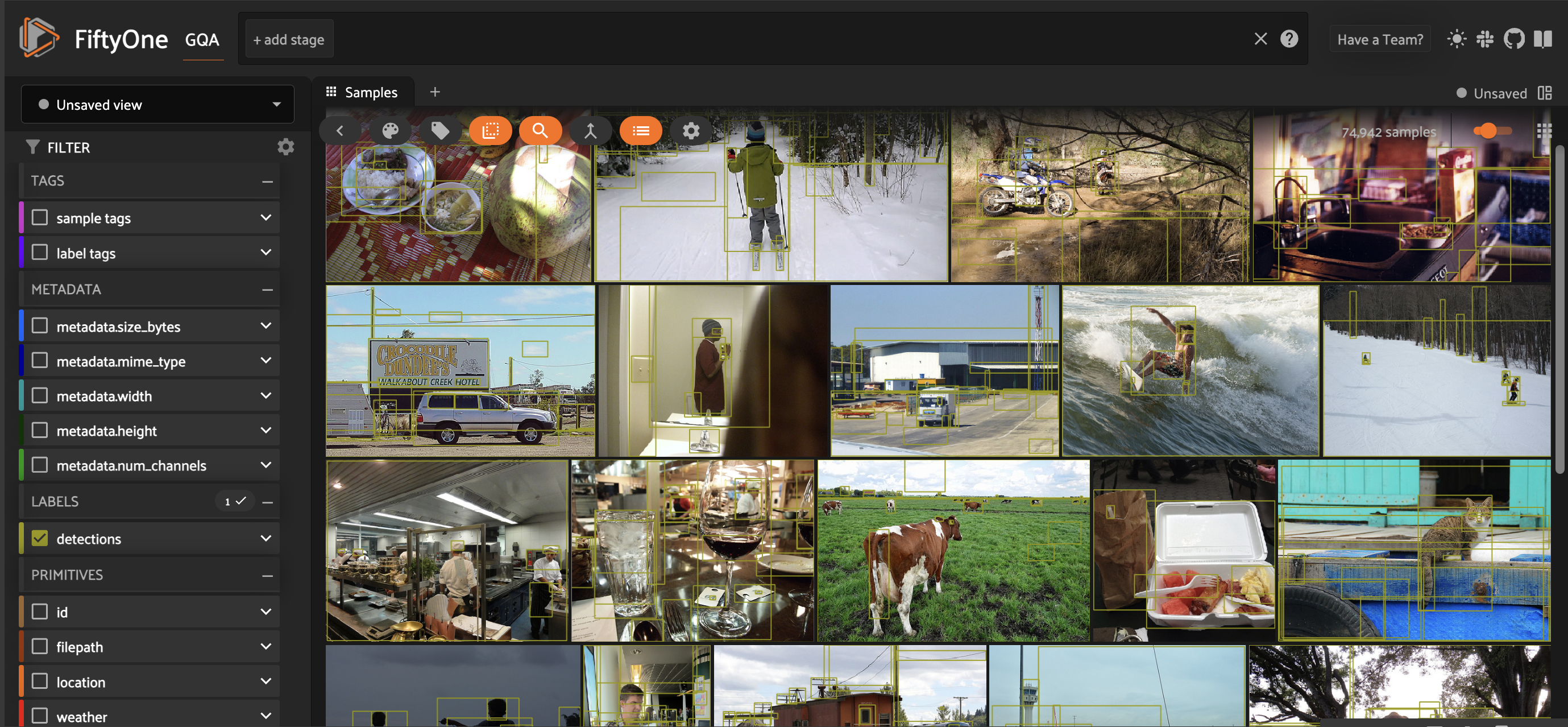

Dataset Card for GQA-35k

The GQA (Visual Reasoning in the Real World) dataset is a large-scale visual question answering dataset that includes scene graph annotations for each image.

This is a FiftyOne dataset with 35000 samples.

Note: This is a 35,000-sample subset. It does not contain the questions, only the scene graph annotations, stored as detection-level attributes.

You can find the recipe notebook for creating the dataset here

Installation

If you haven't already, install FiftyOne:

```bash
pip install -U fiftyone
```

Usage

```python
import fiftyone as fo
import fiftyone.utils.huggingface as fouh

# Load the dataset
# Note: other available arguments include 'max_samples', etc
dataset = fouh.load_from_hub("Voxel51/GQA-Scene-Graph")

# Launch the App
session = fo.launch_app(dataset)
```

Dataset Details

Dataset Description

Scene Graph Annotations

Each of the 113K images in GQA is associated with a detailed scene graph describing the objects, attributes and relations present.

The scene graphs are based on a cleaner version of the Visual Genome scene graphs.

For each image, the scene graph is provided as a dictionary (sceneGraph) containing:

- Image metadata like width, height, location, weather

- A dictionary (objects) mapping each object ID to its name, bounding box coordinates, attributes, and relations[6]

- Relations are represented as triples specifying the predicate (e.g. "holding", "on", "left of") and the target object ID[6]
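As a minimal sketch of the structure described above, the snippet below builds a small scene graph by hand and walks its relations. All values are made up for illustration, and the relation keys `name` (the predicate) and `object` (the target object ID) are assumptions about the raw GQA JSON rather than something stated in this card.

```python
# Hypothetical scene graph for one image; every value here is illustrative.
scene_graph = {
    "width": 640,
    "height": 480,
    "location": "beach",
    "weather": "sunny",
    "objects": {
        "101": {
            "name": "person",
            "x": 100, "y": 50, "w": 200, "h": 400,
            "attributes": ["standing"],
            # Assumed keys: "name" is the predicate, "object" is the target ID.
            "relations": [{"name": "holding", "object": "102"}],
        },
        "102": {
            "name": "apple",
            "x": 180, "y": 220, "w": 40, "h": 40,
            "attributes": ["red", "small"],
            "relations": [],
        },
    },
}


def iter_triples(graph):
    """Yield (source name, predicate, target name) for every relation."""
    objects = graph["objects"]
    for obj in objects.values():
        for rel in obj["relations"]:
            target = objects[rel["object"]]
            yield obj["name"], rel["name"], target["name"]


triples = list(iter_triples(scene_graph))
print(triples)
```

Resolving the target ID through the `objects` dictionary is what turns each relation into a readable triple such as person, holding, apple.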

Curated by: Drew Hudson & Christopher Manning

Shared by: Harpreet Sahota, Hacker-in-Residence at Voxel51

Language(s) (NLP): en

License:

- GQA annotations (scene graphs, questions, programs) are licensed under CC BY 4.0

- Images sourced from Visual Genome may have different licensing terms

Dataset Sources

- Repository: https://cs.stanford.edu/people/dorarad/gqa/

- Paper: https://arxiv.org/pdf/1902.09506

- Demo: https://cs.stanford.edu/people/dorarad/gqa/vis.html

Dataset Structure

Each scene graph contains the following fields:

| Field | Type | Description |
|---|---|---|
| location | str | Optional. The location of the image, e.g. kitchen, beach. |
| weather | str | Optional. The weather in the image, e.g. sunny, cloudy. |
| objects | dict | A dictionary from objectId to its object. |
| object | dict | A visual element in the image (node). |
| name | str | The name of the object, e.g. person, apple or sky. |
| x | int | Horizontal position of the object bounding box (top left). |
| y | int | Vertical position of the object bounding box (top left). |
| w | int | The object bounding box width in pixels. |
| h | int | The object bounding box height in pixels. |
| attributes | [str] | A list of all the attributes of the object, e.g. blue, small, running. |
| relations | [dict] | A list of all outgoing relations (edges) from the object (source). |
| relation | dict | A triple representing the relation between source and target objects. |

Note: The fields are nested. objects maps each objectId to an object; name, x, y, w, h, attributes and relations are fields of each object; and each entry in relations is a relation.
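The x, y, w and h fields are pixel values, whereas FiftyOne detections store bounding boxes as relative `[top-left-x, top-left-y, width, height]` values in `[0, 1]`. The sketch below shows one way to convert between the two. The helper name `to_relative_box` and the sample values are hypothetical, and whether the recipe notebook performs the conversion this way is an assumption.

```python
def to_relative_box(obj, image_width, image_height):
    """Convert a pixel box (x, y, w, h) to relative [x, y, w, h] in [0, 1]."""
    return [
        obj["x"] / image_width,
        obj["y"] / image_height,
        obj["w"] / image_width,
        obj["h"] / image_height,
    ]


# Hypothetical object entry from a scene graph for a 640x480 image.
obj = {"name": "apple", "x": 160, "y": 120, "w": 320, "h": 240}
box = to_relative_box(obj, image_width=640, image_height=480)
print(box)  # [0.25, 0.25, 0.5, 0.5]
```

The resulting list can be passed as the `bounding_box` argument of `fo.Detection`, together with the object name as its `label`.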

Citation

BibTeX:

```bibtex
@article{Hudson_2019,
   title={GQA: A New Dataset for Real-World Visual Reasoning and Compositional Question Answering},
   ISBN={9781728132938},
   url={http://dx.doi.org/10.1109/CVPR.2019.00686},
   DOI={10.1109/cvpr.2019.00686},
   journal={2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
   publisher={IEEE},
   author={Hudson, Drew A. and Manning, Christopher D.},
   year={2019},
   month={Jun}
}
```
