Modularized Cross-Embodiment Transformer (MXT) – Pretrained Models from Human2LocoMan

Yaru Niu¹*, Yunzhe Zhang¹*, Mingyang Yu¹, Changyi Lin¹, Chenhao Li¹, Yikai Wang¹, Yuxiang Yang², Wenhao Yu², Tingnan Zhang², Zhenzhen Li³, Jonathan Francis¹,³, Bingqing Chen³, Jie Tan², Ding Zhao¹

¹Carnegie Mellon University, ²Google DeepMind, ³Bosch Center for AI

*Equal contributions

Robotics: Science and Systems (RSS) 2025

Website | Paper | Code

Model Description

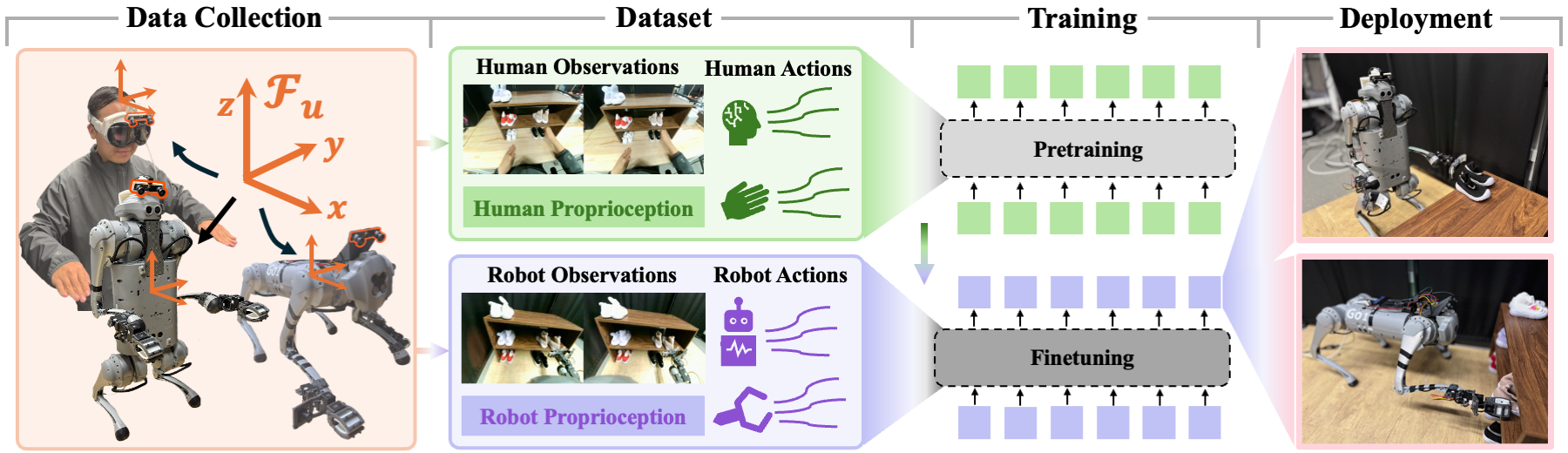

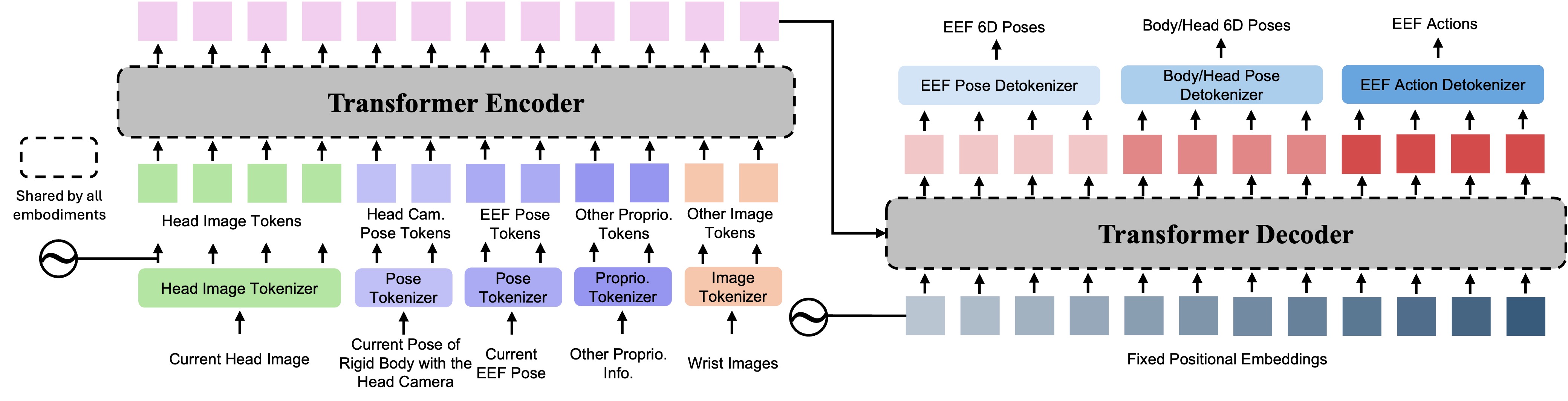

Our learning framework is designed to make efficient use of data from both human and robot sources while accounting for the modality-specific distributions unique to each embodiment. To this end, we propose a modularized design, the Modularized Cross-Embodiment Transformer (MXT). MXT consists of three main groups of modules: tokenizers, a Transformer trunk, and detokenizers. The tokenizers act as encoders that map embodiment-specific observation modalities to tokens in the latent space, and the detokenizers translate the trunk's output tokens into action modalities in each embodiment's action space. The tokenizers and detokenizers are specific to one embodiment and are reinitialized for each new embodiment, whereas the trunk is shared across all embodiments and reused to transfer the policy between them.
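To make this division of labor concrete, here is a minimal NumPy sketch of the tokenizer, trunk, and detokenizer structure. It is not the released MXT implementation. The single attention layer stands in for the full Transformer trunk, and the linear tokenizers/detokenizers, the mean pooling, `D_MODEL`, and every class and modality name (`MXTSketch`, `EmbodimentModules`, `hand_pose`, `eef_pose`, ...) are hypothetical. What it is meant to show is the structural point above: each embodiment owns its own tokenizers and detokenizers, while a single trunk object is shared by all of them.

```python
import numpy as np

D_MODEL = 32  # token width (hypothetical value, not taken from the paper)


class Linear:
    """Affine map y = x @ W + b with small random initialization."""

    def __init__(self, d_in, d_out, rng):
        self.W = rng.normal(0.0, 0.02, size=(d_in, d_out))
        self.b = np.zeros(d_out)

    def __call__(self, x):
        return x @ self.W + self.b


class Trunk:
    """Shared trunk: one single-head self-attention layer with a residual
    connection (a stand-in for a full Transformer stack)."""

    def __init__(self, d, rng):
        self.q, self.k, self.v = (Linear(d, d, rng) for _ in range(3))

    def __call__(self, tokens):
        # tokens: (n_tokens, d) -> (n_tokens, d)
        q, k, v = self.q(tokens), self.k(tokens), self.v(tokens)
        scores = q @ k.T / np.sqrt(tokens.shape[-1])
        scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
        weights = np.exp(scores)
        weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
        return tokens + weights @ v


class EmbodimentModules:
    """Embodiment-specific parts: one tokenizer per observation modality
    and one detokenizer per action modality."""

    def __init__(self, obs_dims, act_dims, d, rng):
        self.tokenizers = {m: Linear(n, d, rng) for m, n in obs_dims.items()}
        self.detokenizers = {m: Linear(d, n, rng) for m, n in act_dims.items()}


class MXTSketch:
    def __init__(self, trunk):
        self.trunk = trunk  # shared by every embodiment
        self.embodiments = {}

    def add_embodiment(self, name, obs_dims, act_dims, seed=0):
        # A new embodiment gets freshly initialized tokenizers/detokenizers,
        # while the existing trunk object is reused unchanged.
        rng = np.random.default_rng(seed)
        self.embodiments[name] = EmbodimentModules(obs_dims, act_dims, D_MODEL, rng)

    def act(self, name, obs):
        mods = self.embodiments[name]
        # One token per observation modality, in a fixed (sorted) order.
        tokens = np.stack([mods.tokenizers[m](obs[m]) for m in sorted(obs)])
        out = self.trunk(tokens)
        pooled = out.mean(axis=0)  # mean-pool output tokens (an assumption)
        return {m: det(pooled) for m, det in mods.detokenizers.items()}


# Hypothetical modality names and sizes, for illustration only.
trunk = Trunk(D_MODEL, np.random.default_rng(0))
model = MXTSketch(trunk)
model.add_embodiment("human", {"image": 64, "hand_pose": 18}, {"hand_pose": 18}, seed=1)
model.add_embodiment("robot", {"image": 64, "eef_pose": 12}, {"eef_pose": 12, "gripper": 2}, seed=2)

human_actions = model.act("human", {"image": np.ones(64), "hand_pose": np.zeros(18)})
robot_actions = model.act("robot", {"image": np.ones(64), "eef_pose": np.zeros(12)})
print({m: a.shape for m, a in robot_actions.items()})
```

Adding the robot embodiment leaves `model.trunk` untouched and only creates a new set of embodiment modules, which mirrors how a human-pretrained trunk can be reused while robot-specific tokenizers and detokenizers start from scratch.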

We provide MXT checkpoints pretrained on human data, along with their corresponding config files. Specifically, pour.ckpt is pretrained on the human pouring dataset; scoop.ckpt on the human scooping dataset; shoe_org.ckpt on the human unimanual and bimanual shoe organization dataset; and toy_collect.ckpt on the human unimanual and bimanual toy collection dataset.
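Since only the trunk is carried over to a new embodiment, a natural first step when reusing one of these checkpoints is to separate trunk weights from embodiment-specific ones. The sketch below does that on a flat `{name: value}` dictionary. It assumes, hypothetically, that trunk parameters share a `trunk.` key prefix; `TRUNK_PREFIX`, `split_state_dict`, and the toy keys are illustrative names, not the repository's API.

```python
# Pretrained checkpoints listed above, keyed by task.
PRETRAINED_CHECKPOINTS = {
    "pour": "pour.ckpt",
    "scoop": "scoop.ckpt",
    "shoe_org": "shoe_org.ckpt",
    "toy_collect": "toy_collect.ckpt",
}

# Hypothetical: assumes all trunk parameters share this key prefix.
TRUNK_PREFIX = "trunk."


def split_state_dict(state_dict, trunk_prefix=TRUNK_PREFIX):
    """Split a flat {name: value} dict into trunk weights (prefix stripped),
    which are kept for transfer, and embodiment-specific weights, which are
    set aside because the new embodiment's tokenizers/detokenizers are
    reinitialized."""
    trunk, embodiment_specific = {}, {}
    for name, value in state_dict.items():
        if name.startswith(trunk_prefix):
            trunk[name[len(trunk_prefix):]] = value
        else:
            embodiment_specific[name] = value
    return trunk, embodiment_specific


# Toy stand-in for a loaded checkpoint (real values would be tensors).
human_state = {
    "trunk.blocks.0.attn.weight": [[0.1, 0.2]],
    "trunk.blocks.0.attn.bias": [0.0],
    "tokenizers.hand_pose.weight": [[0.3]],
    "detokenizers.hand_pose.weight": [[0.4]],
}
trunk_weights, dropped = split_state_dict(human_state)
print(sorted(trunk_weights))
```

With PyTorch, the dictionary would typically come from `torch.load(path, map_location="cpu")`, and the trunk weights could then be passed to the new model's trunk via `nn.Module.load_state_dict`. The key layout of the released files (for example, whether weights are nested under a `state_dict` entry or use a different prefix) is not documented here, so inspect the keys before relying on this split.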

Citation

If you find this work helpful, please consider citing the paper:

@inproceedings{niu2025human2locoman,
  title={Human2LocoMan: Learning Versatile Quadrupedal Manipulation with Human Pretraining},
  author={Niu, Yaru and Zhang, Yunzhe and Yu, Mingyang and Lin, Changyi and Li, Chenhao and Wang, Yikai and Yang, Yuxiang and Yu, Wenhao and Zhang, Tingnan and Li, Zhenzhen and Francis, Jonathan and Chen, Bingqing and Tan, Jie and Zhao, Ding},
  booktitle={Robotics: Science and Systems (RSS)},
  year={2025}
}