gguf quantized version of krea

- run it with diffusers (see example inference below)

import torch
from transformers import T5EncoderModel
from diffusers import FluxPipeline, GGUFQuantizationConfig, FluxTransformer2DModel

model_path = "https://huggingface.co/calcuis/krea-gguf/blob/main/flux1-krea-dev-q2_k.gguf"

# load the gguf-quantized transformer; weights are dequantized to bfloat16 for compute
transformer = FluxTransformer2DModel.from_single_file(
    model_path,
    quantization_config=GGUFQuantizationConfig(compute_dtype=torch.bfloat16),
    torch_dtype=torch.bfloat16,
    config="callgg/krea-decoder",
    subfolder="transformer"
)

# load the gguf-quantized t5 text encoder
text_encoder = T5EncoderModel.from_pretrained(
    "chatpig/t5-v1_1-xxl-encoder-fp32-gguf",
    gguf_file="t5xxl-encoder-fp32-q2_k.gguf",
    torch_dtype=torch.bfloat16
)

# build the pipeline around the two quantized components
pipe = FluxPipeline.from_pretrained(
    "callgg/krea-decoder",
    transformer=transformer,
    text_encoder_2=text_encoder,
    torch_dtype=torch.bfloat16
)

pipe.enable_model_cpu_offload()  # or use pipe.to("cuda") if your gpu has enough vram

prompt = "a pig holding a sign that says hello world"

image = pipe(
    prompt,
    height=1024,
    width=1024,
    guidance_scale=2.5,
).images[0]

image.save("output.png")
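The comment on the offload line says you could switch to cuda with a good gpu. A minimal sketch of that choice, assuming a hypothetical helper `place_pipeline` and an illustrative 24 GB threshold (neither comes from this model card); `pipe.to("cuda")` and `pipe.enable_model_cpu_offload()` are the usual diffusers pipeline calls:

```python
def place_pipeline(pipe, vram_gb, min_vram_gb=24.0):
    """Keep the pipeline on the gpu when vram allows, otherwise use cpu offload.

    `min_vram_gb` is an illustrative threshold, not a measured requirement.
    """
    if vram_gb >= min_vram_gb:
        pipe.to("cuda")                # all weights stay on the gpu
        return "cuda"
    pipe.enable_model_cpu_offload()    # each sub-model moves to the gpu only while it runs
    return "cpu_offload"
```

With the pipeline from the example, `vram_gb` could be taken from `torch.cuda.get_device_properties(0).total_memory / 1024**3`, and the call would replace the `pipe.enable_model_cpu_offload()` line.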

- Prompt: a frog holding a sign that says hello world
- Prompt: a pig holding a sign that says hello world
- Prompt: a wolf holding a sign that says hello world
- Prompt: cute anime girl with massive fluffy fennec ears and a big fluffy tail blonde messy long hair blue eyes wearing a maid outfit with a long black gold leaf pattern dress and a white apron mouth open holding a fancy black forest cake with candles on top in the kitchen of an old dark Victorian mansion lit by candlelight with a bright window to the foggy forest and very expensive stuff everywhere
- Prompt: on a rainy night, a girl holds an umbrella and looks at the camera. The rain keeps falling.
- Prompt: drone shot of a volcano erupting with a pig walking on it
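To try several of the prompts above in one run, the single call from the inference example can be wrapped in a loop. This is only a sketch: `slugify` and `render_all` are hypothetical helpers, and `pipe` is assumed to be the `FluxPipeline` built in the example.

```python
import re

PROMPTS = [
    "a frog holding a sign that says hello world",
    "a pig holding a sign that says hello world",
    "a wolf holding a sign that says hello world",
]

def slugify(prompt, max_len=40):
    """Turn a prompt into a short, filesystem-safe file stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", prompt.lower()).strip("-")
    return slug[:max_len].rstrip("-")

def render_all(pipe, prompts, **kwargs):
    """Run the pipeline once per prompt and save each image as <slug>.png."""
    saved = []
    for prompt in prompts:
        image = pipe(prompt, **kwargs).images[0]
        name = f"{slugify(prompt)}.png"
        image.save(name)
        saved.append(name)
    return saved
```

For example, `render_all(pipe, PROMPTS, height=1024, width=1024, guidance_scale=2.5)` reuses the settings from the inference example.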

run it with gguf-node via comfyui

- drag krea to > ./ComfyUI/models/diffusion_models

- drag clip-l-v2 [248MB], t5xxl [2.75GB] to > ./ComfyUI/models/text_encoders

- drag pig [168MB] to > ./ComfyUI/models/vae

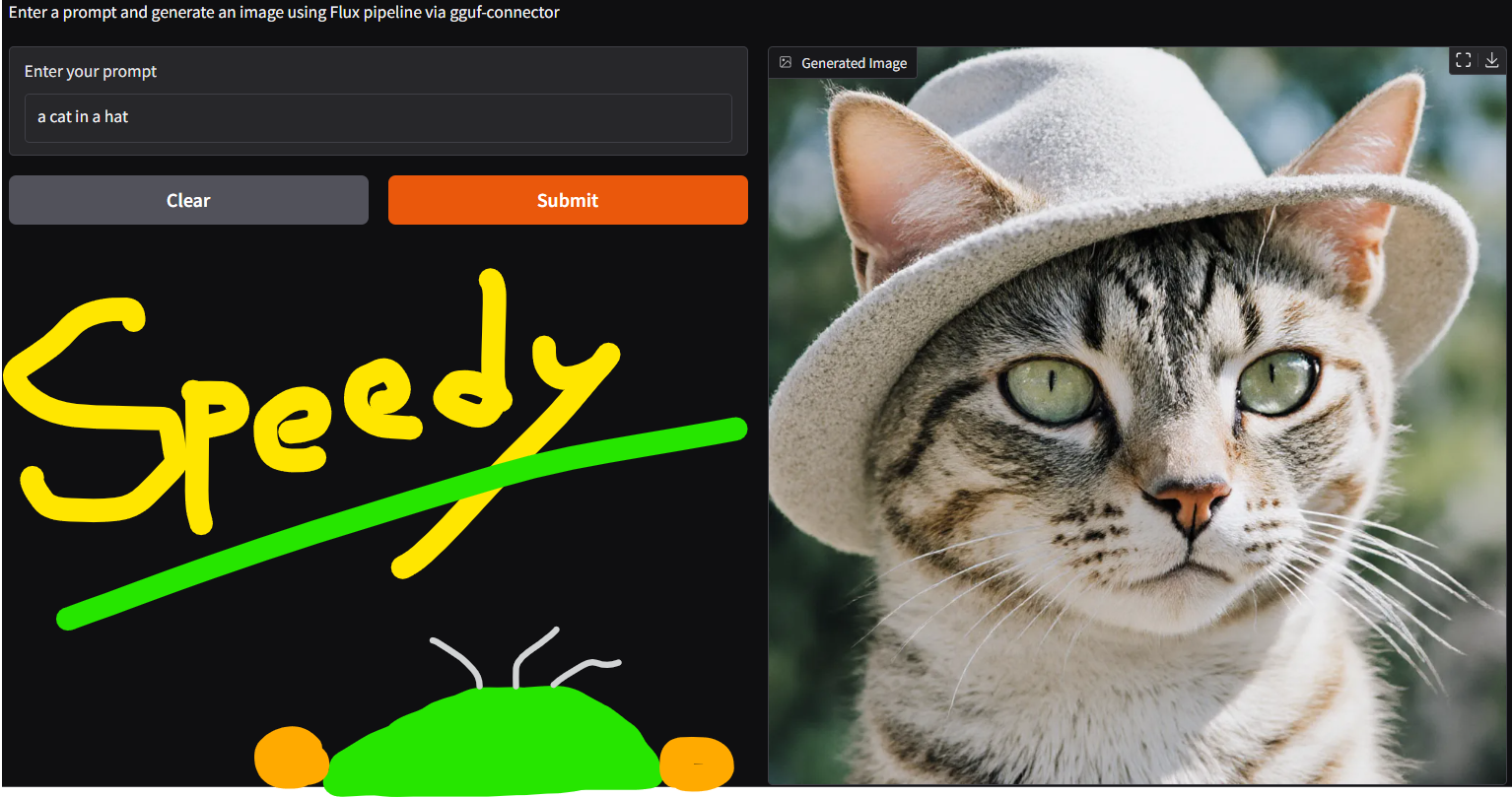
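The drag steps above can also be scripted. A minimal sketch, assuming a hypothetical `place_models` helper and role labels that are not part of comfyui or gguf-node; only the three target folders come from the steps above, and the source file paths are whatever you downloaded:

```python
import shutil
from pathlib import Path

# Target subfolders are taken from the steps above; the keys are
# hypothetical role labels used only in this sketch.
COMFY_DIRS = {
    "diffusion_model": "models/diffusion_models",  # krea
    "text_encoder": "models/text_encoders",        # clip-l-v2, t5xxl
    "vae": "models/vae",                           # pig
}

def place_models(comfy_root, files):
    """Copy each (role, source_path) pair into its ComfyUI model folder."""
    placed = []
    for role, src in files:
        dest_dir = Path(comfy_root) / COMFY_DIRS[role]
        dest_dir.mkdir(parents=True, exist_ok=True)  # create the folder if missing
        dest = dest_dir / Path(src).name
        shutil.copy2(src, dest)
        placed.append(dest)
    return placed
```

A call such as `place_models("./ComfyUI", [("diffusion_model", "flux1-krea-dev-q2_k.gguf")])` would copy the krea file into place; an unknown role raises `KeyError`, so a typo does not silently put a file in the wrong folder.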

run it with gguf-connector

- you can opt for a 2-bit, 4-bit or 8-bit model to dequantize while executing:

ggc k4

reference

- base model from black-forest-labs

- diffusers from huggingface

- comfyui from comfyanonymous

- gguf-node (pypi|repo|pack)

- gguf-connector (pypi)


Model tree for calcuis/krea-gguf

- Base model: black-forest-labs/FLUX.1-dev

- Finetuned: black-forest-labs/FLUX.1-Krea-dev