fastvlm-gguf

- run it with `gguf-connector`; simply execute the command below in console/terminal

ggc f6

GGUF file(s) available. Select which one to use:

- fastvlm-0.5b-iq4_nl.gguf

- fastvlm-0.5b-q4_0.gguf

- fastvlm-0.5b-q8_0.gguf

Enter your choice (1 to 3): _

- pick a `gguf` file in your current directory to interact with; nothing else is needed
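the selection step above can be pictured with a small Python sketch. This is only an illustration of the idea (scan the current directory for `.gguf` files, show a numbered menu, map the typed number back to a file); it is not gguf-connector's actual code, and the helper names `find_gguf_files`, `format_menu` and `pick` are hypothetical.

```python
import shutil
from pathlib import Path


def find_gguf_files(directory="."):
    """Return the sorted names of all .gguf files directly inside `directory`."""
    return sorted(p.name for p in Path(directory).glob("*.gguf") if p.is_file())


def format_menu(files):
    """Build a numbered menu loosely modelled on the prompt shown above."""
    if not files:
        return "No GGUF files found in this directory."
    lines = ["GGUF file(s) available. Select which one to use:"]
    lines += [f"{i}. {name}" for i, name in enumerate(files, start=1)]
    lines.append(f"Enter your choice (1 to {len(files)}): ")
    return "\n".join(lines)


def pick(files, choice):
    """Map a 1-based menu choice (typed text) to a file name, or None if invalid."""
    try:
        index = int(choice)
    except ValueError:
        return None
    return files[index - 1] if 1 <= index <= len(files) else None


if __name__ == "__main__":
    # Hypothetical preflight check: warn if the `ggc` command is not on PATH.
    if shutil.which("ggc") is None:
        print("ggc not found on PATH; is gguf-connector installed?")
    print(format_menu(find_gguf_files()))
```

running the sketch in the folder that holds the downloaded files is a quick way to confirm they will be visible before launching `ggc f6`.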

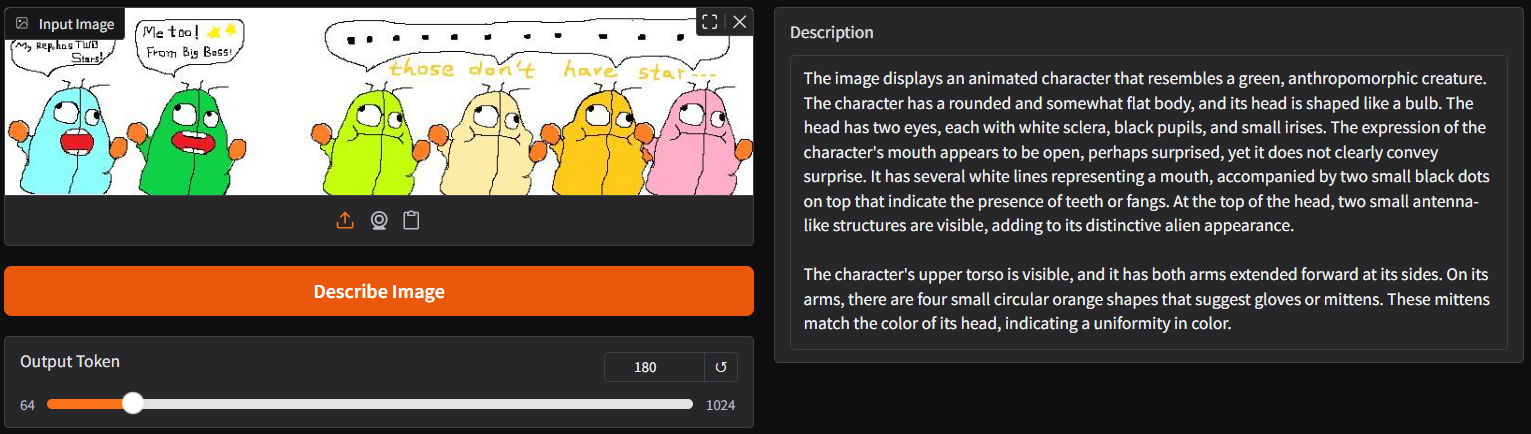

with the latest update, you can customize the number of output tokens (see picture)


connector f5 (alternative 1)

ggc f5

- note: `f5` is different from `f6` (above) and `f9` (below); you don't need gguf files with `f5`

connector f7 (alternative 2)*

ggc f7

*real-time screen describer; live captioning

connector f9 - advanced mode (alternative 3)**

ggc f9

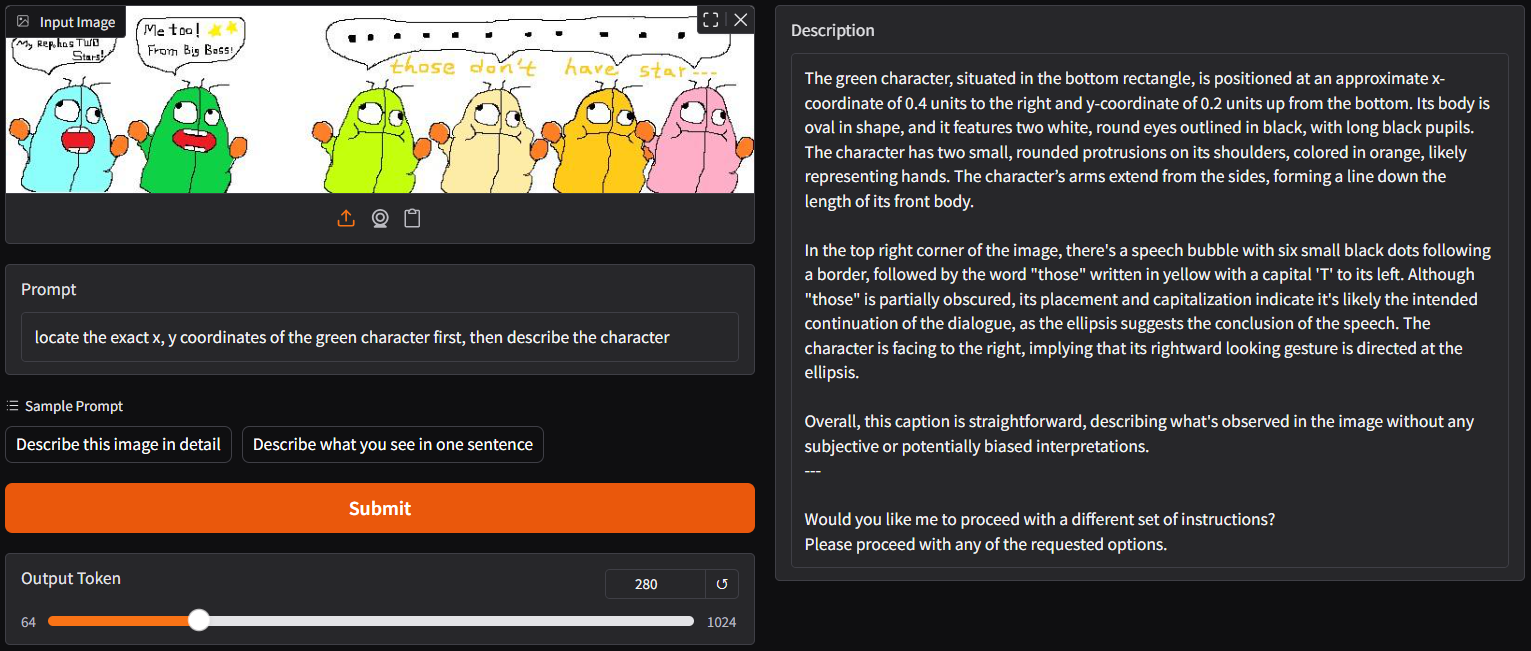

**in advanced mode, you can specify your own text prompt along with the picture input (see above)


reference


- base model: apple/FastVLM-0.5B