This model's origin might be a scam

Sorry for posting this here, bartowski, but I think this is worth discussing for anybody passing by. I don't expect you to do anything about this model; in fact, I think you should leave it up for future reference.

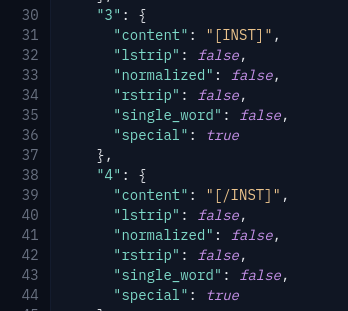

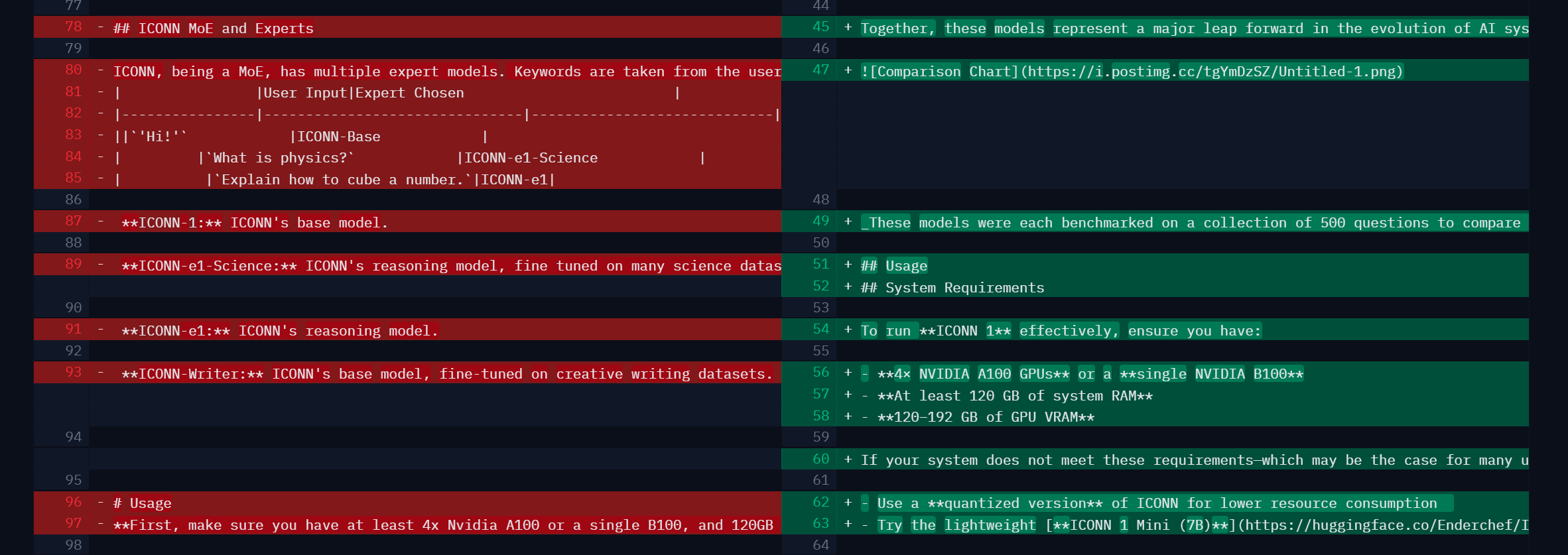

Anyway, the original model was deleted after two other users and I started digging through its files to check whether it was a genuinely new model trained from the ground up; even the posts themselves had been deleted beforehand. The mergekit settings and tokens used suggested otherwise. My contribution was spotting that the config used the Mistral Instruct template, which isn't really used outside of Mistral models.
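For anyone who wants to repeat the template check on a downloaded copy, here is a minimal sketch. It assumes the template in question is the `chat_template` string in the model's `tokenizer_config.json` (the post doesn't say which config file it was), and it only looks for the `[INST]` / `[/INST]` markers of the Mistral Instruct prompt format. The function name is hypothetical. A match is a hint about the model's lineage, not proof.

```python
import json
from pathlib import Path

# Marker strings of the Mistral Instruct prompt format: [INST] ... [/INST]
MISTRAL_INSTRUCT_MARKERS = ("[INST]", "[/INST]")


def uses_mistral_instruct_template(tokenizer_config_path):
    """Hypothetical helper: return True if the chat_template in a
    tokenizer_config.json contains both Mistral Instruct markers."""
    config = json.loads(Path(tokenizer_config_path).read_text(encoding="utf-8"))
    template = config.get("chat_template") or ""
    # chat_template may also be a list of {"name": ..., "template": ...} entries.
    if isinstance(template, list):
        template = "\n".join(entry.get("template", "") for entry in template)
    return all(marker in template for marker in MISTRAL_INSTRUCT_MARKERS)
```

A missing `chat_template` is treated as "no match" rather than an error, since older or merged repos sometimes omit it.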

Tag, you're it. Anything you wanna contribute?

@Delta-Vector (I think you were the second guy in the thread, but correct me if I'm wrong)

Yep, can confirm. If you look at the -E1 checkpoint up on their HF org, you can see a mergekit version in the safetensors. They may have done pretraining on the model, which is what you're supposed to do with a clown-car MoE, but given that they didn't even bother removing the mergekit addition from their config, I find it highly likely it's just a grift.
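The safetensors check described above can be sketched in a few lines. A `.safetensors` file starts with an 8-byte little-endian header length followed by a JSON header, and that header may carry an optional `"__metadata__"` map of strings, which is the most likely place for a version string like this. This sketch assumes the shards are already downloaded to a local directory; the helper names are hypothetical, and because the exact key mergekit writes isn't stated here, it just searches keys and values for "mergekit".

```python
import json
import struct
from pathlib import Path


def read_safetensors_metadata(path):
    """Return the optional "__metadata__" dict from a .safetensors header.

    Layout: an 8-byte little-endian unsigned length N, then N bytes of
    UTF-8 JSON describing the tensors (plus the optional "__metadata__").
    """
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len).decode("utf-8"))
    return header.get("__metadata__", {})


def find_mergekit_traces(metadata):
    """Hypothetical helper: keep metadata entries that mention "mergekit"."""
    return {
        key: value
        for key, value in metadata.items()
        if "mergekit" in key.lower() or "mergekit" in str(value).lower()
    }


def scan_shards(model_dir):
    """Check every *.safetensors shard in a local model directory."""
    hits = {}
    for shard in sorted(Path(model_dir).glob("*.safetensors")):
        traces = find_mergekit_traces(read_safetensors_metadata(shard))
        if traces:
            hits[shard.name] = traces
    return hits
```

Only the header is read, so this stays fast even on multi-gigabyte shards. Usage would be something like `scan_shards("path/to/downloaded/model")`, where the path is a placeholder.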

Also, mergekit outputs the Mixtral architecture regardless of the source models' architecture.
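Because of that, a `config.json` declaring Mixtral is consistent with a mergekit clown-car MoE but doesn't prove one; genuine Mixtral fine-tunes look the same. A minimal sketch for pulling out the relevant fields, assuming a standard Hugging Face `config.json` (the helper names are hypothetical, and the "mergekit" key search is a guess, since the post doesn't name the leftover key):

```python
import json
from pathlib import Path


def summarize_moe_config(config_path):
    """Pull out the config.json fields relevant to spotting a mergekit MoE."""
    config = json.loads(Path(config_path).read_text(encoding="utf-8"))
    return {
        "architectures": config.get("architectures", []),
        "model_type": config.get("model_type"),
        # Mixtral-style configs store the expert count and router top-k here.
        "num_local_experts": config.get("num_local_experts"),
        "num_experts_per_tok": config.get("num_experts_per_tok"),
        # Hypothetical check: any top-level key that mentions mergekit.
        "mergekit_keys": sorted(k for k in config if "mergekit" in k.lower()),
    }


def is_mixtral_style(summary):
    """True if the config declares the Mixtral architecture."""
    return (
        summary["model_type"] == "mixtral"
        or "MixtralForCausalLM" in summary["architectures"]
    )
```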

Woof. Why some people do these things is beyond me. Thanks for exposing these frauds.

Thank you so much for these reports!

I've gated the model with a link to this discussion, since I'd rather not suppress the info, but I do want to try to warn people before they blindly download it and waste their bandwidth!!

Real shame... Hope it was just an innocent accident rather than anything malicious!

My guess is they made a doubled Mixtral MoE and ran some 1M dataset through it. A lot of claims and not a whole lot of substance. Absolute blast from the past.