|

|

--- |

|

|

quantized_by: anikifoss |

|

|

pipeline_tag: text-generation |

|

|

base_model: deepseek-ai/DeepSeek-R1-0528 |

|

|

license: mit |

|

|

base_model_relation: quantized |

|

|

tags: |

|

|

- mla |

|

|

- conversational |

|

|

- ik_llama.cpp |

|

|

--- |

|

|

|

|

|

|

|

|

# Model Card |

|

|

|

|

|

Dynamic quantization of DeepSeek-R1-0528 using optimized format only available on **ik_llama** fork, resized to run with 24GB to 32GB VRAM and 512GB RAM systems while providing the best balance between quality and performance for coding. |

|

|

|

|

|

THIS QUANT USES OPTIMIZED FORMAT THAT IS ONLY AVAILABLE ON **IK_LLAMA** FORK!!! |

|

|

|

|

|

Note that **ik_llama** can run all the **llama.cpp** quants, while adding support for interleaved formats (_R4 and _R8). |

|

|

|

|

|

See [this detailed guide](https://github.com/ikawrakow/ik_llama.cpp/discussions/258) on how to setup an run **ik_llama**. |

|

|

|

|

|

## Run |

|

|

Use the following command lines to run the model (tweak the command to further customize it to your needs). |

|

|

|

|

|

### 24GB VRAM |

|

|

``` |

|

|

./build/bin/llama-server \ |

|

|

--alias anikifoss/DeepSeek-R1-0528-DQ4_K_R4 \ |

|

|

--model /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00001-of-00010.gguf \ |

|

|

--temp 0.5 --top-k 0 --top-p 1.0 --min-p 0.1 --repeat-penalty 1.0 \ |

|

|

--ctx-size 41000 \ |

|

|

-ctk q8_0 \ |

|

|

-mla 2 -fa \ |

|

|

-amb 512 \ |

|

|

-b 1024 -ub 1024 \ |

|

|

-fmoe \ |

|

|

--n-gpu-layers 99 \ |

|

|

--override-tensor exps=CPU,attn_kv_b=CPU \ |

|

|

--parallel 1 \ |

|

|

--threads 32 \ |

|

|

--host 127.0.0.1 \ |

|

|

--port 8090 |

|

|

``` |

|

|

|

|

|

### 32GB VRAM |

|

|

``` |

|

|

./build/bin/llama-server \ |

|

|

--alias anikifoss/DeepSeek-R1-0528-DQ4_K_R4 \ |

|

|

--model /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00001-of-00010.gguf \ |

|

|

--temp 0.5 --top-k 0 --top-p 1.0 --min-p 0.1 --repeat-penalty 1.0 \ |

|

|

--ctx-size 75000 \ |

|

|

-ctk f16 \ |

|

|

-mla 2 -fa \ |

|

|

-amb 1024 \ |

|

|

-b 2048 -ub 2048 \ |

|

|

-fmoe \ |

|

|

--n-gpu-layers 99 \ |

|

|

--override-tensor exps=CPU,attn_kv_b=CPU \ |

|

|

--parallel 1 \ |

|

|

--threads 32 \ |

|

|

--host 127.0.0.1 \ |

|

|

--port 8090 |

|

|

``` |

|

|

|

|

|

### Customization |

|

|

- Replace `/mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4` with the location of the model (where you downloaded it) |

|

|

- Adjust `--threads` to the number of physical cores on your system |

|

|

- Tweak these to your preference `--temp 0.5 --top-k 0 --top-p 1.0 --min-p 0.1 --repeat-penalty 1.0` |

|

|

- Add `--no-mmap` to force the model to be fully loaded into memory (this is especially important when running inference speed benchmarks) |

|

|

- You can increase `--parallel`, but doing so will cause your context buffer (set via `--ctx-size`) to be shared between tasks executing in parallel |

|

|

|

|

|

TODO: |

|

|

- Experiment with new `-mla 3` (recent **ik_llama** patches enable new MLA implementation on CUDA) |

|

|

- Re-evaluate `-rtr` (in case Q8_0 can be repacked as Q8_0_R8 after some of the recent patches) |

|

|

|

|

|

### Inference Performance vs VRAM Considerations |

|

|

You can try the following to squeeze out more context on your system: |

|

|

- Running with `-ctk q8_0` can save some VRAM, but is a little slower on the target system |

|

|

- Reducing buffers can free up a bit more VRAM at a very minor cost to performance (`-amb 512` and `-b 1024 -ub 1024`) |

|

|

- Try `attn_kv_b=CPU` vs `attn_k_b=CPU,attn_v_b=CPU` to see which gives you the best performance |

|

|

- Switching to an IQ quant will save some memory at the cost of performance (*very very roughly* 10% memory savings at the cost of 10% in inference performance) |

|

|

|

|

|

## Optimizing for Coding |

|

|

Smaller quants, like `UD-Q2_K_XL` are much faster when generating tokens, but often produce code that fails to run or contains bugs. Based on empirical observations, coding seems to be strongly affected by the model quantization. So we use larger quantization where it matters to reduce perplexity while remaining within the target system constraints of 24GB-32GB VRAM, 512GB RAM. |

|

|

|

|

|

### Quantization Approach |

|

|

When running with **Flash MLA** optimization enabled, **ik_llama** will unpack **attention** tensors into `Q8_0`, so we match that in our model (similar to ubergarm's ik_llama.cpp quants). We also keep all the other small tensors as `Q8_0` while also leaving any `F32` tensors untouched. The MoE tensors make up the bulk of the model. The **ffn_down_exps** tensors are especially sensitive to quantization (we borrow this idea from `unsloth` quants), so we quantize them as `Q6_K_R4`. Finally, all the other large MoE tensors (ffn_up_exps, ffn_gate_exps) are quantized as `Q4_K_R4` |

|

|

|

|

|

Quantization Summary: |

|

|

- Keep all the small `F32` tensors untouched |

|

|

- Quantize all the **attention** and related tensors to `Q8_0` |

|

|

- Quantize all the **ffn_down_exps** tensors to `Q6_K_R4` |

|

|

- Quantize all the **ffn_up_exps** and **ffn_gate_exps** tensors to `Q4_K_R4` |

|

|

|

|

|

The **attn_kv_b** tensors are included in the original model, but they contain the same information as **attn_k_b** and **attn_v_b** tensors. Some quants, like `unsloth`, remove **attn_k_b** and **attn_v_b** tensors altogether. We keep all these tensors for completeness, but push **attn_kv_b** out of VRAM with `attn_kv_b=CPU`, since `ik_llama` prefers to use **attn_k_b** and **attn_v_b** when all the tensors are available. This behavior may change between releases, so try with `attn_k_b=CPU,attn_v_b=CPU` instead and check which option gives you the best performance! |

|

|

|

|

|

### No imatrix |

|

|

Generally, imatrix is not recommended for Q4 and larger quants. The problem with imatrix is that it will guide what model remembers, while anything not covered by the text sample used to generate the imartrix is more likely to be forgotten. For example, an imatrix derived from wikipedia sample is likely to negatively affect tasks like coding. In other words, while imatrix can improve specific benchmarks, that are similar to the imatrix input sample, it will also skew the model performance towards tasks similar to the imatrix sample at the expense of other tasks. |

|

|

|

|

|

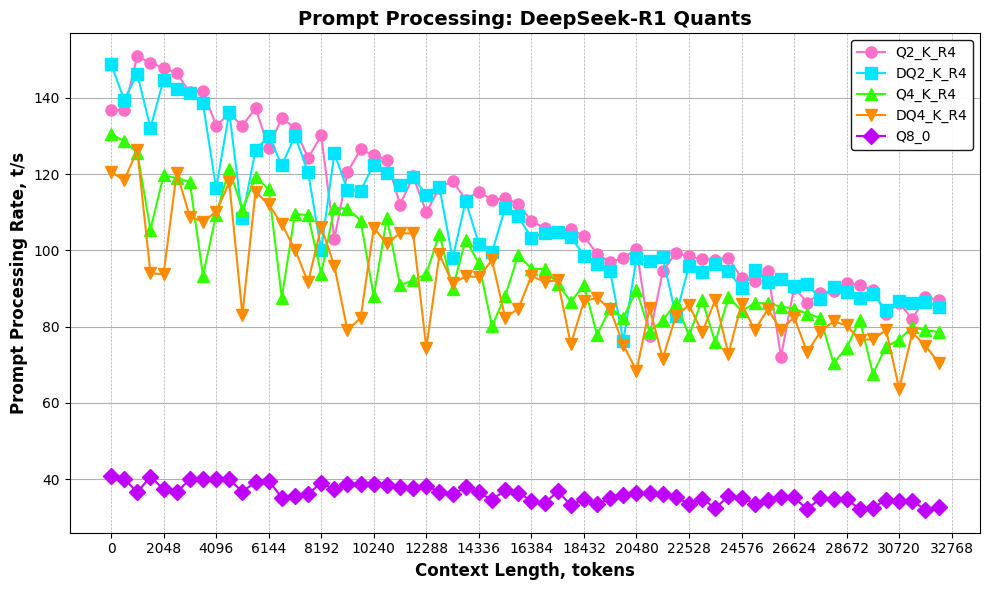

## Benchmarks |

|

|

**Benchmark System:** Threadripper Pro 7975WX, 768GB DDR5@5600MHz, RTX 5090 32GB |

|

|

|

|

|

The following quants were tested: |

|

|

- **Q2_K_R4** (attention - `Q8_0`, all MoE - `Q2_K_R4`) |

|

|

- **DQ2_K_R4** (attention - `Q8_0`, ffn_down_exps - `Q3_K_R4`, ffn_up_exps and ffn_gate_exps - `Q2_K_R4`) |

|

|

- **Q4_K_R4** (attention - `Q8_0`, all MoE - `Q4_K_R4`) |

|

|

- **DQ4_K_R4** (attention - `Q8_0`, ffn_down_exps - `Q6_K_R4`, ffn_up_exps and ffn_gate_exps - `Q4_K_R4`) |

|

|

- **Q8_0** (all - `Q8_0`) |

|

|

|

|

|

### Prompt Processing |

|

|

|

|

|

|

|

|

### Token Generation |

|

|

|

|

|

|

|

|

## Perplexity |

|

|

|

|

|

### Absolute Perplexity |

|

|

|

|

|

|

|

|

### Perplexity Relative to Q8_0 |

|

|

|

|

|

|

|

|

### Perplexity Numbers |

|

|

| Quant | Final estimate PPL | Difference from Q8_0 | |

|

|

|----------|--------------------|----------------------| |

|

|

| Q8_0 | 3.5184 +/- 0.01977 | +0.0000 | |

|

|

| DQ4_K_R4 | 3.5308 +/- 0.01986 | +0.0124 | |

|

|

| Q4_K_R4 | 3.5415 +/- 0.01993 | +0.0231 | |

|

|

| DQ2_K_R4 | 3.8099 +/- 0.02187 | +0.2915 | |

|

|

| Q2_K_R4 | 3.9535 +/- 0.02292 | +0.4351 | |

|

|

|

|

|

## GGUF-DUMP |

|

|

|

|

|

<details> |

|

|

|

|

|

<summary> |

|

|

Click here to see the output of `gguf-dump` |

|

|

</summary> |

|

|

|

|

|

```text |

|

|

INFO:gguf-dump:* Loading: /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00001-of-00010.gguf |

|

|

* File is LITTLE endian, script is running on a LITTLE endian host. |

|

|

* Dumping 49 key/value pair(s) |

|

|

1: UINT32 | 1 | GGUF.version = 3 |

|

|

2: UINT64 | 1 | GGUF.tensor_count = 186 |

|

|

3: UINT64 | 1 | GGUF.kv_count = 46 |

|

|

4: STRING | 1 | general.architecture = 'deepseek2' |

|

|

5: STRING | 1 | general.type = 'model' |

|

|

6: STRING | 1 | general.name = 'DeepSeek R1 0528 Bf16' |

|

|

7: STRING | 1 | general.size_label = '256x21B' |

|

|

8: UINT32 | 1 | deepseek2.block_count = 61 |

|

|

9: UINT32 | 1 | deepseek2.context_length = 163840 |

|

|

10: UINT32 | 1 | deepseek2.embedding_length = 7168 |

|

|

11: UINT32 | 1 | deepseek2.feed_forward_length = 18432 |

|

|

12: UINT32 | 1 | deepseek2.attention.head_count = 128 |

|

|

13: UINT32 | 1 | deepseek2.attention.head_count_kv = 128 |

|

|

14: FLOAT32 | 1 | deepseek2.rope.freq_base = 10000.0 |

|

|

15: FLOAT32 | 1 | deepseek2.attention.layer_norm_rms_epsilon = 9.999999974752427e-07 |

|

|

16: UINT32 | 1 | deepseek2.expert_used_count = 8 |

|

|

17: UINT32 | 1 | general.file_type = 214 |

|

|

18: UINT32 | 1 | deepseek2.leading_dense_block_count = 3 |

|

|

19: UINT32 | 1 | deepseek2.vocab_size = 129280 |

|

|

20: UINT32 | 1 | deepseek2.attention.q_lora_rank = 1536 |

|

|

21: UINT32 | 1 | deepseek2.attention.kv_lora_rank = 512 |

|

|

22: UINT32 | 1 | deepseek2.attention.key_length = 192 |

|

|

23: UINT32 | 1 | deepseek2.attention.value_length = 128 |

|

|

24: UINT32 | 1 | deepseek2.expert_feed_forward_length = 2048 |

|

|

25: UINT32 | 1 | deepseek2.expert_count = 256 |

|

|

26: UINT32 | 1 | deepseek2.expert_shared_count = 1 |

|

|

27: FLOAT32 | 1 | deepseek2.expert_weights_scale = 2.5 |

|

|

28: BOOL | 1 | deepseek2.expert_weights_norm = True |

|

|

29: UINT32 | 1 | deepseek2.expert_gating_func = 2 |

|

|

30: UINT32 | 1 | deepseek2.rope.dimension_count = 64 |

|

|

31: STRING | 1 | deepseek2.rope.scaling.type = 'yarn' |

|

|

32: FLOAT32 | 1 | deepseek2.rope.scaling.factor = 40.0 |

|

|

33: UINT32 | 1 | deepseek2.rope.scaling.original_context_length = 4096 |

|

|

34: FLOAT32 | 1 | deepseek2.rope.scaling.yarn_log_multiplier = 0.10000000149011612 |

|

|

35: STRING | 1 | tokenizer.ggml.model = 'gpt2' |

|

|

36: STRING | 1 | tokenizer.ggml.pre = 'deepseek-v3' |

|

|

37: [STRING] | 129280 | tokenizer.ggml.tokens |

|

|

38: [INT32] | 129280 | tokenizer.ggml.token_type |

|

|

39: [STRING] | 127741 | tokenizer.ggml.merges |

|

|

40: UINT32 | 1 | tokenizer.ggml.bos_token_id = 0 |

|

|

41: UINT32 | 1 | tokenizer.ggml.eos_token_id = 1 |

|

|

42: UINT32 | 1 | tokenizer.ggml.padding_token_id = 1 |

|

|

43: BOOL | 1 | tokenizer.ggml.add_bos_token = True |

|

|

44: BOOL | 1 | tokenizer.ggml.add_eos_token = False |

|

|

45: STRING | 1 | tokenizer.chat_template = '{% if not add_generation_prompt is defined %}{% set add_gene' |

|

|

46: UINT32 | 1 | general.quantization_version = 2 |

|

|

47: UINT16 | 1 | split.no = 0 |

|

|

48: UINT16 | 1 | split.count = 10 |

|

|

49: INT32 | 1 | split.tensors.count = 1147 |

|

|

* Dumping 186 tensor(s) |

|

|

1: 926679040 | 7168, 129280, 1, 1 | Q8_0 | token_embd.weight |

|

|

2: 7168 | 7168, 1, 1, 1 | F32 | blk.0.attn_norm.weight |

|

|

3: 132120576 | 18432, 7168, 1, 1 | Q8_0 | blk.0.ffn_down.weight |

|

|

4: 132120576 | 7168, 18432, 1, 1 | Q8_0 | blk.0.ffn_gate.weight |

|

|

5: 132120576 | 7168, 18432, 1, 1 | Q8_0 | blk.0.ffn_up.weight |

|

|

6: 7168 | 7168, 1, 1, 1 | F32 | blk.0.ffn_norm.weight |

|

|

7: 512 | 512, 1, 1, 1 | F32 | blk.0.attn_kv_a_norm.weight |

|

|

8: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.0.attn_kv_a_mqa.weight |

|

|

9: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.0.attn_kv_b.weight |

|

|

10: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.0.attn_k_b.weight |

|

|

11: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.0.attn_v_b.weight |

|

|

12: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.0.attn_output.weight |

|

|

13: 1536 | 1536, 1, 1, 1 | F32 | blk.0.attn_q_a_norm.weight |

|

|

14: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.0.attn_q_a.weight |

|

|

15: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.0.attn_q_b.weight |

|

|

16: 7168 | 7168, 1, 1, 1 | F32 | blk.1.attn_norm.weight |

|

|

17: 132120576 | 18432, 7168, 1, 1 | Q8_0 | blk.1.ffn_down.weight |

|

|

18: 132120576 | 7168, 18432, 1, 1 | Q8_0 | blk.1.ffn_gate.weight |

|

|

19: 132120576 | 7168, 18432, 1, 1 | Q8_0 | blk.1.ffn_up.weight |

|

|

20: 7168 | 7168, 1, 1, 1 | F32 | blk.1.ffn_norm.weight |

|

|

21: 512 | 512, 1, 1, 1 | F32 | blk.1.attn_kv_a_norm.weight |

|

|

22: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.1.attn_kv_a_mqa.weight |

|

|

23: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.1.attn_kv_b.weight |

|

|

24: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.1.attn_k_b.weight |

|

|

25: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.1.attn_v_b.weight |

|

|

26: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.1.attn_output.weight |

|

|

27: 1536 | 1536, 1, 1, 1 | F32 | blk.1.attn_q_a_norm.weight |

|

|

28: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.1.attn_q_a.weight |

|

|

29: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.1.attn_q_b.weight |

|

|

30: 7168 | 7168, 1, 1, 1 | F32 | blk.2.attn_norm.weight |

|

|

31: 132120576 | 18432, 7168, 1, 1 | Q8_0 | blk.2.ffn_down.weight |

|

|

32: 132120576 | 7168, 18432, 1, 1 | Q8_0 | blk.2.ffn_gate.weight |

|

|

33: 132120576 | 7168, 18432, 1, 1 | Q8_0 | blk.2.ffn_up.weight |

|

|

34: 7168 | 7168, 1, 1, 1 | F32 | blk.2.ffn_norm.weight |

|

|

35: 512 | 512, 1, 1, 1 | F32 | blk.2.attn_kv_a_norm.weight |

|

|

36: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.2.attn_kv_a_mqa.weight |

|

|

37: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.2.attn_kv_b.weight |

|

|

38: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.2.attn_k_b.weight |

|

|

39: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.2.attn_v_b.weight |

|

|

40: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.2.attn_output.weight |

|

|

41: 1536 | 1536, 1, 1, 1 | F32 | blk.2.attn_q_a_norm.weight |

|

|

42: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.2.attn_q_a.weight |

|

|

43: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.2.attn_q_b.weight |

|

|

44: 256 | 256, 1, 1, 1 | F32 | blk.3.exp_probs_b.bias |

|

|

45: 1835008 | 7168, 256, 1, 1 | F32 | blk.3.ffn_gate_inp.weight |

|

|

46: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.3.ffn_down_shexp.weight |

|

|

47: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.3.ffn_gate_shexp.weight |

|

|

48: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.3.ffn_up_shexp.weight |

|

|

49: 512 | 512, 1, 1, 1 | F32 | blk.3.attn_kv_a_norm.weight |

|

|

50: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.3.attn_kv_a_mqa.weight |

|

|

51: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.3.attn_kv_b.weight |

|

|

52: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.3.attn_k_b.weight |

|

|

53: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.3.attn_v_b.weight |

|

|

54: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.3.attn_output.weight |

|

|

55: 1536 | 1536, 1, 1, 1 | F32 | blk.3.attn_q_a_norm.weight |

|

|

56: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.3.attn_q_a.weight |

|

|

57: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.3.attn_q_b.weight |

|

|

58: 7168 | 7168, 1, 1, 1 | F32 | blk.3.attn_norm.weight |

|

|

59: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.3.ffn_down_exps.weight |

|

|

60: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.3.ffn_gate_exps.weight |

|

|

61: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.3.ffn_up_exps.weight |

|

|

62: 7168 | 7168, 1, 1, 1 | F32 | blk.3.ffn_norm.weight |

|

|

63: 256 | 256, 1, 1, 1 | F32 | blk.4.exp_probs_b.bias |

|

|

64: 1835008 | 7168, 256, 1, 1 | F32 | blk.4.ffn_gate_inp.weight |

|

|

65: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.4.ffn_down_shexp.weight |

|

|

66: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.4.ffn_gate_shexp.weight |

|

|

67: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.4.ffn_up_shexp.weight |

|

|

68: 512 | 512, 1, 1, 1 | F32 | blk.4.attn_kv_a_norm.weight |

|

|

69: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.4.attn_kv_a_mqa.weight |

|

|

70: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.4.attn_kv_b.weight |

|

|

71: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.4.attn_k_b.weight |

|

|

72: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.4.attn_v_b.weight |

|

|

73: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.4.attn_output.weight |

|

|

74: 1536 | 1536, 1, 1, 1 | F32 | blk.4.attn_q_a_norm.weight |

|

|

75: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.4.attn_q_a.weight |

|

|

76: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.4.attn_q_b.weight |

|

|

77: 7168 | 7168, 1, 1, 1 | F32 | blk.4.attn_norm.weight |

|

|

78: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.4.ffn_down_exps.weight |

|

|

79: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.4.ffn_gate_exps.weight |

|

|

80: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.4.ffn_up_exps.weight |

|

|

81: 7168 | 7168, 1, 1, 1 | F32 | blk.4.ffn_norm.weight |

|

|

82: 512 | 512, 1, 1, 1 | F32 | blk.5.attn_kv_a_norm.weight |

|

|

83: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.5.attn_kv_a_mqa.weight |

|

|

84: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.5.attn_kv_b.weight |

|

|

85: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.5.attn_k_b.weight |

|

|

86: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.5.attn_v_b.weight |

|

|

87: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.5.attn_output.weight |

|

|

88: 1536 | 1536, 1, 1, 1 | F32 | blk.5.attn_q_a_norm.weight |

|

|

89: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.5.attn_q_a.weight |

|

|

90: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.5.attn_q_b.weight |

|

|

91: 256 | 256, 1, 1, 1 | F32 | blk.5.exp_probs_b.bias |

|

|

92: 1835008 | 7168, 256, 1, 1 | F32 | blk.5.ffn_gate_inp.weight |

|

|

93: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.5.ffn_down_shexp.weight |

|

|

94: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.5.ffn_gate_shexp.weight |

|

|

95: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.5.ffn_up_shexp.weight |

|

|

96: 7168 | 7168, 1, 1, 1 | F32 | blk.5.attn_norm.weight |

|

|

97: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.5.ffn_down_exps.weight |

|

|

98: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.5.ffn_gate_exps.weight |

|

|

99: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.5.ffn_up_exps.weight |

|

|

100: 7168 | 7168, 1, 1, 1 | F32 | blk.5.ffn_norm.weight |

|

|

101: 256 | 256, 1, 1, 1 | F32 | blk.6.exp_probs_b.bias |

|

|

102: 1835008 | 7168, 256, 1, 1 | F32 | blk.6.ffn_gate_inp.weight |

|

|

103: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.6.ffn_down_shexp.weight |

|

|

104: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.6.ffn_gate_shexp.weight |

|

|

105: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.6.ffn_up_shexp.weight |

|

|

106: 512 | 512, 1, 1, 1 | F32 | blk.6.attn_kv_a_norm.weight |

|

|

107: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.6.attn_kv_a_mqa.weight |

|

|

108: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.6.attn_kv_b.weight |

|

|

109: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.6.attn_k_b.weight |

|

|

110: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.6.attn_v_b.weight |

|

|

111: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.6.attn_output.weight |

|

|

112: 1536 | 1536, 1, 1, 1 | F32 | blk.6.attn_q_a_norm.weight |

|

|

113: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.6.attn_q_a.weight |

|

|

114: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.6.attn_q_b.weight |

|

|

115: 7168 | 7168, 1, 1, 1 | F32 | blk.6.attn_norm.weight |

|

|

116: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.6.ffn_down_exps.weight |

|

|

117: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.6.ffn_gate_exps.weight |

|

|

118: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.6.ffn_up_exps.weight |

|

|

119: 7168 | 7168, 1, 1, 1 | F32 | blk.6.ffn_norm.weight |

|

|

120: 256 | 256, 1, 1, 1 | F32 | blk.7.exp_probs_b.bias |

|

|

121: 1835008 | 7168, 256, 1, 1 | F32 | blk.7.ffn_gate_inp.weight |

|

|

122: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.7.ffn_down_shexp.weight |

|

|

123: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.7.ffn_gate_shexp.weight |

|

|

124: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.7.ffn_up_shexp.weight |

|

|

125: 512 | 512, 1, 1, 1 | F32 | blk.7.attn_kv_a_norm.weight |

|

|

126: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.7.attn_kv_a_mqa.weight |

|

|

127: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.7.attn_kv_b.weight |

|

|

128: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.7.attn_k_b.weight |

|

|

129: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.7.attn_v_b.weight |

|

|

130: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.7.attn_output.weight |

|

|

131: 1536 | 1536, 1, 1, 1 | F32 | blk.7.attn_q_a_norm.weight |

|

|

132: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.7.attn_q_a.weight |

|

|

133: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.7.attn_q_b.weight |

|

|

134: 7168 | 7168, 1, 1, 1 | F32 | blk.7.attn_norm.weight |

|

|

135: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.7.ffn_down_exps.weight |

|

|

136: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.7.ffn_gate_exps.weight |

|

|

137: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.7.ffn_up_exps.weight |

|

|

138: 7168 | 7168, 1, 1, 1 | F32 | blk.7.ffn_norm.weight |

|

|

139: 256 | 256, 1, 1, 1 | F32 | blk.8.exp_probs_b.bias |

|

|

140: 1835008 | 7168, 256, 1, 1 | F32 | blk.8.ffn_gate_inp.weight |

|

|

141: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.8.ffn_down_shexp.weight |

|

|

142: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.8.ffn_gate_shexp.weight |

|

|

143: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.8.ffn_up_shexp.weight |

|

|

144: 512 | 512, 1, 1, 1 | F32 | blk.8.attn_kv_a_norm.weight |

|

|

145: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.8.attn_kv_a_mqa.weight |

|

|

146: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.8.attn_kv_b.weight |

|

|

147: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.8.attn_k_b.weight |

|

|

148: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.8.attn_v_b.weight |

|

|

149: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.8.attn_output.weight |

|

|

150: 1536 | 1536, 1, 1, 1 | F32 | blk.8.attn_q_a_norm.weight |

|

|

151: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.8.attn_q_a.weight |

|

|

152: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.8.attn_q_b.weight |

|

|

153: 7168 | 7168, 1, 1, 1 | F32 | blk.8.attn_norm.weight |

|

|

154: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.8.ffn_down_exps.weight |

|

|

155: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.8.ffn_gate_exps.weight |

|

|

156: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.8.ffn_up_exps.weight |

|

|

157: 7168 | 7168, 1, 1, 1 | F32 | blk.8.ffn_norm.weight |

|

|

158: 256 | 256, 1, 1, 1 | F32 | blk.9.exp_probs_b.bias |

|

|

159: 1835008 | 7168, 256, 1, 1 | F32 | blk.9.ffn_gate_inp.weight |

|

|

160: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.9.ffn_down_shexp.weight |

|

|

161: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.9.ffn_gate_shexp.weight |

|

|

162: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.9.ffn_up_shexp.weight |

|

|

163: 512 | 512, 1, 1, 1 | F32 | blk.9.attn_kv_a_norm.weight |

|

|

164: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.9.attn_kv_a_mqa.weight |

|

|

165: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.9.attn_kv_b.weight |

|

|

166: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.9.attn_k_b.weight |

|

|

167: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.9.attn_v_b.weight |

|

|

168: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.9.attn_output.weight |

|

|

169: 1536 | 1536, 1, 1, 1 | F32 | blk.9.attn_q_a_norm.weight |

|

|

170: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.9.attn_q_a.weight |

|

|

171: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.9.attn_q_b.weight |

|

|

172: 256 | 256, 1, 1, 1 | F32 | blk.10.exp_probs_b.bias |

|

|

173: 1835008 | 7168, 256, 1, 1 | F32 | blk.10.ffn_gate_inp.weight |

|

|

174: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.10.ffn_down_shexp.weight |

|

|

175: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.10.ffn_gate_shexp.weight |

|

|

176: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.10.ffn_up_shexp.weight |

|

|

177: 512 | 512, 1, 1, 1 | F32 | blk.10.attn_kv_a_norm.weight |

|

|

178: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.10.attn_kv_a_mqa.weight |

|

|

179: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.10.attn_kv_b.weight |

|

|

180: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.10.attn_k_b.weight |

|

|

181: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.10.attn_v_b.weight |

|

|

182: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.10.attn_output.weight |

|

|

183: 1536 | 1536, 1, 1, 1 | F32 | blk.10.attn_q_a_norm.weight |

|

|

184: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.10.attn_q_a.weight |

|

|

185: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.10.attn_q_b.weight |

|

|

186: 7168 | 7168, 1, 1, 1 | F32 | blk.9.attn_norm.weight |

|

|

INFO:gguf-dump:* Loading: /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00002-of-00010.gguf |

|

|

* File is LITTLE endian, script is running on a LITTLE endian host. |

|

|

* Dumping 6 key/value pair(s) |

|

|

1: UINT32 | 1 | GGUF.version = 3 |

|

|

2: UINT64 | 1 | GGUF.tensor_count = 101 |

|

|

3: UINT64 | 1 | GGUF.kv_count = 3 |

|

|

4: UINT16 | 1 | split.no = 1 |

|

|

5: UINT16 | 1 | split.count = 10 |

|

|

6: INT32 | 1 | split.tensors.count = 1147 |

|

|

* Dumping 101 tensor(s) |

|

|

1: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.9.ffn_down_exps.weight |

|

|

2: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.9.ffn_gate_exps.weight |

|

|

3: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.9.ffn_up_exps.weight |

|

|

4: 7168 | 7168, 1, 1, 1 | F32 | blk.9.ffn_norm.weight |

|

|

5: 7168 | 7168, 1, 1, 1 | F32 | blk.10.attn_norm.weight |

|

|

6: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.10.ffn_down_exps.weight |

|

|

7: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.10.ffn_gate_exps.weight |

|

|

8: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.10.ffn_up_exps.weight |

|

|

9: 7168 | 7168, 1, 1, 1 | F32 | blk.10.ffn_norm.weight |

|

|

10: 256 | 256, 1, 1, 1 | F32 | blk.11.exp_probs_b.bias |

|

|

11: 1835008 | 7168, 256, 1, 1 | F32 | blk.11.ffn_gate_inp.weight |

|

|

12: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.11.ffn_down_shexp.weight |

|

|

13: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.11.ffn_gate_shexp.weight |

|

|

14: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.11.ffn_up_shexp.weight |

|

|

15: 512 | 512, 1, 1, 1 | F32 | blk.11.attn_kv_a_norm.weight |

|

|

16: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.11.attn_kv_a_mqa.weight |

|

|

17: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.11.attn_kv_b.weight |

|

|

18: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.11.attn_k_b.weight |

|

|

19: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.11.attn_v_b.weight |

|

|

20: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.11.attn_output.weight |

|

|

21: 1536 | 1536, 1, 1, 1 | F32 | blk.11.attn_q_a_norm.weight |

|

|

22: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.11.attn_q_a.weight |

|

|

23: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.11.attn_q_b.weight |

|

|

24: 7168 | 7168, 1, 1, 1 | F32 | blk.11.attn_norm.weight |

|

|

25: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.11.ffn_down_exps.weight |

|

|

26: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.11.ffn_gate_exps.weight |

|

|

27: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.11.ffn_up_exps.weight |

|

|

28: 7168 | 7168, 1, 1, 1 | F32 | blk.11.ffn_norm.weight |

|

|

29: 256 | 256, 1, 1, 1 | F32 | blk.12.exp_probs_b.bias |

|

|

30: 1835008 | 7168, 256, 1, 1 | F32 | blk.12.ffn_gate_inp.weight |

|

|

31: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.12.ffn_down_shexp.weight |

|

|

32: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.12.ffn_gate_shexp.weight |

|

|

33: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.12.ffn_up_shexp.weight |

|

|

34: 512 | 512, 1, 1, 1 | F32 | blk.12.attn_kv_a_norm.weight |

|

|

35: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.12.attn_kv_a_mqa.weight |

|

|

36: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.12.attn_kv_b.weight |

|

|

37: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.12.attn_k_b.weight |

|

|

38: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.12.attn_v_b.weight |

|

|

39: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.12.attn_output.weight |

|

|

40: 1536 | 1536, 1, 1, 1 | F32 | blk.12.attn_q_a_norm.weight |

|

|

41: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.12.attn_q_a.weight |

|

|

42: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.12.attn_q_b.weight |

|

|

43: 7168 | 7168, 1, 1, 1 | F32 | blk.12.attn_norm.weight |

|

|

44: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.12.ffn_down_exps.weight |

|

|

45: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.12.ffn_gate_exps.weight |

|

|

46: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.12.ffn_up_exps.weight |

|

|

47: 7168 | 7168, 1, 1, 1 | F32 | blk.12.ffn_norm.weight |

|

|

48: 256 | 256, 1, 1, 1 | F32 | blk.13.exp_probs_b.bias |

|

|

49: 1835008 | 7168, 256, 1, 1 | F32 | blk.13.ffn_gate_inp.weight |

|

|

50: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.13.ffn_down_shexp.weight |

|

|

51: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.13.ffn_gate_shexp.weight |

|

|

52: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.13.ffn_up_shexp.weight |

|

|

53: 512 | 512, 1, 1, 1 | F32 | blk.13.attn_kv_a_norm.weight |

|

|

54: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.13.attn_kv_a_mqa.weight |

|

|

55: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.13.attn_kv_b.weight |

|

|

56: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.13.attn_k_b.weight |

|

|

57: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.13.attn_v_b.weight |

|

|

58: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.13.attn_output.weight |

|

|

59: 1536 | 1536, 1, 1, 1 | F32 | blk.13.attn_q_a_norm.weight |

|

|

60: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.13.attn_q_a.weight |

|

|

61: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.13.attn_q_b.weight |

|

|

62: 7168 | 7168, 1, 1, 1 | F32 | blk.13.attn_norm.weight |

|

|

63: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.13.ffn_down_exps.weight |

|

|

64: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.13.ffn_gate_exps.weight |

|

|

65: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.13.ffn_up_exps.weight |

|

|

66: 7168 | 7168, 1, 1, 1 | F32 | blk.13.ffn_norm.weight |

|

|

67: 256 | 256, 1, 1, 1 | F32 | blk.14.exp_probs_b.bias |

|

|

68: 1835008 | 7168, 256, 1, 1 | F32 | blk.14.ffn_gate_inp.weight |

|

|

69: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.14.ffn_down_shexp.weight |

|

|

70: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.14.ffn_gate_shexp.weight |

|

|

71: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.14.ffn_up_shexp.weight |

|

|

72: 512 | 512, 1, 1, 1 | F32 | blk.14.attn_kv_a_norm.weight |

|

|

73: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.14.attn_kv_a_mqa.weight |

|

|

74: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.14.attn_kv_b.weight |

|

|

75: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.14.attn_k_b.weight |

|

|

76: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.14.attn_v_b.weight |

|

|

77: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.14.attn_output.weight |

|

|

78: 1536 | 1536, 1, 1, 1 | F32 | blk.14.attn_q_a_norm.weight |

|

|

79: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.14.attn_q_a.weight |

|

|

80: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.14.attn_q_b.weight |

|

|

81: 7168 | 7168, 1, 1, 1 | F32 | blk.14.attn_norm.weight |

|

|

82: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.14.ffn_down_exps.weight |

|

|

83: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.14.ffn_gate_exps.weight |

|

|

84: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.14.ffn_up_exps.weight |

|

|

85: 7168 | 7168, 1, 1, 1 | F32 | blk.14.ffn_norm.weight |

|

|

86: 256 | 256, 1, 1, 1 | F32 | blk.15.exp_probs_b.bias |

|

|

87: 1835008 | 7168, 256, 1, 1 | F32 | blk.15.ffn_gate_inp.weight |

|

|

88: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.15.ffn_down_shexp.weight |

|

|

89: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.15.ffn_gate_shexp.weight |

|

|

90: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.15.ffn_up_shexp.weight |

|

|

91: 512 | 512, 1, 1, 1 | F32 | blk.15.attn_kv_a_norm.weight |

|

|

92: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.15.attn_kv_a_mqa.weight |

|

|

93: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.15.attn_kv_b.weight |

|

|

94: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.15.attn_k_b.weight |

|

|

95: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.15.attn_v_b.weight |

|

|

96: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.15.attn_output.weight |

|

|

97: 1536 | 1536, 1, 1, 1 | F32 | blk.15.attn_q_a_norm.weight |

|

|

98: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.15.attn_q_a.weight |

|

|

99: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.15.attn_q_b.weight |

|

|

100: 7168 | 7168, 1, 1, 1 | F32 | blk.15.attn_norm.weight |

|

|

101: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.15.ffn_down_exps.weight |

|

|

INFO:gguf-dump:* Loading: /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00003-of-00010.gguf |

|

|

* File is LITTLE endian, script is running on a LITTLE endian host. |

|

|

* Dumping 6 key/value pair(s) |

|

|

1: UINT32 | 1 | GGUF.version = 3 |

|

|

2: UINT64 | 1 | GGUF.tensor_count = 132 |

|

|

3: UINT64 | 1 | GGUF.kv_count = 3 |

|

|

4: UINT16 | 1 | split.no = 2 |

|

|

5: UINT16 | 1 | split.count = 10 |

|

|

6: INT32 | 1 | split.tensors.count = 1147 |

|

|

* Dumping 132 tensor(s) |

|

|

1: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.15.ffn_gate_exps.weight |

|

|

2: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.15.ffn_up_exps.weight |

|

|

3: 7168 | 7168, 1, 1, 1 | F32 | blk.15.ffn_norm.weight |

|

|

4: 256 | 256, 1, 1, 1 | F32 | blk.16.exp_probs_b.bias |

|

|

5: 1835008 | 7168, 256, 1, 1 | F32 | blk.16.ffn_gate_inp.weight |

|

|

6: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.16.ffn_down_shexp.weight |

|

|

7: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.16.ffn_gate_shexp.weight |

|

|

8: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.16.ffn_up_shexp.weight |

|

|

9: 512 | 512, 1, 1, 1 | F32 | blk.16.attn_kv_a_norm.weight |

|

|

10: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.16.attn_kv_a_mqa.weight |

|

|

11: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.16.attn_kv_b.weight |

|

|

12: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.16.attn_k_b.weight |

|

|

13: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.16.attn_v_b.weight |

|

|

14: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.16.attn_output.weight |

|

|

15: 1536 | 1536, 1, 1, 1 | F32 | blk.16.attn_q_a_norm.weight |

|

|

16: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.16.attn_q_a.weight |

|

|

17: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.16.attn_q_b.weight |

|

|

18: 7168 | 7168, 1, 1, 1 | F32 | blk.16.attn_norm.weight |

|

|

19: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.16.ffn_down_exps.weight |

|

|

20: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.16.ffn_gate_exps.weight |

|

|

21: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.16.ffn_up_exps.weight |

|

|

22: 7168 | 7168, 1, 1, 1 | F32 | blk.16.ffn_norm.weight |

|

|

23: 256 | 256, 1, 1, 1 | F32 | blk.17.exp_probs_b.bias |

|

|

24: 1835008 | 7168, 256, 1, 1 | F32 | blk.17.ffn_gate_inp.weight |

|

|

25: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.17.ffn_down_shexp.weight |

|

|

26: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.17.ffn_gate_shexp.weight |

|

|

27: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.17.ffn_up_shexp.weight |

|

|

28: 512 | 512, 1, 1, 1 | F32 | blk.17.attn_kv_a_norm.weight |

|

|

29: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.17.attn_kv_a_mqa.weight |

|

|

30: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.17.attn_kv_b.weight |

|

|

31: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.17.attn_k_b.weight |

|

|

32: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.17.attn_v_b.weight |

|

|

33: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.17.attn_output.weight |

|

|

34: 1536 | 1536, 1, 1, 1 | F32 | blk.17.attn_q_a_norm.weight |

|

|

35: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.17.attn_q_a.weight |

|

|

36: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.17.attn_q_b.weight |

|

|

37: 7168 | 7168, 1, 1, 1 | F32 | blk.17.attn_norm.weight |

|

|

38: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.17.ffn_down_exps.weight |

|

|

39: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.17.ffn_gate_exps.weight |

|

|

40: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.17.ffn_up_exps.weight |

|

|

41: 7168 | 7168, 1, 1, 1 | F32 | blk.17.ffn_norm.weight |

|

|

42: 256 | 256, 1, 1, 1 | F32 | blk.18.exp_probs_b.bias |

|

|

43: 1835008 | 7168, 256, 1, 1 | F32 | blk.18.ffn_gate_inp.weight |

|

|

44: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.18.ffn_down_shexp.weight |

|

|

45: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.18.ffn_gate_shexp.weight |

|

|

46: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.18.ffn_up_shexp.weight |

|

|

47: 512 | 512, 1, 1, 1 | F32 | blk.18.attn_kv_a_norm.weight |

|

|

48: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.18.attn_kv_a_mqa.weight |

|

|

49: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.18.attn_kv_b.weight |

|

|

50: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.18.attn_k_b.weight |

|

|

51: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.18.attn_v_b.weight |

|

|

52: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.18.attn_output.weight |

|

|

53: 1536 | 1536, 1, 1, 1 | F32 | blk.18.attn_q_a_norm.weight |

|

|

54: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.18.attn_q_a.weight |

|

|

55: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.18.attn_q_b.weight |

|

|

56: 7168 | 7168, 1, 1, 1 | F32 | blk.18.attn_norm.weight |

|

|

57: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.18.ffn_down_exps.weight |

|

|

58: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.18.ffn_gate_exps.weight |

|

|

59: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.18.ffn_up_exps.weight |

|

|

60: 7168 | 7168, 1, 1, 1 | F32 | blk.18.ffn_norm.weight |

|

|

61: 256 | 256, 1, 1, 1 | F32 | blk.19.exp_probs_b.bias |

|

|

62: 1835008 | 7168, 256, 1, 1 | F32 | blk.19.ffn_gate_inp.weight |

|

|

63: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.19.ffn_down_shexp.weight |

|

|

64: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.19.ffn_gate_shexp.weight |

|

|

65: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.19.ffn_up_shexp.weight |

|

|

66: 512 | 512, 1, 1, 1 | F32 | blk.19.attn_kv_a_norm.weight |

|

|

67: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.19.attn_kv_a_mqa.weight |

|

|

68: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.19.attn_kv_b.weight |

|

|

69: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.19.attn_k_b.weight |

|

|

70: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.19.attn_v_b.weight |

|

|

71: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.19.attn_output.weight |

|

|

72: 1536 | 1536, 1, 1, 1 | F32 | blk.19.attn_q_a_norm.weight |

|

|

73: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.19.attn_q_a.weight |

|

|

74: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.19.attn_q_b.weight |

|

|

75: 7168 | 7168, 1, 1, 1 | F32 | blk.19.attn_norm.weight |

|

|

76: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.19.ffn_down_exps.weight |

|

|

77: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.19.ffn_gate_exps.weight |

|

|

78: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.19.ffn_up_exps.weight |

|

|

79: 7168 | 7168, 1, 1, 1 | F32 | blk.19.ffn_norm.weight |

|

|

80: 256 | 256, 1, 1, 1 | F32 | blk.20.exp_probs_b.bias |

|

|

81: 1835008 | 7168, 256, 1, 1 | F32 | blk.20.ffn_gate_inp.weight |

|

|

82: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.20.ffn_down_shexp.weight |

|

|

83: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.20.ffn_gate_shexp.weight |

|

|

84: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.20.ffn_up_shexp.weight |

|

|

85: 512 | 512, 1, 1, 1 | F32 | blk.20.attn_kv_a_norm.weight |

|

|

86: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.20.attn_kv_a_mqa.weight |

|

|

87: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.20.attn_kv_b.weight |

|

|

88: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.20.attn_k_b.weight |

|

|

89: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.20.attn_v_b.weight |

|

|

90: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.20.attn_output.weight |

|

|

91: 1536 | 1536, 1, 1, 1 | F32 | blk.20.attn_q_a_norm.weight |

|

|

92: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.20.attn_q_a.weight |

|

|

93: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.20.attn_q_b.weight |

|

|

94: 7168 | 7168, 1, 1, 1 | F32 | blk.20.attn_norm.weight |

|

|

95: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.20.ffn_down_exps.weight |

|

|

96: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.20.ffn_gate_exps.weight |

|

|

97: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.20.ffn_up_exps.weight |

|

|

98: 7168 | 7168, 1, 1, 1 | F32 | blk.20.ffn_norm.weight |

|

|

99: 256 | 256, 1, 1, 1 | F32 | blk.21.exp_probs_b.bias |

|

|

100: 1835008 | 7168, 256, 1, 1 | F32 | blk.21.ffn_gate_inp.weight |

|

|

101: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.21.ffn_down_shexp.weight |

|

|

102: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.21.ffn_gate_shexp.weight |

|

|

103: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.21.ffn_up_shexp.weight |

|

|

104: 512 | 512, 1, 1, 1 | F32 | blk.21.attn_kv_a_norm.weight |

|

|

105: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.21.attn_kv_a_mqa.weight |

|

|

106: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.21.attn_kv_b.weight |

|

|

107: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.21.attn_k_b.weight |

|

|

108: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.21.attn_v_b.weight |

|

|

109: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.21.attn_output.weight |

|

|

110: 1536 | 1536, 1, 1, 1 | F32 | blk.21.attn_q_a_norm.weight |

|

|

111: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.21.attn_q_a.weight |

|

|

112: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.21.attn_q_b.weight |

|

|

113: 7168 | 7168, 1, 1, 1 | F32 | blk.21.attn_norm.weight |

|

|

114: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.21.ffn_down_exps.weight |

|

|

115: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.21.ffn_gate_exps.weight |

|

|

116: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.21.ffn_up_exps.weight |

|

|

117: 7168 | 7168, 1, 1, 1 | F32 | blk.21.ffn_norm.weight |

|

|

118: 256 | 256, 1, 1, 1 | F32 | blk.22.exp_probs_b.bias |

|

|

119: 1835008 | 7168, 256, 1, 1 | F32 | blk.22.ffn_gate_inp.weight |

|

|

120: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.22.ffn_down_shexp.weight |

|

|

121: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.22.ffn_gate_shexp.weight |

|

|

122: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.22.ffn_up_shexp.weight |

|

|

123: 512 | 512, 1, 1, 1 | F32 | blk.22.attn_kv_a_norm.weight |

|

|

124: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.22.attn_kv_a_mqa.weight |

|

|

125: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.22.attn_kv_b.weight |

|

|

126: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.22.attn_k_b.weight |

|

|

127: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.22.attn_v_b.weight |

|

|

128: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.22.attn_output.weight |

|

|

129: 1536 | 1536, 1, 1, 1 | F32 | blk.22.attn_q_a_norm.weight |

|

|

130: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.22.attn_q_a.weight |

|

|

131: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.22.attn_q_b.weight |

|

|

132: 7168 | 7168, 1, 1, 1 | F32 | blk.22.attn_norm.weight |

|

|

INFO:gguf-dump:* Loading: /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00004-of-00010.gguf |

|

|

* File is LITTLE endian, script is running on a LITTLE endian host. |

|

|

* Dumping 6 key/value pair(s) |

|

|

1: UINT32 | 1 | GGUF.version = 3 |

|

|

2: UINT64 | 1 | GGUF.tensor_count = 115 |

|

|

3: UINT64 | 1 | GGUF.kv_count = 3 |

|

|

4: UINT16 | 1 | split.no = 3 |

|

|

5: UINT16 | 1 | split.count = 10 |

|

|

6: INT32 | 1 | split.tensors.count = 1147 |

|

|

* Dumping 115 tensor(s) |

|

|

1: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.22.ffn_down_exps.weight |

|

|

2: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.22.ffn_gate_exps.weight |

|

|

3: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.22.ffn_up_exps.weight |

|

|

4: 7168 | 7168, 1, 1, 1 | F32 | blk.22.ffn_norm.weight |

|

|

5: 256 | 256, 1, 1, 1 | F32 | blk.23.exp_probs_b.bias |

|

|

6: 1835008 | 7168, 256, 1, 1 | F32 | blk.23.ffn_gate_inp.weight |

|

|

7: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.23.ffn_down_shexp.weight |

|

|

8: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.23.ffn_gate_shexp.weight |

|

|

9: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.23.ffn_up_shexp.weight |

|

|

10: 512 | 512, 1, 1, 1 | F32 | blk.23.attn_kv_a_norm.weight |

|

|

11: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.23.attn_kv_a_mqa.weight |

|

|

12: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.23.attn_kv_b.weight |

|

|

13: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.23.attn_k_b.weight |

|

|

14: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.23.attn_v_b.weight |

|

|

15: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.23.attn_output.weight |

|

|

16: 1536 | 1536, 1, 1, 1 | F32 | blk.23.attn_q_a_norm.weight |

|

|

17: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.23.attn_q_a.weight |

|

|

18: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.23.attn_q_b.weight |

|

|

19: 7168 | 7168, 1, 1, 1 | F32 | blk.23.attn_norm.weight |

|

|

20: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.23.ffn_down_exps.weight |

|

|

21: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.23.ffn_gate_exps.weight |

|

|

22: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.23.ffn_up_exps.weight |

|

|

23: 7168 | 7168, 1, 1, 1 | F32 | blk.23.ffn_norm.weight |

|

|

24: 256 | 256, 1, 1, 1 | F32 | blk.24.exp_probs_b.bias |

|

|

25: 1835008 | 7168, 256, 1, 1 | F32 | blk.24.ffn_gate_inp.weight |

|

|

26: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.24.ffn_down_shexp.weight |

|

|

27: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.24.ffn_gate_shexp.weight |

|

|

28: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.24.ffn_up_shexp.weight |

|

|

29: 512 | 512, 1, 1, 1 | F32 | blk.24.attn_kv_a_norm.weight |

|

|

30: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.24.attn_kv_a_mqa.weight |

|

|

31: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.24.attn_kv_b.weight |

|

|

32: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.24.attn_k_b.weight |

|

|

33: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.24.attn_v_b.weight |

|

|

34: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.24.attn_output.weight |

|

|

35: 1536 | 1536, 1, 1, 1 | F32 | blk.24.attn_q_a_norm.weight |

|

|

36: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.24.attn_q_a.weight |

|

|

37: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.24.attn_q_b.weight |

|

|

38: 7168 | 7168, 1, 1, 1 | F32 | blk.24.attn_norm.weight |

|

|

39: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.24.ffn_down_exps.weight |

|

|

40: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.24.ffn_gate_exps.weight |

|

|

41: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.24.ffn_up_exps.weight |

|

|

42: 7168 | 7168, 1, 1, 1 | F32 | blk.24.ffn_norm.weight |

|

|

43: 256 | 256, 1, 1, 1 | F32 | blk.25.exp_probs_b.bias |

|

|

44: 1835008 | 7168, 256, 1, 1 | F32 | blk.25.ffn_gate_inp.weight |

|

|

45: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.25.ffn_down_shexp.weight |

|

|

46: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.25.ffn_gate_shexp.weight |

|

|

47: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.25.ffn_up_shexp.weight |

|

|

48: 512 | 512, 1, 1, 1 | F32 | blk.25.attn_kv_a_norm.weight |

|

|

49: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.25.attn_kv_a_mqa.weight |

|

|

50: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.25.attn_kv_b.weight |

|

|

51: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.25.attn_k_b.weight |

|

|

52: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.25.attn_v_b.weight |

|

|

53: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.25.attn_output.weight |

|

|

54: 1536 | 1536, 1, 1, 1 | F32 | blk.25.attn_q_a_norm.weight |

|

|

55: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.25.attn_q_a.weight |

|

|

56: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.25.attn_q_b.weight |

|

|

57: 7168 | 7168, 1, 1, 1 | F32 | blk.25.attn_norm.weight |

|

|

58: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.25.ffn_down_exps.weight |

|

|

59: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.25.ffn_gate_exps.weight |

|

|

60: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.25.ffn_up_exps.weight |

|

|

61: 7168 | 7168, 1, 1, 1 | F32 | blk.25.ffn_norm.weight |

|

|

62: 256 | 256, 1, 1, 1 | F32 | blk.26.exp_probs_b.bias |

|

|

63: 1835008 | 7168, 256, 1, 1 | F32 | blk.26.ffn_gate_inp.weight |

|

|

64: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.26.ffn_down_shexp.weight |

|

|

65: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.26.ffn_gate_shexp.weight |

|

|

66: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.26.ffn_up_shexp.weight |

|

|

67: 512 | 512, 1, 1, 1 | F32 | blk.26.attn_kv_a_norm.weight |

|

|

68: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.26.attn_kv_a_mqa.weight |

|

|

69: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.26.attn_kv_b.weight |

|

|

70: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.26.attn_k_b.weight |

|

|

71: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.26.attn_v_b.weight |

|

|

72: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.26.attn_output.weight |

|

|

73: 1536 | 1536, 1, 1, 1 | F32 | blk.26.attn_q_a_norm.weight |

|

|

74: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.26.attn_q_a.weight |

|

|

75: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.26.attn_q_b.weight |

|

|

76: 7168 | 7168, 1, 1, 1 | F32 | blk.26.attn_norm.weight |

|

|

77: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.26.ffn_down_exps.weight |

|

|

78: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.26.ffn_gate_exps.weight |

|

|

79: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.26.ffn_up_exps.weight |

|

|

80: 7168 | 7168, 1, 1, 1 | F32 | blk.26.ffn_norm.weight |

|

|

81: 256 | 256, 1, 1, 1 | F32 | blk.27.exp_probs_b.bias |

|

|

82: 1835008 | 7168, 256, 1, 1 | F32 | blk.27.ffn_gate_inp.weight |

|

|

83: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.27.ffn_down_shexp.weight |

|

|

84: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.27.ffn_gate_shexp.weight |

|

|

85: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.27.ffn_up_shexp.weight |

|

|

86: 512 | 512, 1, 1, 1 | F32 | blk.27.attn_kv_a_norm.weight |

|

|

87: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.27.attn_kv_a_mqa.weight |

|

|

88: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.27.attn_kv_b.weight |

|

|

89: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.27.attn_k_b.weight |

|

|

90: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.27.attn_v_b.weight |

|

|

91: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.27.attn_output.weight |

|

|

92: 1536 | 1536, 1, 1, 1 | F32 | blk.27.attn_q_a_norm.weight |

|

|

93: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.27.attn_q_a.weight |

|

|

94: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.27.attn_q_b.weight |

|

|

95: 7168 | 7168, 1, 1, 1 | F32 | blk.27.attn_norm.weight |

|

|

96: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.27.ffn_down_exps.weight |

|

|

97: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.27.ffn_gate_exps.weight |

|

|

98: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.27.ffn_up_exps.weight |

|

|

99: 7168 | 7168, 1, 1, 1 | F32 | blk.27.ffn_norm.weight |

|

|

100: 256 | 256, 1, 1, 1 | F32 | blk.28.exp_probs_b.bias |

|

|

101: 1835008 | 7168, 256, 1, 1 | F32 | blk.28.ffn_gate_inp.weight |

|

|

102: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.28.ffn_down_shexp.weight |

|

|

103: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.28.ffn_gate_shexp.weight |

|

|

104: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.28.ffn_up_shexp.weight |

|

|

105: 512 | 512, 1, 1, 1 | F32 | blk.28.attn_kv_a_norm.weight |

|

|

106: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.28.attn_kv_a_mqa.weight |

|

|

107: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.28.attn_kv_b.weight |

|

|

108: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.28.attn_k_b.weight |

|

|

109: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.28.attn_v_b.weight |

|

|

110: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.28.attn_output.weight |

|

|

111: 1536 | 1536, 1, 1, 1 | F32 | blk.28.attn_q_a_norm.weight |

|

|

112: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.28.attn_q_a.weight |

|

|

113: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.28.attn_q_b.weight |

|

|

114: 7168 | 7168, 1, 1, 1 | F32 | blk.28.attn_norm.weight |

|

|

115: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.28.ffn_down_exps.weight |

|

|

INFO:gguf-dump:* Loading: /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00005-of-00010.gguf |

|

|

* File is LITTLE endian, script is running on a LITTLE endian host. |

|

|

* Dumping 6 key/value pair(s) |

|

|

1: UINT32 | 1 | GGUF.version = 3 |

|

|

2: UINT64 | 1 | GGUF.tensor_count = 132 |

|

|

3: UINT64 | 1 | GGUF.kv_count = 3 |

|

|

4: UINT16 | 1 | split.no = 4 |

|

|

5: UINT16 | 1 | split.count = 10 |

|

|

6: INT32 | 1 | split.tensors.count = 1147 |

|

|

* Dumping 132 tensor(s) |

|

|

1: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.28.ffn_gate_exps.weight |

|

|

2: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.28.ffn_up_exps.weight |

|

|

3: 7168 | 7168, 1, 1, 1 | F32 | blk.28.ffn_norm.weight |

|

|

4: 256 | 256, 1, 1, 1 | F32 | blk.29.exp_probs_b.bias |

|

|

5: 1835008 | 7168, 256, 1, 1 | F32 | blk.29.ffn_gate_inp.weight |

|

|

6: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.29.ffn_down_shexp.weight |

|

|

7: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.29.ffn_gate_shexp.weight |

|

|

8: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.29.ffn_up_shexp.weight |

|

|

9: 512 | 512, 1, 1, 1 | F32 | blk.29.attn_kv_a_norm.weight |

|

|

10: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.29.attn_kv_a_mqa.weight |

|

|

11: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.29.attn_kv_b.weight |

|

|

12: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.29.attn_k_b.weight |

|

|

13: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.29.attn_v_b.weight |

|

|

14: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.29.attn_output.weight |

|

|

15: 1536 | 1536, 1, 1, 1 | F32 | blk.29.attn_q_a_norm.weight |

|

|

16: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.29.attn_q_a.weight |

|

|

17: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.29.attn_q_b.weight |

|

|

18: 7168 | 7168, 1, 1, 1 | F32 | blk.29.attn_norm.weight |

|

|

19: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.29.ffn_down_exps.weight |

|

|

20: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.29.ffn_gate_exps.weight |

|

|

21: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.29.ffn_up_exps.weight |

|

|

22: 7168 | 7168, 1, 1, 1 | F32 | blk.29.ffn_norm.weight |

|

|

23: 256 | 256, 1, 1, 1 | F32 | blk.30.exp_probs_b.bias |

|

|

24: 1835008 | 7168, 256, 1, 1 | F32 | blk.30.ffn_gate_inp.weight |

|

|

25: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.30.ffn_down_shexp.weight |

|

|

26: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.30.ffn_gate_shexp.weight |

|

|

27: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.30.ffn_up_shexp.weight |

|

|

28: 512 | 512, 1, 1, 1 | F32 | blk.30.attn_kv_a_norm.weight |

|

|

29: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.30.attn_kv_a_mqa.weight |

|

|

30: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.30.attn_kv_b.weight |

|

|

31: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.30.attn_k_b.weight |

|

|

32: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.30.attn_v_b.weight |

|

|

33: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.30.attn_output.weight |

|

|

34: 1536 | 1536, 1, 1, 1 | F32 | blk.30.attn_q_a_norm.weight |

|

|

35: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.30.attn_q_a.weight |

|

|

36: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.30.attn_q_b.weight |

|

|

37: 7168 | 7168, 1, 1, 1 | F32 | blk.30.attn_norm.weight |

|

|

38: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.30.ffn_down_exps.weight |

|

|

39: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.30.ffn_gate_exps.weight |

|

|

40: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.30.ffn_up_exps.weight |

|

|

41: 7168 | 7168, 1, 1, 1 | F32 | blk.30.ffn_norm.weight |

|

|

42: 256 | 256, 1, 1, 1 | F32 | blk.31.exp_probs_b.bias |

|

|

43: 1835008 | 7168, 256, 1, 1 | F32 | blk.31.ffn_gate_inp.weight |

|

|

44: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.31.ffn_down_shexp.weight |

|

|

45: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.31.ffn_gate_shexp.weight |

|

|

46: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.31.ffn_up_shexp.weight |

|

|

47: 512 | 512, 1, 1, 1 | F32 | blk.31.attn_kv_a_norm.weight |

|

|

48: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.31.attn_kv_a_mqa.weight |

|

|

49: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.31.attn_kv_b.weight |

|

|

50: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.31.attn_k_b.weight |

|

|

51: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.31.attn_v_b.weight |

|

|

52: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.31.attn_output.weight |

|

|

53: 1536 | 1536, 1, 1, 1 | F32 | blk.31.attn_q_a_norm.weight |

|

|

54: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.31.attn_q_a.weight |

|

|

55: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.31.attn_q_b.weight |

|

|

56: 7168 | 7168, 1, 1, 1 | F32 | blk.31.attn_norm.weight |

|

|

57: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.31.ffn_down_exps.weight |

|

|

58: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.31.ffn_gate_exps.weight |

|

|

59: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.31.ffn_up_exps.weight |

|

|

60: 7168 | 7168, 1, 1, 1 | F32 | blk.31.ffn_norm.weight |

|

|

61: 256 | 256, 1, 1, 1 | F32 | blk.32.exp_probs_b.bias |

|

|

62: 1835008 | 7168, 256, 1, 1 | F32 | blk.32.ffn_gate_inp.weight |

|

|

63: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.32.ffn_down_shexp.weight |

|

|

64: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.32.ffn_gate_shexp.weight |

|

|

65: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.32.ffn_up_shexp.weight |

|

|

66: 512 | 512, 1, 1, 1 | F32 | blk.32.attn_kv_a_norm.weight |

|

|

67: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.32.attn_kv_a_mqa.weight |

|

|

68: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.32.attn_kv_b.weight |

|

|

69: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.32.attn_k_b.weight |

|

|

70: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.32.attn_v_b.weight |

|

|

71: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.32.attn_output.weight |

|

|

72: 1536 | 1536, 1, 1, 1 | F32 | blk.32.attn_q_a_norm.weight |

|

|

73: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.32.attn_q_a.weight |

|

|

74: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.32.attn_q_b.weight |

|

|

75: 7168 | 7168, 1, 1, 1 | F32 | blk.32.attn_norm.weight |

|

|

76: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.32.ffn_down_exps.weight |

|

|

77: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.32.ffn_gate_exps.weight |

|

|

78: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.32.ffn_up_exps.weight |

|

|

79: 7168 | 7168, 1, 1, 1 | F32 | blk.32.ffn_norm.weight |

|

|

80: 256 | 256, 1, 1, 1 | F32 | blk.33.exp_probs_b.bias |

|

|

81: 1835008 | 7168, 256, 1, 1 | F32 | blk.33.ffn_gate_inp.weight |

|

|

82: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.33.ffn_down_shexp.weight |

|

|

83: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.33.ffn_gate_shexp.weight |

|

|

84: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.33.ffn_up_shexp.weight |

|

|

85: 512 | 512, 1, 1, 1 | F32 | blk.33.attn_kv_a_norm.weight |

|

|

86: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.33.attn_kv_a_mqa.weight |

|

|

87: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.33.attn_kv_b.weight |

|

|

88: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.33.attn_k_b.weight |

|

|

89: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.33.attn_v_b.weight |

|

|

90: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.33.attn_output.weight |

|

|

91: 1536 | 1536, 1, 1, 1 | F32 | blk.33.attn_q_a_norm.weight |

|

|

92: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.33.attn_q_a.weight |

|

|

93: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.33.attn_q_b.weight |

|

|

94: 7168 | 7168, 1, 1, 1 | F32 | blk.33.attn_norm.weight |

|

|

95: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.33.ffn_down_exps.weight |

|

|

96: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.33.ffn_gate_exps.weight |

|

|

97: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.33.ffn_up_exps.weight |

|

|

98: 7168 | 7168, 1, 1, 1 | F32 | blk.33.ffn_norm.weight |

|

|

99: 256 | 256, 1, 1, 1 | F32 | blk.34.exp_probs_b.bias |

|

|

100: 1835008 | 7168, 256, 1, 1 | F32 | blk.34.ffn_gate_inp.weight |

|

|

101: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.34.ffn_down_shexp.weight |

|

|

102: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.34.ffn_gate_shexp.weight |

|

|

103: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.34.ffn_up_shexp.weight |

|

|

104: 512 | 512, 1, 1, 1 | F32 | blk.34.attn_kv_a_norm.weight |

|

|

105: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.34.attn_kv_a_mqa.weight |

|

|

106: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.34.attn_kv_b.weight |

|

|

107: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.34.attn_k_b.weight |

|

|

108: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.34.attn_v_b.weight |

|

|

109: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.34.attn_output.weight |

|

|

110: 1536 | 1536, 1, 1, 1 | F32 | blk.34.attn_q_a_norm.weight |

|

|

111: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.34.attn_q_a.weight |

|

|

112: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.34.attn_q_b.weight |

|

|

113: 7168 | 7168, 1, 1, 1 | F32 | blk.34.attn_norm.weight |

|

|

114: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.34.ffn_down_exps.weight |

|

|

115: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.34.ffn_gate_exps.weight |

|

|

116: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.34.ffn_up_exps.weight |

|

|

117: 7168 | 7168, 1, 1, 1 | F32 | blk.34.ffn_norm.weight |

|

|

118: 256 | 256, 1, 1, 1 | F32 | blk.35.exp_probs_b.bias |

|

|

119: 1835008 | 7168, 256, 1, 1 | F32 | blk.35.ffn_gate_inp.weight |

|

|

120: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.35.ffn_down_shexp.weight |

|

|

121: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.35.ffn_gate_shexp.weight |

|

|

122: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.35.ffn_up_shexp.weight |

|

|

123: 512 | 512, 1, 1, 1 | F32 | blk.35.attn_kv_a_norm.weight |

|

|

124: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.35.attn_kv_a_mqa.weight |

|

|

125: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.35.attn_kv_b.weight |

|

|

126: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.35.attn_k_b.weight |

|

|

127: 8388608 | 512, 16384, 1, 1 | Q8_0 | blk.35.attn_v_b.weight |

|

|

128: 117440512 | 16384, 7168, 1, 1 | Q8_0 | blk.35.attn_output.weight |

|

|

129: 1536 | 1536, 1, 1, 1 | F32 | blk.35.attn_q_a_norm.weight |

|

|

130: 11010048 | 7168, 1536, 1, 1 | Q8_0 | blk.35.attn_q_a.weight |

|

|

131: 37748736 | 1536, 24576, 1, 1 | Q8_0 | blk.35.attn_q_b.weight |

|

|

132: 7168 | 7168, 1, 1, 1 | F32 | blk.35.attn_norm.weight |

|

|

INFO:gguf-dump:* Loading: /mnt/data/Models/anikifoss/DeepSeek-R1-0528-DQ4_K_R4/DeepSeek-R1-0528-DQ4_K_R4-00006-of-00010.gguf |

|

|

* File is LITTLE endian, script is running on a LITTLE endian host. |

|

|

* Dumping 6 key/value pair(s) |

|

|

1: UINT32 | 1 | GGUF.version = 3 |

|

|

2: UINT64 | 1 | GGUF.tensor_count = 115 |

|

|

3: UINT64 | 1 | GGUF.kv_count = 3 |

|

|

4: UINT16 | 1 | split.no = 5 |

|

|

5: UINT16 | 1 | split.count = 10 |

|

|

6: INT32 | 1 | split.tensors.count = 1147 |

|

|

* Dumping 115 tensor(s) |

|

|

1: 3758096384 | 2048, 7168, 256, 1 | Q6_K_R4 | blk.35.ffn_down_exps.weight |

|

|

2: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.35.ffn_gate_exps.weight |

|

|

3: 3758096384 | 7168, 2048, 256, 1 | Q4_K_R4 | blk.35.ffn_up_exps.weight |

|

|

4: 7168 | 7168, 1, 1, 1 | F32 | blk.35.ffn_norm.weight |

|

|

5: 256 | 256, 1, 1, 1 | F32 | blk.36.exp_probs_b.bias |

|

|

6: 1835008 | 7168, 256, 1, 1 | F32 | blk.36.ffn_gate_inp.weight |

|

|

7: 14680064 | 2048, 7168, 1, 1 | Q8_0 | blk.36.ffn_down_shexp.weight |

|

|

8: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.36.ffn_gate_shexp.weight |

|

|

9: 14680064 | 7168, 2048, 1, 1 | Q8_0 | blk.36.ffn_up_shexp.weight |

|

|

10: 512 | 512, 1, 1, 1 | F32 | blk.36.attn_kv_a_norm.weight |

|

|

11: 4128768 | 7168, 576, 1, 1 | Q8_0 | blk.36.attn_kv_a_mqa.weight |

|

|

12: 16777216 | 512, 32768, 1, 1 | Q8_0 | blk.36.attn_kv_b.weight |

|

|

13: 8388608 | 128, 65536, 1, 1 | Q8_0 | blk.36.attn_k_b.weight |

|

|