1 · Project Summary

This project started as a course assignment whose goal was to build a complete small-scale language-model pipeline from scratch:

- Train a byte-level BPE tokenizer.

- Implement a Transformer-based causal LM.

- Train the LM on a corpus of Russian anecdotes.

All tasks are now complete; the repository history contains several model generations and ablation experiments.
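To make the first task concrete, here is a minimal sketch of byte-level BPE training. It is not the project's tokenizer: the function names (`train_bpe`, `merge`, `decode`) are hypothetical, and it assumes no regex pre-tokenization and no special tokens, which the real implementation may well have.

```python
from collections import Counter


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges over the UTF-8 bytes of `text`."""
    ids = list(text.encode("utf-8"))  # base vocabulary: byte values 0..255
    merges = {}                       # (left_id, right_id) -> new token id
    for _ in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break
        best, count = pairs.most_common(1)[0]
        if count < 2:
            break                     # no pair repeats, so merging gains nothing
        new_id = 256 + len(merges)
        merges[best] = new_id
        ids = merge(ids, best, new_id)
    return merges, ids


def decode(ids, merges):
    """Map token ids back to text by expanding merges in the order they were learned."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (left, right), new_id in merges.items():
        vocab[new_id] = vocab[left] + vocab[right]
    return b"".join(vocab[i] for i in ids).decode("utf-8")
```

Because the base vocabulary is the 256 byte values, any Cyrillic (or other) text can be encoded without an unknown-token fallback; the merges only shorten the sequence.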

2 · Model Variants

| preset | layers | heads | KV heads | hidden | FFN | max seq | extras |
|---|---|---|---|---|---|---|---|
| nano | 3 | 4 | 2 | 96 | 256 | 128 | – |
| mini | 6 | 6 | 3 | 384 | 1024 | 128 | – |
| small | 12 | 12 | 6 | 768 | 2048 | 128 | – |

Baseline architecture – ALiBi positional bias · Grouped-Query Attention · SwiGLU feed-forward.
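Two of the baseline components can be illustrated in a few lines. The sketch below is an assumption about how they are usually implemented, not the project's code: `alibi_slopes` follows the geometric sequence from the ALiBi paper and only covers power-of-two head counts (so it matches the nano preset with 4 heads; the 6- and 12-head presets would need the paper's extension for other head counts, omitted here), and `kv_head_for` shows the common Grouped-Query Attention layout in which consecutive query heads share one K/V head. All function names are hypothetical.

```python
def alibi_slopes(num_heads):
    """Per-head ALiBi slopes for a power-of-two number of heads."""
    assert num_heads > 0 and num_heads & (num_heads - 1) == 0, \
        "this sketch covers power-of-two head counts only"
    # Head h (1-based) gets slope 2 ** (-8 * h / num_heads).
    return [2.0 ** (-8.0 * h / num_heads) for h in range(1, num_heads + 1)]


def alibi_bias(slope, seq_len):
    """Causal bias added to attention logits for one head.

    Zero on the diagonal, more negative for keys further in the past,
    and -inf for future positions (the causal mask).
    """
    return [
        [-slope * (q - k) if k <= q else float("-inf") for k in range(seq_len)]
        for q in range(seq_len)
    ]


def kv_head_for(query_head, num_heads, num_kv_heads):
    """Index of the K/V head that a given query head reads from under GQA."""
    group_size = num_heads // num_kv_heads
    return query_head // group_size


# nano preset: 4 query heads share 2 K/V heads
print([kv_head_for(h, num_heads=4, num_kv_heads=2) for h in range(4)])
```

In all three presets the table gives a 2:1 ratio of query heads to K/V heads, so each K/V head serves two query heads.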

| preset | layers | heads | KV heads | hidden | FFN | max seq | extras |
|---|---|---|---|---|---|---|---|
| nano-rope | 3 | 4 | 2 | 96 | 256 | 128 | RoPE ✓ · 16 latent tokens |
| mini-rope | 6 | 6 | 3 | 384 | 1024 | 128 | RoPE ✓ · 24 latent tokens |
| small-rope | 12 | 12 | 6 | 768 | 2048 | 128 | RoPE ✓ · 48 latent tokens |

Enhanced architecture – Rotary Positional Embedding + Multi-Head Latent Attention on top of the baseline.
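For readers unfamiliar with rotary embeddings, the following is a minimal sketch of the rotation applied to a single query or key vector. It assumes the interleaved pairing (dimensions 0-1, 2-3, ...) and the commonly used base of 10000; the project may use the half-split layout or a different base, and the latent-attention part is not covered here. The `rope` function name is hypothetical.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by position-dependent angles."""
    d = len(vec)
    assert d % 2 == 0, "RoPE needs an even head dimension"
    out = []
    for i in range(0, d, 2):
        # i equals 2 * pair_index, so the frequency is base ** (-2 * pair_index / d)
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.7, 0.5]
k = [1.0, 0.4, -0.6, 0.9]
# The attention score depends only on the relative offset (2 in both cases).
print(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 12), rope(k, 10)))
```

The key property is visible in the last line: rotating both vectors leaves their dot product a function of the position difference alone, which is what lets attention scores encode relative position.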

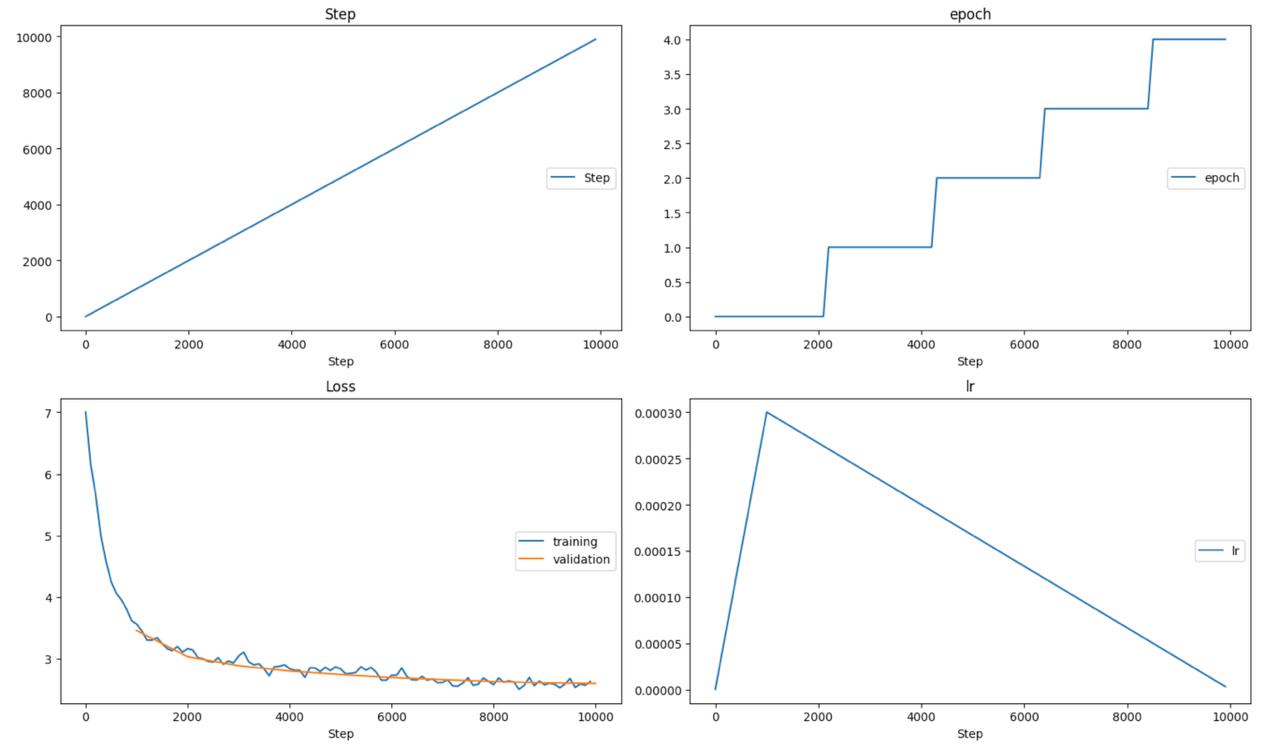

3 · Training & Data

- Dataset: a cleaned collection of Russian jokes (IgorVolochay/russian_jokes).

- Optimizer: AdamW with linear warm-up and gradient clipping at 1.0.

- Loss: masked next-token cross-entropy, padding-aware.

(The full training scripts and notebooks will be published right after grading is finished.)
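Until the scripts are published, the training bullets above can be illustrated with a small sketch. It is written in plain Python so it runs without PyTorch, and it rests on several assumptions: that the clipping threshold of 1.0 applies to the global gradient norm, that the learning rate stays constant after warm-up, and that padding is identified by a single `pad_id`. All function names are hypothetical. In PyTorch the same ideas are available as `torch.nn.functional.cross_entropy(..., ignore_index=pad_id)` and `torch.nn.utils.clip_grad_norm_`, which the project may or may not use.

```python
import math


def masked_next_token_loss(logits, token_ids, pad_id):
    """Mean cross-entropy of predicting token t+1 from position t, skipping pads.

    logits[t][v] is the score of vocabulary item v at position t;
    token_ids has the same length as logits.
    """
    total, count = 0.0, 0
    for t in range(len(token_ids) - 1):
        target = token_ids[t + 1]
        if target == pad_id:
            continue                      # padded targets do not contribute
        row = logits[t]
        m = max(row)                      # subtract the max for numerical stability
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[target]
        count += 1
    return total / max(count, 1)


def linear_warmup_lr(step, base_lr, warmup_steps):
    """Ramp linearly up to base_lr over warmup_steps, then hold it (assumption)."""
    return base_lr * min(1.0, (step + 1) / warmup_steps)


def clip_by_global_norm(grads, max_norm=1.0):
    """Scale a flat list of gradients so their L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    scale = min(1.0, max_norm / (norm + 1e-6))
    return [g * scale for g in grads]
```

Averaging over non-pad targets only keeps the loss comparable between batches with different amounts of padding.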

4 · Evaluation

| model | eval loss ↓ |
|---|---|
| base nano | 3.3025 |
| base mini | 2.6147 |
| rope + latent nano | 3.2494 |
| rope + latent mini | 2.5952 |

Lower is better. The rotary + latent variant lowers eval loss at both sizes: by 0.0531 for nano (3.3025 → 3.2494) and by 0.0195 for mini (2.6147 → 2.5952), a consistent though modest improvement.
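If the eval loss is the mean per-token cross-entropy in nats (an assumption; the card does not state the unit), it converts to perplexity via the exponential. The short sketch below does that conversion for the four reported numbers and prints the relative change within each size.

```python
import math

# Eval losses copied from the table above.
eval_loss = {
    "base nano": 3.3025,
    "base mini": 2.6147,
    "rope + latent nano": 3.2494,
    "rope + latent mini": 2.5952,
}


def perplexity(loss):
    """Token-level perplexity, assuming `loss` is mean cross-entropy in nats."""
    return math.exp(loss)


for name, loss in eval_loss.items():
    print(f"{name}: loss={loss:.4f}  perplexity={perplexity(loss):.2f}")

for size in ("nano", "mini"):
    base = eval_loss[f"base {size}"]
    rope = eval_loss[f"rope + latent {size}"]
    print(f"{size}: relative loss reduction = {(base - rope) / base:.2%}")
```

These perplexities are per byte-level BPE token, so they are only comparable between models that share this tokenizer.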

5 · Repository Notes

- Each major experiment lives in its own commit tag.

- After course review, the complete training source will be pushed to the public branch.

6 · Quick peek at the model’s sense of humour

(exact raw samples taken from my own training run)

| prompt (ru) | continuation produced by the model |
|---|---|
| Встречаются как-то | Встречаются как-то в Москве, один другому говорит: — Представляешь, на кого ты встретишься? |
| Приходит мужик к врачу | Приходит мужик к врачу и видит, что у него болит голова. Врач его спрашивает: — А у меня есть палки? |
| Сидит Вовочка на уроке | Сидит Вовочка на уроке, с мамой папе идёт к маме: — Сынок, ты в капусте, а ты — виски? — А ты козёл! Дай мне денег на розетку. |
| Штирлиц подумал | Штирлиц подумал и говорит: «Теперь я буду дарить тебе макуляцию». |
| Звонок в дверь | Звонок в дверь. Упал с работы. Она: — Всё! По-моему, мы с дружкой пропали. |

(top_k=10, max_new_tokens=200, eos_token_id=tokenizer.eos_token_id, do_sample=True)
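The sampling settings above correspond to top-k sampling with an early stop at the end-of-sequence token. The sketch below shows roughly what such a loop does; it is not the model's `generate` method. `next_logits` is a hypothetical callback standing in for a forward pass that returns the scores for the next token, and whether the real implementation keeps the EOS token in the output is an assumption.

```python
import math
import random


def sample_top_k(logits, k=10, rng=random):
    """Sample one token id from the k highest-scoring entries of `logits`."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = logits[top[0]]                                # largest logit, for stability
    weights = [math.exp(logits[i] - m) for i in top]  # unnormalized softmax over the top k
    return rng.choices(top, weights=weights, k=1)[0]


def generate(next_logits, prompt_ids, max_new_tokens=200, eos_token_id=None,
             top_k=10, rng=random):
    """Append sampled tokens until EOS is produced or the token budget runs out."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        token = sample_top_k(next_logits(ids), k=top_k, rng=rng)
        ids.append(token)
        if token == eos_token_id:
            break
    return ids
```

With `top_k=10` every token outside the ten most likely candidates gets zero probability, which trims the long tail of implausible tokens while keeping enough randomness for varied jokes.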

7 · How to run the model

pip install "transformers>=4.40" torch

import torch

# ByteLevelBPETokenizer and TransformerForCausalLM are this project's own classes.
# Import them from the project source once it is published (see the note below).

REPO_NAME = "amocualg/llm-course-hw1"
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Load the tokenizer and the model weights from the Hub
tokenizer = ByteLevelBPETokenizer.from_pretrained(REPO_NAME)
check_model = TransformerForCausalLM.from_pretrained(REPO_NAME)
check_model = check_model.to(device).eval()

text = "Штирлиц пришел домой"  # change if needed
input_ids = torch.tensor(tokenizer.encode(text), device=device)

# Sample a continuation; input_ids[None, :] adds the batch dimension
with torch.no_grad():
    model_output = check_model.generate(
        input_ids[None, :],
        max_new_tokens=200,
        eos_token_id=tokenizer.eos_token_id,
        do_sample=True,
        top_k=10,
    )

print(tokenizer.decode(model_output[0].tolist()))

ByteLevelBPETokenizer and TransformerForCausalLM are custom classes that will become available together with the source code.

This model has been pushed to the Hub using the PyTorchModelHubMixin integration.
