metadata

license: apache-2.0

language:

- en

- zh

base_model:

- deepseek-ai/DeepSeek-R1-Distill-Qwen-7B

pipeline_tag: text2text-generation

tags:

- Reward_Model

- Reasoning_Model

Model Card for IF-Verifier-7B

Model Details

Model Description

- Developed by: Hao Peng@THUKEG

- Model type: Generative reward model

- Language(s) (NLP): English, Chinese

- License: apache-2.0

- Finetuned from model: deepseek-ai/DeepSeek-R1-Distill-Qwen-7B

Model Sources

- Repository: https://github.com/THU-KEG/VerIF

- Paper: https://arxiv.org/abs/2506.09942

Training Details

Training Data

IF-Verifier-7B is fine-tuned from DeepSeek-R1-Distill-Qwen-7B on IF-Verifier-Data, a dataset of 131k critic examples. The model verifies soft constraints in instruction following. Deploying IF-Verifier-7B requires only a single H800 GPU, with an average reward computation time of 120 seconds per batch; using multiple GPUs can reduce this further.
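The sketch below illustrates one way a generative verifier's text output could be turned into a reward for soft constraints. It is not the VerIF implementation: the prompt template, the YES/NO verdict convention, the fraction-of-constraints reward, and the names `build_prompt`, `parse_verdict`, and `soft_constraint_reward` are all assumptions made for illustration. The actual prompt and scoring logic are in the GitHub repository.

```python
import re
from typing import Callable, List

# Hypothetical prompt template (an assumption, not the template used by VerIF).
PROMPT_TEMPLATE = (
    "Instruction:\n{instruction}\n\n"
    "Response:\n{response}\n\n"
    "Constraint:\n{constraint}\n\n"
    "Does the response satisfy the constraint? Answer YES or NO."
)


def build_prompt(instruction: str, response: str, constraint: str) -> str:
    """Fill the hypothetical template for a single soft constraint."""
    return PROMPT_TEMPLATE.format(
        instruction=instruction, response=response, constraint=constraint
    )


def parse_verdict(output: str) -> bool:
    """Return True if the last upper-case YES/NO token in the output is YES.

    A reasoning model typically writes its chain of thought before the final
    answer, so only the last verdict token is used. Outputs that contain no
    verdict token are treated as "not satisfied".
    """
    matches = re.findall(r"\b(YES|NO)\b", output)
    return bool(matches) and matches[-1] == "YES"


def soft_constraint_reward(
    instruction: str,
    response: str,
    constraints: List[str],
    generate: Callable[[str], str],
) -> float:
    """Fraction of constraints the verifier judges as satisfied (assumed scoring)."""
    if not constraints:
        return 0.0
    verdicts = [
        parse_verdict(generate(build_prompt(instruction, response, c)))
        for c in constraints
    ]
    return sum(verdicts) / len(verdicts)
```

Here `generate` is any callable that maps a prompt string to the verifier's text output, for example a thin wrapper around whatever inference server hosts the model. Passing it in as a parameter keeps the reward logic independent of the deployment setup.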

Results

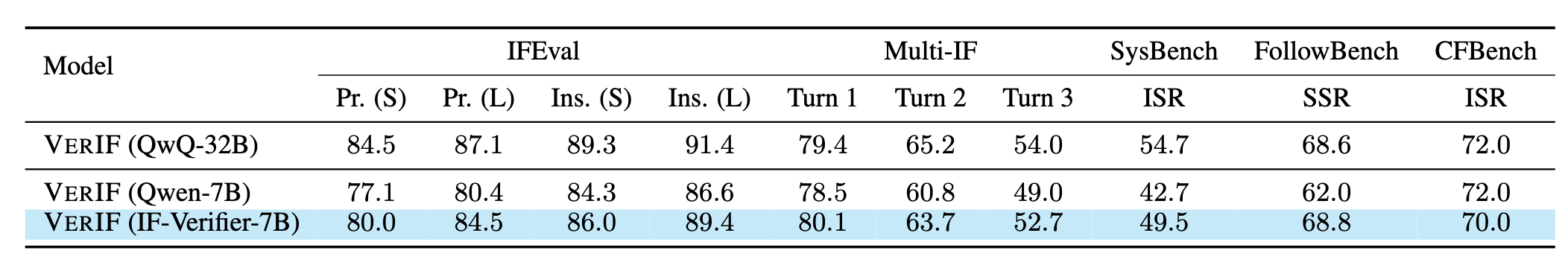

A model trained with IF-Verifier-7B as the verifier performs comparably to one trained with QwQ-32B as the verifier.

Summary

Please refer to our paper and our GitHub repo (https://github.com/THU-KEG/VerIF) for more details.

Citation

If you find this model helpful, please cite our paper:

@misc{peng2025verif,

title={VerIF: Verification Engineering for Reinforcement Learning in Instruction Following},

author={Hao Peng and Yunjia Qi and Xiaozhi Wang and Bin Xu and Lei Hou and Juanzi Li},

year={2025},

eprint={2506.09942},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2506.09942},

}