Motivation:

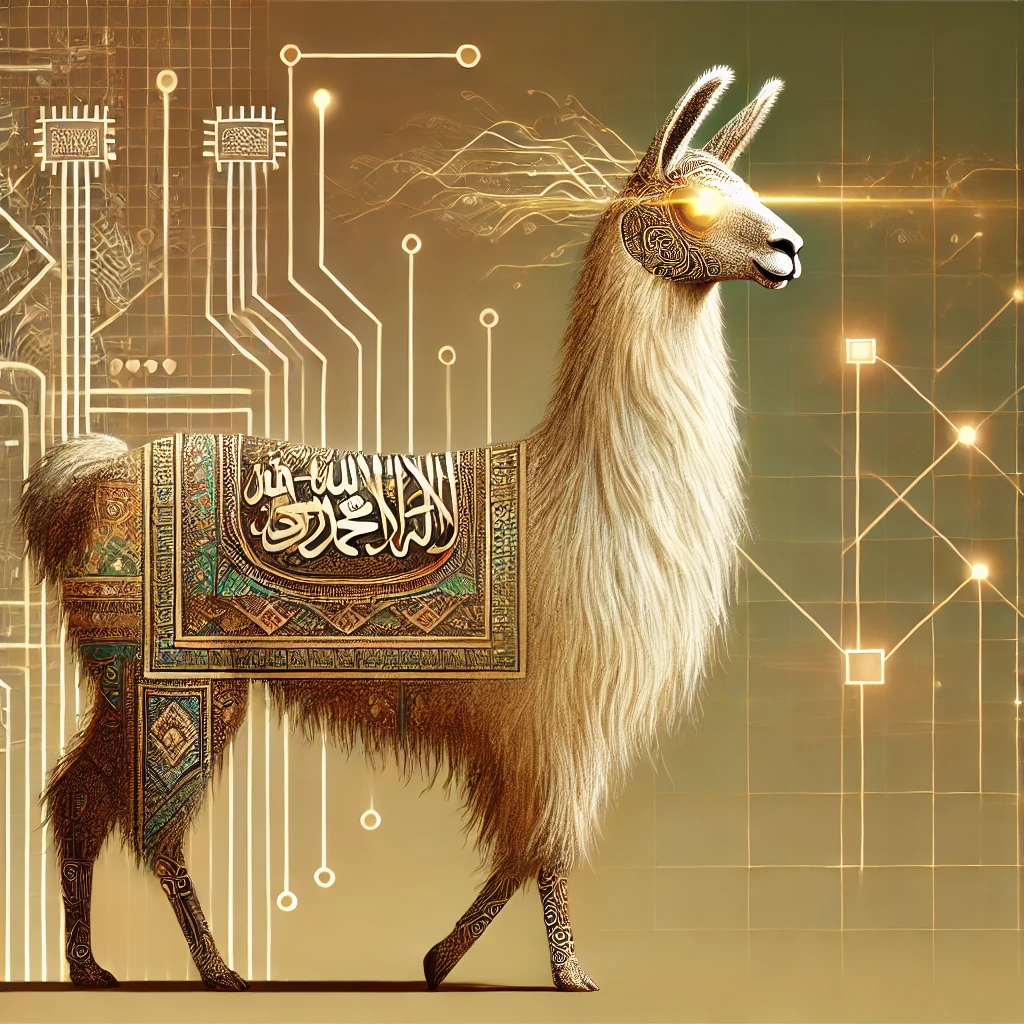

This project set out to adapt large language models to Arabic and to produce a new state-of-the-art Arabic LLM. Because Arabic instruction fine-tuning data is scarce, few LLMs have been trained specifically for Arabic, which is surprising given the large number of Arabic speakers.

Our final model was trained on a high-quality, synthetically generated instruction fine-tuning (IFT) dataset and then evaluated on the Hugging Face Arabic leaderboard.

Training:

This model is the 9B version. It was trained for a week on 4 A100 GPUs using LoRA with a rank of 128, a learning rate of 1e-4, and a cosine learning rate schedule.
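To make the cosine learning rate schedule concrete, here is a minimal sketch in plain Python. Only the peak learning rate of 1e-4 comes from this card. The function name `cosine_lr`, the total step count, the absence of warmup, and the final learning rate of 0 are all assumptions made for illustration, and the actual training script may differ.

```python
import math

PEAK_LR = 1e-4  # learning rate stated in this model card


def cosine_lr(step: int, total_steps: int,
              peak_lr: float = PEAK_LR, min_lr: float = 0.0) -> float:
    """Cosine decay from peak_lr to min_lr over total_steps.

    Assumptions (not from the card): no warmup phase, min_lr = 0.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    # Clamp so steps outside [0, total_steps] stay on the curve's endpoints.
    progress = min(max(step, 0), total_steps) / total_steps
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    total = 1000  # hypothetical number of optimizer steps
    for s in (0, 250, 500, 750, 1000):
        print(f"step {s:4d}: lr = {cosine_lr(s, total):.2e}")
```

Under these assumptions the learning rate starts at 1e-4, falls to half that value at the midpoint of training, and reaches 0 at the final step.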

Evaluation:

| Metric | Slim205/Barka-9b-it |
|---|---|
| Average | 61.71 |
| ACVA | 73.68 |
| AlGhafa | 54.42 |
| MMLU | 52.52 |
| EXAMS | 52.51 |
| ARC Challenge | 59.14 |
| ARC Easy | 59.69 |
| BOOLQ | 86.41 |
| COPA | 58.89 |
| HELLASWAG | 38.04 |
| OPENBOOK QA | 56.16 |
| PIQA | 72.01 |
| RACE | 48.71 |
| SCIQ | 66.43 |
| TOXIGEN | 85.35 |
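
As a quick sanity check, the reported average is consistent with an unweighted mean of the 14 per-benchmark scores in the table. The sketch below assumes that is how the average was computed. The leaderboard's own aggregation may differ, and the dictionary is simply a transcription of the table.

```python
# Per-benchmark scores transcribed from the table above.
scores = {
    "ACVA": 73.68,
    "AlGhafa": 54.42,
    "MMLU": 52.52,
    "EXAMS": 52.51,
    "ARC Challenge": 59.14,
    "ARC Easy": 59.69,
    "BoolQ": 86.41,
    "COPA": 58.89,
    "HellaSwag": 38.04,
    "OpenBook QA": 56.16,
    "PIQA": 72.01,
    "RACE": 48.71,
    "SciQ": 66.43,
    "Toxigen": 85.35,
}

# Assumption: the "Average" row is a plain (unweighted) mean of all benchmarks.
average = sum(scores.values()) / len(scores)
print(f"Average over {len(scores)} benchmarks: {average:.2f}")
```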

Please refer to https://github.com/Slim205/Arabicllm/ for more details.
