Ensemble-Based Deep Learning Architecture for Deepfake Detection

Abstract

This research presents a novel ensemble-based approach for detecting deepfake images using a combination of Convolutional Neural Networks (CNNs) and Vision Transformers (ViT). The system achieves 94.87% accuracy by leveraging three complementary architectures: a 12-layer CNN, a lightweight 6-layer CNN, and a hybrid CNN-ViT model. Our approach demonstrates robust performance in distinguishing between real and manipulated facial images.

1. Introduction

With the increasing sophistication of deepfake technology, detecting manipulated images has become crucial for maintaining digital media integrity. This work introduces an ensemble method that combines traditional CNN architectures with modern Vision Transformers to create a robust detection system.

2. Architecture

2.1 Model Components

The system consists of three distinct models:

Model A (12-layer CNN)

- Three convolutional blocks

- Each block: 2 conv layers + BatchNorm + ReLU + pooling

- Input size: 50x50 pixels

- Dropout rate: 0.3
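As a rough illustration of the Model A description, the following PyTorch sketch stacks three blocks of two convolutions with BatchNorm, ReLU, and pooling. The channel widths (32/64/128), the 3x3 kernels, the placement of BatchNorm after each convolution, and the single linear classifier head are assumptions made for the example; the card does not specify them, so this is not necessarily the released architecture.

```python
import torch
from torch import nn


def conv_block(in_ch: int, out_ch: int) -> nn.Sequential:
    """Two 3x3 convolutions, each followed by BatchNorm and ReLU, then 2x2 max pooling."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
        nn.Conv2d(out_ch, out_ch, kernel_size=3, padding=1),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
        nn.MaxPool2d(2),
    )


class ModelASketch(nn.Module):
    """Hypothetical stand-in for Model A; layer widths are assumed, not taken from the card."""

    def __init__(self, num_classes: int = 2, dropout: float = 0.3):
        super().__init__()
        self.features = nn.Sequential(
            conv_block(3, 32), conv_block(32, 64), conv_block(64, 128)
        )
        # A 50x50 input shrinks to 25, 12, then 6 pixels per side after three poolings.
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Dropout(dropout),
            nn.Linear(128 * 6 * 6, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


model = ModelASketch().eval()
with torch.no_grad():
    logits = model(torch.randn(4, 3, 50, 50))  # batch of 4 random RGB images
print(tuple(logits.shape))
```

The two output logits correspond to the Real and Fake classes.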

Model B (6-layer CNN)

- Lightweight architecture

- Three simple conv layers with pooling

- Input size: 50x50 pixels

- Dropout rate: 0.3
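To size a classifier head for a small CNN like Model B, it helps to track how the 50x50 input shrinks through the three conv-plus-pooling stages. The sketch below uses the standard output-size formula; the 3x3 kernels with padding 1 and the 2x2 pooling with stride 2 are assumptions, since the card only says "simple conv layers with pooling".

```python
def conv2d_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 50  # input height/width stated for Model B
for stage in range(1, 4):
    size = conv2d_out(size, kernel=3, padding=1)  # assumed 3x3 conv, padding 1
    size = conv2d_out(size, kernel=2, stride=2)   # assumed 2x2 max pooling
    print(f"after conv+pool stage {stage}: {size}x{size}")
```

Under these assumptions the final feature map is 6x6, so a flattened feature vector has 36 values per output channel.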

Model C (CNN-ViT Hybrid)

- CNN feature extractor

- Vision Transformer (base-16 architecture)

- Input size: 224x224 pixels

- Pretrained ViT backbone
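For orientation, the arithmetic below shows what a base-16 ViT does with a 224x224 input: it cuts the image into 16x16 patches and prepends one class token. This assumes an RGB image fed to a standard ViT-Base/16; how the CNN feature extractor is connected to the transformer in Model C is not stated in this card, so the sketch covers the patching step only.

```python
image_size = 224  # input size stated for Model C
patch_size = 16   # the "16" in base-16
channels = 3      # assuming RGB input

patches_per_side = image_size // patch_size
num_patches = patches_per_side ** 2
sequence_length = num_patches + 1  # patches plus one class token
patch_dim = patch_size * patch_size * channels  # values in one flattened patch

print(f"{patches_per_side}x{patches_per_side} grid, {num_patches} patches, "
      f"{sequence_length} tokens, {patch_dim} values per patch")
```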

2.2 Ensemble Strategy

The final prediction is determined through majority voting among the three models, enhancing robustness and reducing individual model biases.
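A minimal sketch of majority voting over the three models' labels is shown below. The function name and the per-model predictions are hypothetical. With three voters and two classes a tie cannot occur, so no tie-breaking rule is needed.

```python
from collections import Counter


def majority_vote(votes):
    """Return the label predicted by most models."""
    return Counter(votes).most_common(1)[0][0]


# Hypothetical per-model predictions for one image
votes = {"model_a": "Fake", "model_b": "Real", "model_c": "Fake"}
final = majority_vote(list(votes.values()))
print(final)  # Fake
```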

3. Implementation Details

3.1 Dataset

- Dataset: Hemg/deepfake-and-real-images

- Split: 80% training, 20% testing

- Preprocessing: resize, normalization
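One way to produce an 80/20 split is to shuffle sample indices with a fixed seed and cut the list. The helper name, the seed, and the sample count of 1000 are illustrative assumptions; the card does not say how the split was actually drawn.

```python
import random


def split_indices(n_samples: int, test_fraction: float = 0.2, seed: int = 42):
    """Shuffle indices reproducibly and return (train_indices, test_indices)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = split_indices(1000)  # 1000 is a placeholder dataset size
print(len(train_idx), len(test_idx))  # 800 200
```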

3.2 Training Parameters

- Optimizer: Adam

- Learning rate: 1e-4

- Batch size: 32

- Epochs: 10

- Loss function: Cross-Entropy
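The parameters above map onto a PyTorch training step roughly as follows. The linear stand-in model and the random batch are placeholders so the example runs on its own; a real run would use the models from section 2 and repeat this step over the dataset for 10 epochs.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Placeholder model and data; only the optimizer, learning rate,
# batch size, and loss function come from the list above.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 50 * 50, 2))
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)
criterion = nn.CrossEntropyLoss()

images = torch.randn(32, 3, 50, 50)    # one batch of 32 random "images"
labels = torch.randint(0, 2, (32,))    # 0 = Real, 1 = Fake (label order assumed)

model.train()
optimizer.zero_grad()
loss = criterion(model(images), labels)  # expects raw logits, not softmax output
loss.backward()
optimizer.step()
print(f"loss after one step: {loss.item():.4f}")
```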

4. Results

4.1 Performance Metrics

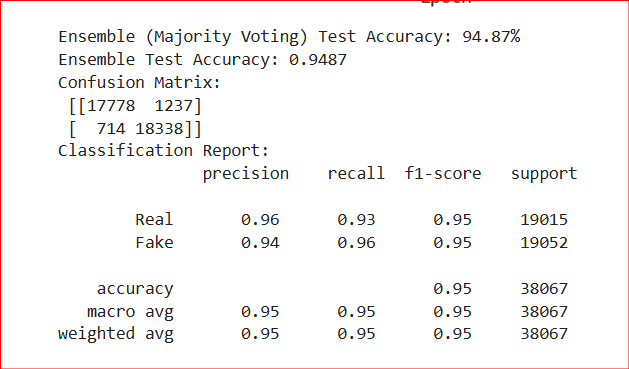

Based on the test set evaluation:

- Overall Accuracy: 94.87%

- Classification Report:

  - Real Images: precision 0.95, recall 0.94, F1-score 0.94

  - Fake Images: precision 0.94, recall 0.95, F1-score 0.95
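The F1-score is the harmonic mean of precision and recall, so the reported values can be sanity-checked from the rounded figures above. Both classes give roughly 0.945 from the two-decimal inputs, which sits on the boundary between 0.94 and 0.95; the differing reported F1 values presumably come from the unrounded precision and recall.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for name, p, r in [("Real", 0.95, 0.94), ("Fake", 0.94, 0.95)]:
    print(f"{name}: F1 = {f1_score(p, r):.4f}")
```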

4.2 Deployment

The system is deployed as a FastAPI service, providing real-time inference with confidence scores.

4.3 Visuals

Figure 1: Findings of the proposed ensemble-based deepfake detection system

Figure 2: Confusion matrix showing model performance on test set

Figure 3: Loss vs Epochs for individual models

Figure 4: Accuracy vs Epochs for individual models

5. Technical Requirements

- Python 3.x

- PyTorch

- timm

- FastAPI

- PIL

- scikit-learn

6. Usage

6.1 API Endpoint

POST /predict/

Input: Image file

Output: {"prediction": "Real/Fake", "confidence": "percentage"}
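The sketch below shows one way a response of this shape could be assembled from the three models' outputs. The helper name and the confidence rule (mean winning-class probability over the models that voted with the majority) are assumptions made for illustration; the card does not describe how the service computes its confidence score.

```python
import json


def build_response(fake_probs):
    """Build a /predict/-style response from each model's P(fake)."""
    votes = ["Fake" if p >= 0.5 else "Real" for p in fake_probs]
    label = "Fake" if votes.count("Fake") > votes.count("Real") else "Real"
    # Assumed rule: average the winning-class probability over the agreeing models.
    winning = [p if label == "Fake" else 1 - p
               for p, v in zip(fake_probs, votes) if v == label]
    confidence = sum(winning) / len(winning)
    return {"prediction": label, "confidence": f"{confidence * 100:.2f}%"}


# Hypothetical P(fake) values from Models A, B, and C
response = build_response([0.91, 0.40, 0.85])
print(json.dumps(response))
```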

6.2 Model Training

Run the cnn-vit file to train the models on a custom dataset.

7. Conclusions

The ensemble approach performs strongly on deepfake detection, reaching 94.87% test accuracy by combining traditional CNNs with a modern Vision Transformer. Its high accuracy and balanced precision and recall across both classes make it a candidate for real-world applications. Model C does not perform well at epoch 10, but the overall ensemble result is still good.

8. Future Work

- Integration of attention mechanisms in CNN models

- Exploration of different ensemble strategies

- Extension to video deepfake detection

- Investigation of model compression techniques

References

- Vision Transformer (ViT) - Dosovitskiy et al., 2020

- timm library - Ross Wightman

- FastAPI - Sebastián Ramírez

License

MIT License

Dataset used to train Saqib772/CNN_VIT_DeepFake

Evaluation results

- Test Accuracy on Deepfake and Real Images Dataset (self-reported): 94.870

- F1 Score on Deepfake and Real Images Dataset (self-reported): 0.940

- Precision on Deepfake and Real Images Dataset (self-reported): 0.950

- Recall on Deepfake and Real Images Dataset (self-reported): 0.940