Sentinel Probing Classifier (Logistic Regression)

This repository contains the sentence-level classifier used in Sentinel, a lightweight context compression framework introduced in our paper:

Sentinel: Attention Probing of Proxy Models for LLM Context Compression with an Understanding Perspective

Yong Zhang, Yanwen Huang, Ning Cheng, Yang Guo, Yun Zhu, Yanmeng Wang, Shaojun Wang, Jing Xiao

📄 Paper (arXiv 2025): https://arxiv.org/abs/2505.23277 | 💻 Code on GitHub: https://github.com/yzhangchuck/Sentinel

🧠 What is Sentinel?

Sentinel reframes LLM context compression as a lightweight attention-based understanding task. Instead of fine-tuning a full compression model, it:

- Extracts decoder attention from a small proxy LLM (e.g., Qwen-2.5-0.5B)

- Computes sentence-level attention features

- Applies a logistic regression (LR) classifier to select relevant sentences

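This approach is efficient because no dedicated compression model is fine-tuned, and it is interpretable because relevance is predicted by a linear classifier over attention features. It is also model-agnostic.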
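The second step above, turning token-level attention into one feature vector per sentence, can be sketched as follows. This is a minimal illustration and not the repository's implementation: the function name `sentence_features`, the nested-list layout `attn[layer][head][token]`, and the use of mean pooling over each sentence's tokens are all assumptions made for the example. The pipeline on GitHub is the reference for the actual feature definition.

```python
def sentence_features(attn, spans):
    """Pool attention into one feature vector per sentence.

    attn  -- hypothetical layout: attn[layer][head][token] is the attention
             weight that the final query token places on context token `token`.
    spans -- list of (start, end) token ranges, one per sentence, end exclusive.

    Returns a list with one vector per sentence; each vector has
    num_layers * num_heads entries (mean pooling is an assumption here).
    """
    features = []
    for start, end in spans:
        if end <= start:
            raise ValueError(f"empty sentence span: ({start}, {end})")
        vec = []
        for layer in attn:
            for head in layer:
                window = head[start:end]
                # Average attention mass received by this sentence's tokens.
                vec.append(sum(window) / len(window))
        features.append(vec)
    return features


# Toy input: 1 layer, 2 heads, 3 context tokens, 2 sentences.
toy_attn = [[[0.1, 0.3, 0.6], [0.2, 0.2, 0.6]]]
toy_spans = [(0, 2), (2, 3)]
toy_features = sentence_features(toy_attn, toy_spans)
```

Each resulting vector has one entry per (layer, head) pair, which is the shape a linear classifier over attention heads would expect under these assumptions.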

📦 Files Included

| File | Description |
|---|---|
| `sentinel_lr_model.pkl` | Trained logistic regression classifier |
| `sentinel_config.json` | Feature extraction configuration |

🚀 Usage

Apply this classifier to attention-derived feature vectors to predict sentence-level relevance scores.
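A minimal sketch of scoring and selecting sentences is shown below. It rests on several assumptions: that `sentinel_lr_model.pkl` was written with the standard `pickle` module (if it was saved with joblib, `joblib.load` would be needed instead), that the object exposes a scikit-learn style `predict_proba`, and that class index 1 means "relevant". The helper names `load_classifier` and `select_sentences` and the 0.5 threshold are hypothetical and chosen only for illustration.

```python
import pickle


def load_classifier(path="sentinel_lr_model.pkl"):
    """Load the pickled classifier. Only unpickle files from a trusted source."""
    with open(path, "rb") as f:
        return pickle.load(f)


def select_sentences(clf, features, sentences, threshold=0.5):
    """Score sentences and keep those at or above `threshold`, in original order.

    clf       -- any object with predict_proba(features) returning one
                 [p_irrelevant, p_relevant] row per sentence (assumed layout).
    features  -- one attention-derived feature vector per sentence.
    sentences -- the sentence strings, aligned with `features`.
    """
    if len(features) != len(sentences):
        raise ValueError("features and sentences must have the same length")
    probs = clf.predict_proba(features)
    # Column 1 is taken as the probability of the "relevant" class (assumption).
    scores = [float(row[1]) for row in probs]
    kept = [s for s, p in zip(sentences, scores) if p >= threshold]
    return scores, kept
```

With the real model, usage would look like `clf = load_classifier()` followed by `scores, kept = select_sentences(clf, features, sentences)`, where `features` comes from the feature extraction pipeline. If the file is a scikit-learn model, scikit-learn must be installed for unpickling to succeed.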

🛠 Feature extraction code and full pipeline available at: 👉 https://github.com/yzhangchuck/Sentinel

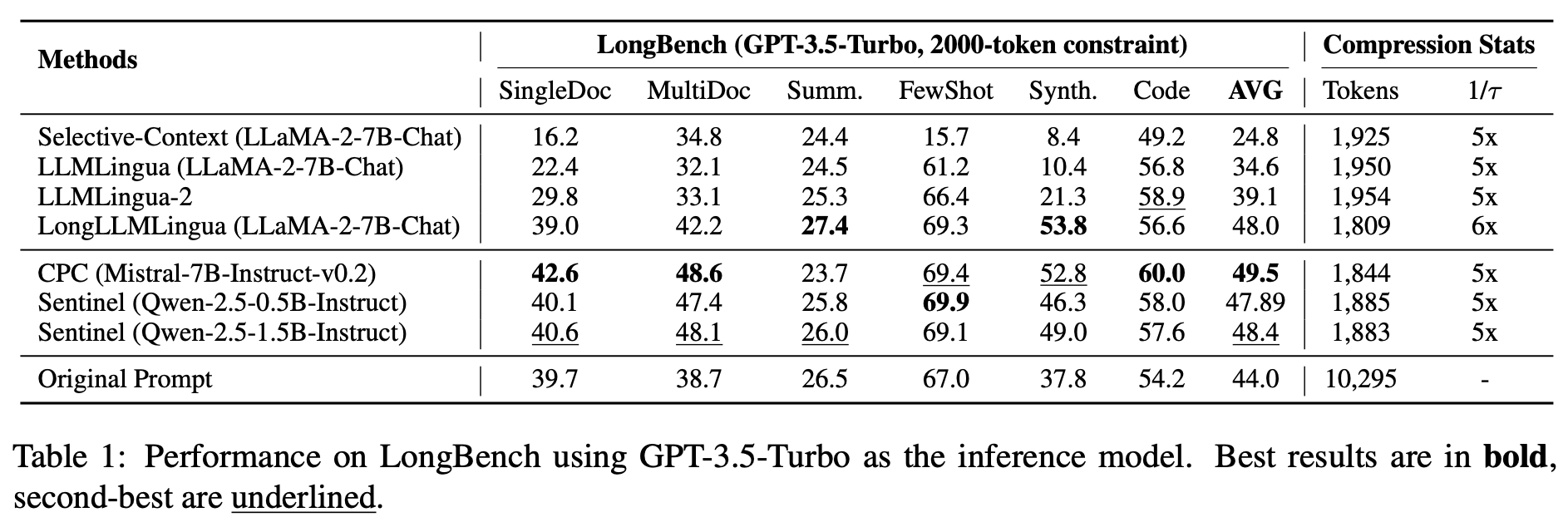

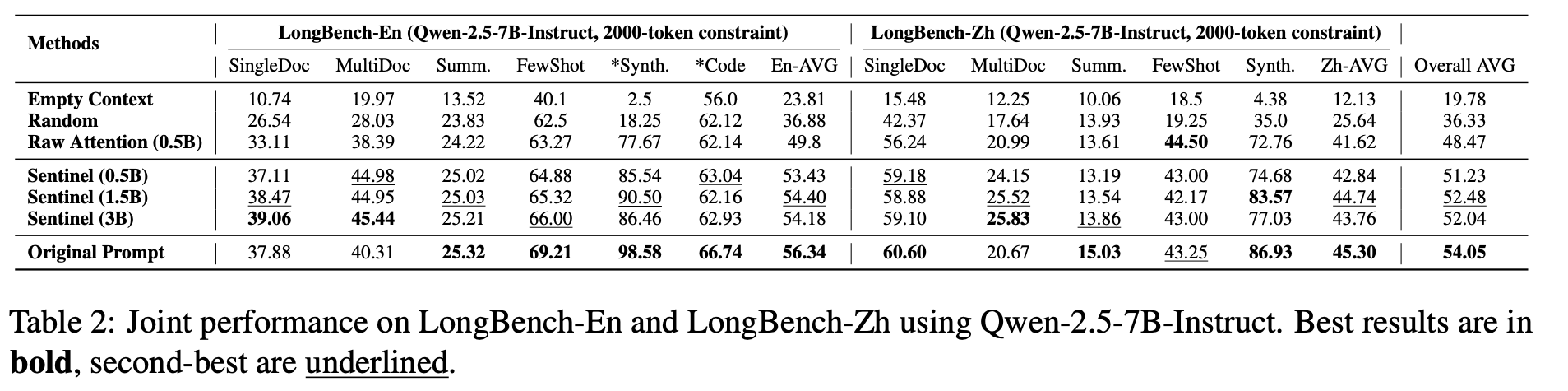

📈 Benchmark Results

📄 Citation

Please cite us if you use this model:

@misc{zhang2025sentinelattentionprobingproxy,
  title={Sentinel: Attention Probing of Proxy Models for LLM Context Compression with an Understanding Perspective},
  author={Yong Zhang and Yanwen Huang and Ning Cheng and Yang Guo and Yun Zhu and Yanmeng Wang and Shaojun Wang and Jing Xiao},
  year={2025},
  eprint={2505.23277},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2505.23277},
}

📬 Contact

• 📧 [email protected]

• 🔗 Project: https://github.com/yzhangchuck/Sentinel

🔒 License

Apache License 2.0 — Free for research and commercial use with attribution.
