NEXTA-SA/NextaX1-1.5B

Model Description

NEXTA-SA/NextaX1-1.5B is a specialized language model fine-tuned for multilingual marketing content generation. Built on DeepSeek-R1-Distill-Qwen-1.5B, it achieves strong performance on marketing-specific tasks with only 1.5 billion parameters by using parameter-efficient fine-tuning (LoRA). The model delivers 92-95% of the quality of models 20-50x larger while providing 10-15x faster inference and 80-95% lower deployment costs. This makes it particularly valuable for enterprise deployments where resource efficiency and cost-effectiveness are important considerations.
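Parameter-efficient fine-tuning here refers to LoRA, with rank 16 and alpha 32 as listed under Technical Details. The sketch below is a minimal, dependency-free illustration of the standard LoRA formulation for a single linear layer, not NEXTA-SA's training code; `LoRALinear` and `lora_trainable_params` are hypothetical names. The frozen weight W stays fixed and only the low-rank factors A and B would be trained, with their product scaled by alpha / r.

```python
import random


def matvec(m, v):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Hypothetical layer: frozen weight W plus a trainable rank-r update scaled by alpha / r."""

    def __init__(self, d_in, d_out, r=16, alpha=32, seed=0):
        rng = random.Random(seed)
        # Frozen pretrained weight W (d_out x d_in); fine-tuning never updates it.
        self.W = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
        # Trainable factors: A (r x d_in) starts random and B (d_out x r) starts at zero,
        # so the adapted layer initially behaves exactly like the base layer.
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r  # 32 / 16 = 2.0 with the settings listed on this card

    def forward(self, x):
        # y = W x + (alpha / r) * B (A x); the full d_out x d_in update is never built.
        base = matvec(self.W, x)
        low_rank = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, low_rank)]

    def merged_weight(self):
        # After training, the update can be folded in: W' = W + (alpha / r) * B A.
        r = len(self.A)
        return [
            [w + self.scale * sum(self.B[i][k] * self.A[k][j] for k in range(r))
             for j, w in enumerate(row)]
            for i, row in enumerate(self.W)
        ]


def lora_trainable_params(d_in, d_out, r):
    """Trainable parameters added per adapted matrix: r * d_in (A) + d_out * r (B)."""
    return r * (d_in + d_out)


# Illustrative 1536 x 1536 projection (an assumed size, not taken from this card).
full = 1536 * 1536
added = lora_trainable_params(1536, 1536, r=16)
print(added, full, f"{added / full:.2%}")  # 49152 2359296 2.08%
```

Because B starts at zero, the adapted layer initially reproduces the base layer exactly; `merged_weight()` shows how a trained update can be folded back into W so inference carries no extra cost. For the illustrative square projection, the adapter adds about 2% of that matrix's parameters.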

Key Features

- Parameter-Efficient: Only 1.5B parameters, enabling deployment on consumer-grade hardware with as little as 4 GB of VRAM.
- Multilingual Support: Strong performance in English and Arabic, with additional support for Spanish, French, German, Portuguese, and Japanese.
- Domain-Specialized: Optimized specifically for marketing content generation.
- Resource-Efficient: Substantially lower memory footprint and operational costs than larger models.
- High Performance: Maintains 92-95% of the quality of models 20-50x larger.
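The 4 GB VRAM figure is easier to judge with a back-of-the-envelope estimate of weight memory. The sketch below assumes the nominal 1.5B parameter count and a 4 GiB budget, and it deliberately ignores quantization constants, any layers kept at higher precision, the KV cache, and activations, all of which add to the real footprint; `weight_memory_gib` is a hypothetical helper.

```python
def weight_memory_gib(n_params, bits_per_param):
    """Memory needed for the weights alone, in GiB (1 GiB = 2**30 bytes)."""
    return n_params * bits_per_param / 8 / 2**30


N_PARAMS = 1.5e9  # nominal parameter count stated on this card
VRAM_GIB = 4.0    # assumed 4 GiB memory budget

for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("int8", 8), ("4-bit (NF4)", 4)]:
    weights = weight_memory_gib(N_PARAMS, bits)
    # Headroom left for the KV cache, activations, and runtime overhead (not modeled here).
    headroom = VRAM_GIB - weights
    print(f"{label:>12}: weights {weights:.2f} GiB, headroom {headroom:.2f} GiB")
```

At 16-bit precision the weights alone come to roughly 2.8 GiB, while 4-bit storage (such as the NF4 format listed under Technical Details) brings them to about 0.7 GiB, leaving most of the budget for the KV cache and activations. Full 32-bit weights would not fit at all.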

Use Cases

NEXTA-SA/NextaX1-1.5B is designed for a range of marketing content generation tasks:

- Campaign Strategies: Generate comprehensive marketing campaign outlines, including audience targeting, messaging frameworks, and channel strategies.
- Social Media Content: Create platform-specific posts optimized for engagement.
- Email Marketing: Produce effective email templates, subject lines, and nurture sequences.
- Ad Copy: Develop compelling ad headlines, descriptions, and calls to action.
- Content Ideation: Generate content topics and angles based on audience interests and business objectives.

Technical Details

- Base Model: DeepSeek-R1-Distill-Qwen-1.5B
- Architecture: Decoder-only transformer inherited from the base model (28 layers, hidden size 1536)
- Fine-Tuning: Low-Rank Adaptation (LoRA) with rank r = 16 and alpha = 32
- Quantization: 4-bit NormalFloat (NF4)
- Training Data: 1.2 million marketing content examples across multiple languages
- Maximum Sequence Length: 4,096 tokens
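To make the NF4 entry concrete, here is a minimal pure-Python sketch of blockwise NF4 quantization. It assumes the level construction used for NF4 in the QLoRA work and in bitsandbytes (standard-normal quantiles with one extra positive value, an offset of 0.9677083, normalized to [-1, 1]) and an assumed block size of 64. The function names are hypothetical, and a real deployment would use an optimized library rather than this code.

```python
from statistics import NormalDist


def linspace(start, stop, n):
    """n evenly spaced points from start to stop, inclusive."""
    step = (stop - start) / (n - 1)
    return [start + i * step for i in range(n)]


def nf4_levels(offset=0.9677083):
    """16 NF4 code values: 8 positive and 7 negative normal quantiles plus an exact zero."""
    q = NormalDist().inv_cdf
    pos = [q(p) for p in linspace(offset, 0.5, 9)[:-1]]  # drop p = 0.5 (quantile 0)
    neg = [-q(p) for p in linspace(offset, 0.5, 8)[:-1]]
    levels = sorted(pos + neg + [0.0])
    top = max(levels)
    return [v / top for v in levels]  # normalize to [-1, 1]


def quantize_block(block, levels):
    """Scale a block by its absmax, then store each value as the index of the nearest level."""
    absmax = max(abs(v) for v in block) or 1.0  # avoid dividing by zero on all-zero blocks
    codes = [min(range(len(levels)), key=lambda i: abs(v / absmax - levels[i])) for v in block]
    return codes, absmax


def dequantize_block(codes, absmax, levels):
    """Map 4-bit indices back to approximate weights using the block's scale."""
    return [levels[c] * absmax for c in codes]


def quantize(weights, levels, block_size=64):
    """Blockwise quantization: one absmax scale per block of block_size weights."""
    return [quantize_block(weights[s:s + block_size], levels)
            for s in range(0, len(weights), block_size)]


levels = nf4_levels()
print([round(v, 4) for v in levels])
```

Each weight ends up as a 4-bit index into the 16 levels plus one shared absmax scale per block. Spacing the levels by normal quantiles puts more resolution near zero, where most pretrained weights lie.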

Limitations

- Optimized specifically for marketing content; performance may vary in other domains.
- Text-only generation; the model has no image capabilities.
- May require additional examples when handling highly technical industry jargon.

Acknowledgments

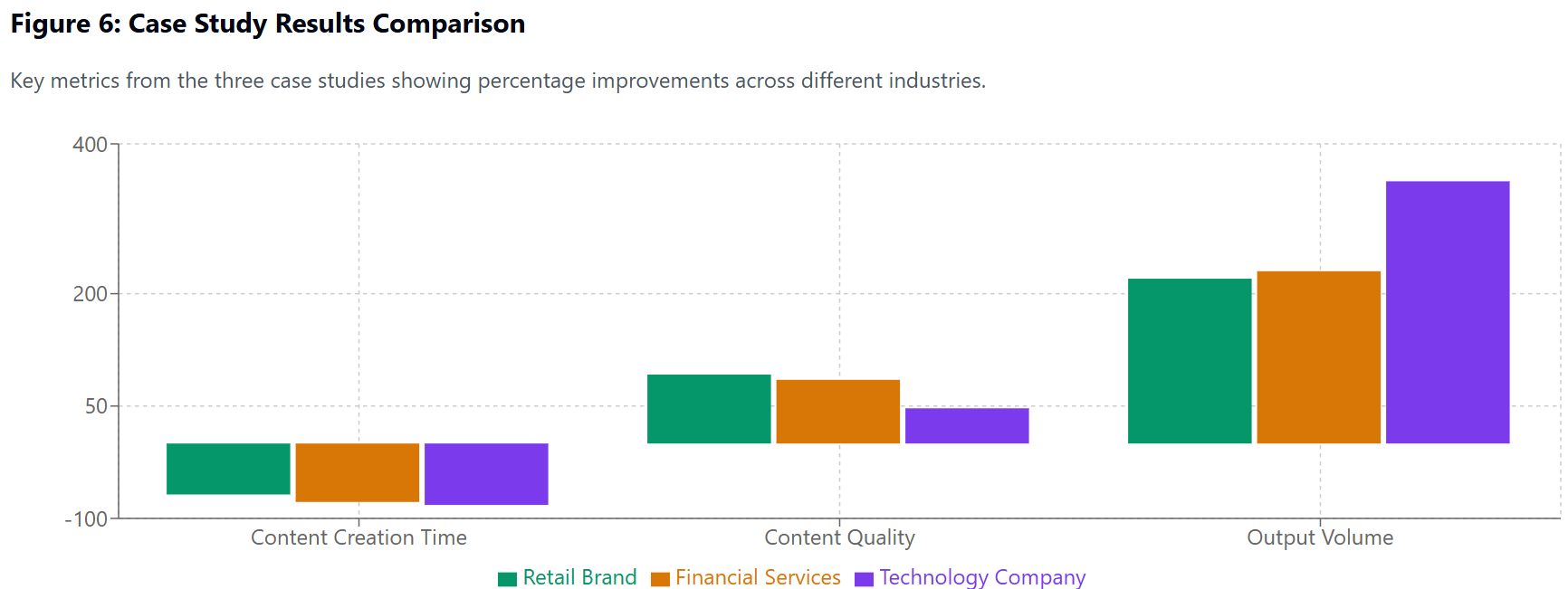

We would like to thank our industry partners for their collaboration in case studies and model evaluation. We also acknowledge the contributions of the DeepSeek team for their foundational work on the base model used in this research.

Model tree for NEXTA-SA/NextaX1-1.5B

Base model: deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B