---
tags:
- text-to-image
library_name: diffusers
license: apache-2.0
---

DMM: Building a Versatile Image Generation Model via Distillation-Based Model Merging

Introduction

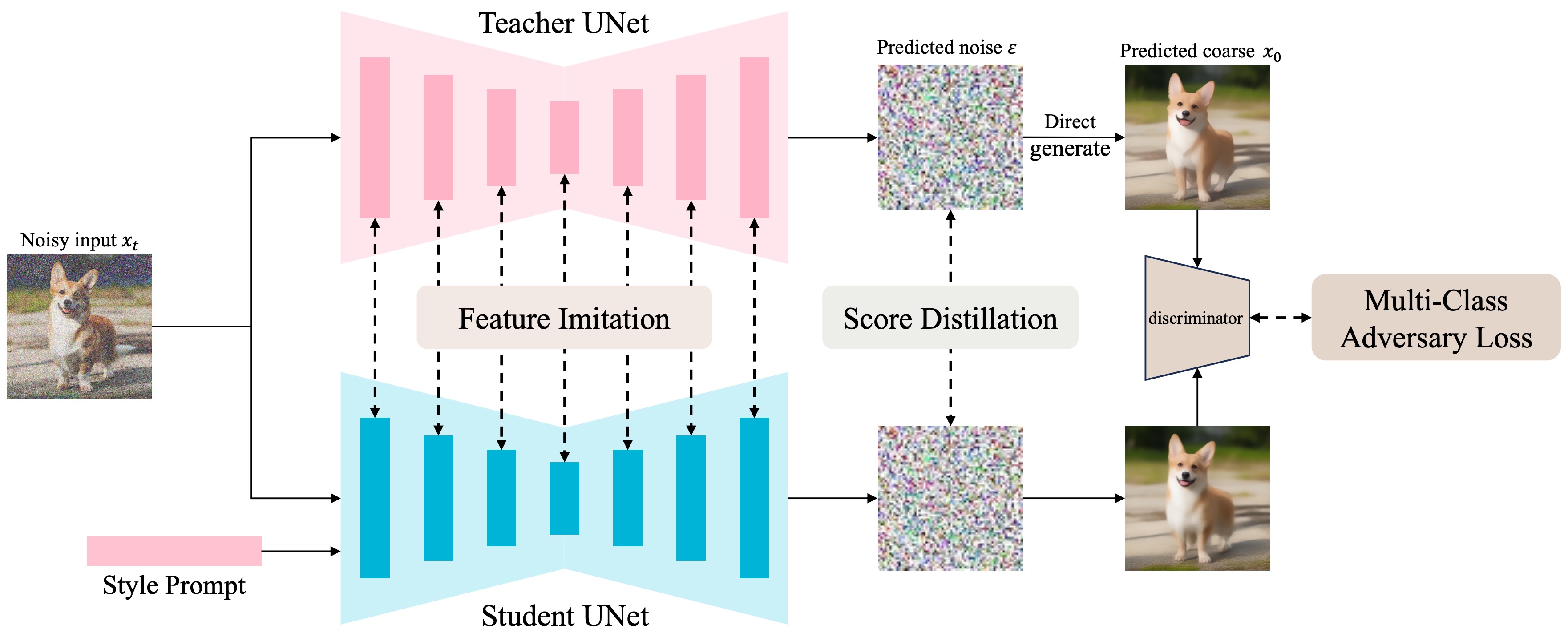

We propose DMM, a score-distillation-based model merging paradigm that compresses multiple models into a single versatile text-to-image (T2I) model.

This checkpoint merges pre-trained models from several domains, including realistic photography, Asian portraits, anime, and illustration. The source models are:

- JuggernautReborn
- MajicmixRealisticV7
- EpicRealismV5
- RealisticVisionV5
- MajicmixFantasyV3
- MinimalismV2
- RealCartoon3dV17
- AWPaintingV1.4
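The general idea of merging by distillation, where several teacher models are compressed into one student that is told which teacher to imitate, can be illustrated with a toy example. This is a sketch under loud assumptions, not DMM's training procedure: the teachers are hypothetical scalar linear functions standing in for the source diffusion models, the student is a shared linear map plus a hypothetical per-index offset standing in for per-model conditioning, and the loss is a plain squared error against the teacher's output rather than the paper's score-distillation objective.

```python
import random
from typing import Callable, List

# Hypothetical teachers: each maps a scalar input to a scalar prediction.
# They stand in for the source diffusion models purely for illustration.
TEACHERS: List[Callable[[float], float]] = [
    lambda x, a=a, b=b: a * x + b for a, b in [(1.0, 0.0), (-2.0, 1.0), (0.5, -1.0)]
]


def train_student(teachers, steps=5000, lr=0.05, seed=0):
    """Distill all teachers into one student conditioned on a teacher index k."""
    rng = random.Random(seed)
    n = len(teachers)
    w, c = 0.0, 0.0          # parameters shared by every index
    dw = [0.0] * n           # hypothetical per-index slope offset
    dc = [0.0] * n           # hypothetical per-index bias offset
    for _ in range(steps):
        k = rng.randrange(n)             # pick which teacher to imitate
        x = rng.uniform(-1.0, 1.0)       # sample an input
        target = teachers[k](x)          # teacher output is the distillation target
        pred = (w + dw[k]) * x + (c + dc[k])
        err = pred - target              # d/d(pred) of 0.5 * (pred - target) ** 2
        # SGD step on the shared and the index-specific parameters.
        w -= lr * err * x
        dw[k] -= lr * err * x
        c -= lr * err
        dc[k] -= lr * err

    def student(x: float, k: int) -> float:
        return (w + dw[k]) * x + (c + dc[k])

    return student


student = train_student(TEACHERS)
```

After training, `student(x, k)` closely tracks `TEACHERS[k](x)` for every index: one set of weights, with the index selecting which source behavior to reproduce.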

Visualization

Results

Results combined with character LoRA

Results of interpolation between two styles
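Interpolating between two styles can be thought of as blending two style conditions. The sketch below assumes, hypothetically, that each style is represented by an embedding vector and blends two of them linearly as (1 - t) * e_a + t * e_b; the function name and the example vectors are illustrative, and how the DMM pipeline actually exposes interpolation should be checked in the repository.

```python
from typing import List, Sequence


def lerp_embedding(e_a: Sequence[float], e_b: Sequence[float], t: float) -> List[float]:
    """Linearly interpolate between two (hypothetical) style embeddings."""
    if len(e_a) != len(e_b):
        raise ValueError("embeddings must have the same length")
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    return [(1.0 - t) * a + t * b for a, b in zip(e_a, e_b)]


# Sweep from style A (t = 0) to style B (t = 1) in five steps.
style_a = [1.0, 0.0, 2.0]
style_b = [0.0, 1.0, 4.0]
sweep = [lerp_embedding(style_a, style_b, i / 4) for i in range(5)]
```

Each intermediate vector would condition one generation, producing a gradual transition between the two styles.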

Online Demo

https://huggingface.co/spaces/MCG-NJU/DMM

Usage

Please refer to https://github.com/MCG-NJU/DMM for details. A minimal inference example:

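```python
import torch

# Pipeline class from the DMM repository (https://github.com/MCG-NJU/DMM)
from modeling.dmm_pipeline import StableDiffusionDMMPipeline

# Load the merged checkpoint in half precision and move it to the GPU.
pipe = StableDiffusionDMMPipeline.from_pretrained(
    "path/to/pipeline/checkpoint",
    torch_dtype=torch.float16,
    use_safetensors=True,
)
pipe = pipe.to("cuda")

# Select the model index, i.e. which merged source-model style to generate with.
model_id = 5

output = pipe(
    prompt="portrait photo of a girl, long golden hair, flowers, best quality",
    negative_prompt="worst quality,low quality,normal quality,lowres,watermark,nsfw",
    width=512,
    height=512,
    num_inference_steps=25,
    guidance_scale=7,
    model_id=model_id,
).images[0]

# Assumes the default PIL output type.
output.save("output.png")
```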
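Because one checkpoint covers several styles, a common next step is to render the same prompt once per model index. The helper below is a hypothetical convenience function, not part of the DMM repository; it only assumes that the pipeline accepts `prompt=` and `model_id=` keywords and returns an object with an `.images` list, as in the call above.

```python
from typing import Any, Callable, Dict, Iterable


def generate_per_style(
    pipe: Callable[..., Any],
    prompt: str,
    model_ids: Iterable[int],
    **kwargs: Any,
) -> Dict[int, Any]:
    """Run the same prompt for each model index and keep the first image of each run."""
    images: Dict[int, Any] = {}
    for mid in model_ids:
        result = pipe(prompt=prompt, model_id=mid, **kwargs)
        images[mid] = result.images[0]
    return images
```

Usage, assuming the indices run from 0 to 7 (one per listed source model; check the repository for the actual mapping): `generate_per_style(pipe, "portrait photo of a girl, long golden hair, flowers, best quality", range(8), width=512, height=512, num_inference_steps=25, guidance_scale=7)`. Passing the pipeline in as a callable keeps the loop independent of model loading, so it can be tested with a stand-in object.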