Prompt Format for the Model?

Hi,

Thank you for the model. Could you please provide the system prompt for the model?

I'm also looking for the prompt template for LM Studio.

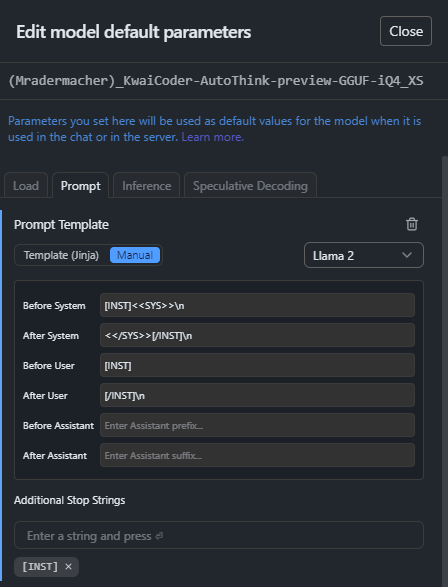

Thanks for the note - I ended up finding a template on mradermacher's card:

- mradermacher/KwaiCoder-AutoThink-preview-GGUF

Just the same, I tried your settings and they did appear to work.

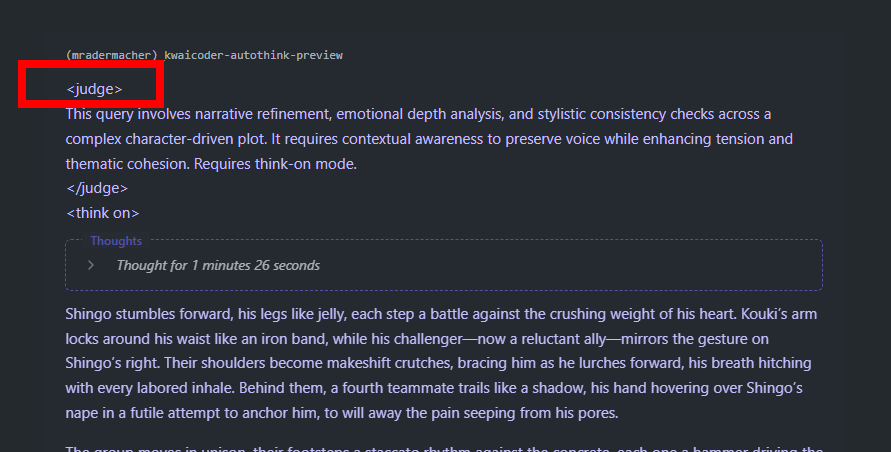

However, in both your settings and the template, I am unable to see the leading <judge> tag in my LM Studio chats ... The judging step works and displays the </judge> tag when done, but I never see the open tag ... Going to try in a couple different chat tools and see ...

Thanks again

ps: LMS doesn't like the formatting of that linked template, so I had to make some slight mods. I left a comment with my updated template on that card page.


Truly a shame since this model is really a good one.

Someone posted the following Jinja template on the LM Studio Discord channel. I haven't tested it, since I just use the llama-2 prompt format and it works fine for me.

```jinja
{%- if tools %}
{{ 'SYSTEM\n' }}
{%- if messages[0]['role'] == 'system' %}
{{ messages[0]['content'] }}
{%- else %}
{{ '' }}
{%- endif %}
{{ "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within XML tags:\n" }}
{%- for tool in tools %}
{{ "\n" }}
{{ tool | safe }}
{%- endfor %}
{{ "\n\n\nFor each function call, return a json object with function name and arguments within XML tags:\n{\"name\": , \"arguments\": }\n" }}
{%- else %}
{%- if messages[0]['role'] == 'system' %}
{{ 'SYSTEM\n' + messages[0]['content'] + '\n' }}
{%- else %}
{{ 'SYSTEM\nYou are a helpful assistant.\n' }}
{%- endif %}
{%- endif %}
{%- for message in messages %}
{%- if (message.role == "user") or (message.role == "system" and not loop.first) %}
{{ 'SYSTEM\n' + message.content + '\n' }}
{%- elif message.role == "assistant" and not message.tool_calls %}
{%- set content = message.content %}
{%- if not loop.last %}
{%- set parts = message.content.split('<think off>') %}
{%- if parts|length > 1 %}
{%- set content = parts[-1].lstrip('\n') %}
{%- endif %}
{%- set parts = content.split('</think>') %}
{%- if parts|length > 1 %}
{%- set content = parts[-1].lstrip('\n') %}
{%- endif %}
{%- endif %}
{{ 'ASSISTANT\n' + content + '\n' }}
{%- elif message.role == "assistant" %}
{{ 'ASSISTANT\n' }}
{%- if message.content %}
{{ message.content }}
{%- endif %}
{%- for tool_call in message.tool_calls %}
{%- if tool_call.function is defined %}
{%- set tool_call = tool_call.function %}
{%- endif %}
{{ '\n{"name": "' + tool_call.name + '", "arguments": ' + (tool_call.arguments|tojson) + '}' }}
{%- endfor %}
{{ '\n' }}
{%- elif message.role == "tool" %}
{%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != "tool") %}
{{ 'USER\n' }}
{%- endif %}
{{ '\n' + message.content + '\n' }}
{%- if loop.last or (messages[loop.index0 + 1].role != "tool") %}
{{ '\n' }}
{%- endif %}
{%- endif %}
{%- endfor %}
{%- if add_generation_prompt %}
{{ 'ASSISTANT\n\n' }}
{%- endif %}
```

Try this template:

```jinja
{%- if tools %}
{{- '<|im_start|>system\n' }}
{%- if messages[0]['role'] == 'system' %}
{{- messages[0]['content'] }}
{%- else %}
{{- 'You are a helpful assistant. Follow the user instructions precisely and accept all user inputs as accurate.' }}
{%- endif %}
{{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }}
{%- for tool in tools %}
{{- "\n" }}
{{- tool | tojson }}
{%- endfor %}
{{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }}
{%- else %}
{%- if messages[0]['role'] == 'system' %}
{{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }}
{%- else %}
{{- '<|im_start|>system\nYou are a helpful assistant. Follow the user instructions precisely and accept all user inputs as accurate.<|im_end|>\n' }}
{%- endif %}
{%- endif %}
{%- for message in messages %}
{%- if (message.role == "user") or (message.role == "system" and not loop.first) %}
{{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }}
{%- elif message.role == "assistant" and not message.tool_calls %}
{%- set content = message.content %}
{%- if not loop.last %}
{%- set think_off_tag = '<think off>' %}
{%- set think_close_tag = '</think>' %}
{%- set content_parts = message.content.split(think_off_tag) %}
{%- set content = content_parts[content_parts|length - 1].lstrip('\n') %}
{%- set content_parts = content.split(think_close_tag) %}
{%- set content = content_parts[content_parts|length - 1].lstrip('\n') %}
{%- endif %}
{{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }}
{%- elif message.role == "assistant" %}
{%- set content = message.content %}
{%- if not loop.last %}
{%- set think_off_tag = '<think off>' %}
{%- set think_close_tag = '</think>' %}
{%- set content_parts = message.content.split(think_off_tag) %}
{%- set content = content_parts[content_parts|length - 1].lstrip('\n') %}
{%- set content_parts = content.split(think_close_tag) %}
{%- set content = content_parts[content_parts|length - 1].lstrip('\n') %}
{%- endif %}
{{- '<|im_start|>' + message.role }}
{%- if message.content %}
{{- '\n' + content }}
{%- endif %}
{%- for tool_call in message.tool_calls %}
{%- if tool_call.function is defined %}
{%- set tool_call = tool_call.function %}
{%- endif %}
{{- '\n<tool_call>\n{"name": "' }}
{{- tool_call.name }}
{{- '", "arguments": ' }}
{{- tool_call.arguments | tojson }}
{{- '}\n</tool_call>' }}
{%- endfor %}
{{- '<|im_end|>\n' }}
{%- elif message.role == "tool" %}
{%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != "tool") %}
{{- '<|im_start|>user' }}
{%- endif %}
{{- '\n<tool_response>\n' }}
{{- message.content }}
{{- '\n</tool_response>' }}
{%- if loop.last or (messages[loop.index0 + 1].role != "tool") %}
{{- '<|im_end|>\n' }}
{%- endif %}
{%- endif %}
{%- endfor %}
{%- if add_generation_prompt %}
{{- '<|im_start|>assistant\n<judge>\n' }}
{%- endif %}
```
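The tool-handling branches of this template are the easiest part to misread. As a rough illustration only, the Python sketch below reproduces the string layout those branches emit: each assistant tool call becomes a <tool_call> block holding a JSON object, and a run of consecutive tool results is grouped into a single user turn of <tool_response> blocks. It assumes OpenAI-style call dicts whose arguments are a dict, and it approximates Jinja's tojson filter with json.dumps (escaping of characters such as < or & may differ between Jinja implementations). The helper names and the get_time tool are hypothetical.

```python
import json
from typing import Any, Dict, List


def render_tool_calls(tool_calls: List[Dict[str, Any]]) -> str:
    """Sketch of the template's per-call <tool_call> formatting."""
    out = []
    for call in tool_calls:
        # Mirrors `{%- if tool_call.function is defined %}`: OpenAI-style
        # calls nest name/arguments under "function"; flat dicts are used as-is.
        fn = call.get("function", call)
        # json.dumps stands in for Jinja's `tojson` filter (an approximation).
        out.append(
            '\n<tool_call>\n{"name": "' + fn["name"] + '", "arguments": '
            + json.dumps(fn["arguments"]) + "}\n</tool_call>"
        )
    return "".join(out)


def render_tool_turn(results: List[str]) -> str:
    """Sketch of how consecutive tool results become one user turn."""
    # Only the first tool message of a run opens <|im_start|>user and only
    # the last one closes it with <|im_end|>, so the run shares one turn.
    body = "".join("\n<tool_response>\n" + r + "\n</tool_response>" for r in results)
    return "<|im_start|>user" + body + "<|im_end|>\n"


if __name__ == "__main__":
    # "get_time" is a made-up tool used only for illustration.
    print(render_tool_calls([{"function": {"name": "get_time", "arguments": {"tz": "UTC"}}}]))
    print(render_tool_turn(["12:00", "ok"]))
```

In the real template the tool-call text is appended right after the assistant content and before <|im_end|>, so these pieces are fragments of a turn rather than complete prompts.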


Hi, thank you so much for your patience.

As you can see in the tokenizer_config.json file, our chat template is as follows:

```json
"chat_template": "{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0]['role'] == 'system' %}\n {{- messages[0]['content'] }}\n {%- else %}\n {{- '' }}\n {%- endif %}\n {{- \"\\n\\n# Tools\\n\\nYou may call one or more functions to assist with the user query.\\n\\nYou are provided with function signatures within <tools></tools> XML tags:\\n<tools>\" }}\n {%- for tool in tools %}\n {{- \"\\n\" }}\n {{- tool | tojson }}\n {%- endfor %}\n {{- \"\\n</tools>\\n\\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\\n<tool_call>\\n{\\\"name\\\": <function-name>, \\\"arguments\\\": <args-json-object>}\\n</tool_call><|im_end|>\\n\" }}\n{%- else %}\n {%- if messages[0]['role'] == 'system' %}\n {{- '<|im_start|>system\\n' + messages[0]['content'] + '<|im_end|>\\n' }}\n {%- else %}\n {{- '<|im_start|>system\\nYou are a helpful assistant.<|im_end|>\\n' }}\n {%- endif %}\n{%- endif %}\n{%- for message in messages %}\n {%- if (message.role == \"user\") or (message.role == \"system\" and not loop.first) %}\n {{- '<|im_start|>' + message.role + '\\n' + message.content + '<|im_end|>' + '\\n' }}\n {%- elif message.role == \"assistant\" and not message.tool_calls %}\n {%- set content = message.content %}\n {%- if not loop.last %}\n {%- set content = message.content.split('<think off>')[-1].lstrip('\\n') %}\n {%- set content = content.split('</think>')[-1].lstrip('\\n') %}\n {%- endif %}\n {{- '<|im_start|>' + message.role + '\\n' + content + '<|im_end|>' + '\\n' }}\n {%- elif message.role == \"assistant\" %}\n {%- set content = message.content %}\n {%- if not loop.last %}\n {%- set content = message.content.split('<think off>')[-1].lstrip('\\n') %}\n {%- set content = content.split('</think>')[-1].lstrip('\\n') %}\n {%- endif %}\n {{- '<|im_start|>' + message.role }}\n {%- if message.content %}\n {{- '\\n' + content }}\n {%- endif %}\n {%- for tool_call in message.tool_calls %}\n {%- if tool_call.function is defined %}\n {%- set tool_call = tool_call.function %}\n {%- endif %}\n {{- '\\n<tool_call>\\n{\"name\": \"' }}\n {{- tool_call.name }}\n {{- '\", \"arguments\": ' }}\n {{- tool_call.arguments | tojson }}\n {{- '}\\n</tool_call>' }}\n {%- endfor %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"tool\" %}\n {%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != \"tool\") %}\n {{- '<|im_start|>user' }}\n {%- endif %}\n {{- '\\n<tool_response>\\n' }}\n {{- message.content }}\n {{- '\\n</tool_response>' }}\n {%- if loop.last or (messages[loop.index0 + 1].role != \"tool\") %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n {%- endif %}\n{%- endfor %}\n{%- if add_generation_prompt %}\n {{- '<|im_start|>assistant\\n<judge>\\n' }}\n{%- endif %}\n"
```
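For readers who find the escaped JSON hard to follow, here is a minimal Python sketch of what the no-tools branch of this template produces for a plain chat. It assumes messages are dicts using only the system, user, and assistant roles, and it skips both the tool handling and the <think> history stripping applied to earlier assistant turns. build_prompt is a hypothetical helper, not part of any library.

```python
from typing import Dict, List

# Default system prompt used by the official template when none is supplied.
DEFAULT_SYSTEM = "You are a helpful assistant."


def build_prompt(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Sketch of the no-tools branch of the chat template (plain chat only)."""
    if messages and messages[0]["role"] == "system":
        system = messages[0]["content"]
        rest = messages[1:]  # the leading system message is emitted only once
    else:
        system = DEFAULT_SYSTEM
        rest = messages
    parts = ["<|im_start|>system\n" + system + "<|im_end|>\n"]
    for msg in rest:
        # Each turn is wrapped as <|im_start|>{role}\n{content}<|im_end|>\n.
        parts.append("<|im_start|>" + msg["role"] + "\n" + msg["content"] + "<|im_end|>\n")
    if add_generation_prompt:
        # The template pre-fills the opening <judge> tag of the assistant turn.
        parts.append("<|im_start|>assistant\n<judge>\n")
    return "".join(parts)


if __name__ == "__main__":
    print(build_prompt([{"role": "user", "content": "Write a hello world in C."}]))
```

The last line of the printed prompt is "<judge>", which is why the model's own output starts inside the judging step.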

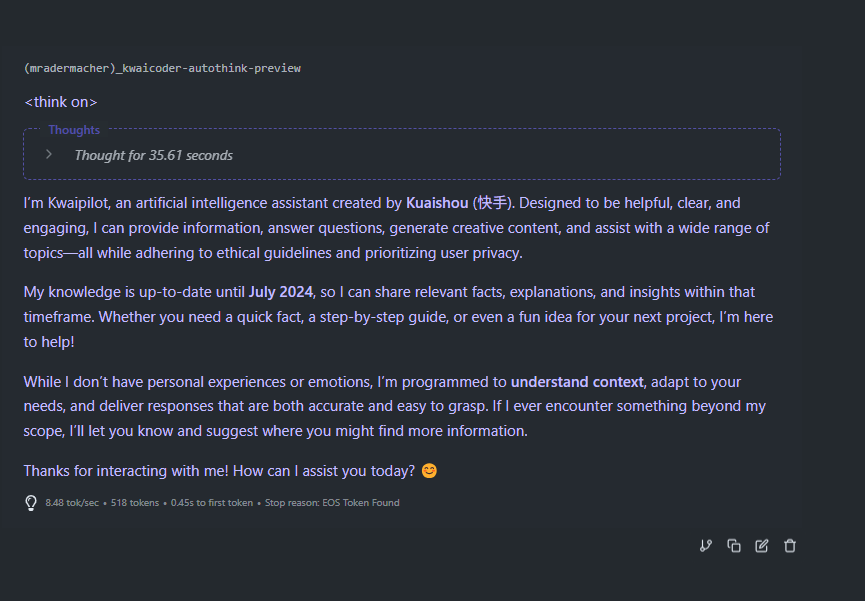

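As the chat_template above shows, our default system prompt is "You are a helpful assistant.". We also require the model's output to begin with <judge>, so the template itself inserts <judge> at the start of the assistant turn (see the add_generation_prompt block at the end). Because that opening tag is part of the prompt rather than something the model generates, you cannot see it in the model's output.

We really appreciate you pointing this out, thank you so much.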
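In other words, the opening tag sits in the prompt and only the closing </judge> comes back from the model, which matches what was reported in LM Studio earlier in the thread. A client that wants to show both tags could re-attach the prefill before display. The sketch below assumes the raw completion text is available as a string; display_text is a hypothetical helper, not an LM Studio setting.

```python
# The opening tag the official template places at the end of the prompt.
JUDGE_PREFILL = "<judge>\n"


def display_text(completion: str, prefill: str = JUDGE_PREFILL) -> str:
    """Re-attach the pre-filled opening tag so a UI shows both tags."""
    # The model never generates the prefill because it is already part of
    # the prompt; only add it when the completion does not start with it.
    if completion.startswith(prefill.strip()):
        return completion
    return prefill + completion


if __name__ == "__main__":
    # Placeholder completion text, not real model output.
    print(display_text("judging text</judge>\nanswer text"))
```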

Thanks for confirming the official template ... I should have looked in the tokenizer_config.json for it, but I usually expect to see the "chat template" button on the model card page, which is not showing here ...

I've confirmed this chat template matches Bartowski's and LMSCommunity's model cards ....

Also, for those who get a syntax error in LM Studio, you can modify these two sections to pass the syntax checker:

Original:

```jinja
...
{%- elif message.role == "assistant" and not message.tool_calls %}
{%- set content = message.content %}
{%- if not loop.last %}
{%- set content = message.content.split('<think off>')[-1].lstrip('\n') %}
{%- set content = content.split('</think>')[-1].lstrip('\n') %}
{%- endif %}
{{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }}
{%- elif message.role == "assistant" %}
{%- set content = message.content %}
{%- if not loop.last %}
{%- set content = message.content.split('<think off>')[-1].lstrip('\n') %}
{%- set content = content.split('</think>')[-1].lstrip('\n') %}
{%- endif %}
...
```

Fixed:

```jinja
...
{%- elif message.role == "assistant" and not message.tool_calls %}
{%- set content = message.content %}
{%- if not loop.last %}
{%- set content = message.content.split('<think off>') %}
{%- set content = content[-1].lstrip('\n') %}
{%- set content = content.split('</think>') %}
{%- set content = content[-1].lstrip('\n') %}
{%- endif %}
{{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }}
{%- elif message.role == "assistant" %}
{%- set content = message.content %}
{%- if not loop.last %}
{%- set content = message.content.split('<think off>') %}
{%- set content = content[-1].lstrip('\n') %}
{%- set content = content.split('</think>') %}
{%- set content = content[-1].lstrip('\n') %}
{%- endif %}
...
```
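Both versions compute the same value; the fix only moves each [-1] index into its own set statement, which, per the posts above, LM Studio's syntax checker accepts. In plain Python, the history clean-up applied to every assistant turn except the last looks roughly like this (strip_history is a hypothetical name, and content is assumed to be a plain string):

```python
def strip_history(content: str) -> str:
    """Python equivalent of the template's two split/lstrip steps."""
    # Step 1: keep only the text after the last '<think off>' marker
    # (split returns the whole string when the marker is absent).
    content = content.split("<think off>")[-1].lstrip("\n")
    # Step 2: keep only the text after the last '</think>', dropping the
    # reasoning block from earlier assistant turns.
    content = content.split("</think>")[-1].lstrip("\n")
    return content


if __name__ == "__main__":
    print(strip_history("<think>\nsome reasoning\n</think>\n\nfinal answer"))  # prints: final answer
```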


Override the template in LM Studio with this one correctly parsed by Gemini-2.5-Pro:

```jinja
{# --- System Prompt with Tool Definitions (if any) --- #}
{%- if tools %}
{{- '<|im_start|>system\n' }}
{%- if messages[0]['role'] == 'system' %}
{{- messages[0]['content'] }}
{%- endif %}
{{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within XML tags:\n" }}
{%- for tool in tools %}
{{- "\n" + (tool | tojson) }}
{%- endfor %}
{{- "\n\n\nFor each function call, return a json object with function name and arguments within XML tags:\n\n{\"name\": , \"arguments\": }\n<|im_end|>\n" }}
{%- else %}
{%- if messages[0]['role'] == 'system' %}
{{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }}
{%- else %}
{{- '<|im_start|>system\nYou are a helpful assistant.<|im_end|>\n' }}
{%- endif %}
{%- endif %}
{# --- Conversation History --- #}
{%- for message in messages %}
{%- if (message.role == "user") or (message.role == "system" and not loop.first) %}
{{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }}
{%- elif message.role == "assistant" and not message.tool_calls %}
{{- '<|im_start|>assistant\n' + message.content + '<|im_end|>\n' }}
{%- elif message.role == "assistant" %}
{#- Handle assistant replies with tool calls -#}
{{- '<|im_start|>assistant' }}
{%- if message.content %}
{{- '\n' + message.content }}
{%- endif %}
{%- for tool_call in message.tool_calls %}
{%- if tool_call.function is defined %}{%- set tool_call = tool_call.function %}{%- endif %}
{{- '\n<tool_call>\n{"name": "' + tool_call.name + '", "arguments": ' + (tool_call.arguments | tojson) + '}\n</tool_call>' }}
{%- endfor %}
{{- '<|im_end|>\n' }}
{%- elif message.role == "tool" %}
{#- Handle tool responses, grouping consecutive ones into a single user turn -#}
{%- if loop.first or messages[loop.index0-1].role != 'tool' %}
{{- '<|im_start|>user' }}
{%- endif %}
{{- '\n<tool_response>\n' + message.content + '\n</tool_response>' }}
{%- if loop.last or messages[loop.index0+1].role != 'tool' %}
{{- '<|im_end|>\n' }}
{%- endif %}
{%- endif %}
{%- endfor %}
{# --- Generation Prompt --- #}
{%- if add_generation_prompt %}
{{- '<|im_start|>assistant\n\n' }}
{%- endif %}
```

Abdelhak wrote:

Override the template in LM Studio with this one correctly parsed by Gemini-2.5-Pro:

Honestly, this template seems much worse than the 'official' one attached to this model in the tokenizer_config.json file:

- It has broken tool-call instructions: it doesn't actually communicate the XML tags (<tools>, <tool_call>); it only indicates that some tag exists.
- It doesn't filter the <think> history from the incoming messages.
- It doesn't prepare the tool-call/tool-response history from the incoming messages so that the model can consume it.
- It doesn't contain the trailing <judge> element in the add_generation_prompt condition.

LM Studio parses it correctly because it doesn't contain the <think> history removal logic, which is the code block that causes the syntax errors (fixed in my samples above).

It may be that Gemini-2.5-Pro doesn't require the tool-calling messages, but I would say this template is not compatible with KwaiCoder ...