Add model files

Browse files- .gitattributes +6 -0

- README.md +469 -3

- added_tokens.json +34 -0

- asset/architecture.png +3 -0

- asset/keye_logo_2.png +3 -0

- asset/post1.jpeg +0 -0

- asset/post2.jpeg +3 -0

- asset/pre-train.png +3 -0

- asset/teaser.png +3 -0

- chat_template.json +3 -0

- config.json +72 -0

- configuration_keye.py +243 -0

- generation_config.json +13 -0

- image_processing_keye.py +570 -0

- merges.txt +0 -0

- model-00001-of-00004.safetensors +3 -0

- model-00002-of-00004.safetensors +3 -0

- model-00003-of-00004.safetensors +3 -0

- model-00004-of-00004.safetensors +3 -0

- model.safetensors.index.json +861 -0

- modeling_keye.py +0 -0

- preprocessor_config.json +18 -0

- processing_keye.py +298 -0

- processor_config.json +6 -0

- special_tokens_map.json +25 -0

- tokenizer.json +3 -0

- tokenizer_config.json +282 -0

- vocab.json +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,9 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

asset/architecture.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

asset/keye_logo_2.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

asset/post2.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

asset/pre-train.png filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

asset/teaser.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

README.md

CHANGED

|

@@ -1,3 +1,469 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

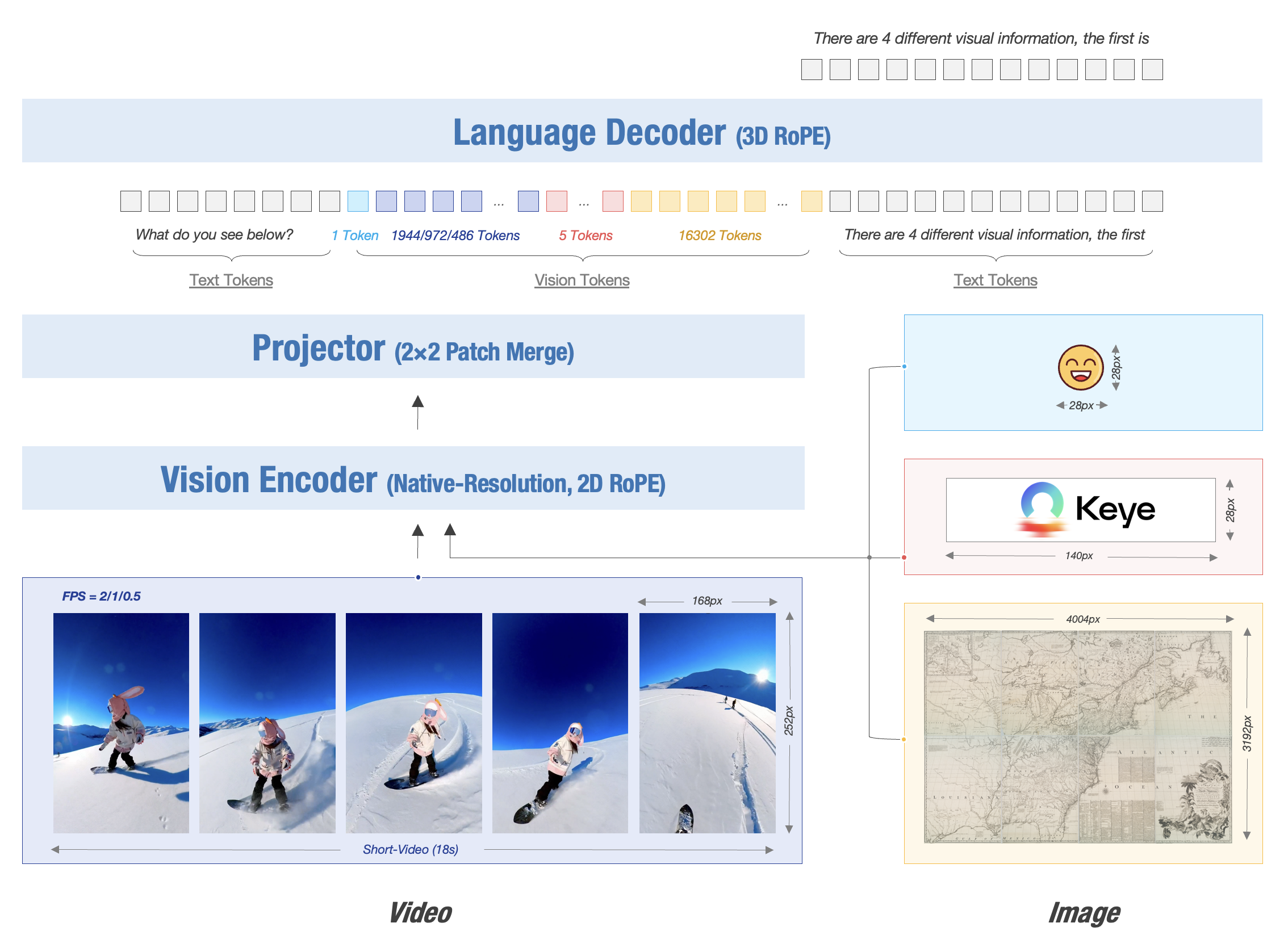

|

|

|

|

|

|

|

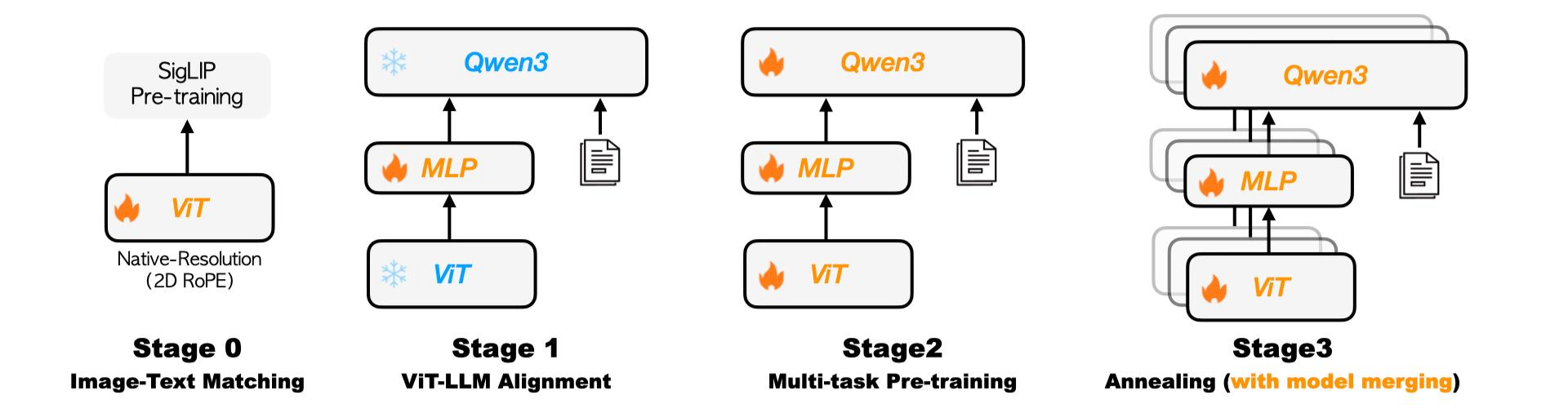

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

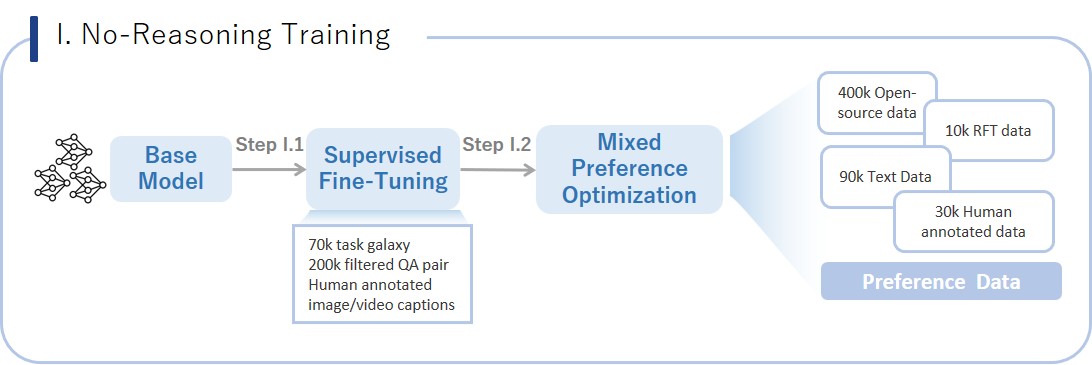

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

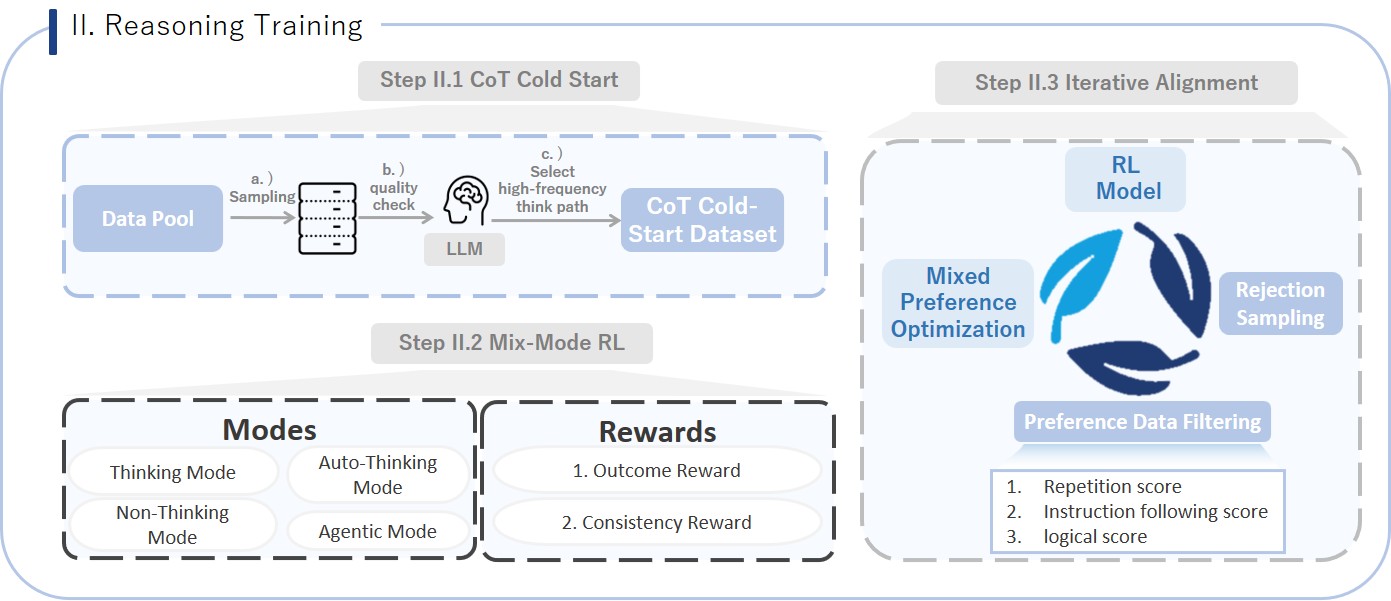

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

---

|

| 3 |

+

license: apache-2.0

|

| 4 |

+

language:

|

| 5 |

+

- en

|

| 6 |

+

pipeline_tag: image-text-to-text

|

| 7 |

+

tags:

|

| 8 |

+

- multimodal

|

| 9 |

+

library_name: transformers

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

# Kwai Keye-VL

|

| 13 |

+

|

| 14 |

+

<div align="center">

|

| 15 |

+

<img src="asset/keye_logo_2.png" width="100%" alt="Kwai Keye-VL Logo">

|

| 16 |

+

</div>

|

| 17 |

+

|

| 18 |

+

<font size=3><div align='center' > [[🍎 Home Page](https://kwai-keye.github.io/)] [[📖 Technical Report]()] [[📊 Models](https://huggingface.co/Kwai-Keye)] </div></font>

|

| 19 |

+

|

| 20 |

+

## 🔥 News

|

| 21 |

+

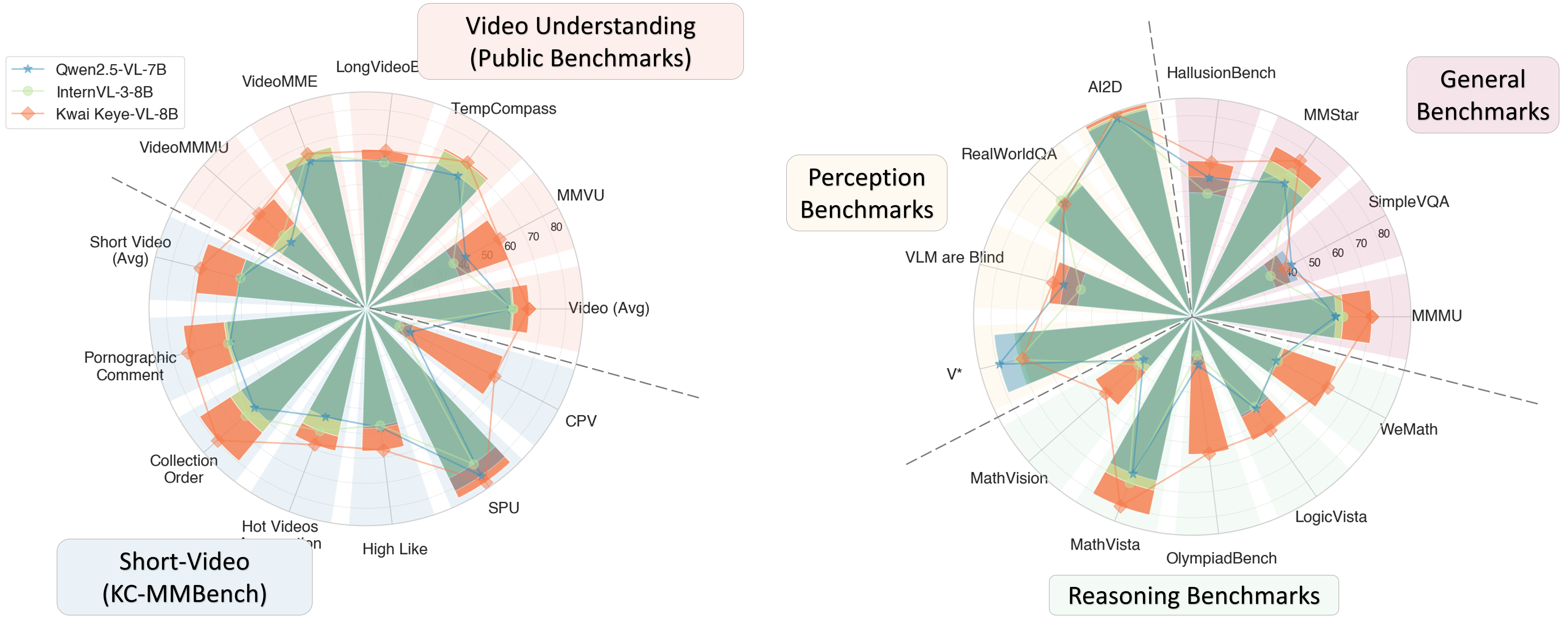

* **`2025.06.26`** 🌟 We are very proud to launch **Kwai Keye-VL**, a cutting-edge multimodal large language model meticulously crafted by the **Kwai Keye Team** at [Kuaishou](https://www.kuaishou.com/). As a cornerstone AI product within Kuaishou's advanced technology ecosystem, Keye excels in video understanding, visual perception, and reasoning tasks, setting new benchmarks in performance. Our team is working tirelessly to push the boundaries of what's possible, so stay tuned for more exciting updates!

|

| 22 |

+

|

| 23 |

+

<div align="center">

|

| 24 |

+

<img src="asset/teaser.png" width="100%" alt="Kwai Keye-VL Performance">

|

| 25 |

+

</div>

|

| 26 |

+

|

| 27 |

+

## Quickstart

|

| 28 |

+

|

| 29 |

+

Below, we provide simple examples to show how to use Kwai Keye-VL with 🤗 Transformers.

|

| 30 |

+

|

| 31 |

+

The code of Kwai Keye-VL has been in the latest Hugging face transformers and we advise you to build from source with command:

|

| 32 |

+

```

|

| 33 |

+

pip install git+https://github.com/huggingface/transformers accelerate

|

| 34 |

+

```

|

| 35 |

+

or you might encounter the following error:

|

| 36 |

+

```

|

| 37 |

+

KeyError: 'Keye-VL'

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

We offer a toolkit to help you handle various types of visual input more conveniently, as if you were using an API. This includes base64, URLs, and interleaved images and videos. You can install it using the following command:

|

| 42 |

+

|

| 43 |

+

```bash

|

| 44 |

+

# It's highly recommanded to use `[decord]` feature for faster video loading.

|

| 45 |

+

pip install keye-vl-utils[decord]==1.0.0

|

| 46 |

+

```

|

| 47 |

+

|

| 48 |

+

If you are not using Linux, you might not be able to install `decord` from PyPI. In that case, you can use `pip install keye-vl-utils` which will fall back to using torchvision for video processing. However, you can still [install decord from source](https://github.com/dmlc/decord?tab=readme-ov-file#install-from-source) to get decord used when loading video.

|

| 49 |

+

|

| 50 |

+

### Using 🤗 Transformers to Chat

|

| 51 |

+

|

| 52 |

+

Here we show a code snippet to show you how to use the chat model with `transformers` and `keye_vl_utils`:

|

| 53 |

+

|

| 54 |

+

```python

|

| 55 |

+

from transformers import AutoModel, AutoTokenizer, AutoProcessor

|

| 56 |

+

from keye_vl_utils import process_vision_info

|

| 57 |

+

|

| 58 |

+

# default: Load the model on the available device(s)

|

| 59 |

+

model_path = "Kwai-Keye/Keye-VL-8B-Preview"

|

| 60 |

+

|

| 61 |

+

model = AutoModel.from_pretrained(

|

| 62 |

+

model_path, torch_dtype="auto", device_map="auto", attn_implementation="flash_attention_2", trust_remote_code=True,

|

| 63 |

+

).to('cuda')

|

| 64 |

+

|

| 65 |

+

# We recommend enabling flash_attention_2 for better acceleration and memory saving, especially in multi-image and video scenarios.

|

| 66 |

+

# model = KeyeForConditionalGeneration.from_pretrained(

|

| 67 |

+

# "Kwai-Keye/Keye-VL-8B-Preview",

|

| 68 |

+

# torch_dtype=torch.bfloat16,

|

| 69 |

+

# attn_implementation="flash_attention_2",

|

| 70 |

+

# device_map="auto",

|

| 71 |

+

# )

|

| 72 |

+

|

| 73 |

+

# default processer

|

| 74 |

+

processor = AutoProcessor.from_pretrained(model_path, trust_remote_code=True)

|

| 75 |

+

|

| 76 |

+

# The default range for the number of visual tokens per image in the model is 4-16384.

|

| 77 |

+

# You can set min_pixels and max_pixels according to your needs, such as a token range of 256-1280, to balance performance and cost.

|

| 78 |

+

# min_pixels = 256*28*28

|

| 79 |

+

# max_pixels = 1280*28*28

|

| 80 |

+

# processor = AutoProcessor.from_pretrained(model_pat, min_pixels=min_pixels, max_pixels=max_pixels, trust_remote_code=True)

|

| 81 |

+

|

| 82 |

+

messages = [

|

| 83 |

+

{

|

| 84 |

+

"role": "user",

|

| 85 |

+

"content": [

|

| 86 |

+

{

|

| 87 |

+

"type": "image",

|

| 88 |

+

"image": "https://s1-11508.kwimgs.com/kos/nlav11508/mllm_all/ziran_jiafeimao_11.jpg",

|

| 89 |

+

},

|

| 90 |

+

{"type": "text", "text": "Describe this image."},

|

| 91 |

+

],

|

| 92 |

+

}

|

| 93 |

+

]

|

| 94 |

+

|

| 95 |

+

# Preparation for inference

|

| 96 |

+

text = processor.apply_chat_template(

|

| 97 |

+

messages, tokenize=False, add_generation_prompt=True

|

| 98 |

+

)

|

| 99 |

+

image_inputs, video_inputs = process_vision_info(messages)

|

| 100 |

+

inputs = processor(

|

| 101 |

+

text=[text],

|

| 102 |

+

images=image_inputs,

|

| 103 |

+

videos=video_inputs,

|

| 104 |

+

padding=True,

|

| 105 |

+

return_tensors="pt",

|

| 106 |

+

)

|

| 107 |

+

inputs = inputs.to("cuda")

|

| 108 |

+

|

| 109 |

+

# Inference: Generation of the output

|

| 110 |

+

generated_ids = model.generate(**inputs, max_new_tokens=128)

|

| 111 |

+

generated_ids_trimmed = [

|

| 112 |

+

out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)

|

| 113 |

+

]

|

| 114 |

+

output_text = processor.batch_decode(

|

| 115 |

+

generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False

|

| 116 |

+

)

|

| 117 |

+

print(output_text)

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

</details>

|

| 121 |

+

|

| 122 |

+

<details>

|

| 123 |

+

<summary>Video inference</summary>

|

| 124 |

+

|

| 125 |

+

```python

|

| 126 |

+

# Messages containing a images list as a video and a text query

|

| 127 |

+

messages = [

|

| 128 |

+

{

|

| 129 |

+

"role": "user",

|

| 130 |

+

"content": [

|

| 131 |

+

{

|

| 132 |

+

"type": "video",

|

| 133 |

+

"video": [

|

| 134 |

+

"file:///path/to/frame1.jpg",

|

| 135 |

+

"file:///path/to/frame2.jpg",

|

| 136 |

+

"file:///path/to/frame3.jpg",

|

| 137 |

+

"file:///path/to/frame4.jpg",

|

| 138 |

+

],

|

| 139 |

+

},

|

| 140 |

+

{"type": "text", "text": "Describe this video."},

|

| 141 |

+

],

|

| 142 |

+

}

|

| 143 |

+

]

|

| 144 |

+

|

| 145 |

+

# Messages containing a local video path and a text query

|

| 146 |

+

messages = [

|

| 147 |

+

{

|

| 148 |

+

"role": "user",

|

| 149 |

+

"content": [

|

| 150 |

+

{

|

| 151 |

+

"type": "video",

|

| 152 |

+

"video": "file:///path/to/video1.mp4",

|

| 153 |

+

"max_pixels": 360 * 420,

|

| 154 |

+

"fps": 1.0,

|

| 155 |

+

},

|

| 156 |

+

{"type": "text", "text": "Describe this video."},

|

| 157 |

+

],

|

| 158 |

+

}

|

| 159 |

+

]

|

| 160 |

+

|

| 161 |

+

# Messages containing a video url and a text query

|

| 162 |

+

messages = [

|

| 163 |

+

{

|

| 164 |

+

"role": "user",

|

| 165 |

+

"content": [

|

| 166 |

+

{

|

| 167 |

+

"type": "video",

|

| 168 |

+

"video": "http://s2-11508.kwimgs.com/kos/nlav11508/MLLM/videos_caption/98312843263.mp4",

|

| 169 |

+

},

|

| 170 |

+

{"type": "text", "text": "Describe this video."},

|

| 171 |

+

],

|

| 172 |

+

}

|

| 173 |

+

]

|

| 174 |

+

|

| 175 |

+

#In Keye-VL, frame rate information is also input into the model to align with absolute time.

|

| 176 |

+

# Preparation for inference

|

| 177 |

+

text = processor.apply_chat_template(

|

| 178 |

+

messages, tokenize=False, add_generation_prompt=True

|

| 179 |

+

)

|

| 180 |

+

image_inputs, video_inputs, video_kwargs = process_vision_info(messages, return_video_kwargs=True)

|

| 181 |

+

inputs = processor(

|

| 182 |

+

text=[text],

|

| 183 |

+

images=image_inputs,

|

| 184 |

+

videos=video_inputs,

|

| 185 |

+

padding=True,

|

| 186 |

+

return_tensors="pt",

|

| 187 |

+

**video_kwargs,

|

| 188 |

+

)

|

| 189 |

+

inputs = inputs.to("cuda")

|

| 190 |

+

|

| 191 |

+

# Inference

|

| 192 |

+

generated_ids = model.generate(**inputs, max_new_tokens=128)

|

| 193 |

+

generated_ids_trimmed = [

|

| 194 |

+

out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)

|

| 195 |

+

]

|

| 196 |

+

output_text = processor.batch_decode(

|

| 197 |

+

generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False

|

| 198 |

+

)

|

| 199 |

+

print(output_text)

|

| 200 |

+

```

|

| 201 |

+

|

| 202 |

+

Video URL compatibility largely depends on the third-party library version. The details are in the table below. change the backend by `FORCE_KEYEVL_VIDEO_READER=torchvision` or `FORCE_KEYEVL_VIDEO_READER=decord` if you prefer not to use the default one.

|

| 203 |

+

|

| 204 |

+

| Backend | HTTP | HTTPS |

|

| 205 |

+

|-------------|------|-------|

|

| 206 |

+

| torchvision >= 0.19.0 | ✅ | ✅ |

|

| 207 |

+

| torchvision < 0.19.0 | ❌ | ❌ |

|

| 208 |

+

| decord | ✅ | ❌ |

|

| 209 |

+

</details>

|

| 210 |

+

|

| 211 |

+

<details>

|

| 212 |

+

<summary>Batch inference</summary>

|

| 213 |

+

|

| 214 |

+

```python

|

| 215 |

+

# Sample messages for batch inference

|

| 216 |

+

messages1 = [

|

| 217 |

+

{

|

| 218 |

+

"role": "user",

|

| 219 |

+

"content": [

|

| 220 |

+

{"type": "image", "image": "file:///path/to/image1.jpg"},

|

| 221 |

+

{"type": "image", "image": "file:///path/to/image2.jpg"},

|

| 222 |

+

{"type": "text", "text": "What are the common elements in these pictures?"},

|

| 223 |

+

],

|

| 224 |

+

}

|

| 225 |

+

]

|

| 226 |

+

messages2 = [

|

| 227 |

+

{"role": "system", "content": "You are a helpful assistant."},

|

| 228 |

+

{"role": "user", "content": "Who are you?"},

|

| 229 |

+

]

|

| 230 |

+

# Combine messages for batch processing

|

| 231 |

+

messages = [messages1, messages2]

|

| 232 |

+

|

| 233 |

+

# Preparation for batch inference

|

| 234 |

+

texts = [

|

| 235 |

+

processor.apply_chat_template(msg, tokenize=False, add_generation_prompt=True)

|

| 236 |

+

for msg in messages

|

| 237 |

+

]

|

| 238 |

+

image_inputs, video_inputs = process_vision_info(messages)

|

| 239 |

+

inputs = processor(

|

| 240 |

+

text=texts,

|

| 241 |

+

images=image_inputs,

|

| 242 |

+

videos=video_inputs,

|

| 243 |

+

padding=True,

|

| 244 |

+

return_tensors="pt",

|

| 245 |

+

)

|

| 246 |

+

inputs = inputs.to("cuda")

|

| 247 |

+

|

| 248 |

+

# Batch Inference

|

| 249 |

+

generated_ids = model.generate(**inputs, max_new_tokens=128)

|

| 250 |

+

generated_ids_trimmed = [

|

| 251 |

+

out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)

|

| 252 |

+

]

|

| 253 |

+

output_texts = processor.batch_decode(

|

| 254 |

+

generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False

|

| 255 |

+

)

|

| 256 |

+

print(output_texts)

|

| 257 |

+

```

|

| 258 |

+

</details>

|

| 259 |

+

|

| 260 |

+

### More Usage Tips

|

| 261 |

+

|

| 262 |

+

For input images, we support local files, base64, and URLs. For videos, we currently only support local files.

|

| 263 |

+

|

| 264 |

+

```python

|

| 265 |

+

# You can directly insert a local file path, a URL, or a base64-encoded image into the position where you want in the text.

|

| 266 |

+

## Local file path

|

| 267 |

+

messages = [

|

| 268 |

+

{

|

| 269 |

+

"role": "user",

|

| 270 |

+

"content": [

|

| 271 |

+

{"type": "image", "image": "file:///path/to/your/image.jpg"},

|

| 272 |

+

{"type": "text", "text": "Describe this image."},

|

| 273 |

+

],

|

| 274 |

+

}

|

| 275 |

+

]

|

| 276 |

+

## Image URL

|

| 277 |

+

messages = [

|

| 278 |

+

{

|

| 279 |

+

"role": "user",

|

| 280 |

+

"content": [

|

| 281 |

+

{"type": "image", "image": "http://path/to/your/image.jpg"},

|

| 282 |

+

{"type": "text", "text": "Describe this image."},

|

| 283 |

+

],

|

| 284 |

+

}

|

| 285 |

+

]

|

| 286 |

+

## Base64 encoded image

|

| 287 |

+

messages = [

|

| 288 |

+

{

|

| 289 |

+

"role": "user",

|

| 290 |

+

"content": [

|

| 291 |

+

{"type": "image", "image": "data:image;base64,/9j/..."},

|

| 292 |

+

{"type": "text", "text": "Describe this image."},

|

| 293 |

+

],

|

| 294 |

+

}

|

| 295 |

+

]

|

| 296 |

+

```

|

| 297 |

+

|

| 298 |

+

#### Image Resolution for performance boost

|

| 299 |

+

|

| 300 |

+

The model supports a wide range of resolution inputs. By default, it uses the native resolution for input, but higher resolutions can enhance performance at the cost of more computation. Users can set the minimum and maximum number of pixels to achieve an optimal configuration for their needs, such as a token count range of 256-1280, to balance speed and memory usage.

|

| 301 |

+

|

| 302 |

+

```python

|

| 303 |

+

min_pixels = 256 * 28 * 28

|

| 304 |

+

max_pixels = 1280 * 28 * 28

|

| 305 |

+

processor = AutoProcessor.from_pretrained(

|

| 306 |

+

"Kwai-Keye/Keye-VL-8B-Preview", min_pixels=min_pixels, max_pixels=max_pixels

|

| 307 |

+

)

|

| 308 |

+

```

|

| 309 |

+

|

| 310 |

+

Besides, We provide two methods for fine-grained control over the image size input to the model:

|

| 311 |

+

|

| 312 |

+

1. Define min_pixels and max_pixels: Images will be resized to maintain their aspect ratio within the range of min_pixels and max_pixels.

|

| 313 |

+

|

| 314 |

+

2. Specify exact dimensions: Directly set `resized_height` and `resized_width`. These values will be rounded to the nearest multiple of 28.

|

| 315 |

+

|

| 316 |

+

```python

|

| 317 |

+

# min_pixels and max_pixels

|

| 318 |

+

messages = [

|

| 319 |

+

{

|

| 320 |

+

"role": "user",

|

| 321 |

+

"content": [

|

| 322 |

+

{

|

| 323 |

+

"type": "image",

|

| 324 |

+

"image": "file:///path/to/your/image.jpg",

|

| 325 |

+

"resized_height": 280,

|

| 326 |

+

"resized_width": 420,

|

| 327 |

+

},

|

| 328 |

+

{"type": "text", "text": "Describe this image."},

|

| 329 |

+

],

|

| 330 |

+

}

|

| 331 |

+

]

|

| 332 |

+

# resized_height and resized_width

|

| 333 |

+

messages = [

|

| 334 |

+

{

|

| 335 |

+

"role": "user",

|

| 336 |

+

"content": [

|

| 337 |

+

{

|

| 338 |

+

"type": "image",

|

| 339 |

+

"image": "file:///path/to/your/image.jpg",

|

| 340 |

+

"min_pixels": 50176,

|

| 341 |

+

"max_pixels": 50176,

|

| 342 |

+

},

|

| 343 |

+

{"type": "text", "text": "Describe this image."},

|

| 344 |

+

],

|

| 345 |

+

}

|

| 346 |

+

]

|

| 347 |

+

```

|

| 348 |

+

|

| 349 |

+

## 👀 Architecture and Training Strategy

|

| 350 |

+

|

| 351 |

+

<div align="center">

|

| 352 |

+

<img src="asset/architecture.png" width="100%" alt="Kwai Keye Architecture">

|

| 353 |

+

<i> The Kwai Keye-VL model architecture is based on the Qwen3-8B language model and incorporates a vision encoder initialized from the open-source SigLIP. It supports native dynamic resolution, preserving the original aspect ratio of images by dividing each into a 14x14 patch sequence. A simple MLP layer then maps and merges the visual tokens. The model uses 3D RoPE for unified processing of text, image, and video information, establishing a one-to-one correspondence between position encoding and absolute time to ensure precise perception of temporal changes in video information.</i>

|

| 354 |

+

</div>

|

| 355 |

+

|

| 356 |

+

### 🌟 Pre-Train

|

| 357 |

+

|

| 358 |

+

<div align="center">

|

| 359 |

+

<img src="asset/pre-train.png" width="100%" alt="Kwai Keye Pretraining">

|

| 360 |

+

<i>The Kwai Keye pre-training pipeline, featuring a four-stage progressive strategy: Image-Text Matching, ViT-LLM Alignment, Multi-task Pre-training, and Annealing with model merging.</i>

|

| 361 |

+

</div>

|

| 362 |

+

<details>

|

| 363 |

+

<summary>More Details</summary>

|

| 364 |

+

|

| 365 |

+

#### Pre-training Data: Massive, High-Quality, Diverse

|

| 366 |

+

|

| 367 |

+

- **Diversity**: Includes image-text pairs, videos, pure text, etc., with tasks such as fine-grained description, OCR, Q&A, localization, and more.

|

| 368 |

+

- **High Quality**: Data is filtered using CLIP scores and VLM discriminators, and MinHASH is used for deduplication to prevent data leakage.

|

| 369 |

+

- **Self-Built Datasets**: High-quality internal datasets are specifically constructed, especially for detailed captions and Chinese OCR, to compensate for the shortcomings of open-source data.

|

| 370 |

+

|

| 371 |

+

#### Training Process: Four-Stage Progressive Optimization

|

| 372 |

+

Kwai Keye-VL adopts a four-stage progressive training strategy:

|

| 373 |

+

|

| 374 |

+

- **Stage 0 (Visual Pre-training)**: Continuously pre-trains the visual encoder to adapt to internal data distribution and support dynamic resolution.

|

| 375 |

+

- **Stage 1 (Cross-Modal Alignment)**: Freezes the backbone model and trains only the MLP to establish robust image-text alignment at low cost.

|

| 376 |

+

- **Stage 2 (Multi-Task Pre-training)**: Unlocks all parameters to comprehensively enhance the model's visual understanding capabilities.

|

| 377 |

+

- **Stage 3 (Annealing Training)**: Fine-tunes with high-quality data to further improve the model's fine-grained understanding capabilities.

|

| 378 |

+

|

| 379 |

+

Finally, Kwai Keye-VL explores isomorphic heterogeneous fusion technology by averaging parameters of annealed training models with different data ratios, reducing model bias while retaining multidimensional capabilities, thereby enhancing the model's robustness.

|

| 380 |

+

|

| 381 |

+

</details>

|

| 382 |

+

|

| 383 |

+

### 🌟 Post-Train

|

| 384 |

+

|

| 385 |

+

The post-training phase of Kwai Keye is meticulously designed into two phases with five stages, aiming to comprehensively enhance the model's performance, especially its reasoning ability in complex tasks. This is a key breakthrough for achieving advanced cognitive functions.

|

| 386 |

+

|

| 387 |

+

#### Stage I. No-Reasoning Training: Strengthening Basic Performance

|

| 388 |

+

|

| 389 |

+

<div align="center">

|

| 390 |

+

<img src="asset/post1.jpeg" width="100%" alt="Kwai Keye Post-Training">

|

| 391 |

+

<i>This phase focuses on the model's basic performance and stability in non-reasoning scenarios.</i>

|

| 392 |

+

</div>

|

| 393 |

+

|

| 394 |

+

<details>

|

| 395 |

+

<summary>More Details</summary>

|

| 396 |

+

|

| 397 |

+

- **Stage II.1: Supervised Fine-Tuning (SFT)**

|

| 398 |

+

- Data Composition: Includes 5 million multimodal data, built on a diverse task classification system (70,000 tasks) using the self-developed TaskGalaxy framework. High-difficulty data is selected by multimodal large models and manually annotated to ensure data quality and challenge.

|

| 399 |

+

|

| 400 |

+

- **Stage II.2: Mixed Preference Optimization (MPO)**

|

| 401 |

+

- Data Composition: Comprises open-source data and pure text preference data. Bad cases from the SFT model are used as quality prompts, and preference data is generated through rejection sampling using Qwen2.5VL 72B and SFT models, with manual scoring and ranking.

|

| 402 |

+

|

| 403 |

+

</details>

|

| 404 |

+

|

| 405 |

+

#### Stage II. Reasoning Training: Core Breakthrough for Complex Cognition

|

| 406 |

+

|

| 407 |

+

<div align="center">

|

| 408 |

+

<img src="asset/post2.jpeg" width="100%" alt="Kwai Keye Post-Training">

|

| 409 |

+

<br>

|

| 410 |

+

<i>This phase is the highlight and major contribution of the Kwai Keye training process. By introducing a mix-mode Chain of Thought (CoT) and multi-thinking mode reinforcement learning (RL) mechanisms, it significantly enhances the model's multimodal perception, reasoning, and think-with-image capabilities, enabling it to handle more complex, multi-step tasks.</i>

|

| 411 |

+

</div>

|

| 412 |

+

|

| 413 |

+

<details>

|

| 414 |

+

<summary>More Details</summary>

|

| 415 |

+

|

| 416 |

+

- **Step II.1: CoT Cold-Start**

|

| 417 |

+

- Objective: Cold-start the model's chain of thought reasoning ability, allowing it to mimic human step-by-step thinking.

|

| 418 |

+

- Data Composition: Combines non-reasoning data (330,000), reasoning data (230,000), auto-reasoning data (20,000), and agentic reasoning data (100,000) to teach the model different modes.

|

| 419 |

+

- Thinking Data: Focuses on high-difficulty perception and reasoning scenarios like math, science, charts, complex Chinese, and OCR, using multimodal large models for multiple sampling and evaluation to build over 70,000 complex thought chain data.

|

| 420 |

+

- Pure Text Data: Constructs a pure text long thought chain dataset from dimensions like code, math, science, instruction following, and general reasoning tasks.

|

| 421 |

+

- Auto-Think Data: Automatically selects "think" or "no_think" modes based on the complexity of prompts, enabling adaptive reasoning mode switching.

|

| 422 |

+

- Think with Image Data: 100,000 agent data entries, asking Qwen 2.5 VL-72B if image operations (e.g., cropping, rotating, enhancing contrast) are needed to simplify problems or improve answer quality, combined with external sandbox code execution to empower the model to solve problems by writing code to manipulate images or perform mathematical calculations.

|

| 423 |

+

- Training Strategy: Trains with a mix of four modes to achieve cold-start in different reasoning modes.

|

| 424 |

+

- **Step II.2: CoT-Mix RL**

|

| 425 |

+

- Objective: Deeply optimize the model's comprehensive abilities in multimodal perception, reasoning, pure text math, short video understanding, and agentic tasks through reinforcement learning based on the chain of thought, making the reasoning process more robust and efficient.

|

| 426 |

+

- Data Composition: Covers complex tasks from multimodal perception (complex text recognition, object counting), multimodal reasoning, high-difficulty math problems, short video content understanding to Think with Image.

|

| 427 |

+

- Training Strategy: Uses a mix-mode GRPO algorithm for reinforcement learning, where reward signals evaluate both the correctness of results and the consistency of the process and results, ensuring synchronized optimization of reasoning processes and final outcomes.

|

| 428 |

+

- **Step II.2: Iterative Alignment**

|

| 429 |

+

- Objective: Address common issues like repetitive crashes and poor logic in model-generated content, and enable spontaneous reasoning mode selection to enhance final performance and stability.

|

| 430 |

+

- Data Composition: Constructs preference data through Rejection Fine-Tuning (RFT), combining rule-based scoring (judging repetition, instruction following, etc.) and model scoring (cognitive scores provided by large models) to rank various model responses, building a high-quality preference dataset.

|

| 431 |

+

- Training Strategy: Multi-round iterative optimization with the constructed "good/bad" preference data pairs through the MPO algorithm. This aims to correct model generation flaws and ultimately enable it to intelligently and adaptively choose whether to activate deep reasoning modes based on problem complexity.

|

| 432 |

+

|

| 433 |

+

</details>

|

| 434 |

+

|

| 435 |

+

## 📈 Experimental Results

|

| 436 |

+

|

| 437 |

+

|

| 438 |

+

|

| 439 |

+

1. Keye-VL-8B establishes itself with powerful, state-of-the-art perceptual abilities that are competitive with leading models.

|

| 440 |

+

2. Keye-VL-8B demonstrates exceptional proficiency in video understanding. Across a comprehensive suite of authoritative public video benchmarks, including Video-MME, Video-MMMU, TempCompass, LongVideoBench, and MMVU, the model's performance significantly surpasses that of other top-tier models of a comparable size.

|

| 441 |

+

3. In evaluation sets that require complex logical reasoning and mathematical problem-solving, such as WeMath, MathVerse, and LogicVista, Kwai Keye-VL-8B displays a strong performance curve. This highlights its advanced capacity for logical deduction and solving complex quantitative problems.

|

| 442 |

+

|

| 443 |

+

## Requirements

|

| 444 |

+

The code of Kwai Keye-VL has been in the latest Hugging face transformers and we advise you to build from source with command:

|

| 445 |

+

```

|

| 446 |

+

pip install git+https://github.com/huggingface/transformers accelerate

|

| 447 |

+

```

|

| 448 |

+

or you might encounter the following error:

|

| 449 |

+

```

|

| 450 |

+

KeyError: 'Keye-VL'

|

| 451 |

+

```

|

| 452 |

+

|

| 453 |

+

## ✒️ Citation

|

| 454 |

+

|

| 455 |

+

If you find our work helpful for your research, please consider citing our work.

|

| 456 |

+

|

| 457 |

+

```bibtex

|

| 458 |

+

@misc{Keye-VL-8B-Preview,

|

| 459 |

+

title = {Keye-VL-8B-Preview},

|

| 460 |

+

url = {https://github.com/Kwai-Keye/Keye},

|

| 461 |

+

author = {Keye Team},

|

| 462 |

+

month = {June},

|

| 463 |

+

year = {2025}

|

| 464 |

+

}

|

| 465 |

+

```

|

| 466 |

+

|

| 467 |

+

## Acknowledgement

|

| 468 |

+

|

| 469 |

+

Kwai Keye-VL is developed based on the codebases of the following projects: [SigLIP](https://huggingface.co/google/siglip-so400m-patch14-384), [Qwen3](https://github.com/QwenLM/Qwen3), [Qwen2.5-VL](https://github.com/QwenLM/Qwen2.5-VL), [VLMEvalKit](https://github.com/open-compass/VLMEvalKit). We sincerely thank these projects for their outstanding work.

|

added_tokens.json

ADDED

|

@@ -0,0 +1,34 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"</think>": 151668,

|

| 3 |

+

"</tool_call>": 151658,

|

| 4 |

+

"</tool_response>": 151666,

|

| 5 |

+

"<think>": 151667,

|

| 6 |

+

"<tool_call>": 151657,

|

| 7 |

+

"<tool_response>": 151665,

|

| 8 |

+

"<|box_end|>": 151649,

|

| 9 |

+

"<|box_start|>": 151648,

|

| 10 |

+

"<|clip_time_end|>": 151674,

|

| 11 |

+

"<|clip_time_start|>": 151673,

|

| 12 |

+

"<|endoftext|>": 151643,

|

| 13 |

+

"<|file_sep|>": 151664,

|

| 14 |

+

"<|fim_middle|>": 151660,

|

| 15 |

+

"<|fim_pad|>": 151662,

|

| 16 |

+

"<|fim_prefix|>": 151659,

|

| 17 |

+

"<|fim_suffix|>": 151661,

|

| 18 |

+

"<|im_end|>": 151645,

|

| 19 |

+

"<|im_start|>": 151644,

|

| 20 |

+

"<|image_pad|>": 151655,

|

| 21 |

+

"<|object_ref_end|>": 151647,

|

| 22 |

+

"<|object_ref_start|>": 151646,

|

| 23 |

+

"<|ocr_text_end|>": 151672,

|

| 24 |

+

"<|ocr_text_start|>": 151671,

|

| 25 |

+

"<|point_end|>": 151670,

|

| 26 |

+

"<|point_start|>": 151669,

|

| 27 |

+

"<|quad_end|>": 151651,

|

| 28 |

+

"<|quad_start|>": 151650,

|

| 29 |

+

"<|repo_name|>": 151663,

|

| 30 |

+

"<|video_pad|>": 151656,

|

| 31 |

+

"<|vision_end|>": 151653,

|

| 32 |

+

"<|vision_pad|>": 151654,

|

| 33 |

+

"<|vision_start|>": 151652

|

| 34 |

+

}

|

asset/architecture.png

ADDED

|

Git LFS Details

|

asset/keye_logo_2.png

ADDED

|

Git LFS Details

|

asset/post1.jpeg

ADDED

|

asset/post2.jpeg

ADDED

|

Git LFS Details

|

asset/pre-train.png

ADDED

|

Git LFS Details

|

asset/teaser.png

ADDED

|

Git LFS Details

|

chat_template.json

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"chat_template": "{% set image_count = namespace(value=0) %}{% set video_count = namespace(value=0) %}{% for message in messages %}<|im_start|>{{ message['role'] }}\n{% if message['content'] is string %}{{ message['content'] }}<|im_end|>\n{% else %}{% for content in message['content'] %}{% if content['type'] == 'image' or 'image' in content or 'image_url' in content %}{% set image_count.value = image_count.value + 1 %}{% if add_vision_id %}Picture {{ image_count.value }}: {% endif %}<|vision_start|><|image_pad|><|vision_end|>{% elif content['type'] == 'video' or 'video' in content %}{% set video_count.value = video_count.value + 1 %}{% if add_vision_id %}Video {{ video_count.value }}: {% endif %}<|vision_start|><|video_pad|><|vision_end|>{% elif 'text' in content %}{{ content['text'] }}{% endif %}{% endfor %}<|im_end|>\n{% endif %}{% endfor %}{% if add_generation_prompt %}<|im_start|>assistant\n{% endif %}"

|

| 3 |

+

}

|

config.json

ADDED

|

@@ -0,0 +1,72 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_commit_hash": null,

|

| 3 |

+

"auto_map": {

|

| 4 |

+

"AutoConfig": "configuration_keye.KeyeConfig",

|

| 5 |

+

"AutoModel": "modeling_keye.KeyeForConditionalGeneration",

|

| 6 |

+

"AutoModelForCausalLM": "modeling_keye.KeyeForConditionalGeneration"

|

| 7 |

+

},

|

| 8 |

+

"architectures": [

|

| 9 |

+

"KeyeForConditionalGeneration"

|

| 10 |

+

],

|

| 11 |

+

"attention_bias": false,

|

| 12 |

+

"attention_dropout": 0.0,

|

| 13 |

+

"bos_token_id": 151643,

|

| 14 |

+

"eos_token_id": 151645,

|

| 15 |

+

"vision_start_token_id": 151652,

|

| 16 |

+

"vision_end_token_id": 151653,

|

| 17 |

+

"vision_token_id": 151654,

|

| 18 |

+

"image_token_id": 151655,

|

| 19 |

+

"video_token_id": 151656,

|

| 20 |

+

"head_dim": 128,

|

| 21 |

+

"hidden_act": "silu",

|

| 22 |

+

"hidden_size": 4096,

|

| 23 |

+

"initializer_range": 0.02,

|

| 24 |

+

"intermediate_size": 12288,

|

| 25 |

+

"max_position_embeddings": 40960,

|

| 26 |

+

"max_window_layers": 36,

|

| 27 |

+

"model_type": "Keye",

|

| 28 |

+

"num_attention_heads": 32,

|

| 29 |

+

"num_hidden_layers": 36,

|

| 30 |

+

"num_key_value_heads": 8,

|

| 31 |

+

"rms_norm_eps": 1e-06,

|

| 32 |

+

"rope_scaling": null,

|

| 33 |

+

"rope_theta": 1000000,

|

| 34 |

+

"sliding_window": null,

|

| 35 |

+

"tie_word_embeddings": false,

|

| 36 |

+

"torch_dtype": "bfloat16",

|

| 37 |

+

"transformers_version": "4.41.2",

|

| 38 |

+

"use_cache": true,

|

| 39 |

+

"use_sliding_window": false,

|

| 40 |

+

"initializer_factor": 1.0,

|

| 41 |

+

"vision_config": {

|

| 42 |

+

"_attn_implementation_autoset": true,

|

| 43 |

+

"add_cross_attention": false,

|

| 44 |

+

"architectures": [

|

| 45 |

+

"SiglipVisionModel"

|

| 46 |

+

],

|

| 47 |

+

"attention_dropout": 0.0,

|

| 48 |

+

"auto_map": {

|

| 49 |

+

"AutoConfig": "configuration_keye.KeyeVisionConfig",

|

| 50 |

+

"AutoModel": "modeling_keye.SiglipVisionModel"

|

| 51 |

+

},

|

| 52 |

+

"hidden_size": 1152,

|

| 53 |

+

"image_size": 384,

|

| 54 |

+

"intermediate_size": 4304,

|

| 55 |

+

"model_type": "siglip_vision_model",

|

| 56 |

+

"num_attention_heads": 16,

|

| 57 |

+

"num_hidden_layers": 27,

|

| 58 |

+

"patch_size": 14,

|

| 59 |

+

"spatial_merge_size": 2,

|

| 60 |

+

"tokens_per_second": 2,

|

| 61 |

+

"temporal_patch_size": 2

|

| 62 |

+

},

|

| 63 |

+

"rope_scaling": {

|

| 64 |

+

"type": "mrope",

|

| 65 |

+

"mrope_section": [

|

| 66 |

+

16,

|

| 67 |

+

24,

|

| 68 |

+

24

|

| 69 |

+

]

|

| 70 |

+

},

|

| 71 |

+

"vocab_size": 151936

|

| 72 |

+

}

|

configuration_keye.py

ADDED

|

@@ -0,0 +1,243 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# coding=utf-8

|

| 2 |

+

#

|

| 3 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 4 |

+

# you may not use this file except in compliance with the License.

|

| 5 |

+

# You may obtain a copy of the License at

|

| 6 |

+

#

|

| 7 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 8 |

+

#

|

| 9 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 10 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 11 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 12 |

+

# See the License for the specific language governing permissions and

|

| 13 |

+

# limitations under the License.

|

| 14 |

+

from transformers.configuration_utils import PretrainedConfig

|

| 15 |

+

from transformers.modeling_rope_utils import rope_config_validation

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

class KeyeVisionConfig(PretrainedConfig):

|

| 19 |

+

model_type = "Keye"

|

| 20 |

+

base_config_key = "vision_config"

|

| 21 |

+

|

| 22 |

+

def __init__(

|

| 23 |

+

self,

|

| 24 |

+

hidden_size=768,

|

| 25 |

+

intermediate_size=3072,

|

| 26 |

+

num_hidden_layers=12,

|

| 27 |

+

num_attention_heads=12,

|

| 28 |

+

num_channels=3,

|

| 29 |

+

image_size=224,

|

| 30 |

+

patch_size=14,

|

| 31 |

+

hidden_act="gelu_pytorch_tanh",

|

| 32 |

+

layer_norm_eps=1e-6,

|

| 33 |

+

attention_dropout=0.0,

|

| 34 |

+

spatial_merge_size=2,

|

| 35 |

+

temporal_patch_size=2,

|

| 36 |

+

tokens_per_second=2,

|

| 37 |

+

**kwargs,

|

| 38 |

+

):

|

| 39 |

+

super().__init__(**kwargs)

|

| 40 |

+

|

| 41 |

+

self.hidden_size = hidden_size

|

| 42 |

+

self.intermediate_size = intermediate_size

|

| 43 |

+

self.num_hidden_layers = num_hidden_layers

|

| 44 |

+

self.num_attention_heads = num_attention_heads

|

| 45 |

+

self.num_channels = num_channels

|

| 46 |

+

self.patch_size = patch_size

|

| 47 |

+

self.image_size = image_size

|

| 48 |

+

self.attention_dropout = attention_dropout

|

| 49 |

+

self.layer_norm_eps = layer_norm_eps

|

| 50 |

+

self.hidden_act = hidden_act

|

| 51 |

+

self.spatial_merge_size = spatial_merge_size

|

| 52 |

+

self.temporal_patch_size = temporal_patch_size

|

| 53 |

+

self.tokens_per_second = tokens_per_second

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

class KeyeConfig(PretrainedConfig):

|

| 57 |

+

r"""

|

| 58 |

+

This is the configuration class to store the configuration of a [`KeyeForConditionalGeneration`].

|

| 59 |

+

|

| 60 |

+

Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

|

| 61 |

+

documentation from [`PretrainedConfig`] for more information.

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

Args:

|

| 65 |

+

vocab_size (`int`, *optional*, defaults to 152064):

|

| 66 |

+

Vocabulary size of the Keye model. Defines the number of different tokens that can be represented by the

|

| 67 |

+

`inputs_ids` passed when calling [`KeyeForConditionalGeneration`]

|

| 68 |

+

hidden_size (`int`, *optional*, defaults to 8192):

|

| 69 |

+

Dimension of the hidden representations.

|

| 70 |

+

intermediate_size (`int`, *optional*, defaults to 29568):

|

| 71 |

+

Dimension of the MLP representations.

|

| 72 |

+

num_hidden_layers (`int`, *optional*, defaults to 80):

|

| 73 |

+

Number of hidden layers in the Transformer encoder.

|

| 74 |

+

num_attention_heads (`int`, *optional*, defaults to 64):

|

| 75 |

+

Number of attention heads for each attention layer in the Transformer encoder.

|

| 76 |

+

num_key_value_heads (`int`, *optional*, defaults to 8):

|

| 77 |

+

This is the number of key_value heads that should be used to implement Grouped Query Attention. If

|

| 78 |

+

`num_key_value_heads=num_attention_heads`, the model will use Multi Head Attention (MHA), if

|

| 79 |

+

`num_key_value_heads=1` the model will use Multi Query Attention (MQA) otherwise GQA is used. When

|

| 80 |

+

converting a multi-head checkpoint to a GQA checkpoint, each group key and value head should be constructed

|

| 81 |

+

by meanpooling all the original heads within that group. For more details checkout [this

|

| 82 |

+

paper](https://arxiv.org/pdf/2305.13245.pdf). If it is not specified, will default to `32`.

|

| 83 |

+

hidden_act (`str` or `function`, *optional*, defaults to `"silu"`):

|

| 84 |

+

The non-linear activation function (function or string) in the decoder.

|

| 85 |

+

max_position_embeddings (`int`, *optional*, defaults to 32768):

|

| 86 |

+

The maximum sequence length that this model might ever be used with.

|

| 87 |

+

initializer_range (`float`, *optional*, defaults to 0.02):

|

| 88 |

+

The standard deviation of the truncated_normal_initializer for initializing all weight matrices.

|

| 89 |

+

rms_norm_eps (`float`, *optional*, defaults to 1e-05):

|

| 90 |

+

The epsilon used by the rms normalization layers.

|

| 91 |

+

use_cache (`bool`, *optional*, defaults to `True`):

|

| 92 |

+

Whether or not the model should return the last key/values attentions (not used by all models). Only

|

| 93 |

+

relevant if `config.is_decoder=True`.

|

| 94 |

+

tie_word_embeddings (`bool`, *optional*, defaults to `False`):

|

| 95 |

+

Whether the model's input and output word embeddings should be tied.

|

| 96 |

+

rope_theta (`float`, *optional*, defaults to 1000000.0):

|

| 97 |

+

The base period of the RoPE embeddings.

|

| 98 |

+

use_sliding_window (`bool`, *optional*, defaults to `False`):

|

| 99 |

+

Whether to use sliding window attention.

|

| 100 |

+

sliding_window (`int`, *optional*, defaults to 4096):

|

| 101 |

+

Sliding window attention (SWA) window size. If not specified, will default to `4096`.

|

| 102 |

+

max_window_layers (`int`, *optional*, defaults to 80):

|

| 103 |

+

The number of layers that use SWA (Sliding Window Attention). The bottom layers use SWA while the top use full attention.

|

| 104 |

+

attention_dropout (`float`, *optional*, defaults to 0.0):

|

| 105 |

+

The dropout ratio for the attention probabilities.

|

| 106 |

+

vision_config (`Dict`, *optional*):

|

| 107 |

+

The config for the visual encoder initialization.

|

| 108 |

+

rope_scaling (`Dict`, *optional*):

|

| 109 |

+

Dictionary containing the scaling configuration for the RoPE embeddings. NOTE: if you apply new rope type

|

| 110 |

+

and you expect the model to work on longer `max_position_embeddings`, we recommend you to update this value

|

| 111 |

+

accordingly.

|

| 112 |

+

Expected contents:

|

| 113 |

+

`rope_type` (`str`):

|

| 114 |

+

The sub-variant of RoPE to use. Can be one of ['default', 'linear', 'dynamic', 'yarn', 'longrope',

|

| 115 |

+

'llama3'], with 'default' being the original RoPE implementation.

|

| 116 |

+

`factor` (`float`, *optional*):

|

| 117 |

+

Used with all rope types except 'default'. The scaling factor to apply to the RoPE embeddings. In

|

| 118 |

+

most scaling types, a `factor` of x will enable the model to handle sequences of length x *

|

| 119 |

+

original maximum pre-trained length.

|

| 120 |

+

`original_max_position_embeddings` (`int`, *optional*):

|

| 121 |

+

Used with 'dynamic', 'longrope' and 'llama3'. The original max position embeddings used during

|

| 122 |

+

pretraining.

|

| 123 |

+

`attention_factor` (`float`, *optional*):

|

| 124 |

+

Used with 'yarn' and 'longrope'. The scaling factor to be applied on the attention

|

| 125 |

+